A PyTorch implementation of Multi-digit Number Recognition from Street View Imagery using Deep Convolutional Neural Networks

If you're interested in C++ inference, go HERE.

| Steps | GPU | Batch Size | Learning Rate | Patience | Decay Step | Decay Rate | Training Speed (FPS) | Accuracy |
|---|---|---|---|---|---|---|---|---|
| 54000 | GTX 1080 Ti | 512 | 0.16 | 100 | 625 | 0.9 | ~1700 | 95.65% |
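The Learning Rate, Decay Step and Decay Rate columns describe a staircase exponential decay. The sketch below assumes the rate is multiplied by the decay rate once every `decay_steps` training steps. That is what PyTorch's `torch.optim.lr_scheduler.StepLR(optimizer, step_size=625, gamma=0.9)` produces when stepped once per iteration. `decayed_learning_rate` is a hypothetical helper, not part of this repository, and `train.py` may schedule the rate differently.

```python
def decayed_learning_rate(step, initial_lr=0.16, decay_steps=625, decay_rate=0.9):
    """Staircase exponential decay: initial_lr * decay_rate ** (step // decay_steps).

    Assumption: the defaults mirror the table above; the repository's own
    schedule may differ in detail.
    """
    if step < 0:
        raise ValueError("step must be non-negative")
    # Integer division keeps the rate constant within each block of decay_steps steps.
    return initial_lr * decay_rate ** (step // decay_steps)


if __name__ == "__main__":
    for step in (0, 624, 625, 1250, 54000):
        print(step, decayed_learning_rate(step))
```

By step 54000 the rate has been decayed 86 times, so it is several thousand times smaller than the initial 0.16.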

$ python infer.py -c=./logs/model-54000.pth ./images/test-75.png

length: 2

digits: 7 5 10 10 10

$ python infer.py -c=./logs/model-54000.pth ./images/test-190.png

length: 3

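digits: 1 9 0 10 10

In these outputs, slots beyond the predicted length are filled with 10, which means "no digit"; an actual zero is reported as 0.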
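To turn an output like the two above into a number, keep only the first `length` entries of `digits`. This is a minimal sketch, assuming 10 is only ever used as the "no digit" filler. `decode_prediction` is a hypothetical helper, not part of `infer.py`.

```python
NO_DIGIT = 10  # filler for slots beyond the predicted length (assumption)


def decode_prediction(length, digits):
    """Return the predicted number as a string, e.g. (3, [1, 9, 0, 10, 10]) -> "190"."""
    if not 0 <= length <= len(digits):
        raise ValueError("length must be between 0 and len(digits)")
    kept = digits[:length]
    # The length and digit heads predict independently, so flag inconsistent outputs.
    if any(d == NO_DIGIT for d in kept):
        raise ValueError("a 'no digit' slot appears within the predicted length")
    return "".join(str(d) for d in kept)


print(decode_prediction(2, [7, 5, 10, 10, 10]))  # 75
print(decode_prediction(3, [1, 9, 0, 10, 10]))   # 190
```

Raising on a filler inside the predicted length is a design choice; silently dropping it would hide a disagreement between the length and digit predictions.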

Requirements

- Python 3.6
- torch 1.0
- torchvision 0.2.1
- visdom: `$ pip install visdom`
- h5py (on Ubuntu): `$ sudo apt-get install libhdf5-dev`, then `$ sudo pip install h5py`
- protobuf: `$ pip install protobuf`
- lmdb: `$ pip install lmdb`
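Before going further, you can check that the packages above are importable. This is a minimal sketch using only the standard library. The mapping from package names to module names (notably `google.protobuf` for protobuf) is an assumption, and the check does not verify versions.

```python
import importlib.util

# pip package name -> module name to look up (assumed mapping)
REQUIRED_MODULES = {
    "torch": "torch",
    "torchvision": "torchvision",
    "visdom": "visdom",
    "h5py": "h5py",
    "protobuf": "google.protobuf",
    "lmdb": "lmdb",
}


def missing_requirements(modules=REQUIRED_MODULES):
    """Return the package names whose modules cannot be found by this interpreter."""
    missing = []
    for package, module in modules.items():
        try:
            found = importlib.util.find_spec(module) is not None
        except ModuleNotFoundError:
            # Raised when a parent package (e.g. "google") is itself missing.
            found = False
        if not found:
            missing.append(package)
    return missing


if __name__ == "__main__":
    missing = missing_requirements()
    print("missing:", ", ".join(missing) if missing else "none")
```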

Clone the source code

$ git clone https://github.com/potterhsu/SVHNClassifier-PyTorch

$ cd SVHNClassifier-PyTorch

Download SVHN Dataset format 1

Extract it into the data folder. Your folder structure should then look like this:

- SVHNClassifier
    - data
        - extra
            - 1.png
            - 2.png
            - ...
            - digitStruct.mat
        - test
            - 1.png
            - 2.png
            - ...
            - digitStruct.mat
        - train
            - 1.png
            - 2.png
            - ...
            - digitStruct.mat
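A quick way to confirm the extraction matches the layout above is to check each split folder. This is a minimal sketch using `pathlib`. `check_svhn_layout` is a hypothetical helper. It checks only that each folder exists, contains `digitStruct.mat` and contains at least one `.png`; it does not validate file contents.

```python
from pathlib import Path

SPLITS = ("train", "test", "extra")


def check_svhn_layout(data_dir="./data"):
    """Return a list of problems found in the extracted SVHN format 1 folders."""
    root = Path(data_dir)
    problems = []
    for split in SPLITS:
        split_dir = root / split
        if not split_dir.is_dir():
            problems.append(f"missing folder: {split_dir}")
            continue
        if not (split_dir / "digitStruct.mat").is_file():
            problems.append(f"missing file: {split_dir / 'digitStruct.mat'}")
        # any() stops at the first match, so large folders are not fully listed.
        if not any(split_dir.glob("*.png")):
            problems.append(f"no .png images in: {split_dir}")
    return problems


if __name__ == "__main__":
    issues = check_svhn_layout()
    print("\n".join(issues) if issues else "data folder looks complete")
```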

(Optional) Take a look at the original images with their bounding boxes

Open `draw_bbox.ipynb` in Jupyter.
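The bounding boxes in `digitStruct.mat` are per digit (left, top, width, height). The paper this project implements describes cropping each image around the union of its digit boxes, expanded by 30% in both x and y, before resizing the crop to 64x64 and taking a random 54x54 crop. The sketch below computes that box under the paper's description. `expanded_union_box` is a hypothetical helper, and the repository's own cropping may differ in detail.

```python
def expanded_union_box(boxes, expand=0.3):
    """Union of per-digit boxes, grown by `expand` (30% by default) around its center.

    Each box is (left, top, width, height). Returns (left, top, width, height)
    as floats; clipping to the image bounds is left to the caller.
    """
    if not boxes:
        raise ValueError("need at least one box")
    # Smallest rectangle that contains every digit box.
    left = min(b[0] for b in boxes)
    top = min(b[1] for b in boxes)
    right = max(b[0] + b[2] for b in boxes)
    bottom = max(b[1] + b[3] for b in boxes)
    width, height = right - left, bottom - top
    # Grow width and height by the same fraction, keeping the center fixed.
    new_width, new_height = width * (1 + expand), height * (1 + expand)
    center_x, center_y = left + width / 2, top + height / 2
    return (center_x - new_width / 2, center_y - new_height / 2, new_width, new_height)
```

The expanded box can extend past the image edges, which is why clipping is left to the caller.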

Convert to LMDB format

$ python convert_to_lmdb.py --data_dir ./data
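In SVHN format 1, each digit's label runs from 1 to 10, with 10 standing for the digit 0. The inference output near the top of this README instead reports 0 as 0 and uses 10 for empty slots, so labels have to be remapped at some point. This is a minimal sketch of one such encoding into a length plus five digit slots. `encode_labels` is hypothetical, and whether `convert_to_lmdb.py` uses exactly this scheme (including rejecting numbers longer than five digits) is an assumption.

```python
MAX_DIGITS = 5  # the model predicts up to five digits
NO_DIGIT = 10   # filler for unused slots (assumed to match the inference output)


def encode_labels(svhn_labels, max_digits=MAX_DIGITS):
    """Map raw SVHN format 1 labels (1-10, where 10 means digit 0) to (length, digits).

    Example: [1, 9, 10] (the number 190) -> (3, [1, 9, 0, 10, 10]).
    """
    if not 1 <= len(svhn_labels) <= max_digits:
        raise ValueError(f"expected 1 to {max_digits} digits, got {len(svhn_labels)}")
    digits = []
    for label in svhn_labels:
        label = int(label)  # digitStruct.mat stores labels as floats
        if not 1 <= label <= 10:
            raise ValueError(f"invalid SVHN label: {label}")
        digits.append(0 if label == 10 else label)
    # Pad the remaining slots with the "no digit" value.
    digits += [NO_DIGIT] * (max_digits - len(digits))
    return len(svhn_labels), digits
```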

(Optional) Test reading the LMDBs

Open `read_lmdb_sample.ipynb` in Jupyter.

Train

$ python train.py --data_dir ./data --logdir ./logs
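The Patience column in the results table is the usual early-stopping setting: training stops once validation accuracy has failed to improve for that many consecutive evaluations. This is a minimal sketch of that bookkeeping, assuming patience is counted in evaluations. `EarlyStopping` is a hypothetical class, not taken from `train.py`.

```python
class EarlyStopping:
    """Track the best validation accuracy and signal when patience runs out."""

    def __init__(self, patience=100):
        self.patience = patience
        self.remaining = patience
        self.best_accuracy = float("-inf")

    def update(self, accuracy):
        """Record one evaluation; return True if training should stop."""
        if accuracy > self.best_accuracy:
            self.best_accuracy = accuracy
            self.remaining = self.patience  # an improvement resets the counter
        else:
            self.remaining -= 1  # ties count as no improvement
        return self.remaining <= 0
```

In a training loop, call `update` after each evaluation, save a checkpoint whenever `best_accuracy` changes, and break out when it returns `True`.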

Retrain from an existing checkpoint if needed

$ python train.py --data_dir ./data --logdir ./logs_retrain --restore_checkpoint ./logs/model-100.pth
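Checkpoints are named `model-<step>.pth`, as in `model-100.pth` and `model-54000.pth` in this README. To restore the newest one without typing the step by hand, a small helper can pick it by step number. This is a minimal sketch; `latest_checkpoint` is hypothetical and not part of this repository.

```python
import re
from pathlib import Path

CHECKPOINT_PATTERN = re.compile(r"^model-(\d+)\.pth$")


def latest_checkpoint(logdir="./logs"):
    """Return the path of the model-<step>.pth file with the highest step, or None."""
    root = Path(logdir)
    if not root.is_dir():
        return None
    best_step, best_path = -1, None
    for path in root.glob("model-*.pth"):
        match = CHECKPOINT_PATTERN.match(path.name)
        # Compare steps as integers: as strings, "model-600" would sort after "model-54000".
        if match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    print(latest_checkpoint())
```

The returned path can then be passed to `--restore_checkpoint`.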

Evaluate

$ python eval.py --data_dir ./data ./logs/model-100.pth
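The accuracy in the results table is presumably sequence-level, as in the original paper: a sample counts as correct only if the predicted length and every digit slot match the label. This is a minimal sketch of that metric over `(length, digits)` pairs. `sequence_accuracy` is hypothetical and not taken from `eval.py`.

```python
def sequence_accuracy(predictions, labels):
    """Fraction of samples whose length and all digit slots match exactly.

    Both arguments are lists of (length, digits) pairs, e.g. (2, [7, 5, 10, 10, 10]).
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same number of samples")
    if not labels:
        raise ValueError("need at least one sample")
    # A single wrong slot makes the whole sample wrong.
    correct = sum(
        1
        for (pred_len, pred_digits), (true_len, true_digits) in zip(predictions, labels)
        if pred_len == true_len and list(pred_digits) == list(true_digits)
    )
    return correct / len(labels)
```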

Visualize

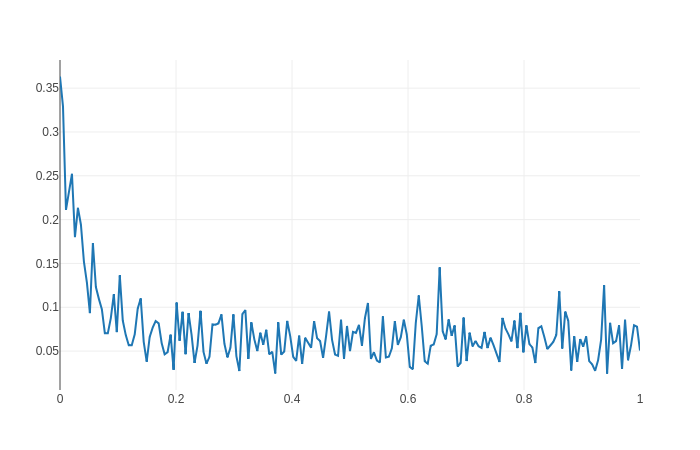

$ python -m visdom.server $ python visualize.py --logdir ./logs -

Infer

$ python infer.py --checkpoint=./logs/model-100.pth ./images/test1.png

Clean

$ rm -rf ./logs

or

$ rm -rf ./logs_retrain