This repository analyzes and experimentally evaluates the paper "A Watermark for Large Language Models". It replicates the paper's key experiments on a smaller dataset to gain insight into the watermarking approach.

The paper proposes a method to imperceptibly watermark text generated by large language models. The key idea is to bias the model to overuse a randomized "green list" of tokens, enabling statistical detection.
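The green-list idea can be sketched in a few lines. This is a minimal illustration, not the code in summarizer.py: the function names `green_list` and `watermarked_probs` are hypothetical, and seeding Python's `random.Random` with the previous token id stands in for the hash function used in the paper. Here γ (`gamma`) is the fraction of the vocabulary placed on the green list and δ (`delta`) is the bias added to the logits of green tokens.

```python
import math
import random


def green_list(prev_token, vocab_size, gamma):
    """Pick a pseudo-random 'green' subset of token ids, seeded by the previous token.

    Assumption: random.Random(prev_token) is a stand-in for the paper's hash.
    """
    rng = random.Random(prev_token)
    ids = list(range(vocab_size))
    rng.shuffle(ids)
    return set(ids[: int(gamma * vocab_size)])


def watermarked_probs(logits, prev_token, gamma, delta):
    """Add delta to the logits of green tokens, then apply a softmax."""
    green = green_list(prev_token, len(logits), gamma)
    biased = [x + delta if i in green else x for i, x in enumerate(logits)]
    peak = max(biased)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in biased]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: 10 tokens with equal logits, half of them green.
probs = watermarked_probs([0.0] * 10, prev_token=42, gamma=0.5, delta=2.0)
green = green_list(42, 10, 0.5)
print(sum(probs[i] for i in green))  # probability mass on green tokens
```

With equal logits and δ = 2, the green half of the vocabulary receives roughly 0.88 of the probability mass instead of 0.5, which is the overuse that the detector later looks for.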

This code examines the approach by:

- Summarizing text from the CNN dataset using a T5-Small model

- Applying the watermarking technique during summarization

- Measuring watermark strength (z-score) on generated summaries

- Evaluating loss in summarization quality (perplexity)

The repository contains the following files:

- summarizer.py - Summarization model with watermarking

- utils.py - Functions for perplexity and detection

- watermark_for_LLM.ipynb - Main experiments
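The watermark strength mentioned above is a z-score: a one-proportion z-test on the number of green tokens in a text, under the null hypothesis that each token is green with probability γ. The sketch below follows the statistic defined in the paper; the function name `z_score` is hypothetical and this is not necessarily how utils.py implements detection.

```python
import math


def z_score(green_count, total, gamma):
    """z-statistic for observing green_count green tokens among total scored tokens."""
    expected = gamma * total                       # mean under the null hypothesis
    std = math.sqrt(total * gamma * (1.0 - gamma)) # standard deviation under the null
    return (green_count - expected) / std


# Same green fraction (75%), two different lengths.
print(z_score(75, 100, 0.5))
print(z_score(150, 200, 0.5))
```

For a fixed green fraction above γ, the statistic grows with the square root of the number of tokens, so short summaries are harder to flag than long ones.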

The main experiments replicate analyses from the paper:

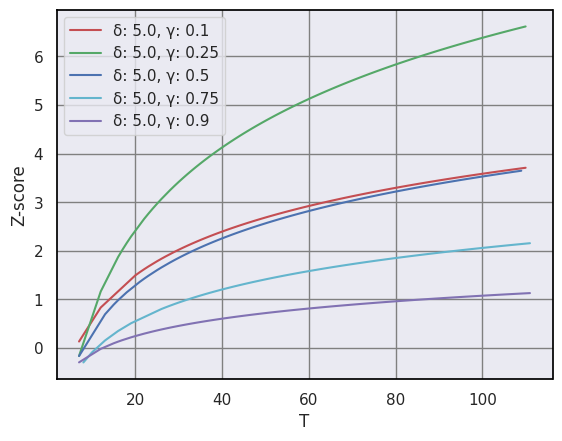

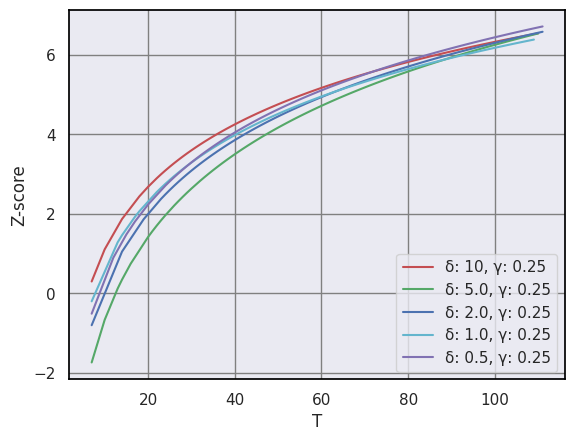

- Watermark strength vs sequence length

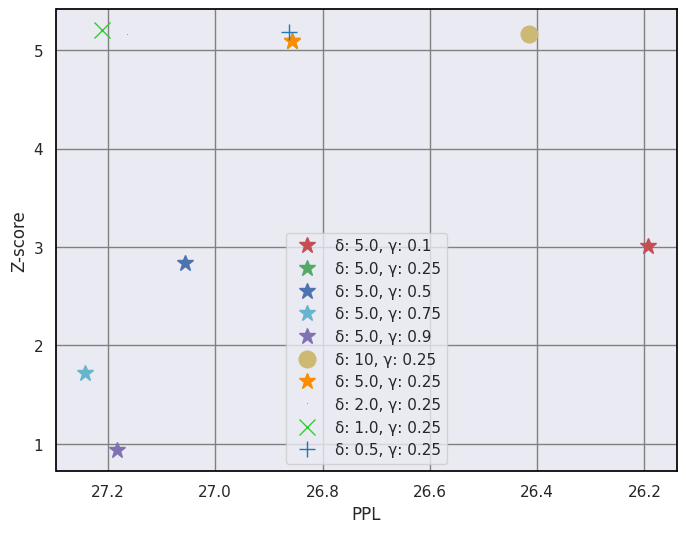

- Tradeoff between quality and detection

- The code evaluates how z-score and perplexity change for different watermark hyperparameters δ and γ.
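For reference, perplexity is assumed here to be the standard exponentiated mean negative log-likelihood of the generated tokens. The sketch below takes per-token natural-log probabilities as input; the function name `perplexity` is hypothetical, and which model supplies the log-probabilities in utils.py is not stated here.

```python
import math


def perplexity(token_log_probs):
    """exp(-mean(log p)) over a non-empty list of natural-log token probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# A text whose tokens each had probability 0.25 under the scoring model.
print(perplexity([math.log(0.25)] * 4))
```

Lower values mean the scoring model finds the text more predictable, which is why perplexity serves as the quality proxy when δ and γ are varied.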

The analyses validate core trends from the paper, providing insights into the approach:

- Longer sequences improve detectability

- Higher perplexity indicates weaker watermarks

- However, effectiveness depends on the dataset properties.

Install the dependencies with `pip install -r requirements.txt`, then run `watermark_for_LLM.ipynb`. This will output figures and metrics for the different experiments.

J. Kirchenbauer et al., "A Watermark for Large Language Models", https://arxiv.org/abs/2301.10226