
This template is designed to be used as a starting point for new Python projects with support for dockerized development and deployment. It focuses on standardization (static analysis, code quality) and automation (workflows).

- Use this template > Create a new repository: First, you can create a repository from this template in the GitHub UI by clicking the Use this template button near the top of the repository page.

- Use this template > Open in codespace: Second, you can try it out directly in a GitHub codespace (build time <= 1.30 min). Codespaces is a GitHub feature that allows you to develop directly in the cloud using a VSCode devcontainer. For more information, please refer to the GitHub Codespaces documentation.

- `git clone https://github.com/povanberg/python-poetry-template.git`: Third, you can clone this template from the command line.

Once the project is cloned or the codespace is ready, you can install the project dependencies. For development, you can use the dev extra to install the development dependencies.

`poetry install --with=dev`

or using pip:

`pip install -e .[dev]`

This template includes the following features:

- Poetry - Python dependency management and packaging made easy.

- Pre-commit - A framework for managing and maintaining multi-language pre-commit hooks. Supports black, pylint, flake8, isort, mypy and more.

- Containers - Build, ship, and run any application, anywhere.

- Devcontainer - Develop inside a Docker container while your code runs on the host.

- MkDocs - Fast, simple and beautiful documentation with Markdown.

- Github Pages - Publish your project documentation directly from your repository.

- Github Actions - Automate, customize, and execute your software development workflows right in your repository.

Prior to development, you should update the following values according to your project. Do not forget to update the LICENSE.md file.

| Parameter | Value | Reference path | Reference variable |
|---|---|---|---|
| AUTHOR_NAME | author | pyproject.toml | tool.poetry.authors |
| | | mkdocs.yml | site_author |
| PACKAGE_NAME | pypoetry_template | src | pypoetry_template |
| | | pyproject.toml | tool.poetry.name |
| | | pyproject.toml | tool.poetry.package.include |
| | | mkdocs.yml | repo_name |
| | | mkdocs.yml | plugins.mkdocstrings.watch |
| REPO_URL | https://github.com/povanberg/python-poetry-template | pyproject.toml | tool.poetry.repository |
| | | mkdocs.yml | repo_url |
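The substitutions listed above can be scripted. The following is a minimal sketch, not part of the template: the function names `apply_replacements` and `update_files` are hypothetical, and any new value you pass (for example `my_package`) is a placeholder. It is meant for the package name and repository URL only, because the default author value is too generic a string to replace blindly, and the `src/pypoetry_template` directory still has to be renamed separately.

```python
from pathlib import Path
from typing import Dict, List


def apply_replacements(text: str, replacements: Dict[str, str]) -> str:
    """Return `text` with every old value replaced by its new value."""
    for old, new in replacements.items():
        text = text.replace(old, new)
    return text


def update_files(
    root: Path, relative_paths: List[str], replacements: Dict[str, str]
) -> List[Path]:
    """Rewrite the listed files in place and return the ones that changed."""
    changed = []
    for relative_path in relative_paths:
        path = root / relative_path
        if not path.is_file():
            continue  # Skip files that are absent from this project.
        original = path.read_text(encoding="utf-8")
        updated = apply_replacements(original, replacements)
        if updated != original:
            path.write_text(updated, encoding="utf-8")
            changed.append(path)
    return changed
```

For example, calling `update_files(Path("."), ["pyproject.toml", "mkdocs.yml"], {"pypoetry_template": "my_package"})` from the repository root would rewrite both files. Review the resulting diff before committing, since plain string replacement does not understand TOML or YAML structure.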

This template is not intended to be a one-size-fits-all solution. It is designed to be a starting point for new dockerized Python projects. It is up to you to decide which features to keep and which to remove. The goal is to provide a starting point that is easy to use and easy to adapt to your needs.

Optional directories can be safely removed if you do not need them.

| Directory | Status | Description |
|---|---|---|
| .devcontainer | Optional | Devcontainer is a VSCode feature that allows you to develop inside a Docker container while your code runs on the host. For more information, please refer to the VSCode documentation. |
| .github | Optional | GitHub configuration, primarily for GitHub Actions, a feature that allows you to automate, customize, and execute your software development workflows right in your repository. For more information, please refer to the GitHub documentation. |
| assets | Optional | Assets are used to store additional files that are used within your project, e.g. configuration files, images, etc. |
| dockers | Required | This template is designed with support for multi-container applications. For this reason, the conventional root dockerfile is moved into a hierarchical structure. This hierarchical pattern allows the application to preserve consistency through docker composition paths, e.g. dockers/dev/dockerfile and dockers/webapp/dockerfile. |
| docs | Optional | The docs directory contains everything related to the documentation of your project. It contains the documentation styles, assets and pages, which are orchestrated by the mkdocs.yml configuration. |
| lib | Optional | Some projects require an external library to be imported. While it is usually bad practice to include library sources directly in your project, some specific use cases require embedding the library within the codebase. |
| notebooks | Optional | The notebooks directory contains all the Jupyter notebooks that are used to develop your project. |
| scripts | Optional | The scripts directory contains all the scripts that are used to develop your project. |
| src | Required | The src directory contains all the source code of your project. This src structure, while not mandatory, offers a clear separation between the source code and the rest of the project. |
| tests | Required | The tests directory contains all the tests of your project and should follow the same hierarchical structure as the src directory. |

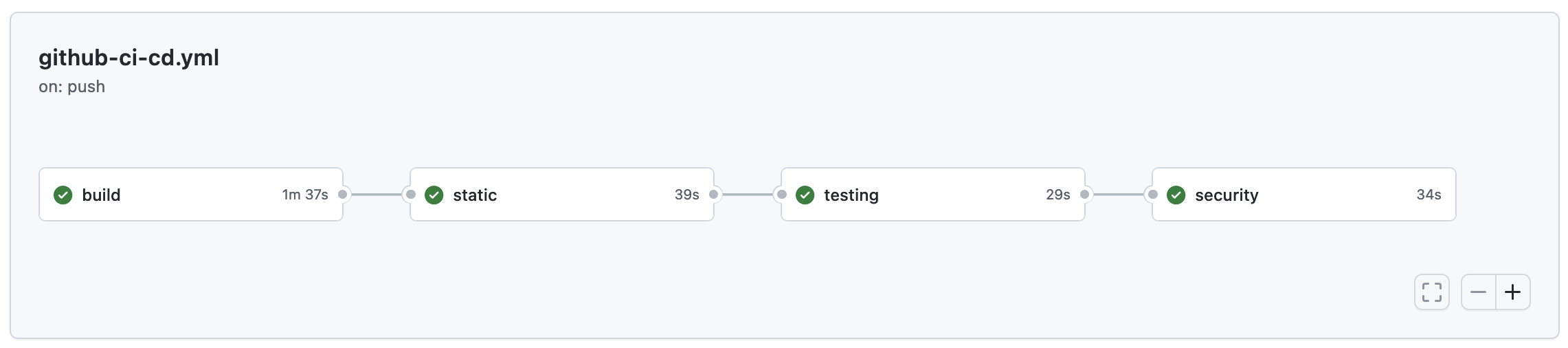

This template is designed to be used with GitHub Actions. It includes a set of workflows that are triggered on push and pull request events. The workflows are defined in the .github/workflows directory. The following workflows are included:


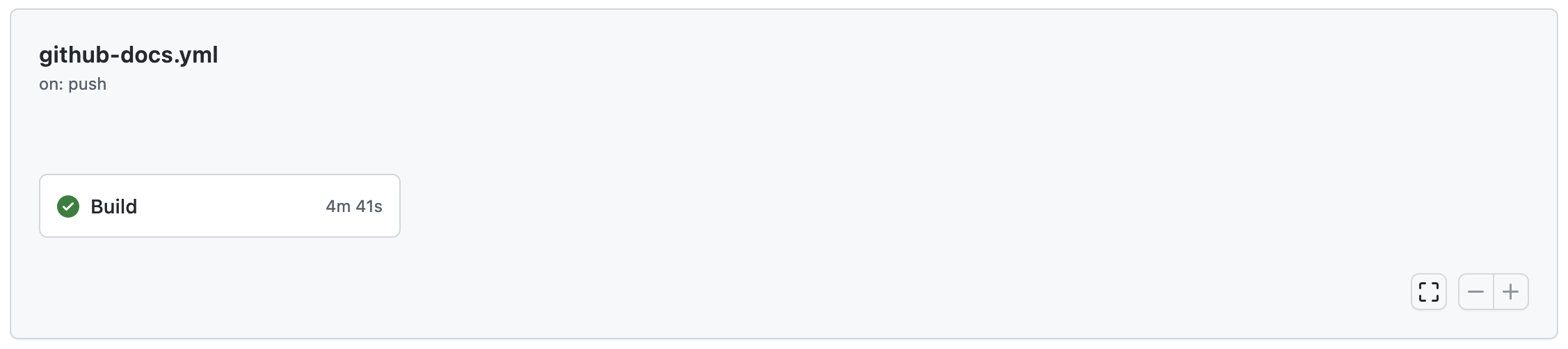

- Build and serve MkDocs.

MkDocs is a fast, simple and downright gorgeous static site generator that's geared towards building project documentation. Documentation source files are written in Markdown, and configured with a single YAML configuration file. For more information, please refer to mkdocs documentation.

This package includes the following plugins:

- mkdocs-material - Material for MkDocs is a theme for MkDocs, a static site generator geared towards building project documentation.

- mkdocstrings - MkDocs plugin to generate API documentation from Python docstrings.

- mkdocs-gen-files

- mkdocs-literate-nav

- mkdocs-section-index

And additional features:

- Automatic generation of reference pages from the source code (docs/gen_reference_pages.py), importing the associated docstring for each function.

- Support for LaTeX equations directly within the code docstring thanks to MathJax.
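As an illustration of the LaTeX support, a docstring might embed an equation as in the sketch below. The function `gaussian_pdf` is hypothetical and not part of the template. The `$$ ... $$` delimiters and the Google-style `Args`/`Returns` sections are assumptions about how MathJax and mkdocstrings are configured in mkdocs.yml, so adjust them to match your setup. The raw string prefix `r` keeps the backslashes of the LaTeX commands intact.

```python
import math


def gaussian_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    r"""Evaluate the Gaussian probability density function.

    $$
    f(x) = \frac{1}{\sigma \sqrt{2 \pi}}
           \exp\left(-\frac{(x - \mu)^2}{2 \sigma^2}\right)
    $$

    Args:
        x: Point at which the density is evaluated.
        mu: Mean of the distribution.
        sigma: Standard deviation of the distribution (must be positive).

    Returns:
        The density value at `x`.
    """
    coefficient = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    exponent = -((x - mu) ** 2) / (2.0 * sigma**2)
    return coefficient * math.exp(exponent)
```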

To serve the MkDocs documentation locally, run the following command:

`poetry run mkdocs serve`

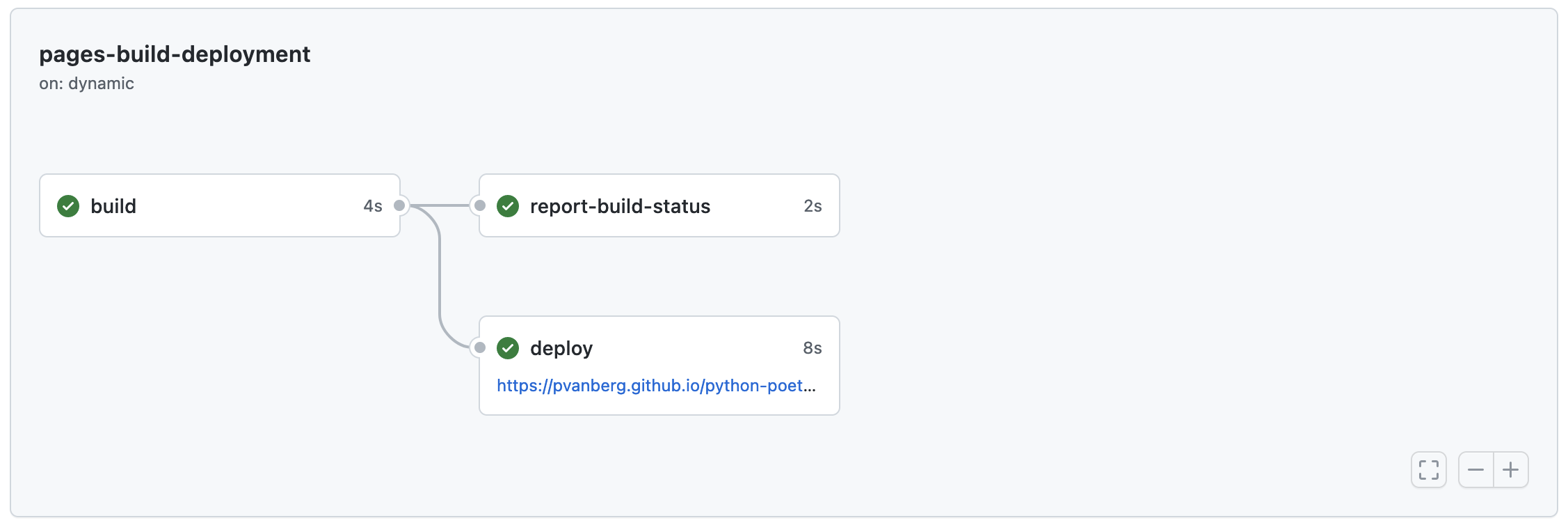

The MkDocs documentation is automatically generated and published to GitHub Pages using the github-docs.yml workflow. The workflow is triggered on each push to the main branch and on each release. It builds the documentation and pushes it to the `gh-pages` branch. A second workflow, focused on deployment, is then triggered on the `gh-pages` branch. Once completed, the documentation website is available at https://<USERNAME>.github.io/<REPOSITORY_NAME>/.

NOTE: Prior to deployment make sure you have activated the Github Pages feature (repository > settings > pages) in the repository settings.

Semver, or Semantic Versioning, is a widely accepted versioning system that provides clear and unambiguous communication about changes in software packages. It consists of a major version, minor version, and patch version, each with a specific meaning. For example, the first release of the template is tagged MAJOR.MINOR.PATCH = 0.1.0. For more information, please refer to semver specification.

Semver is used to tag the Docker image during the GitHub workflow. This task is automated through the github-ci-cd.yml workflow using metadata-action. The workflow is triggered on each push to the main branch and on each release.
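To make the tagging concrete, the sketch below derives image tags from a Semver release tag. It is an illustration in Python, not the code of metadata-action: the function name `image_tags` and the choice of emitting a full-version tag plus a MAJOR.MINOR tag are assumptions, and the tags actually produced depend on the configuration in github-ci-cd.yml.

```python
import re
from typing import List

# Simplified MAJOR.MINOR.PATCH pattern with an optional leading "v";
# pre-release and build metadata are deliberately left out of this sketch.
SEMVER_PATTERN = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)$")


def image_tags(git_tag: str) -> List[str]:
    """Return illustrative Docker tags for a release tag such as 'v0.1.0'."""
    match = SEMVER_PATTERN.match(git_tag)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {git_tag!r}")
    major, minor, patch = match.groups()
    # Full version first, then the floating MAJOR.MINOR tag.
    return [f"{major}.{minor}.{patch}", f"{major}.{minor}"]
```

With this scheme, a release tagged `v0.1.0` would yield the image tags `0.1.0` and `0.1`, so users can pin either an exact version or the latest patch of a minor series.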

Commitizen is a command-line tool that helps developers create standardized commit messages (conventional commits) whose types map onto Semver increments. Use Commitizen to create your commit messages by running `poetry run cz commit` instead of `git commit`. This will prompt you to fill in the commit fields following the conventional format, and the commit message will be formatted automatically. Running `poetry run cz bump` then bumps the version of your package according to the level of change (patch, minor, major) found in the commits, and may also update the CHANGELOG.md file.

Note: The version bump and the CHANGELOG.md file update are manually triggered by the developer, but they can also be automated by a GitHub workflow; see commitizen-action.
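The link between commit messages and version increments can be sketched as follows. This is a simplified reading of the Conventional Commits rules (`fix` gives a patch, `feat` gives a minor, and a `!` marker or a `BREAKING CHANGE:` footer gives a major increment), written for illustration only. The function names `increment_for` and `bump` are hypothetical, and Commitizen's actual rules are configurable and may differ.

```python
import re
from typing import Iterable, Optional

# Header of a conventional commit: type, optional scope, optional "!", subject.
HEADER_PATTERN = re.compile(r"^(?P<type>\w+)(\([^)]*\))?(?P<breaking>!)?: .+")


def increment_for(message: str) -> Optional[str]:
    """Return 'major', 'minor', 'patch', or None for a single commit message."""
    lines = message.splitlines()
    header = HEADER_PATTERN.match(lines[0]) if lines else None
    if header is None:
        return None  # Not a conventional commit; it does not affect the version.
    if header.group("breaking") or "BREAKING CHANGE:" in message:
        return "major"
    if header.group("type") == "feat":
        return "minor"
    if header.group("type") == "fix":
        return "patch"
    return None


def bump(version: str, messages: Iterable[str]) -> str:
    """Apply the highest increment found in `messages` to `version`."""
    major, minor, patch = (int(part) for part in version.split("."))
    increments = {increment_for(message) for message in messages}
    if "major" in increments:
        return f"{major + 1}.0.0"
    if "minor" in increments:
        return f"{major}.{minor + 1}.0"
    if "patch" in increments:
        return f"{major}.{minor}.{patch + 1}"
    return version
```

Under these simplified rules, a history containing one `fix:` commit and one `feat:` commit on top of version 0.1.0 results in 0.2.0, because the highest increment wins.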

Each time you create a new release on GitHub, you can trigger a workflow to publish your image. We use the Docker login-action and build-push-action actions to build the Docker image and, if the build succeeds, push the built image to Docker Hub. (see docs.github.com/publishing-docker-images)

-

Go to https://hub.docker.com and login or register.

-

Optional Once logged in, create a new repository named

PROJECT_NAME(private or public). Warning If you skip this step, the image will be pushed using the default access. For free account, the default access is limited to 1 private repository. -

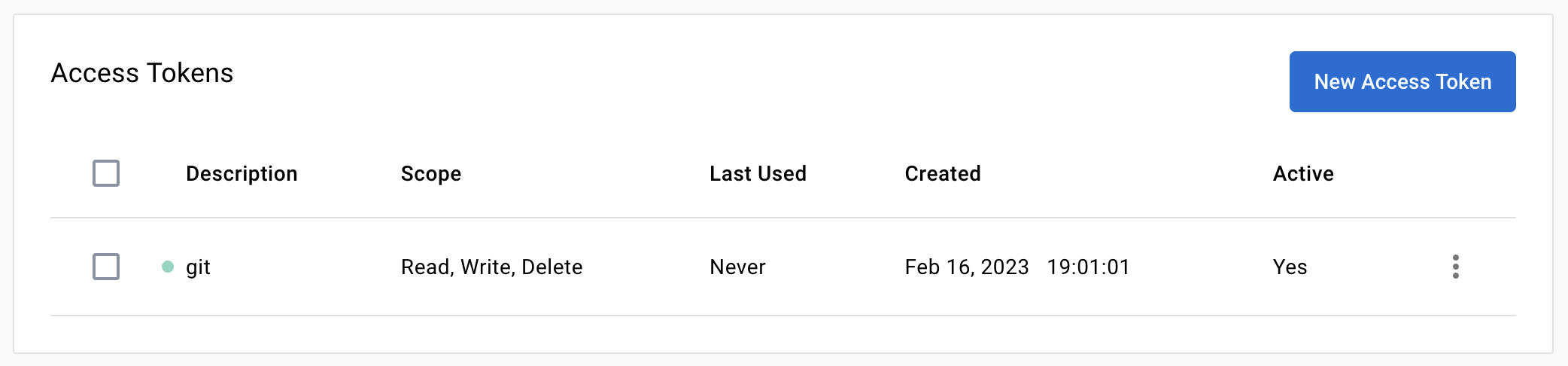

Go to the account Settings > Security and create a new access token.

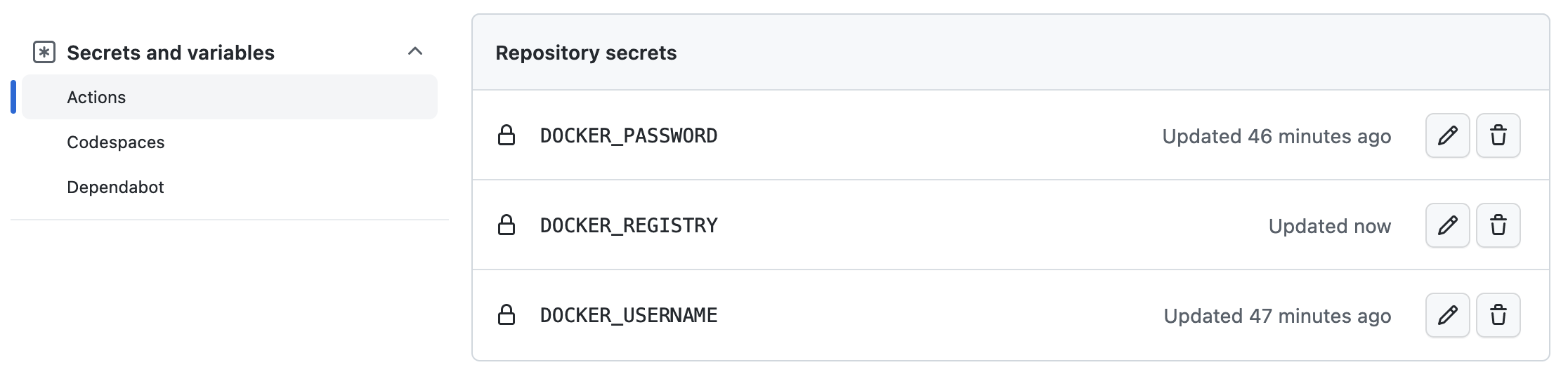

- Go back to github and go to your Repository then Settings > Secrets and Variables > Actions > Action secrets. Add your docker registry (= docker.io), username and password (step 3 created access token) as secrets (

DOCKER_REGISTRYDOCKER_USERNAMEandDOCKER_PASSWORD). If

- You are done. The workflow will automatically build and push the image to docker hub. Finally once your workflow run is completed, you can verify that your image is available on you docker hub (example for this template).

- Retrieve the Docker registry URL and credentials.

- Update the GitHub secrets, as in step (4) of the Docker Hub configuration.

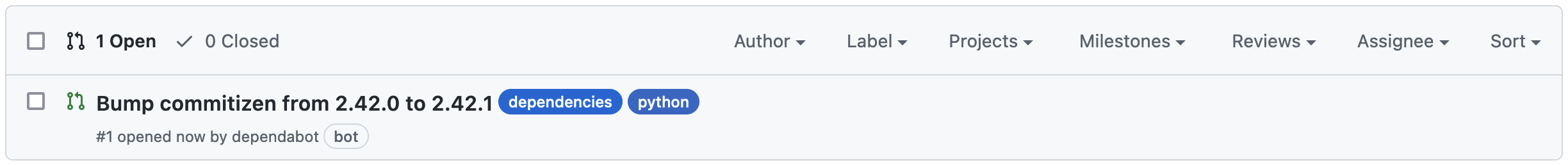

Dependabot is an automated dependency management tool that helps keep your GitHub repositories secure and up-to-date. It monitors your repository for outdated or vulnerable dependencies, and automatically creates pull requests to update them. With Dependabot, you can easily stay on top of security vulnerabilities and new versions of dependencies without the need for manual intervention (cf. docs.github.com/dependabot).

`python = ">=3.8.1,<=3.11"`

`wheel = "^0.38.1"`

`notebook = "^6.5.2"`

1. Go to your repository > Insights > Dependency graph > Dependabot and verify that the Dependabot configuration (.github/dependabot.yml) is correctly recognized and loaded.

2. Then enable Dependabot alerts: go to your repository > Settings > Code security & analysis > Dependabot alerts and enable the option.

3. You are done. Dependabot will automatically create pull requests to update your dependencies (PRs usually appear within a few minutes). Note that if all your dependencies are up-to-date, no PR will be created.