This is the official PyTorch implementation of Object Pursuit, proposed in the paper Object Pursuit: Building a Space of Objects via Discriminative Weight Generation.

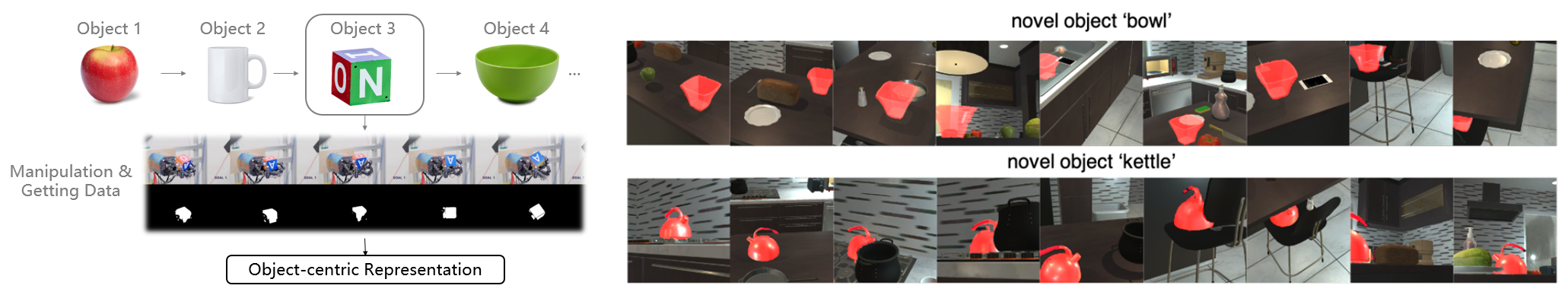

Inspired by human infants and robots who learn object knowledge through continual interaction, we propose Object Pursuit, which continuously learns object-centric representations using training data collected from interactions with individual objects. Throughout learning, objects are streamed one by one in random order with unknown identities, and are associated with latent codes that can synthesize discriminative weights for each object through a convolutional hypernetwork.
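The idea of synthesizing per-object weights from a latent code can be illustrated with a toy example. The sketch below is not the convolutional hypernetwork implemented in this repo: it assumes a purely linear hypernetwork that outputs the weights of a 1x1 convolution (a per-pixel linear classifier), and the names `LinearHypernet` and `segment` are hypothetical.

```python
import random


class LinearHypernet:
    """Toy hypernetwork: maps a latent code z to the weights of a 1x1 conv.

    Hypothetical illustration only; the repo uses a convolutional hypernetwork.
    """

    def __init__(self, z_dim, in_channels, seed=0):
        rng = random.Random(seed)
        self.n_out = in_channels + 1  # one weight per channel plus a bias
        # Shared parameters: the same matrix is reused for every object.
        self.W = [[rng.uniform(-1.0, 1.0) for _ in range(z_dim)]
                  for _ in range(self.n_out)]

    def generate(self, z):
        """Return (kernel, bias) synthesized from an object's latent code."""
        out = [sum(w * zi for w, zi in zip(row, z)) for row in self.W]
        return out[:-1], out[-1]


def segment(features, kernel, bias):
    """Apply the generated 1x1 conv to an H x W x C feature map -> binary mask."""
    return [[int(sum(k * f for k, f in zip(kernel, pixel)) + bias > 0)
             for pixel in row]
            for row in features]


hypernet = LinearHypernet(z_dim=4, in_channels=3)
features = [[[0.2, 0.5, 0.1], [0.9, 0.1, 0.4]],
            [[0.3, 0.3, 0.3], [0.0, 0.8, 0.6]]]  # 2 x 2 x 3 feature map

# Two objects share the hypernetwork but own different latent codes.
z_a = [1.0, 0.0, 0.0, 0.0]
z_b = [0.0, 1.0, 0.0, 0.0]
mask_a = segment(features, *hypernet.generate(z_a))
mask_b = segment(features, *hypernet.generate(z_b))
```

In this toy setting, only the latent code differs between objects; the hypernetwork parameters are shared, which is what lets a low-dimensional code stand in for a full set of segmentation weights.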

Our code implements the following three parts proposed in our paper:

- Object Pursuit pipeline, learning object-centric representations continually with re-identification, a low-dimensional check, and a forgetting prevention strategy. Code resides in the `./object_pursuit` folder.

- Joint Training/Pretraining, learning objects together and building a low-dimensional object representation space at once. Pretraining is strongly recommended before Object Pursuit. Code resides in the `./pretrain` folder.

- One-shot Learning Application, evaluating how much the learned object base representations help when learning a new object by one-shot and few-shot learning. Code resides in the `./application/oneshot` folder.

You can also find the implementation of the hypernetwork, the segmentation blocks, and the latent representation in `./model/coeffnet`, which defines the network structure.

Clone the repo:

    git clone https://github.com/pptrick/Object-Pursuit
    cd Object-Pursuit

Requirements

- Python 3.7+ (numpy, Pillow, tqdm, matplotlib)

- PyTorch >= 1.6 (torch, torchvision)

- A conda virtual environment is recommended but optional

Dataset (optional)

- iThor synthetic dataset: we collect synthetic data within the iThor environment, simulating a robot agent exploring the scene. The data collection code can be found in `data_collector`. Please organize your data into the following structure:

      <synthetic data root>
      │
      └─── <object 1>
      |    │
      |    └─── imgs
      |    │
      |    └─── masks
      └─── <object 2>
      └─── <object 3>
      └─── ...

  Put RGB images in `imgs` and binary masks in `masks`. The names of corresponding images in `imgs` and `masks` should be the same.

- DAVIS 2016 dataset: a video object segmentation dataset of real scenes. Download 'TrainVal' from DAVIS 2016. The DAVIS dataset has the following structure:

      <DAVIS root>
      │
      └─── Annotations
      └─── JPEGImages
      └─── ImageSets
      └─── README.md

- CO3D dataset: the CO3D dataset contains 18,619 videos of 50 MS-COCO categories, with segmentation annotations. After downloading, please organize the data into the following structure:

      <CO3D root>
      │
      └─── <category 1>
      |    └─── <object 1>
      |         └─── images
      |         └─── masks
      |         └─── ... (depth masks, depths, pointcloud.ply)
      |    └─── <object 2>
      |    └─── ...
      └─── <category 2>
      └─── <category 3>
      └─── ...

- YouTube-VOS dataset

- For a custom dataset, we suggest organizing your data in the same way as the iThor synthetic dataset.
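Before training on a synthetic or custom dataset, it can help to verify the folder layout described above. The following is a minimal sketch, not part of the repo: the function name `check_dataset` is hypothetical, and it assumes that an image and its mask match when their file names are equal up to the extension.

```python
from pathlib import Path


def check_dataset(root):
    """Return a dict mapping object name -> list of layout problems.

    Assumes the layout <root>/<object>/imgs and <root>/<object>/masks.
    File names are compared without extensions (an assumption of this sketch).
    """
    problems = {}
    for obj_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        issues = []
        imgs_dir, masks_dir = obj_dir / "imgs", obj_dir / "masks"
        for d in (imgs_dir, masks_dir):
            if not d.is_dir():
                issues.append(f"missing folder: {d.name}")
        if not issues:
            imgs = {f.stem for f in imgs_dir.iterdir() if f.is_file()}
            masks = {f.stem for f in masks_dir.iterdir() if f.is_file()}
            for name in sorted(imgs - masks):
                issues.append(f"image without mask: {name}")
            for name in sorted(masks - imgs):
                issues.append(f"mask without image: {name}")
        if issues:
            problems[obj_dir.name] = issues
    return problems
```

An empty result means every object folder contains both `imgs` and `masks` with matching names.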

To run the object pursuit learning process from scratch:

    # synthetic dataset
    python main.py --dataset iThor --data <synthetic data root> --zs None --bases None --backbone None --hypernet None --use_backbone

    # DAVIS dataset
    python main.py --dataset DAVIS --data <DAVIS root> --zs None --bases None --backbone None --hypernet None --use_backbone

To run object pursuit based on a pretrained hypernet and backbone:

    # DAVIS dataset
    python main.py --dataset DAVIS --data <DAVIS root> --zs None --bases None --backbone <path to backbone model .pth> --hypernet <path to hypernet model .pth> --use_backbone

- To generate the whole primary network's weights (backbone included) with the hypernet, remove the `--use_backbone` flag. This changes the hypernet's structure, so keep the same setting in the other stages (evaluation, pretraining, one-shot learning).

- To set the dimension of the latent code z, use `--z_dim <dim>` (default: 100).

- To set the quality measure accuracy threshold $\tau$, use `--thres <threshold>` (default: 0.7).

- Use `--out <dir>` to set the output directory. All checkpoints and log files are stored in this directory.
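When launching many runs, the flags above can be assembled programmatically. The helper below is a hypothetical convenience wrapper, not part of the repo; it only uses flags documented in this README and passes the literal string `None` for unset checkpoints, as the commands above do.

```python
import shlex


def build_pursuit_command(dataset, data_root, backbone=None, hypernet=None,
                          z_dim=100, thres=0.7, out=None, use_backbone=True):
    """Assemble the argv list for main.py from the flags documented above.

    The helper itself is hypothetical; only the flags come from this README.
    """
    cmd = ["python", "main.py",
           "--dataset", dataset,
           "--data", str(data_root),
           "--zs", "None",
           "--bases", "None",
           # The README passes the literal string "None" when training from scratch.
           "--backbone", str(backbone) if backbone else "None",
           "--hypernet", str(hypernet) if hypernet else "None",
           "--z_dim", str(z_dim),
           "--thres", str(thres)]
    if out is not None:
        cmd += ["--out", str(out)]
    if use_backbone:
        cmd.append("--use_backbone")
    return cmd


cmd = build_pursuit_command("DAVIS", "/data/DAVIS", out="./runs/davis")
# Print a shell-safe version of the command for inspection.
print(" ".join(shlex.quote(arg) for arg in cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains `main.py`. The paths `/data/DAVIS` and `./runs/davis` are placeholders.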

To evaluate object pursuit, use `--eval`:

    # DAVIS dataset
    python main.py --dataset DAVIS --data <DAVIS root> --out <out dir> --use_backbone --eval

To run joint pretraining:

    # DAVIS dataset
    python joint_pretrain.py --dataset DAVIS --data <DAVIS root> --model Multinet --use_backbone --save_ckpt

    # Multiple datasets
    python joint_pretrain.py --dataset iThor DAVIS --data <synthetic data root> <DAVIS root> --model Multinet --use_backbone --save_ckpt

To run and evaluate one-shot learning:

    # DAVIS dataset
    python one-shot.py --model coeffnet --dataset DAVIS --n 1 --imgs <path to imgs> --masks <path to masks> --bases <path to bases> --backbone <path to backbone> --hypernet <path to hypernet> --use_backbone

- To view visualization results, use the `--save_viz` flag.

Quick Demo

Here's a sample model file that contains the parameters of the hypernet, the backbone, and the bases. Download it and save it as `<model dir>/multinet_vos_davis.pth`. Assuming you have downloaded the DAVIS 2016 dataset to `<DAVIS root>`, you can run one-shot learning:

    python one-shot.py --model coeffnet --dataset DAVIS --n 1 --z_dim 100 --imgs <DAVIS root>/JPEGImages/480p/<object> --masks <DAVIS root>/Annotations/480p/<object> --bases <model dir>/multinet_vos_davis.pth --backbone <model dir>/multinet_vos_davis.pth --hypernet <model dir>/multinet_vos_davis.pth --use_backbone

Replace the content within each `<>` with your own values.
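To fill in the `--imgs` and `--masks` arguments without typing paths by hand, a small helper can derive them from the DAVIS root. This is a sketch under the assumption that the dataset follows the `JPEGImages/480p/<object>` and `Annotations/480p/<object>` layout used in the command above; the function names are hypothetical.

```python
from pathlib import Path


def davis_sequence_paths(davis_root, sequence, resolution="480p"):
    """Return (imgs_dir, masks_dir) for one DAVIS 2016 sequence."""
    root = Path(davis_root)
    imgs = root / "JPEGImages" / resolution / sequence
    masks = root / "Annotations" / resolution / sequence
    return imgs, masks


def list_sequences(davis_root, resolution="480p"):
    """List sequences that have both an image folder and a mask folder."""
    root = Path(davis_root)
    img_root = root / "JPEGImages" / resolution
    if not img_root.is_dir():
        return []
    return sorted(
        d.name for d in img_root.iterdir()
        if d.is_dir() and (root / "Annotations" / resolution / d.name).is_dir()
    )
```

Calling `list_sequences` on your DAVIS root shows which names are valid for `<object>`, and `davis_sequence_paths` returns the two directories to pass to `one-shot.py`.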

If you find our work useful to your research, please consider citing:

    @article{pan2021object,
      title={Object Pursuit: Building a Space of Objects via Discriminative Weight Generation},
      author={Pan, Chuanyu and Yang, Yanchao and Mo, Kaichun and Duan, Yueqi and Guibas, Leonidas},
      journal={arXiv preprint arXiv:2112.07954},
      year={2021}
    }

This code and model are available for scientific research purposes as defined in the LICENSE file. By downloading and using the code and model, you agree to the terms in the LICENSE.