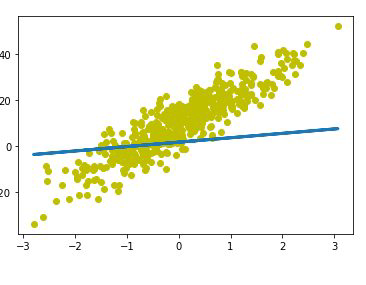

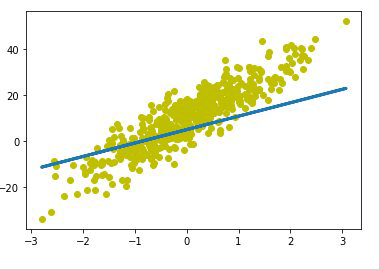

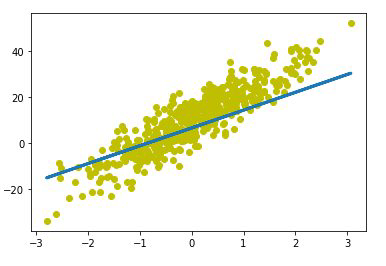

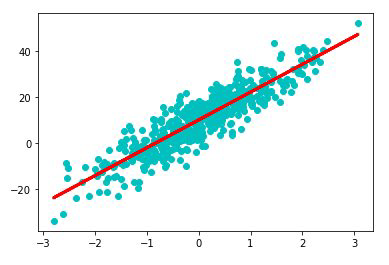

In this repository, I have implemented the gradient descent algorithm from scratch in Python and used it to fit a straight line y = mx + b to data (simple linear regression).
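For reference, here are the quantities the `gradient_descent` function computes. It fits $\hat{y}_i = m x_i + b$ to $n$ points by minimising a halved mean squared error cost. The symbols $J$ (cost) and $\alpha$ (the `learning_rate` variable) are notation introduced here for readability:

$$
J(m, b) = \frac{1}{2n} \sum_{i=1}^{n} (\hat{y}_i - y_i)^2
$$

$$
\frac{\partial J}{\partial m} = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i)\, x_i,
\qquad
\frac{\partial J}{\partial b} = \frac{1}{n} \sum_{i=1}^{n} (\hat{y}_i - y_i)
$$

$$
m \leftarrow m - \alpha \frac{\partial J}{\partial m},
\qquad
b \leftarrow b - \alpha \frac{\partial J}{\partial b}
$$

The factor $\tfrac{1}{2}$ in $J$ cancels the 2 produced by differentiating the square, which is why the gradients carry $\tfrac{1}{n}$ rather than $\tfrac{2}{n}$.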

```python
import numpy as np


def gradient_descent(X, y):
    # Accept lists or arrays; the element-wise maths below needs NumPy arrays
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)

    m = b = 0.0  # Start with slope and intercept at zero

    learning_rate = 0.01  # Size of each step taken down the slope of the cost function
    epochs = 1000  # Number of iterations over which the cost function is minimised
    n = len(X)

    for epoch in range(epochs):
        y_predicted = m * X + b  # Predictions of the current line for every feature value

        cost = (1 / (2 * n)) * np.sum((y_predicted - y) ** 2)  # Halved mean squared error cost

        derivative_wrt_m = (1 / n) * np.sum((y_predicted - y) * X)  # Slope of the cost function along m
        derivative_wrt_b = (1 / n) * np.sum(y_predicted - y)  # Slope of the cost function along b

        m = m - learning_rate * derivative_wrt_m  # Step m against its gradient to reduce the cost
        b = b - learning_rate * derivative_wrt_b  # Step b against its gradient to reduce the cost

        print("m: {}, b: {}, Epoch: {}, cost: {}".format(m, b, epoch, cost))

    return m, b, cost
```
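One way to check that the updates converge is to run them on data whose answer is known and compare against NumPy's closed-form least-squares fit, `np.polyfit(X, y, 1)`, which returns `[slope, intercept]`. The sketch below is a minimal variant under stated assumptions: `fit_line_gd` is a hypothetical helper (not part of this repository) that applies the same update rules without per-epoch printing, and its `learning_rate` and `epochs` keyword arguments plus the synthetic data on y = 3x + 2 are chosen only for illustration.

```python
import numpy as np


def fit_line_gd(X, y, learning_rate=0.1, epochs=10_000):
    """Hypothetical helper: same updates as gradient_descent, without printing."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(X)
    m = b = 0.0
    for _ in range(epochs):
        error = m * X + b - y  # Residuals of the current line
        m -= learning_rate * np.sum(error * X) / n  # Gradient step along m
        b -= learning_rate * np.sum(error) / n  # Gradient step along b
    cost = np.sum((m * X + b - y) ** 2) / (2 * n)  # Cost of the final line
    return m, b, cost


# Noise-free synthetic data lying exactly on y = 3x + 2
X = np.linspace(0, 1, 50)
y = 3 * X + 2

m, b, cost = fit_line_gd(X, y)
slope, intercept = np.polyfit(X, y, 1)  # Closed-form least-squares fit for comparison
print(f"gradient descent: m={m:.4f}, b={b:.4f}, cost={cost:.2e}")
print(f"np.polyfit:       m={slope:.4f}, b={intercept:.4f}")
```

The larger step size and epoch count here are deliberate: on inputs like these, the fixed defaults in `gradient_descent` (`learning_rate = 0.01`, 1000 epochs) may stop well short of the minimum. Too large a learning rate, however, makes the updates diverge, so both values usually need tuning per dataset.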