Information Retrieval System for Research Papers using Python.

Course Assignment for CS F469 - Information Retrieval @ BITS Pilani, Hyderabad Campus.

Done under the guidance of Dr. Aruna Malapati, Assistant Professor, BITS Pilani, Hyderabad Campus.

- Design Document

- reSEARCH

- Introduction

- Data

- Text Preprocessing

- Data Structures used

- Time complexity of Inserting and Querying

- Tf-Idf formulation

- Machine specs

- Results

- Screenshots

- Members


For setup, run the following commands in order:

python scraper.py

python process_files.py

python create_trie.py l #lemmatized tokens

or

python create_trie.py s #stemmed tokens

or

python create_trie.py n #no stemming/lemmatization

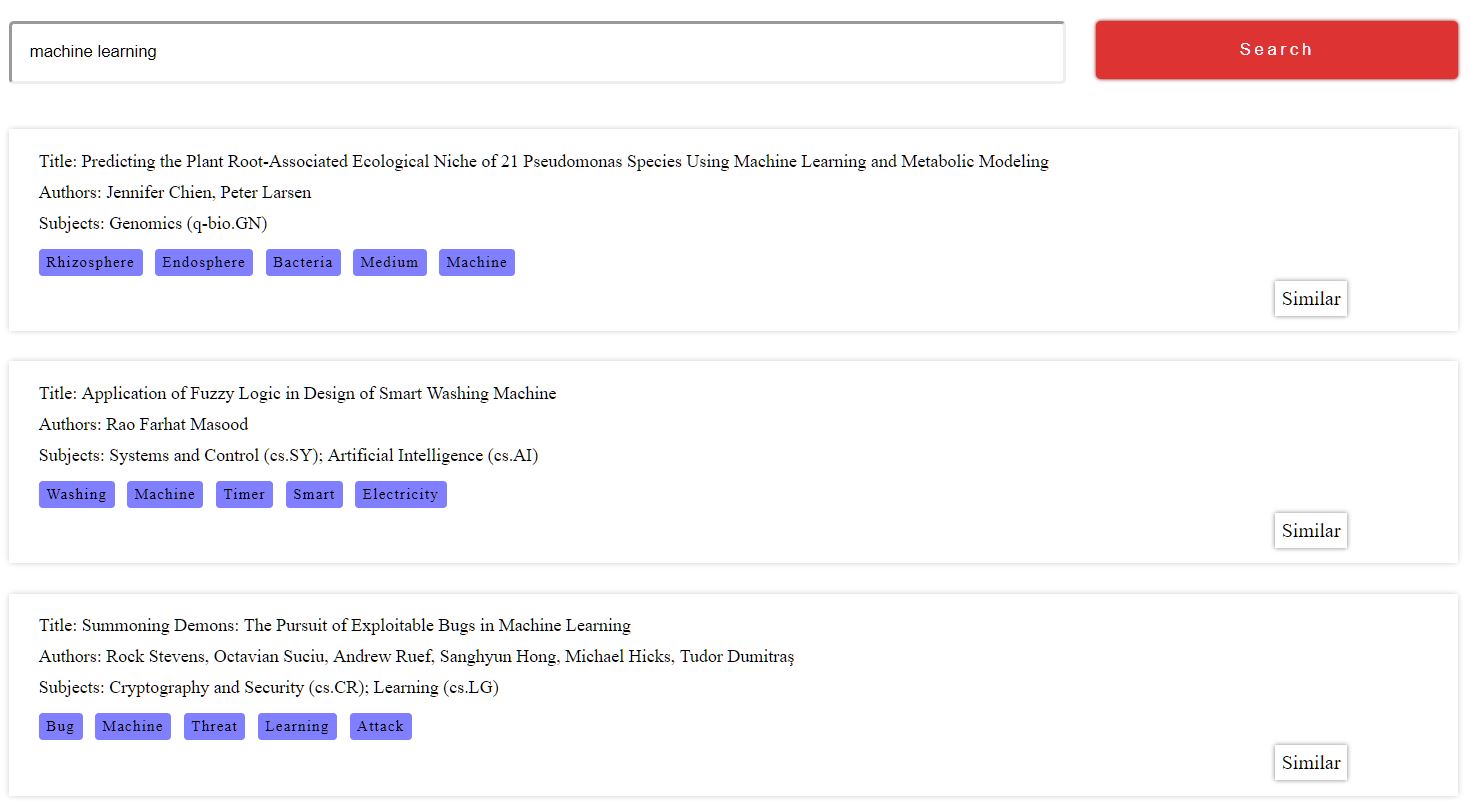

python doc2idx.py

python run.py

A tf-idf based search engine for research papers on Arxiv. The main purpose of this project is to understand how vector-space-based retrieval models work. More on Tf-Idf.

The data has been scraped from Arxiv. The scraper is scraper.py, located in the scraper directory.

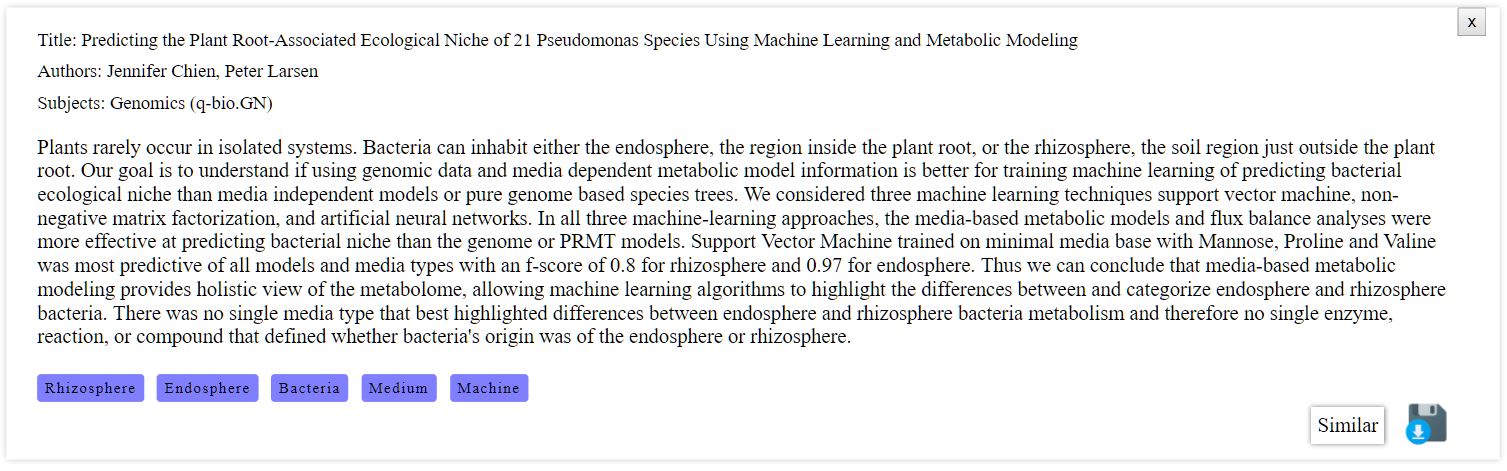

We use the following data of papers from all categories present on Arxiv:

- Title

- Abstract

- Authors

- Subjects

Total terms in vocabulary = 38773. Total documents in corpus = 15686. Note: Only Abstract data has been used for searching.

The data is organized into directories as follows:

Data/

├── abstracts (text files containing the abstract)

├── authors (text files containing authors)

├── link (text files containing link to the pdf of the paper)

├── subject (text files containing subjects)

└── title (text files containing the title)

We processed the raw text scraped from Arxiv by applying the following operations:

- Tokenization

- Stemming

- Lemmatization

- Stopwords removal
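As a rough illustration of the first and last steps above, here is a minimal pure-Python sketch of tokenization and stopword removal. The function names, the regular expression, and the tiny stopword list are assumptions made for this example and are not taken from process_files.py; stemming and lemmatization are left out because they would normally come from an NLP library.

```python
import re

# Tiny illustrative stopword list (an assumption); a real run would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "of", "in", "is", "are",
             "for", "to", "we", "on", "with", "this", "that"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def remove_stopwords(tokens):
    """Drop tokens that appear in the stopword list."""
    return [t for t in tokens if t not in STOPWORDS]


def preprocess(text):
    """Tokenize an abstract, then remove stopwords."""
    return remove_stopwords(tokenize(text))
```

For example, `preprocess("We propose a model for the retrieval of papers.")` keeps only the content words `propose`, `model`, `retrieval` and `papers`.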

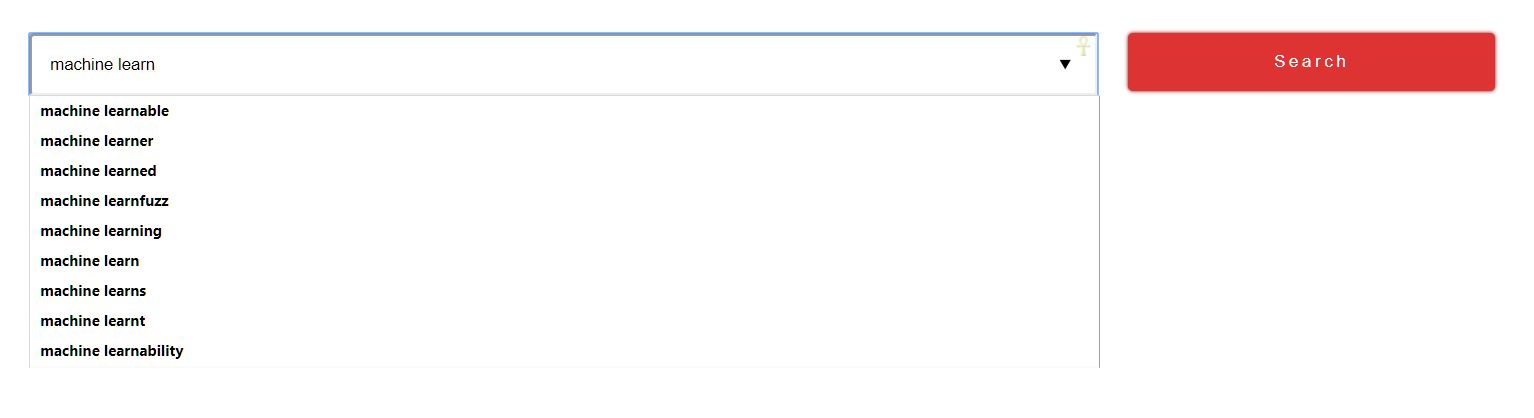

We used a Trie to store each word together with its term frequency in every document that contains it and its document frequency. The Trie is also used to generate typing suggestions while querying.

The time complexity of both inserting a term into the Trie and querying it is O(n), where n is the number of characters in the term.
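To make the data structure concrete, the following is a minimal sketch of a trie that keeps per-document term frequencies at the node where a word ends, derives the document frequency from them, and offers prefix-based suggestions. The class and method names (`TrieNode`, `Trie`, `suggest`, and so on) are hypothetical and may differ from what create_trie.py does; both insertion and lookup walk one node per character, which is where the O(n) bound comes from.

```python
class TrieNode:
    def __init__(self):
        self.children = {}    # character -> TrieNode
        self.is_word = False  # True if a stored word ends at this node
        self.term_freqs = {}  # doc_id -> term frequency in that document


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word, doc_id):
        """Record one occurrence of `word` in document `doc_id`."""
        node = self.root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True
        node.term_freqs[doc_id] = node.term_freqs.get(doc_id, 0) + 1

    def _find(self, prefix):
        """Return the node reached by following `prefix`, or None."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return None
        return node

    def term_frequencies(self, word):
        """Return {doc_id: tf} for `word`, or {} if it was never inserted."""
        node = self._find(word)
        return dict(node.term_freqs) if node and node.is_word else {}

    def document_frequency(self, word):
        """Number of distinct documents that contain `word`."""
        return len(self.term_frequencies(word))

    def suggest(self, prefix, limit=5):
        """Return up to `limit` stored words starting with `prefix`, alphabetically."""
        start = self._find(prefix)
        if start is None:
            return []
        results, stack = [], [(start, prefix)]
        while stack and len(results) < limit:
            node, word = stack.pop()
            if node.is_word:
                results.append(word)
            # Push children in reverse order so they are popped alphabetically.
            for ch in sorted(node.children, reverse=True):
                stack.append((node.children[ch], word + ch))
        return results
```

Suggestions cost more than a single lookup: after the O(n) walk to the prefix node, the traversal visits nodes in the subtree below it until `limit` words are found.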

Tf-Idf score = tf*log(N/df)

- tf → Term frequency of the term in the current document

- df → Document frequency of the term

- N → Total number of documents in corpus
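The formula can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the project's own code: the natural logarithm is used because the source does not give a base, the names `tf_idf`, `rank` and `postings` are hypothetical, and summing scores over the query terms is just one simple way to turn per-term scores into a ranking.

```python
import math


def tf_idf(tf, df, n_docs):
    """tf * log(N / df), with natural log (an assumption); 0.0 if the term is absent."""
    if tf == 0 or df == 0:
        return 0.0
    return tf * math.log(n_docs / df)


def rank(query_terms, postings, n_docs):
    """Sum each document's tf-idf over the query terms; highest score first.

    `postings` maps term -> {doc_id: tf}, so df is the size of the inner dict.
    """
    scores = {}
    for term in query_terms:
        term_freqs = postings.get(term, {})
        df = len(term_freqs)
        for doc_id, tf in term_freqs.items():
            scores[doc_id] = scores.get(doc_id, 0.0) + tf_idf(tf, df, n_docs)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Note that a term occurring in every document gets a score of zero, since log(N/N) = 0, so such terms do not affect the ranking.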

- Processor: i7

- RAM: 8 GB DDR4

- OS: Ubuntu 16.04 LTS

Index building time:

- No stemming/lemmatization - 41.67 s

- Lemmatized text - 76.97 s

- Stemmed text - 146.13 s

Memory usage: around 410 MB.

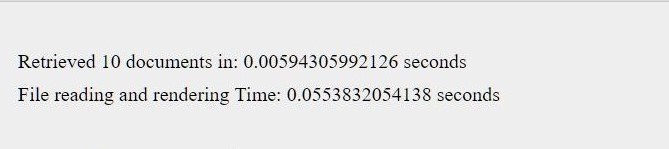

Retrieval time statistics: