A DDoS attack is one of the most serious threats to the current Internet. Router throttling is a popular method for responding to DDoS attacks. Coordinated team learning (CTL) currently adopts tile coding for continuous state representation and strategy learning; it is suitable for this distributed challenge but lacks robustness. Our first contribution is to adopt a deep network as the function approximator for continuous state representation, since deep reinforcement learning has proven robust across many different Atari games with little modification of the learning architecture.

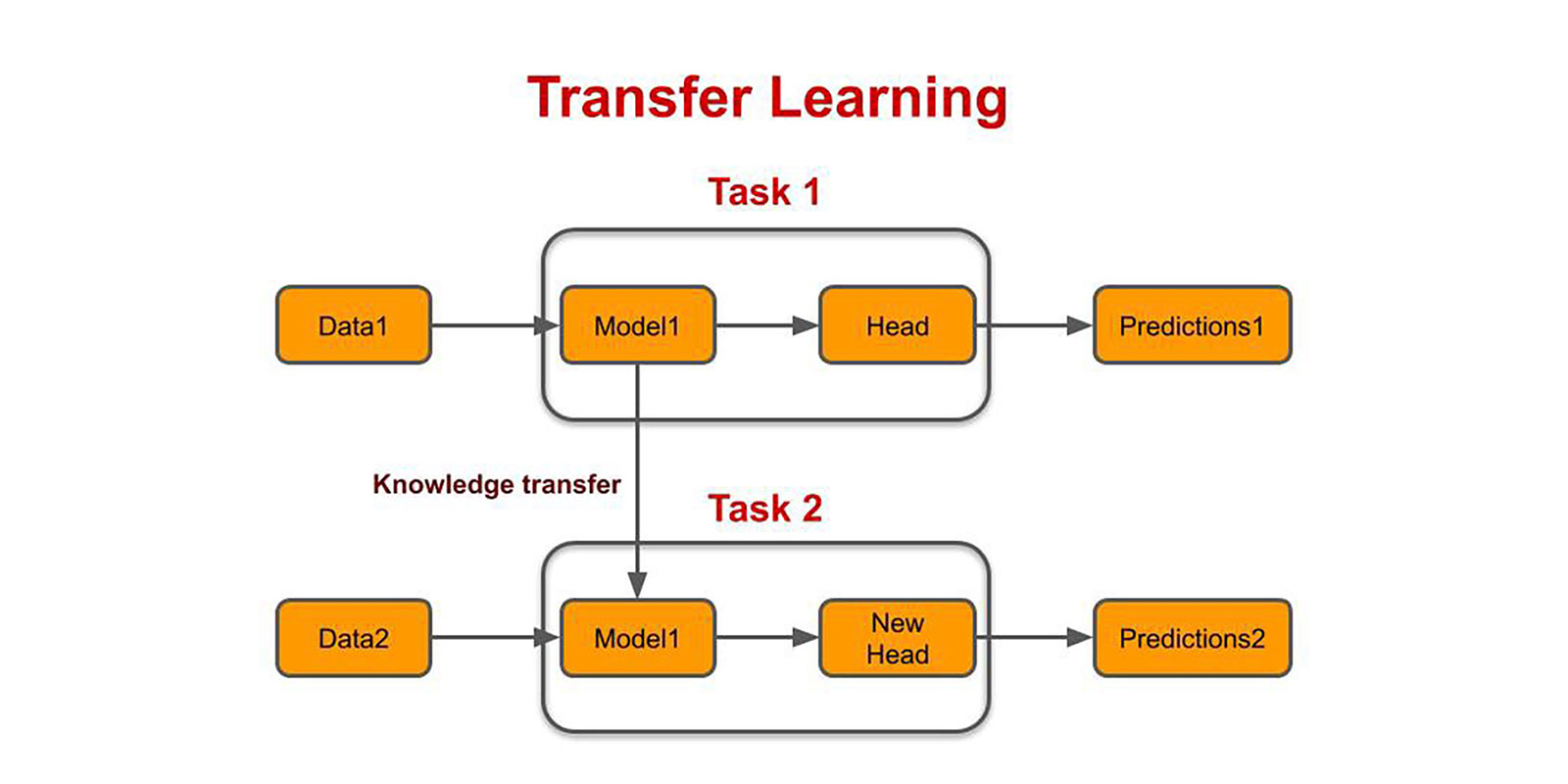
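To make "tile coding for continuous state representation" concrete, here is a minimal sketch of the general technique, not CTL's or this repository's code. It maps a continuous state to one active tile per offset grid and learns a linear Q-value for each throttling action. The state variable (a router's observed load in Mbit/s), the throttle rates, the class names, and all parameter values are hypothetical.

```python
import numpy as np


class TileCoder:
    """Grid tile coding for a box-bounded continuous state.

    n_tilings grids, each offset by a fraction of one tile width; every
    state activates exactly one tile per tiling.
    """

    def __init__(self, low, high, n_tilings=8, tiles_per_dim=10):
        self.low = np.asarray(low, dtype=float)
        self.high = np.asarray(high, dtype=float)
        self.n_tilings = n_tilings
        self.tiles_per_dim = tiles_per_dim
        self.tile_width = (self.high - self.low) / tiles_per_dim
        # Tiling t is shifted by t / n_tilings of a tile width.
        self.offsets = [t / n_tilings * self.tile_width for t in range(n_tilings)]
        # One extra tile per dimension absorbs the shifted upper edge.
        self.tiles_per_tiling = (tiles_per_dim + 1) ** len(self.low)
        self.n_features = n_tilings * self.tiles_per_tiling

    def active_tiles(self, state):
        """Return the index of the single active tile in each tiling."""
        s = np.clip(np.asarray(state, dtype=float), self.low, self.high)
        indices = []
        for t, offset in enumerate(self.offsets):
            coords = np.floor((s - self.low + offset) / self.tile_width).astype(int)
            flat = 0
            for c in coords:  # row-major flattening of the grid coordinates
                flat = flat * (self.tiles_per_dim + 1) + int(c)
            indices.append(t * self.tiles_per_tiling + flat)
        return indices


class TileCodedQ:
    """Linear action-value function over tile-coded features."""

    def __init__(self, coder, n_actions, alpha=0.1):
        self.coder = coder
        self.w = np.zeros((n_actions, coder.n_features))
        # Split the step size among tilings so one update moves Q by alpha * error.
        self.alpha = alpha / coder.n_tilings

    def q(self, state, action):
        return self.w[action, self.coder.active_tiles(state)].sum()

    def update(self, state, action, target):
        """Move Q(state, action) toward target (e.g. a TD target r + gamma * max Q)."""
        tiles = self.coder.active_tiles(state)
        error = target - self.w[action, tiles].sum()
        self.w[action, tiles] += self.alpha * error


# Hypothetical setup: one state variable (a router's observed load in Mbit/s)
# and four throttling actions, each a fraction of traffic to drop.
THROTTLE_RATES = [0.0, 0.25, 0.5, 0.75]
coder = TileCoder(low=[0.0], high=[100.0])
qf = TileCodedQ(coder, n_actions=len(THROTTLE_RATES))
for _ in range(50):
    qf.update([62.0], action=2, target=1.0)

q_trained = qf.q([62.0], 2)  # close to 1.0
q_nearby = qf.q([63.0], 2)   # shares 7 of 8 tiles, so about 7/8 of q_trained
q_far = qf.q([10.0], 2)      # shares no tiles, stays 0.0
```

Because neighboring states share most of their tiles, an update at 62 Mbit/s also raises the estimate at 63 Mbit/s but leaves 10 Mbit/s untouched. This generalization is fixed by the hand-chosen grid, whereas a deep network learns its features from data.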

Data mining and machine learning technologies have already achieved significant success in classification, regression, and clustering. However, many machine learning methods work well only under the assumption that the training and test data are drawn from the same feature space and the same distribution. When the distribution changes, most statistical models need to be rebuilt from scratch using newly collected training data. An overview of transfer learning is shown in Fig.

In many real applications, it is expensive or impossible to recollect the needed training data and rebuild the models from scratch, so reducing the effort of recollecting training data is valuable. In such cases, knowledge transfer, or transfer learning, between task domains is desirable.

Transfer learning in reinforcement learning (RL) is an important topic. For the DDoS response problem, deep-network-based methods can, to some extent, achieve better performance than tile-coding-based methods, but they may need much longer to train. In this problem, the source and target tasks share the same state variables and actions, so for both the homogeneous and the heterogeneous team scenarios we can transfer what is learned in a simple scenario to a more complex one.
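When the source and target tasks share the same state variables and actions, one common way to transfer is parameter transfer: initialize the target agent's network with the source agent's learned weights instead of random values, then keep training in the more complex scenario. The numpy sketch below assumes that technique and a tiny two-layer Q-network; the function names, layer sizes, and the output-layer-only fine-tuning step are hypothetical, not the architecture or transfer scheme used in this repository.

```python
import copy

import numpy as np


def init_q_network(n_state_vars, n_actions, hidden=32, seed=0):
    """Randomly initialize a two-layer Q-network (layer sizes are hypothetical)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.1, size=(n_state_vars, hidden)),
        "b1": np.zeros(hidden),
        "W2": rng.normal(0.0, 0.1, size=(hidden, n_actions)),
        "b2": np.zeros(n_actions),
    }


def q_values(params, state):
    """Forward pass: ReLU hidden layer, then one linear output per action."""
    x = np.asarray(state, dtype=float)
    h = np.maximum(0.0, x @ params["W1"] + params["b1"])
    return h @ params["W2"] + params["b2"]


def transfer(source_params, n_state_vars, n_actions):
    """Initialize a target-task network with the source task's weights.

    This only works because source and target share the same state
    variables and actions, so every weight matrix keeps its shape.
    """
    if source_params["W1"].shape[0] != n_state_vars:
        raise ValueError("target task has different state variables")
    if source_params["W2"].shape[1] != n_actions:
        raise ValueError("target task has a different action set")
    # Deep copy: fine-tuning the target must not alter the source agent.
    return copy.deepcopy(source_params)


def output_layer_step(params, state, action, td_target, lr=0.01):
    """One squared-error gradient step on the output weights of one action."""
    x = np.asarray(state, dtype=float)
    h = np.maximum(0.0, x @ params["W1"] + params["b1"])
    error = td_target - (h @ params["W2"][:, action] + params["b2"][action])
    params["W2"][:, action] += lr * error * h
    params["b2"][action] += lr * error


# Hypothetical sizes: 3 state variables, 4 throttling actions.
source_net = init_q_network(n_state_vars=3, n_actions=4)
target_net = transfer(source_net, n_state_vars=3, n_actions=4)
state = [0.2, 0.5, 0.1]
q_source_before = q_values(source_net, state).copy()
q_target_before = q_values(target_net, state).copy()
# Fine-tune the target only; the source network stays unchanged.
output_layer_step(target_net, state, action=1, td_target=5.0)
```

The transferred network starts with exactly the source agent's Q-values, so training in the complex scenario begins from the simple scenario's policy rather than from scratch, which is the motivation for transfer given the longer training times of deep-network-based methods.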

How to further improve the performance of transfer learning in more complex environments remains future work. One limitation is that, in our approach, agents in the same team share the same structure, whereas in real-world settings each agent may have a different structure. Moreover, the number of agents is smaller than in a real network environment. Since our ultimate goal is to apply the proposed method in real environments, we plan to investigate how it can be extended to model more complex environments. All our experiments are conducted in a simulated environment, and many problems still need to be solved before our method can be deployed in real-world scenarios. Any help via pull requests (PRs) would be appreciated. Cheers 🍻!