DiffDreamer: Consistent Single-view Perpetual View Generation with Conditional Diffusion Models

Shengqu Cai, Eric Ryan Chan, Songyou Peng, Mohamad Shahbazi, Anton Obukhov, Luc Van Gool, Gordon Wetzstein

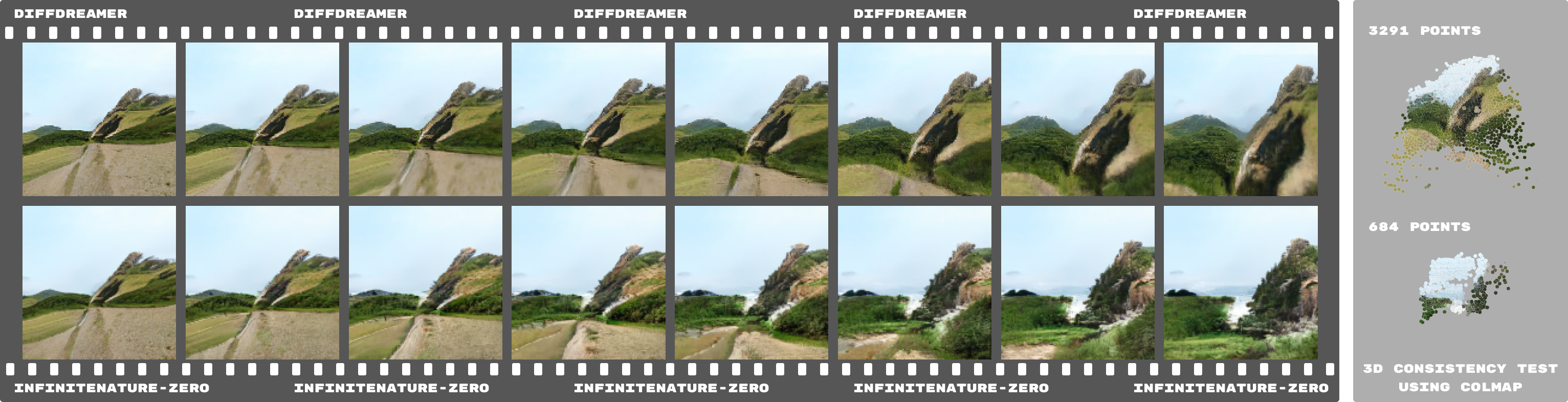

Abstract: Perpetual view generation, the task of generating long-range novel views by flying into a given image, is a relatively new yet promising task. We introduce DiffDreamer, an unsupervised framework capable of synthesizing novel views along a long camera trajectory while training solely on internet-collected images of nature scenes. We demonstrate that image-conditioned diffusion models can effectively perform long-range scene extrapolation while preserving both local and global consistency significantly better than prior GAN-based methods.