- Kubernetes tutorial

- Why is it needed

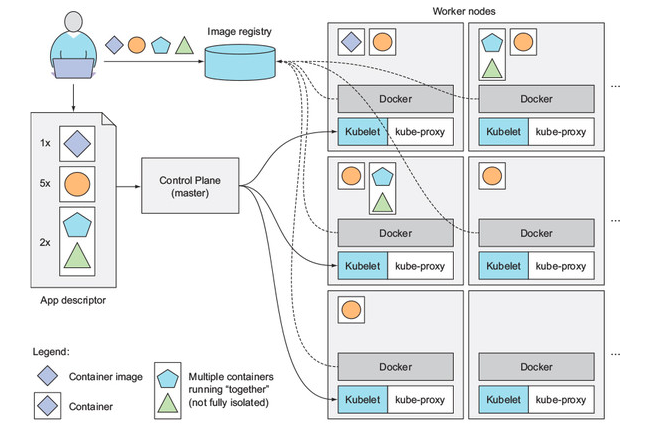

- Architecture

- Pod

- Service

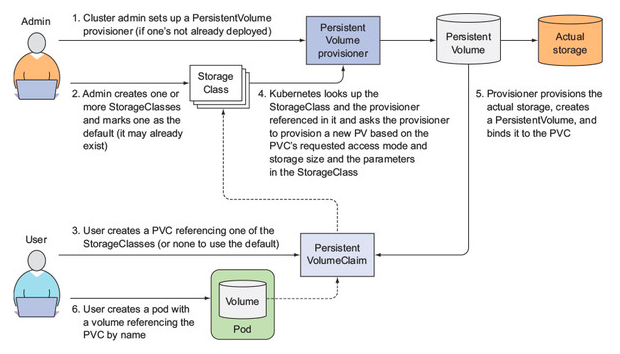

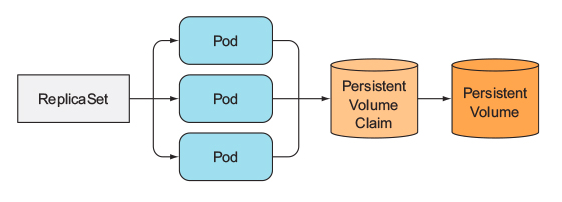

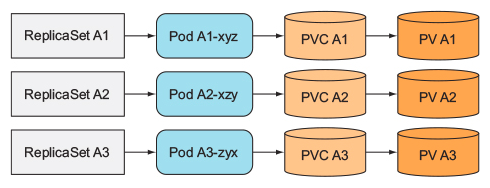

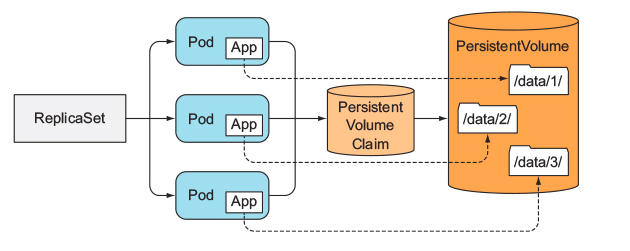

- Persistence

- ENV vars, Configmaps, secrets

- Deployment

- Orchestration

- Hands-on

- 1. Download and install kind

- 2. Use kind to bootstrap a local Kubernetes cluster

- 3. See status of cluster and confirm it is running

- 4. Get a docker-compose file

- 5. Create namespace

- 6. Prepare kubectl

- 7. Generate Kubernetes resource files

- 8. Create configmaps

- 9. Create secret if pulling image from external sources

- 10. Deploy resources

- 11. Confirm deployment

- kubectl Cheatsheet in zsh

- Docs

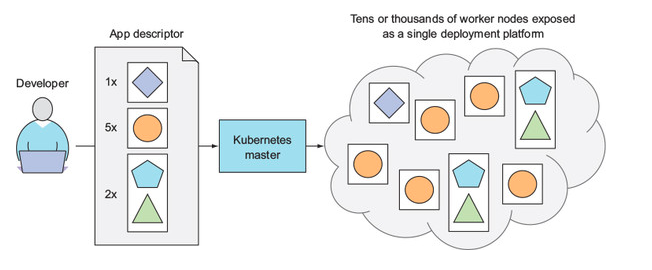

Kubernetes enables developers to deploy their applications themselves and as often as they want, without requiring any assistance from the operations (ops) team. But Kubernetes doesn’t benefit only developers. It also helps the ops team by automatically monitoring and rescheduling those apps in the event of a hardware failure. The focus for system administrators (sysadmins) shifts from supervising individual apps to mostly supervising and managing Kubernetes and the rest of the infrastructure, while Kubernetes itself takes care of the apps.

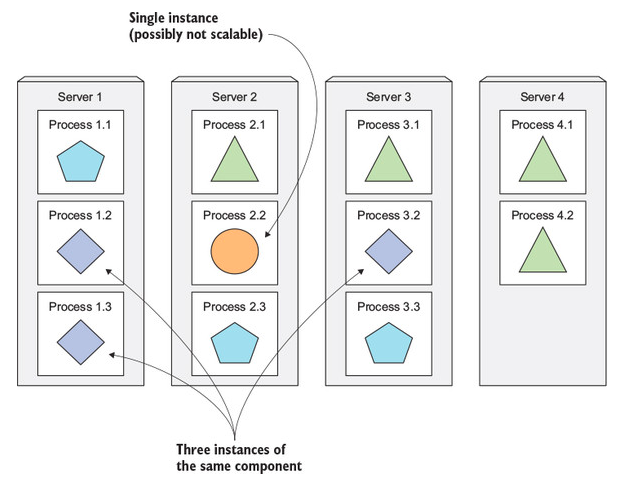

Kubernetes abstracts away the hardware infrastructure and exposes your whole datacenter as a single enormous computational resource. It allows you to deploy and run your software components without having to know about the actual servers underneath.

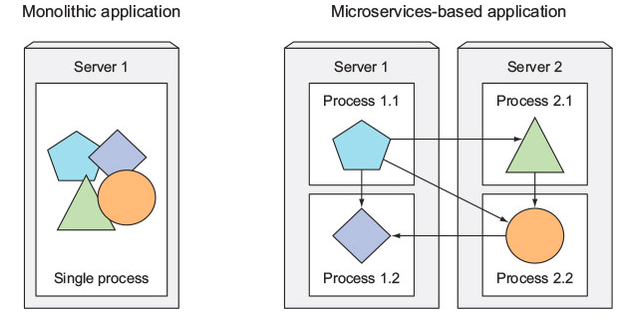

The move from monoliths to microservices creates a need for:

- A system to orchestrate microservices

- Granular control over individual microservices

- A consistent environment for applications

- Continuous delivery

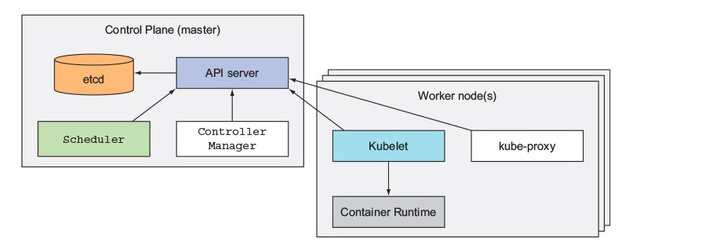

Components:

- API server: the communication hub for developers and for the other components

- Scheduler: assigns pods to nodes based on available resources

- Controller manager: cluster-level functions such as replicating components, tracking worker nodes, and handling node failures

- etcd: distributed key-value store for cluster state and configuration, originally developed by CoreOS (comparable to ZooKeeper)

- Container runtime: runs the containers. Direct Docker support is deprecated in favor of the Container Runtime Interface (CRI), which supports containerd and CRI-O

- Kubelet: node-local agent that manages containers and syncs with the API server

- kube-proxy: handles network routing for services on each node

Handles 4 basic resources:

- Compute

- Memory

- Storage

- Network

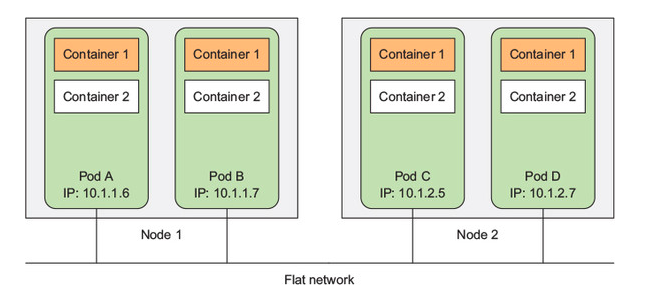

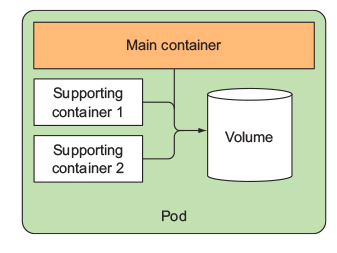

Pod is a co-located group of containers and represents the basic building block in Kubernetes.

In most cases, a pod runs a single container. Pods with multiple containers are reserved for niche use cases, such as containers that must be placed together. A pod has these characteristics:

- Sharing network interface & ip address

- Sharing hostname

- IPC / unix socket communication

- Filesystem isolation

- Functions as a scaling unit
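As a rough illustration of the multi-container case, the manifest below sketches a pod whose two containers share a volume and a network namespace. The pod name, container names, images, and paths are all hypothetical and not taken from this tutorial.

```yaml
# Hypothetical example: names, images, and paths are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
  labels:
    app: web
spec:
  volumes:
    # Scratch volume that lives as long as the pod does
    - name: shared-logs
      emptyDir: {}
  containers:
    - name: web
      image: nginx:1.19
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/nginx
    - name: log-tailer
      image: busybox:1.32
      # Shares the pod's IP address and hostname with the web container
      command: ["sh", "-c", "tail -F /logs/access.log"]
      volumeMounts:
        - name: shared-logs
          mountPath: /logs
```

Each container still has its own root filesystem; only the explicitly mounted volume is shared.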

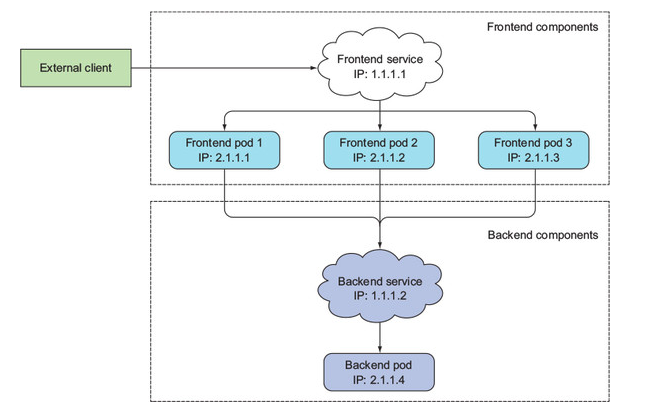

A Service groups pods that provide the same functionality and gives them a stable entry point for networking and service discovery:

- Single IP address & port

- Routing and hostname resolution

- Connect to external load balancers
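A minimal sketch of such a Service, assuming a set of pods labeled `app: web` that listen on port 80 (both the label and the Service name are assumptions for illustration):

```yaml
# Hypothetical example: the name and selector label are illustrative only.
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  type: ClusterIP        # default type: reachable only inside the cluster
  selector:
    app: web             # traffic goes to pods carrying this label
  ports:
    - port: 80           # port exposed on the Service's cluster IP
      targetPort: 80     # port the selected pods listen on
```

Inside the cluster, other pods in the same namespace can then reach these pods through the DNS name `web`.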

Ways to expose a Service outside the cluster:

- NodePort

  - Reserves a port across all nodes

  - Any node can be used to reach the service

- LoadBalancer

  - Uses a cloud load balancer such as ELB

- Ingress controller

  - Global nginx + ELB
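For the NodePort option, a sketch could look like the following. The Service name, the `app: web` selector, and the chosen port number are assumptions.

```yaml
# Hypothetical example: name, selector, and port number are illustrative only.
apiVersion: v1
kind: Service
metadata:
  name: web-nodeport
spec:
  type: NodePort
  selector:
    app: web
  ports:
    - port: 80
      targetPort: 80
      nodePort: 30080    # must fall within the default 30000-32767 range
```

Changing `type` to `LoadBalancer` asks the cloud provider to provision an external load balancer instead; on a local kind cluster that request would normally stay pending.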

Volume types:

- Cloud

  - EBS

  - EFS

- persistentVolumeClaim

  - pre-provisioned

- local

- configMap

- secret

- Git repo etc.
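To make the persistentVolumeClaim option concrete, here is a sketch of a claim and a pod that mounts it. The claim name, size, and password value are assumptions; the image and mount path mirror the Postgres service used later in the hands-on part.

```yaml
# Hypothetical example: claim name, size, and password are illustrative only.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: primary-db-data
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi       # no storageClassName, so the cluster default is used
---
apiVersion: v1
kind: Pod
metadata:
  name: primary-db
spec:
  containers:
    - name: postgres
      image: postgres:12-alpine
      env:
        - name: POSTGRES_PASSWORD
          value: example # placeholder; a Secret is the better home for this
      volumeMounts:
        - name: data
          mountPath: /var/lib/postgresql/data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: primary-db-data
```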

ENV vars: defined directly in pod definitions

Configmaps:

- storage for key-value pairs

- meant for configuration storage

- treated as a volume

- passed to containers as env vars

- can be passed as command line arg or file
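The two most common ways of consuming a ConfigMap, as an env var and as a mounted file, can be sketched as follows. All names, keys, and values here are hypothetical.

```yaml
# Hypothetical example: names, keys, and values are illustrative only.
apiVersion: v1
kind: ConfigMap
metadata:
  name: app-config
data:
  LOG_LEVEL: info
  app.properties: |
    greeting=hello
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo
spec:
  containers:
    - name: app
      image: busybox:1.32
      command: ["sh", "-c", "echo $LOG_LEVEL; cat /etc/app/app.properties; sleep 3600"]
      env:
        # Single key passed as an environment variable
        - name: LOG_LEVEL
          valueFrom:
            configMapKeyRef:
              name: app-config
              key: LOG_LEVEL
      volumeMounts:
        # Every key becomes a file under /etc/app
        - name: config
          mountPath: /etc/app
  volumes:
    - name: config
      configMap:
        name: app-config
```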

Secrets:

- storage for key-value pairs

- same as configmaps

- base64-encoded by default, not encrypted; encryption at rest has to be enabled on the cluster
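A sketch of a Secret and a pod reading one of its keys is shown below. The Secret name and pod name are assumptions; the credential values reuse the sample database settings from the hands-on part.

```yaml
# Hypothetical example: resource names are illustrative only.
apiVersion: v1
kind: Secret
metadata:
  name: db-credentials
type: Opaque
stringData:              # plain text here; the API server stores it base64-encoded under data
  POSTGRES_USER: diamond
  POSTGRES_PASSWORD: password
---
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo
spec:
  containers:
    - name: postgres
      image: postgres:12-alpine
      env:
        - name: POSTGRES_PASSWORD
          valueFrom:
            secretKeyRef:
              name: db-credentials
              key: POSTGRES_PASSWORD
```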

Image pull secrets:

- same as secrets

- used to pull container images from external services
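A pod refers to an image pull secret by name. The sketch below assumes a docker-registry secret named `quay.io` already exists in the pod's namespace; the pod name and image path are hypothetical.

```yaml
# Hypothetical example: pod name and image path are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: private-image-demo
spec:
  imagePullSecrets:
    - name: quay.io      # docker-registry secret in the same namespace
  containers:
    - name: app
      image: quay.io/example-org/example-app:latest
```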

Supports multiple deployment patterns:

- Rolling

- Blue-green

- Canary

Deployments manage pods through ReplicaSets, which keep the desired number of replicas running
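As an illustration of the rolling pattern, a Deployment might be declared as below. The name, label, image, and surge settings are assumptions chosen for the sketch.

```yaml
# Hypothetical example: name, label, image, and tuning values are illustrative only.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # at most one extra pod during an update
      maxUnavailable: 0  # never drop below the desired replica count
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.19
          ports:
            - containerPort: 80
```

Rolling updates are built into the Deployment resource. Blue-green and canary rollouts are usually assembled from several Deployments and Services, or from additional tooling.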

Arch / Manjaro:

```shell
# -S installs the package (-Ss only searches for it)
yay -S aur/kind-bin
```

Other Linux:

```shell
curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.10.0/kind-linux-amd64
chmod +x ./kind
# Writing to /usr/bin usually requires root
sudo mv ./kind /usr/bin/kind
```

Mac:

```shell
brew install kind
```

Windows: 🙂

```shell
kind create cluster
```

```
Creating cluster "kind" ...
 ✓ Ensuring node image (kindest/node:v1.19.1) 🖼
 ✓ Preparing nodes 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
Set kubectl context to "kind-kind"
You can now use your cluster with:

kubectl cluster-info --context kind-kind

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/
```

```shell
kubectl cluster-info --context kind-kind
kind get clusters
```

Generate an arda project:

```shell
export project_name=diamondhands
export project_title="Diamond hands :diamond:"
export project_description="Forever HODLer"
export db_name=gold
export db_username=diamond
export db_password=password
make new
cd diamondhands
# You should have a docker-compose.yml file here
```

Modify it to conform to the Docker Compose v3 file format.

Or use this:

```yaml
version: "3"
services:
  primary-db:
    image: postgres:12-alpine
    env_file:
      - ./env-sandbox/db.env
    ports:
      - "5432:5432"
    volumes:
      - primary-db-data:/var/lib/postgresql/data
    deploy:
      mode: replicated
      replicas: 1
      resources:
        limits:
          cpus: '0.001'
          memory: 1024M
        reservations:
          cpus: '0.001'
          memory: 512M
    labels:
      kompose.service.type: "clusterip"
volumes:
  primary-db-data:
```

```shell
mkdir env-sandbox
touch env-sandbox/db.env
```

```
# env-sandbox/db.env
POSTGRES_PASSWORD=${PGPASSWORD}
POSTGRES_USER=${PGUSER}
POSTGRES_DB=${PGDATABASE}
```

Or fetch your own docker-compose v3 file.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ktest
```

Store as namespace.yaml.

```shell
KUBECTL='kubectl --namespace ktest'
# KUBECTL='kubectl --dry-run=client'
# Relies on word splitting of $KUBECTL (bash/sh); in zsh, write ${=KUBECTL} instead
$KUBECTL apply -f ./namespace.yaml

export PROJECT_NAME=diamondhands
export ENVIRONMENT=sandbox
```

```shell
mkdir kubernetes
cd kubernetes
# With -c, kompose generates a Helm chart
kompose convert --error-on-warning --provider kubernetes -c -f ../docker-compose.yml
# Without -c, kompose generates plain Kubernetes manifests
kompose convert --error-on-warning --provider kubernetes -f ../docker-compose.yml
```

Set some default options to false:

```shell
for pod in *-pod.yaml; do
  yq -y '.spec.enableServiceLinks = false' "$pod" > tmp
  mv tmp "$pod"
done
```

Format all envs into a directory .env.

```shell
for filename in env-*; do
  CFG_NAME=$(echo "$filename" | cut -d '.' -f 1)
  $KUBECTL create configmap --from-env-file "./${filename}" "env-${ENVIRONMENT}-${CFG_NAME}-env" || true
done

# For a single file such as db.env, this amounts to:
$KUBECTL create configmap --from-env-file ./db.env env-sandbox-db-env
cd ..
```

ECR:

```shell
export ENVIRONMENT=sandbox
export AWS_ACCOUNT_ID=1
export AWS_DEFAULT_REGION=ap-south-1
PASS=$(aws ecr get-login-password --region $AWS_DEFAULT_REGION)
$KUBECTL create secret docker-registry $ENVIRONMENT-aws-ecr-$AWS_DEFAULT_REGION \
  --docker-server=$AWS_ACCOUNT_ID.dkr.ecr.$AWS_DEFAULT_REGION.amazonaws.com \
  --docker-username=AWS \
  --docker-password="$PASS" \
  --docker-email=lel@lol.com --namespace ktest
```

Quay:

```shell
EXISTS=$($KUBECTL get secret "quay.io" | tail -n 1 | cut -d ' ' -f 1)
if [ "$EXISTS" != "quay.io" ]; then
  $KUBECTL create secret docker-registry quay.io \
    --docker-server=quay.io \
    --docker-username=setuinfra+your-bot-name \
    --docker-password=get-your-own-key \
    --docker-email=lel@lol.com --namespace ktest
fi
```

```shell
$KUBECTL apply -f ./kubernetes/ --namespace ktest
```

```shell
kubectl logs primary-db-79df5979cb-lrljd -n ktest --tail 100 --follow
kubectl exec --stdin --tty primary-db-79df5979cb-lrljd -n ktest -- /bin/bash
```

https://kubernetes.io/docs/reference/kubectl/cheatsheet/

```shell
alias k="kubectl"
source <(kubectl completion zsh)
source <(kompose completion zsh)
alias k_services="k get services --sort-by=.metadata.name --all-namespaces"
alias k_pods="k get pods --all-namespaces -o wide"
alias k_restart="k get pods --all-namespaces --sort-by='.status.containerStatuses[0].restartCount'"
alias k_volumes="k get pv --all-namespaces --sort-by=.spec.capacity.storage"
alias k_running="k get pods --all-namespaces --field-selector=status.phase=Running"
alias k_nodes="k get nodes --all-namespaces"
# Single quotes defer the expansion of $POD until the alias is used
alias k_shell='k exec --stdin --tty $POD -- /bin/sh'
alias k_events="k get events --watch --all-namespaces"
alias k_secrets="k get secrets --all-namespaces"
alias k_configs="k get configMaps --all-namespaces"
alias k_context="k config current-context"
alias k_set_context="k config use-context "
# Inner single quotes keep the jsonpath expression from ending the alias string
alias k_images="kubectl get pods --all-namespaces -o jsonpath='{..image}' |\
tr -s '[[:space:]]' '\n' |\
sort |\
uniq -c"
```

- https://kubernetes.io/docs/tasks/tools/

- https://kind.sigs.k8s.io/docs/user/quick-start/

- https://kubernetes.io/docs/tasks/configure-pod-container/translate-compose-kubernetes/

- https://kompose.io/user-guide/

- https://docs.docker.com/compose/compose-file/compose-file-v3/#links

- https://kubernetes.io/docs/concepts/services-networking/service/#publishing-services-service-types

- https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

- https://stackoverflow.com/questions/45079988/ingress-vs-load-balancer

- https://github.com/Kong/kubernetes-ingress-controller

- https://docs.konghq.com/kubernetes-ingress-controller/1.1.x/guides/getting-started/

- https://kubernetes.github.io/ingress-nginx/

- https://kubernetes.io/docs/concepts/configuration/configmap/

- https://kubernetes.io/docs/concepts/configuration/secret/

- https://cloud.google.com/blog/products/containers-kubernetes/your-guide-kubernetes-best-practices

- https://techbeacon.com/devops/one-year-using-kubernetes-production-lessons-learned

- https://kubernetes.io/docs/tasks/debug-application-cluster/debug-service/

- Shell: https://kubernetes.io/docs/tasks/debug-application-cluster/get-shell-running-container/

- For running containers: https://kubernetes.io/docs/tasks/debug-application-cluster/debug-running-pod/#container-exec

- For stopped containers: https://kubernetes.io/docs/tasks/debug-application-cluster/debug-running-pod/#ephemeral-container

- Extensions: https://github.com/aylei/kubectl-debug#install-the-kubectl-debug-plugin

Stopped container debugging:

Override the default troubleshooting image:

```shell
kubectl debug POD_NAME --image aylei/debug-jvm
```

Override the entrypoint of the debug container:

```shell
kubectl debug POD_NAME --image aylei/debug-jvm /bin/bash
```

Other orchestrators:

- ECS

- Docker swarm

- Nomad + Consul