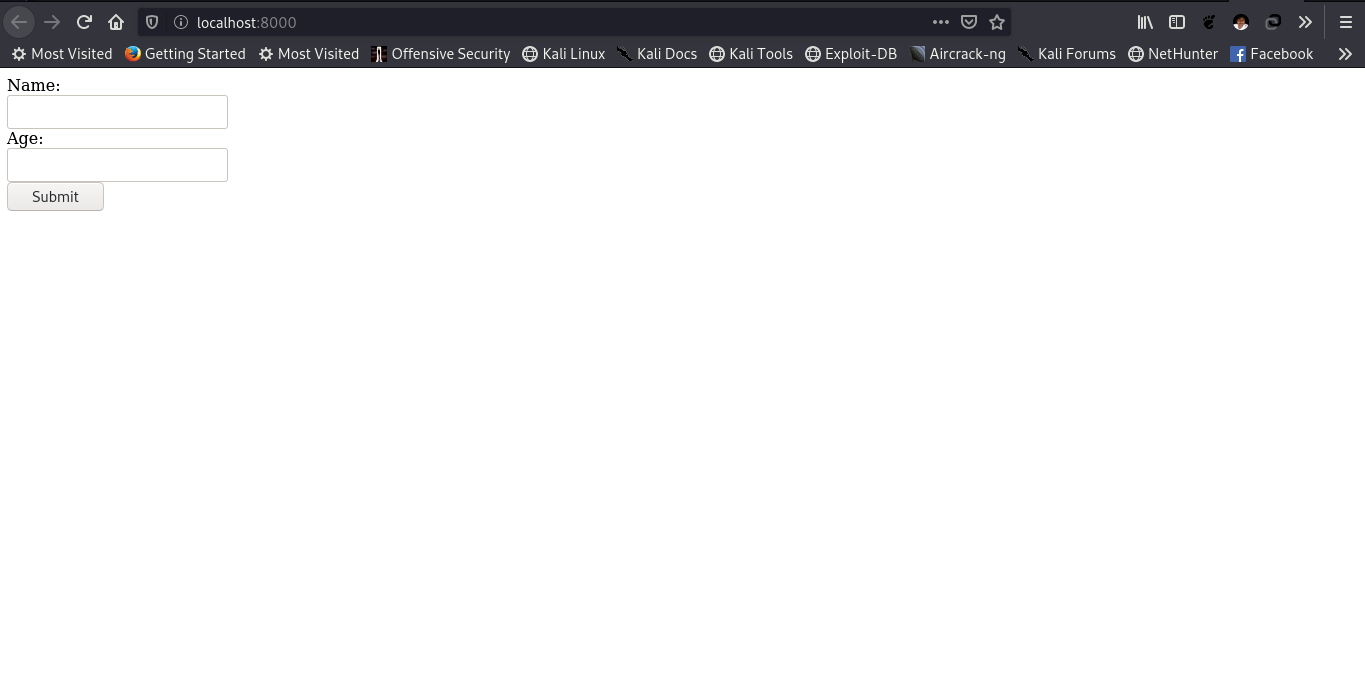

- Create a virtual environment. To avoid dependency issues, use Python 3.7.1:

$ python3 -m venv dependency_env

- Activate the virtual environment:

$ source dependency_env/bin/activate

- Install the package requirements in the virtual environment:

$ pip install -r requirements.txt
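To confirm the environment is set up as described, a small standard-library check can report whether a venv is active and whether the interpreter is a 3.7 release. This is only a sketch; the helper names `in_virtualenv` and `version_matches` are illustrative and not part of this project.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def version_matches(expected=(3, 7), actual=None) -> bool:
    """Compare the interpreter's (major, minor) with the expected pair."""
    actual = sys.version_info if actual is None else actual
    return tuple(actual[:2]) == tuple(expected)


print("virtualenv active:", in_virtualenv())
print("python 3.7.x:", version_matches())
```

Run it with the same interpreter you use for manage.py, after activating dependency_env.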

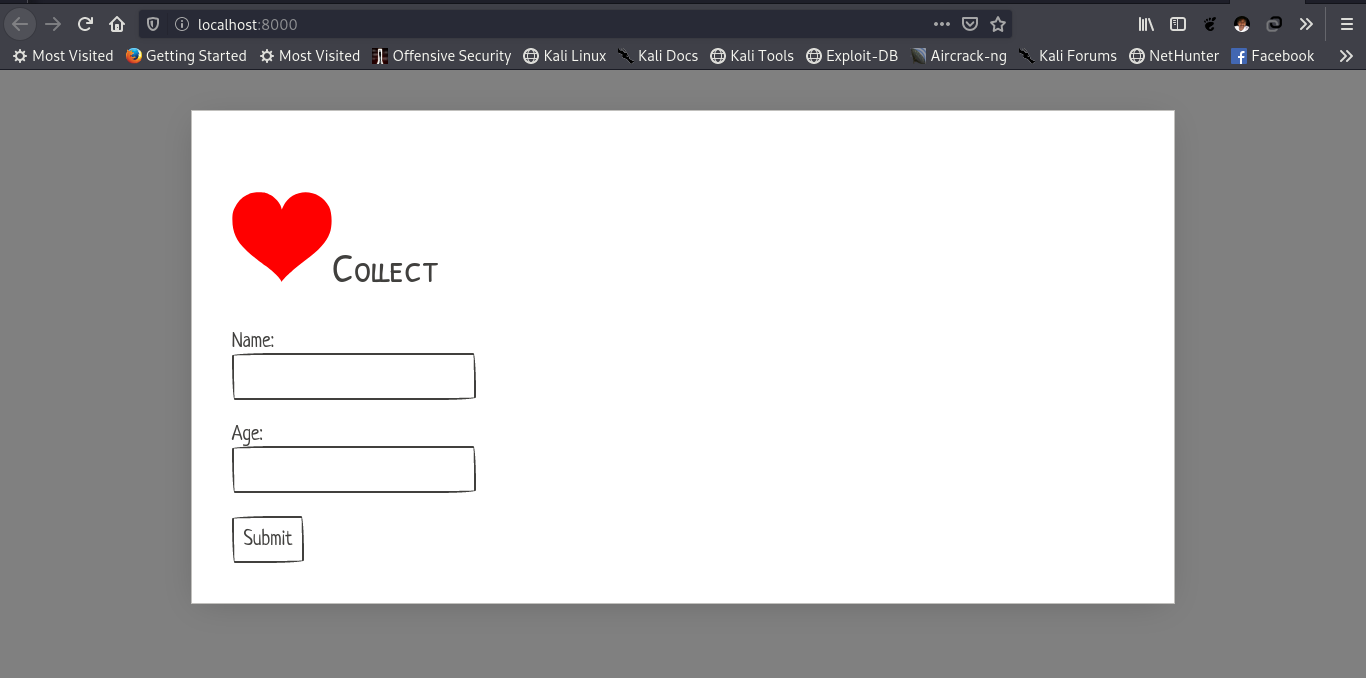

$ python3 manage.py makemigrations

$ python3 manage.py migrate

$ python3 manage.py runserver

$ python post_data.py

- Integrated real-time data pushing code

- Real-time API generation

Delete the migrations folder and the db.sqlite file. Then create a new migrations folder with an __init__.py file inside it. Finally, rerun the three Django commands above (makemigrations, migrate, runserver) to remove the error 😀😀.
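The reset steps can also be scripted. The sketch below uses only the standard library; `reset_migrations` is a hypothetical helper, and the app folder and database path are parameters because this README does not name them.

```python
import shutil
from pathlib import Path


def reset_migrations(app_dir, db_file):
    """Recreate an app's migrations package and remove the SQLite file."""
    migrations = Path(app_dir) / "migrations"
    # Remove the old migrations folder if it exists.
    if migrations.exists():
        shutil.rmtree(migrations)
    # Recreate it as an empty Python package.
    migrations.mkdir(parents=True)
    (migrations / "__init__.py").touch()
    # Remove the SQLite database so migrate starts from a clean state.
    db = Path(db_file)
    if db.exists():
        db.unlink()
    return migrations
```

For example, `reset_migrations("myapp", "db.sqlite3")`, where `myapp` stands in for your Django app folder and the database filename should match your settings. This deletes all stored data, so use it only on a development database.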

- To create config files for each broker:

- Navigate to the kafka folder

- Open a terminal

- Execute the following copy commands:

$ cp config/server.properties config/server-1.properties

$ cp config/server.properties config/server-2.properties

After copying the config files, paste the following content into each one.

For config/server-1.properties:

broker.id=1

listeners=PLAINTEXT://:9093

log.dir=/tmp/kafka-logs-1

zookeeper.connect=localhost:2181

For config/server-2.properties:

broker.id=2

listeners=PLAINTEXT://:9094

log.dir=/tmp/kafka-logs-2

zookeeper.connect=localhost:2181
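The per-broker edits can be generated instead of pasted by hand. The sketch below replaces matching keys in the text of server.properties and appends any that are missing. One deliberate difference: it writes `log.dirs` rather than `log.dir`, on the assumption that the copied server.properties already sets `log.dirs`, which Kafka reads in preference to `log.dir`. The function names are illustrative.

```python
def broker_overrides(broker_id, base_port=9092):
    """Settings that differ per broker, following the values listed above."""
    return {
        "broker.id": str(broker_id),
        "listeners": f"PLAINTEXT://:{base_port + broker_id}",
        "log.dirs": f"/tmp/kafka-logs-{broker_id}",
        "zookeeper.connect": "localhost:2181",
    }


def apply_overrides(base_text, overrides):
    """Return properties text with the given keys replaced or appended."""
    remaining = dict(overrides)
    out = []
    for line in base_text.splitlines():
        key = line.split("=", 1)[0].strip()
        # Leave comments alone; replace only active key=value lines.
        if not line.lstrip().startswith("#") and key in remaining:
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    # Keys that were not present in the base file go at the end.
    out.extend(f"{k}={v}" for k, v in remaining.items())
    return "\n".join(out) + "\n"
```

To use it, read config/server.properties, call `apply_overrides(text, broker_overrides(1))`, and write the result to config/server-1.properties; repeat with `2` for the second broker.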

- Set up Kafka systemd unit files (these let you start and stop the Kafka services with the systemctl command)

First, create a systemd unit file for Zookeeper with the command below:

$ sudo vim /etc/systemd/system/zookeeper.service

Then add the content below:

[Unit]

Description=Apache Zookeeper server

Documentation=http://zookeeper.apache.org

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

ExecStart=/usr/local/kafka/bin/zookeeper-server-start.sh /usr/local/kafka/config/zookeeper.properties

ExecStop=/usr/local/kafka/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

Save the file and close it.

Next, create a Kafka systemd unit file using the following command:

$ sudo vim /etc/systemd/system/kafka.service

Then add the content below:

[Unit]

Description=Apache Kafka Server

Documentation=http://kafka.apache.org/documentation.html

Requires=zookeeper.service

[Service]

Type=simple

Environment="JAVA_HOME=/usr/lib/jvm/java-1.11.0-openjdk-amd64"

ExecStart=/usr/local/kafka/bin/kafka-server-start.sh /usr/local/kafka/config/server.properties

ExecStop=/usr/local/kafka/bin/kafka-server-stop.sh

[Install]

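WantedBy=multi-user.target

Save the file and close it.

Repeat the same steps for kafka1.service and kafka2.service, using kafka.service as the model and pointing ExecStart at the matching config file (server-1.properties or server-2.properties).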
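The extra unit files differ from kafka.service only in which properties file ExecStart loads. A sketch that renders both from one template, assuming the brokers use config/server-1.properties and config/server-2.properties from the copy step; `render_unit` and the Description wording are illustrative:

```python
KAFKA_HOME = "/usr/local/kafka"  # install path used in the unit files above

UNIT_TEMPLATE = """[Unit]
Description=Apache Kafka Server (broker {broker_id})
Documentation=http://kafka.apache.org/documentation.html
Requires=zookeeper.service

[Service]
Type=simple
Environment="JAVA_HOME=/usr/lib/jvm/java-1.11.0-openjdk-amd64"
ExecStart={home}/bin/kafka-server-start.sh {home}/config/server-{broker_id}.properties
ExecStop={home}/bin/kafka-server-stop.sh

[Install]
WantedBy=multi-user.target
"""


def render_unit(broker_id, home=KAFKA_HOME):
    """Return the unit file text for one extra broker."""
    return UNIT_TEMPLATE.format(broker_id=broker_id, home=home)


# Print both files so they can be reviewed before being saved as root.
for broker_id in (1, 2):
    print(f"# /etc/systemd/system/kafka{broker_id}.service")
    print(render_unit(broker_id))
```

The script only prints the text; saving it under /etc/systemd/system still requires root privileges.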

- Reload systemd so that it picks up the new unit files:

$ sudo systemctl daemon-reload

- Start Zookeeper and the brokers:

$ sudo systemctl start zookeeper

$ sudo systemctl start kafka

$ sudo systemctl start kafka1

$ sudo systemctl start kafka2

- To check whether the brokers are running:

$ sudo systemctl status kafka

$ sudo systemctl status kafka1

$ sudo systemctl status kafka2
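systemctl status reports whether each service process is alive. To also confirm that every broker is accepting connections, you can try its listener port. A sketch assuming the ports 9092, 9093 and 9094 used in this guide; `port_open` and `broker_status` are hypothetical helper names:

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat as not listening.
        return False


def broker_status(ports=(9092, 9093, 9094), host="localhost"):
    """Map each broker port to whether something is listening on it."""
    return {port: port_open(host, port) for port in ports}
```

Calling `broker_status()` on the Kafka machine returns a dictionary keyed by port. An open port only shows that something is listening there, so treat it as a quick check rather than proof of a healthy broker.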

$ cd /usr/local/kafka

$ bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 3 --partitions 10 --topic hospital

$ cd /usr/local/kafka

$ bin/kafka-console-consumer.sh --bootstrap-server localhost:9092,localhost:9093,localhost:9094 --topic hospital --from-beginning

Or run the Python consumer instead:

$ python consumer.py
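The contents of consumer.py are not shown in this README. As a rough idea of what such a script could look like, the sketch below uses the kafka-python package (an assumption; install it with pip install kafka-python) and assumes the messages are UTF-8 JSON. The function names are illustrative.

```python
import json

TOPIC = "hospital"
BOOTSTRAP_SERVERS = ["localhost:9092", "localhost:9093", "localhost:9094"]


def decode_value(raw):
    """Decode a message payload as UTF-8 JSON, falling back to plain text."""
    if raw is None:
        return None
    text = raw.decode("utf-8")
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text


def consume(topic=TOPIC, servers=BOOTSTRAP_SERVERS):
    """Print every record from the topic until interrupted."""
    # Imported here so decode_value works without kafka-python installed.
    from kafka import KafkaConsumer

    consumer = KafkaConsumer(
        topic,
        bootstrap_servers=servers,
        # Start from the oldest record when no offset has been committed,
        # similar to --from-beginning in the console consumer.
        auto_offset_reset="earliest",
        value_deserializer=decode_value,
    )
    for record in consumer:
        print(record.partition, record.offset, record.value)
```

Call `consume()` once the brokers and the hospital topic are up; stop it with Ctrl+C.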

- Make sure you have the "spark-streaming-kafka-0-8-assembly_2.11-2.4.5.jar" file on your PC (for offline mode). If not, download it.

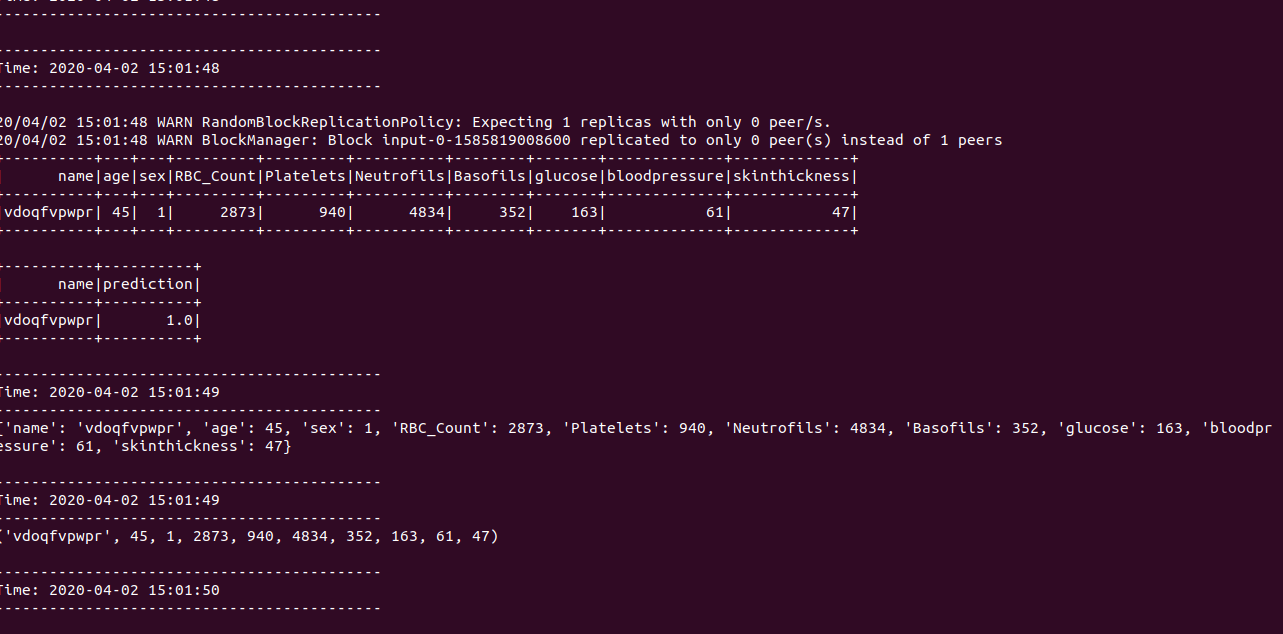

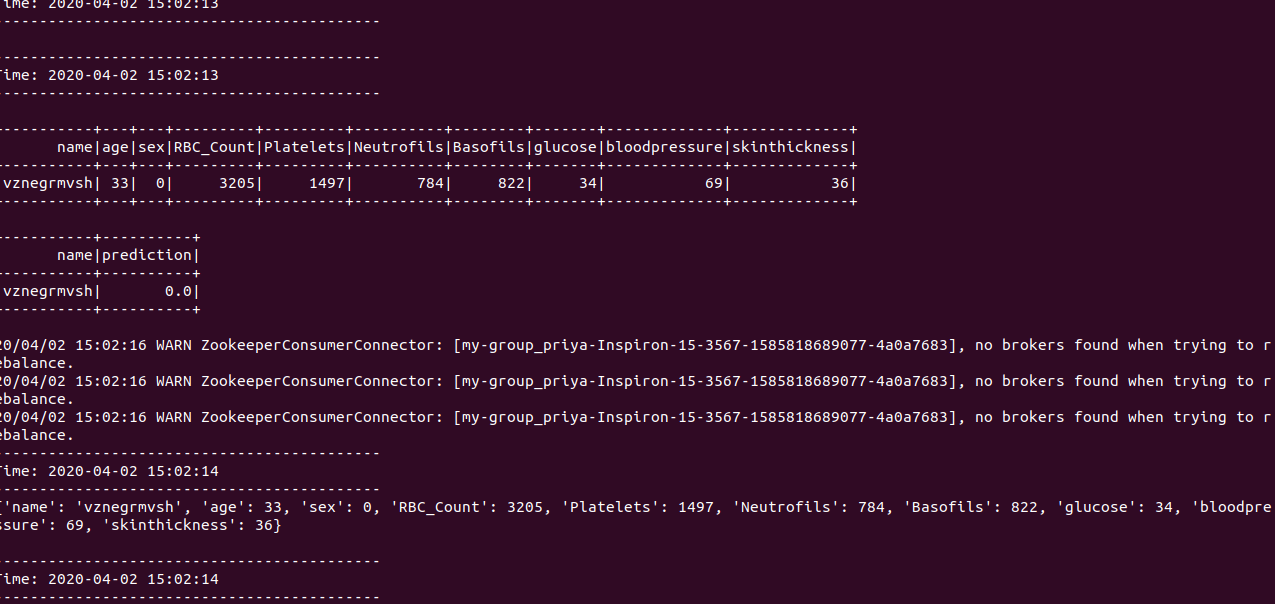

- Run python spark.py

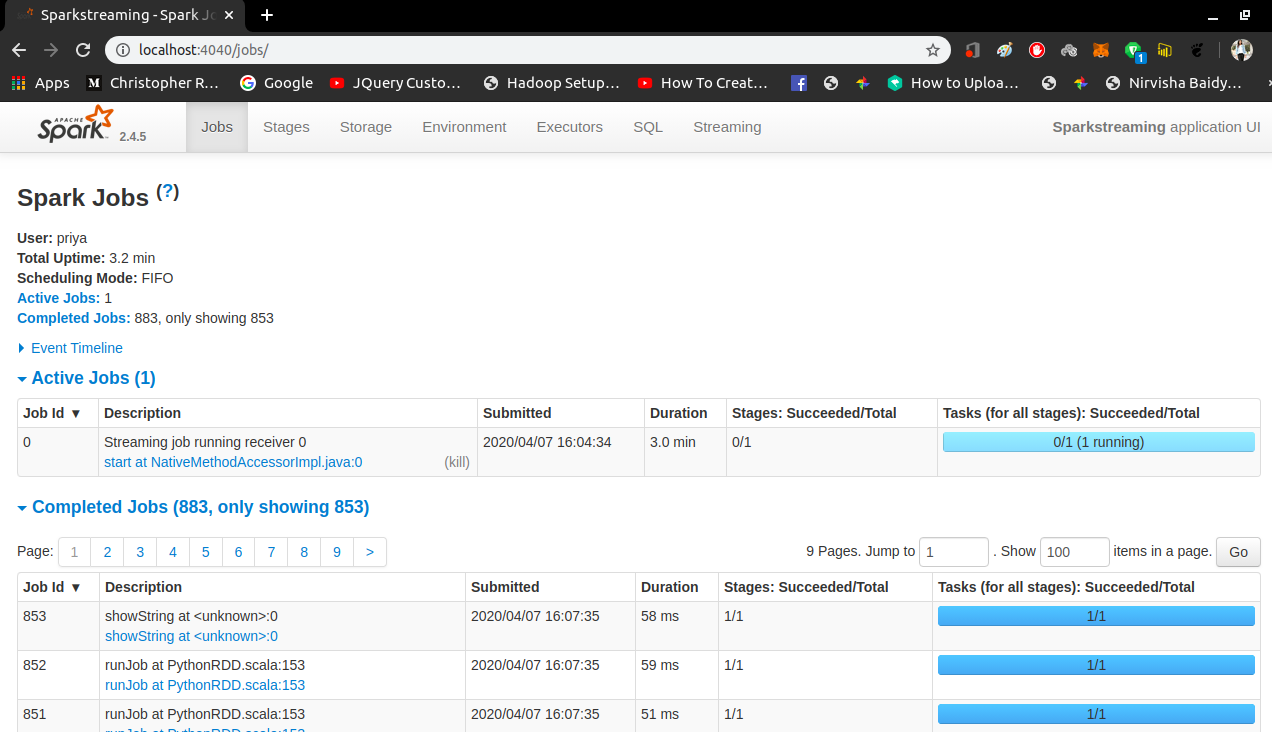

SPARK UI
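spark.py itself is not reproduced in this README. The sketch below shows one way a script like it could read the hospital topic with the Kafka 0-8 direct stream API from Spark 2.4.5, loading the local jar through PYSPARK_SUBMIT_ARGS so that plain python spark.py works offline. The app name, batch interval, jar location and JSON message format are all assumptions.

```python
import json
import os

# Assumed to sit in the working directory; adjust the path as needed.
JAR = "spark-streaming-kafka-0-8-assembly_2.11-2.4.5.jar"


def submit_args(jar_path=JAR):
    """Value for PYSPARK_SUBMIT_ARGS that adds the local Kafka jar."""
    return f"--jars {jar_path} pyspark-shell"


def parse_record(kv):
    """The direct stream yields (key, value) pairs; decode the value as JSON."""
    _key, value = kv
    return json.loads(value)


def run(topic="hospital", brokers="localhost:9092,localhost:9093,localhost:9094"):
    """Start a streaming job that prints each decoded batch."""
    os.environ.setdefault("PYSPARK_SUBMIT_ARGS", submit_args())
    # Imported after the variable is set, and only when run() is called.
    from pyspark import SparkContext
    from pyspark.streaming import StreamingContext
    from pyspark.streaming.kafka import KafkaUtils

    sc = SparkContext(appName="HospitalStream")
    ssc = StreamingContext(sc, 5)  # 5-second batches
    stream = KafkaUtils.createDirectStream(
        ssc, [topic], {"metadata.broker.list": brokers}
    )
    stream.map(parse_record).pprint()
    ssc.start()
    ssc.awaitTermination()
```

While `run()` is active, the Spark UI is normally served on port 4040 of the driver machine.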

-

First install cassandra driver using : pip3 install cassandra-driver

-

Open your terminal and type 'cqlsh'

-

now create a keyspace(i.e. database) using:'CREATE KEYSPACE diabetesdb WITH replication = {'class':'SimpleStrategy', 'replication_factor' : 3};'

-

Then type : 'USE diabetesdb;'

-

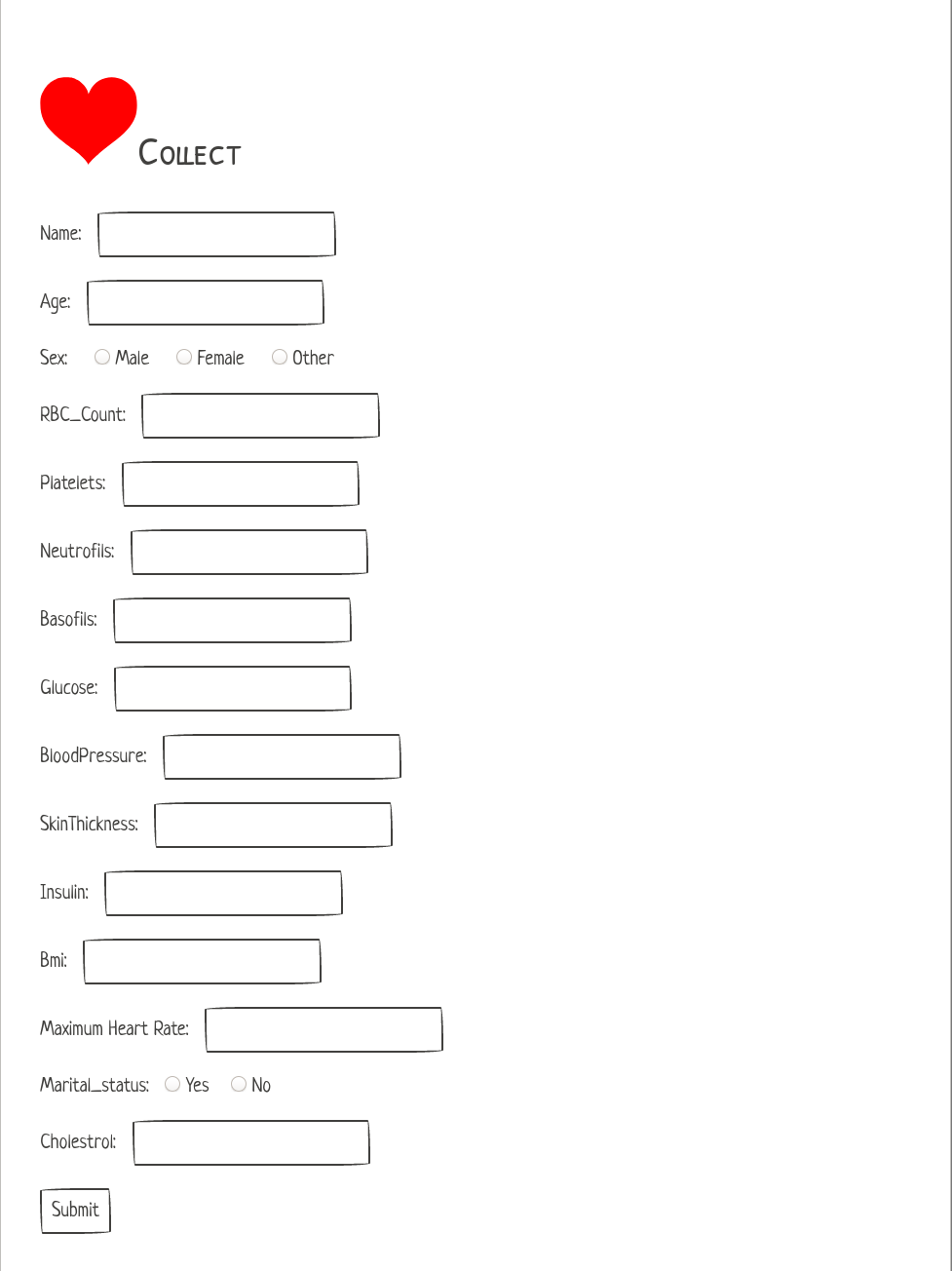

Then type: 'CREATE TABLE diabetesb( name text PRIMARY KEY, age int, sex int, basofils int, and so on (jati ota dataframe ma columns xa) );'

-

To check: Select * from diabetesb;
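Because the table needs one column per dataframe column, it can be easier to build the CQL from a mapping than to type it by hand. The sketch below does that and shows how a row could be written with cassandra-driver. The helper names, the single local node at 127.0.0.1 and the example column types are assumptions.

```python
def create_table_cql(table, columns, primary_key):
    """Build a CREATE TABLE statement from a {column: cql_type} mapping."""
    if primary_key not in columns:
        raise ValueError(f"primary key {primary_key!r} is not a column")
    parts = []
    for name, cql_type in columns.items():
        suffix = " PRIMARY KEY" if name == primary_key else ""
        parts.append(f"{name} {cql_type}{suffix}")
    return f"CREATE TABLE IF NOT EXISTS {table} ({', '.join(parts)});"


def insert_cql(table, columns):
    """Build a parameterised INSERT using the driver's %s placeholders."""
    names = ", ".join(columns)
    marks = ", ".join(["%s"] * len(columns))
    return f"INSERT INTO {table} ({names}) VALUES ({marks})"


def save_row(row, table="diabetesb", keyspace="diabetesdb"):
    """Insert one {column: value} row into the table."""
    # Imported here so the helpers above work without cassandra-driver.
    from cassandra.cluster import Cluster

    cluster = Cluster(["127.0.0.1"])  # assumed single local node
    session = cluster.connect(keyspace)
    try:
        session.execute(insert_cql(table, list(row)), tuple(row.values()))
    finally:
        cluster.shutdown()
```

For instance, `create_table_cql("diabetesb", {"name": "text", "age": "int"}, "name")` produces a statement you can paste into cqlsh, and `save_row({"name": "a", "age": 50})` writes one row once the table exists.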