THIS DOCUMENT IS A WORK-IN-PROGRESS

This project concerns how we may design environments in order to facilitate the training of deep reinforcement learning based autonomous driving agents. The goal of the project is to provide a working deep reinforcement learning framework that can learn to drive in visually complex environments, with a focus on providing a solution that:

- Works out-of-the-box.

- Learns in a short time to make it easier to quickly iterate on and test hypotheses.

- Provides tailored metrics to compare agents between runs.

We have used the urban driving simulator CARLA (version 0.9.5) as our environment.

Find a detailed project write-up here.

Video of results:

Use the timestamps in the description to navigate to the experiments that interest you.

TODO- We provide two gym-like environments for CARLA*:

- Lap environment: This environment is focused on training an agent to follow a predetermined lap (see CarlaEnv/carla_lap_env.py)

- Route environment: This environment is focused on training agents that can navigate from point A to point B (see CarlaEnv/carla_route_env.py). TODO: Lap env figure

- We provide analysis of optimal PPO parameters, environment designs, reward functions, etc. with the aim of finding the optimal setup to train reinforcement learning based autonomous driving agents (see Chapter 4 of the project write-up for further details.)

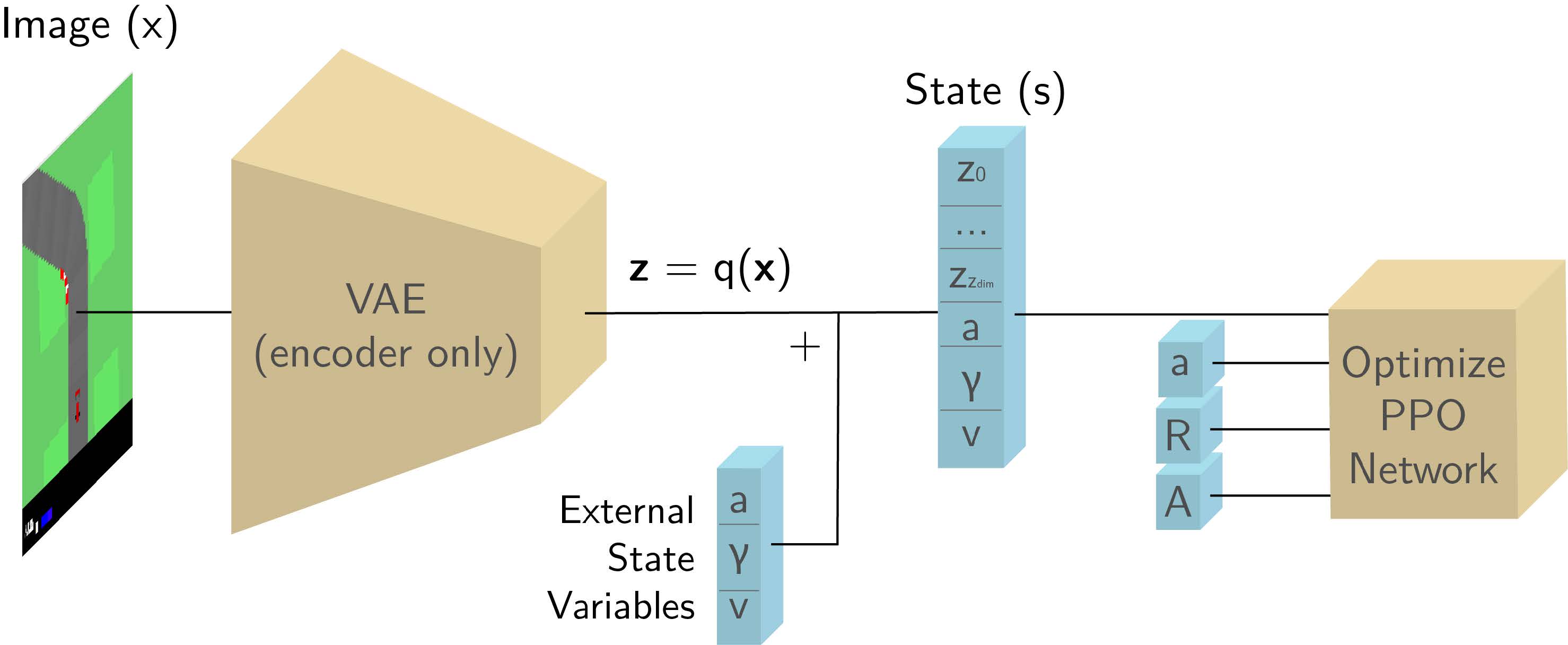

- We have shown that how we train and use a VAE can be consequential to the performance of a deep reinforcement learning agent, and we have found that major improvements can be made by training the VAE to reconstruct semantic segmentation maps instead of reconstructing the RGB input itself.

- We have devised a model that can reliably solve the lap environment in ~8 hours on average on an Nvidia GTX 970.

- We have provided an example of how sub-policies can be used to navigate with PPO, and we found it to have moderate success in the route environment (TODO add code for this).

* While there are existing examples of gym-like environments for CARLA, there is no implementation that is officially endorsed by CARLA. Furthermore, most of the third-party environments do not provide an example of an agent that works out-of-the-box.

- [Learning to Drive in a Day](https://arxiv.org/abs/1807.00412) by Kendall et al. This paper by researchers at Wayve describes a method that showed how state representation learning through a variational autoencoder can be used to train a car to follow a straight country road in approximately 15 minutes.

- [Learning to Drive Smoothly in Minutes](https://towardsdatascience.com/learning-to-drive-smoothly-in-minutes-450a7cdb35f4) by Raffin et al. This Medium article lays out the details of a method that was able to train an agent in a Donkey Car simulator in only 5 minutes, using an approach similar to that of Kendall et al. They further provide some solutions to the unstable steering we may observe when we train with the straightforward speed-as-reward formulation of Kendall et al.

- [End-to-end Driving via Conditional Imitation Learning](https://arxiv.org/abs/1710.02410) by Codevilla et al. This paper outlines an imitation learning model that is able to learn to navigate arbitrary routes by using multiple actor networks, conditioned on the maneuver the vehicle should currently perform. We have used a similar approach in our route environment agent.
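The conditional approach in the last item can be pictured as a dispatch over per-maneuver policies. The following is a minimal sketch under stated assumptions, not the code used in this repo: the maneuver names and the `select_action` helper are hypothetical, and the placeholder lambdas stand in for trained actor networks.

```python
from typing import Callable, Dict, List, Tuple

# An action is (steering, throttle); a policy maps a state vector to an action.
Action = Tuple[float, float]
Policy = Callable[[List[float]], Action]

# Hypothetical maneuver names; the lambdas are placeholders for trained actor networks.
sub_policies: Dict[str, Policy] = {
    "follow_lane": lambda state: (0.0, 0.5),
    "turn_left": lambda state: (-0.5, 0.3),
    "turn_right": lambda state: (0.5, 0.3),
}


def select_action(maneuver: str, state: List[float]) -> Action:
    """Run only the sub-policy that matches the current high-level maneuver."""
    if maneuver not in sub_policies:
        raise KeyError(f"No sub-policy for maneuver: {maneuver}")
    return sub_policies[maneuver](state)
```

In this picture, a route planner would supply the maneuver at each step, while every sub-policy receives the same state vector.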

This is a high-level overview of the method.

- Collect 10k 160x80x3 images by driving around manually.

- Train a VAE to reconstruct the images.

- Train an agent on the encoded state representations generated by the trained VAE, with a vector of measurements (steering, throttle, speed) appended. This combined vector is the input to the PPO-based agent.
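The last step can be sketched as a plain concatenation. This is an illustrative sketch only: the `build_state` helper, the 4-dimensional latent vector, and the example values are assumptions, not taken from the repo.

```python
from typing import List, Sequence


def build_state(z: Sequence[float], steering: float, throttle: float, speed: float) -> List[float]:
    """Concatenate the VAE latent vector with the measurement vector."""
    return list(z) + [steering, throttle, speed]


# Example with a hypothetical 4-dimensional latent vector.
z = [0.1, -0.3, 0.7, 0.0]
state = build_state(z, steering=0.05, throttle=0.6, speed=18.0)
print(len(state))  # latent size + 3 measurements
```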

- Python 3.6

- CARLA 0.9.5 (may also work with later versions)

  - Our code expects the CARLA Python API to be installed and available through `import carla` (see this).

  - We also recommend building an editorless version of CARLA by running the `make package` command in the root directory of CARLA.

  - Note that the map we use, `Town07`, may not be included by default when running `make package`. Add `+MapsToCook=(FilePath="/Game/Carla/Maps/Town07")` to `Unreal/CarlaUE4/Config/DefaultGame.ini` before running `make package` to solve this.

- TensorFlow for GPU (we have used version 1.13, may work with later versions)

- OpenAI gym (we used version 0.12.0)

- OpenCV for Python (we used version 4.0.0)

- A GPU with at least 4 GB VRAM (we used a GeForce GTX 970)

With the project, we provide a pretrained PPO agent for the lap environment.

The checkpoint file for this model is located in the models folder.

The easiest way to run this model is to first set an environment variable named `${CARLA_ROOT}` that points to the top-level directory of your CARLA installation.

Afterwards, we can simply call:

python run_eval.py --model_name pretrained_agent -start_carla

CARLA should then start automatically, with our agent driving. This particular agent should be able to drive about 850m along the designated lap (Figure TODO).

Note that our environment has only been designed to work with Town07, since this is the map that most closely resembles the environments of Kendall et al. and Raffin et al. (see TODO for more information on environment design).

Set `${CARLA_ROOT}` as described in Running a Trained Agent.

Then use the following command to train a new agent:

python train.py --model_name name_of_your_model -start_carla

This will start training an agent with the default parameters, and checkpoint and log files will be written to `models/name_of_your_model`. Recordings of the evaluation episodes will also be written to `models/name_of_your_model/videos` by default, making it easier to evaluate an agent's behaviour over time.

To view the training progress of an agent, and to compare trained agents in TensorBoard, use the following command:

tensorboard --logdir models/

If you wish to collect data to train the variational autoencoder yourself, you may use the following command:

python CarlaEnv/collect_data.py --output_dir vae/my_data -start_carla

Press SPACE to begin recording frames. 10K images will be saved by default.

After you have collected data to train the VAE with, use the following command to train the VAE:

cd vae

python train_vae.py --model_name my_trained_vae --dataset my_data

To view the training progress and to compare trained VAEs in TensorBoard, use the following command:

cd vae

tensorboard --logdir models/

Once we have a trained VAE, we can use the following command to inspect how its reconstructions look:

cd vae

python inspect_vae.py --model_dir models/my_trained_vae

Use the Set z by image button to seed your VAE with the latent z that is generated when the selected image is passed through the encoder. This is useful for comparing VAE reconstructions across models, as there is no guarantee that the features of the input will be encoded in the same indices of z.

To see how a trained agent responds to changes in the latent space vector z, run:

python inspect_agent.py --model_name name_of_your_model

| File | Description |
| --- | --- |
| train.py | Script for training a PPO agent in the lap environment |
| run_eval.py | Script for running a trained model in eval mode |
| utils.py | Various mathematical, TensorFlow, and DRL utility functions |
| ppo.py | Script that defines the PPO model |
| common.py | File containing all reward formulations and other functions directly related to reward functions and state spaces. |
| inspect_agent.py | Script that can be used to inspect the behaviour of the agent as the VAE's latent space vector z is annealed. |
| vae/ | This folder contains all variational autoencoder related code. |
| vae/train_vae.py | Script that trains a variational autoencoder. |
| vae/models.py | Python file containing code for constructing MLP and CNN-based VAE models. |
| vae/inspect_vae.py | Script used to inspect how latent space vector z affects the reconstructions of a trained VAE. |
| vae/data/ | Folder containing the data (images) that were used when training the VAE model that is bundled with the repo. |
| vae/models/ | Folder containing trained models and TensorBoard logs. |
| doc/ | Folder containing figures that are used in this readme, in addition to a PDF version of the accompanying thesis for this repo. |
| CarlaEnv/ | Folder containing code that is related to the CARLA environments. |
| CarlaEnv/carla_lap_env.py | File containing code for the CarlaLapEnv class |
| CarlaEnv/carla_route_env.py | File containing code for the CarlaRouteEnv class |
| CarlaEnv/collect_data.py | TODO Move to root? Script that is used to manually drive a car in the environment to collect images that can be used to train a VAE. |
| CarlaEnv/hud.py | Code for the HUD that is displayed on the left-hand side of the spectating window. |
| CarlaEnv/keyboard_control.py | TODO wasn't this file removed? |
| CarlaEnv/planner.py | Global route planner used to find routes from A to B. TODO insert link to source |
| CarlaEnv/wrappers.py | File containing wrapper classes for several CARLA classes. |
| CarlaEnv/agents/ | Contains code that is used by the route planner (TODO verify that these files are necessary). TODO insert link to source |
| models/ | Folder containing trained model weights and TensorBoard log files |

Here we have summarized the main findings and reasoning behind various design decisions.

TODO insert reward figure

TODO insert reward functions

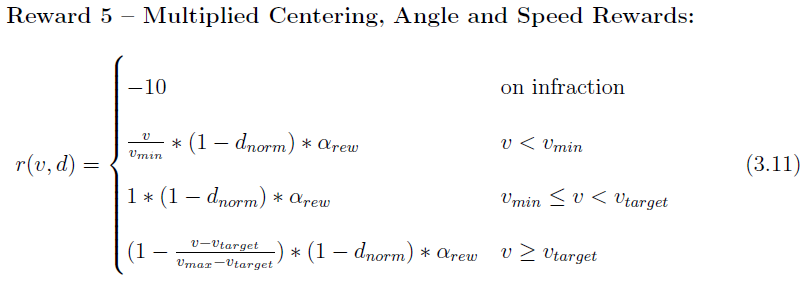

In our experiments, we found that reward 5 gave the best results in terms of producing agents that drive in the center of the lane at a constant target speed of 20 km/h.

The idea behind this reward function is that...

One thing that we quickly observed when we tried to train an agent in the lap environment was that the agent's learning stagnated after approximately 3 hours. Beyond the 3-hour mark, the agent would spend about 2 minutes driving a well-known path until it hit a particularly difficult turn and immediately failed.

In order to overcome this obstacle, the agent needs to either (1) repeatedly attempt this stretch of road and, with the help of lucky values sampled from exploration noise, sample actions that lead to better rewards, or (2) experience similar stretches of road to eventually generalize to this road as well.

Since the agent did not learn even after 20 hours, we concluded that repeating the same stretch of the lap does not help the agent generalize (2). This meant that the only way the agent would overcome the obstacle was by getting lucky (1).

In order to expedite this process, we have introduced checkpoints in the training phase of the agent. The checkpoints work by simply keeping track of the agent's progress, saving the center lane waypoint every 50m of the track, and resetting the agent to the latest saved checkpoint when the environment is reset.
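The checkpoint mechanism described above can be sketched roughly as follows. This is a simplified illustration and not the environment's actual implementation: the `CheckpointTracker` class, its method names, and the 2D waypoint representation are assumptions made for the example.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x, y) of a center-lane waypoint


class CheckpointTracker:
    """Remembers the latest checkpoint reached along the lap."""

    def __init__(self, start: Waypoint, spacing_m: float = 50.0):
        self.spacing_m = spacing_m
        self.checkpoints: List[Waypoint] = [start]

    def update(self, distance_m: float, center_waypoint: Waypoint) -> None:
        """Save a new checkpoint each time another `spacing_m` of track is covered."""
        if distance_m >= len(self.checkpoints) * self.spacing_m:
            self.checkpoints.append(center_waypoint)

    def reset_position(self) -> Waypoint:
        """Spawn point to use when the environment is reset."""
        return self.checkpoints[-1]


tracker = CheckpointTracker(start=(0.0, 0.0))
tracker.update(distance_m=30.0, center_waypoint=(30.0, 0.0))  # below 50 m, nothing saved
tracker.update(distance_m=52.0, center_waypoint=(52.0, 1.0))  # passes 50 m, saved
print(tracker.reset_position())  # (52.0, 1.0)
```

With this bookkeeping, a failure at a difficult turn sends the agent back at most one checkpoint spacing, so it can retry that stretch many times per hour instead of re-driving the whole lap.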

We found great improvements when we trained the VAE to reconstruct the semantic segmentation maps rather than the RGB input itself. The improvements suggest that more informative state representations are important in accelerating the agent's learning, and that a semantic encoding of the environment is more useful to the agent for this particular task.

As a result, training on semantic maps is enabled by default when calling `train_vae.py` (disable by setting `--use_segmentations False`).
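The difference between the two training modes comes down to which image the decoder output is compared against. The sketch below illustrates this with a toy loss; the choice of binary cross-entropy plus KL divergence, the `vae_loss` helper, and all values are assumptions for illustration and may differ from what `train_vae.py` actually does.

```python
import math
from typing import Sequence


def vae_loss(recon: Sequence[float], target: Sequence[float],
             mean: Sequence[float], logvar: Sequence[float]) -> float:
    """Binary cross-entropy reconstruction term plus KL divergence to N(0, I)."""
    eps = 1e-7  # avoids log(0)
    bce = -sum(t * math.log(r + eps) + (1.0 - t) * math.log(1.0 - r + eps)
               for r, t in zip(recon, target))
    kl = -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mean, logvar))
    return bce + kl


# The encoder sees the RGB frame in both modes; only the reconstruction target changes.
rgb_pixels = [0.2, 0.8, 0.5]           # flattened RGB input (toy values)
segmentation_pixels = [0.0, 1.0, 1.0]  # flattened segmentation map (toy values)
decoder_output = [0.1, 0.9, 0.7]       # decoder output for the encoded RGB frame
mean, logvar = [0.0, 0.0], [0.0, 0.0]  # encoder outputs for a 2-dimensional latent

loss_rgb = vae_loss(decoder_output, rgb_pixels, mean, logvar)           # RGB target
loss_seg = vae_loss(decoder_output, segmentation_pixels, mean, logvar)  # segmentation target
```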

TODO

TODO

Explore different state representations, particularly models that take into account temporal aspects of driving.

Citation will be provided as soon as the write-up is officially published (Expected mid-August.)

TODO: Paste in gramarly