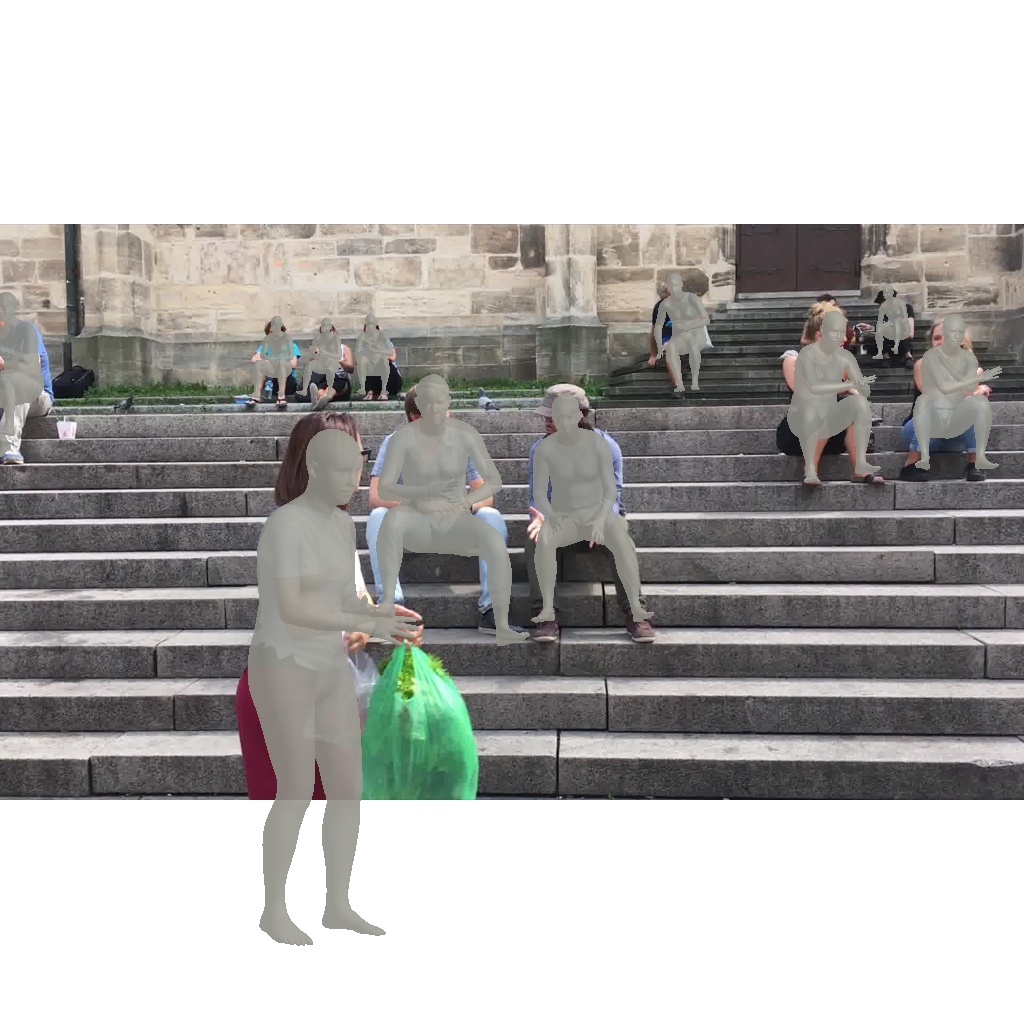

CenterHMR is a bottom-up, single-shot network for real-time multi-person 3D mesh recovery from a single image. It was the runner-up in the ECCV 2020 3DPW Challenge. Please refer to the arXiv paper for details.

2020/11/26: Uploaded a new version that adds optimization for person-person occlusion, plus small changes for video support.

2020/9/11: Real-time webcam demo using local/remote server. Please refer to config_guide.md for details.

2020/9/4: Google Colab demo. Predicted results are saved to one npy file per image; please refer to config_guide.md for details.
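As a rough illustration of reading one of these per-image npy files back, the sketch below uses NumPy. The helper name `load_results` is hypothetical, and the contents of the file (a dict or a plain array) are an assumption; config_guide.md remains the reference for what is actually stored.

```python
import numpy as np


def load_results(npy_path):
    """Load one saved result file and unwrap it if it holds a single object."""
    # allow_pickle=True is needed when the file stores a Python object such as a dict.
    data = np.load(npy_path, allow_pickle=True)
    # np.save stores a dict as a 0-d object array; .item() returns the dict itself.
    if data.dtype == object and data.shape == ():
        return data.item()
    return data
```

Only load npy files you produced yourself with `allow_pickle=True`, since unpickling untrusted files can execute arbitrary code.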

Before installation, you can take a few minutes to try the prepared Google Colab demo.

It allows you to run the project in the cloud, free of charge.

Directly download the full released package CenterHMR.zip from GitHub (latest version: v0.1).

Clone the repo:

git clone https://github.com/Arthur151/CenterHMR --depth 1

Then download the CenterHMR data from the GitHub release, Google Drive, or Baidu Drive (password: 6hye).

Unzip the downloaded CenterHMR_data.zip under the root CenterHMR/.

cd CenterHMR/

unzip CenterHMR_data.zip

The layout should then be

CenterHMR

- demo

- models

- src

- trained_models

Please install PyTorch 1.6 from the official website. We have tested the code only with PyTorch 1.6, on Ubuntu and CentOS.
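A quick way to confirm that the data archive was unzipped in the right place is to check for the four folders listed in the layout. The sketch below is only an illustration; the helper name `missing_dirs` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Top-level folders named in the layout of this README.
EXPECTED_DIRS = ("demo", "models", "src", "trained_models")


def missing_dirs(repo_root):
    """Return the expected sub-folders that are absent under repo_root."""
    root = Path(repo_root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]
```

Calling `missing_dirs("CenterHMR")` from the parent directory returns an empty list when all four folders are present.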

Install packages:

cd CenterHMR/src

sh scripts/setup.sh

Please refer to bug.md for known bugs. Feel free to open an issue for related bugs.

Currently, the released code is used to reproduce the demo results. Only 1-2 GB of GPU memory is needed.

To do this you just need to run

cd CenterHMR/src

sh run.sh

# if the shell script fails, consider running the following command instead:

CUDA_VISIBLE_DEVICES=0 python core/test.py --gpu=0 --configs_yml=configs/basic_test.yml

Results will be saved in CenterHMR/demo/images_results.

You can also run the code on arbitrary internet images by putting them under CenterHMR/demo/images before running sh run.sh.

Alternatively, please refer to config_guide.md for detailed configuration.

Please refer to config_guide.md for how to save the estimated meshes, center maps, and parameter dicts.
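Placing your own images under CenterHMR/demo/images can also be scripted. The sketch below copies image files from another folder into the demo folder; the helper name `stage_images` and the list of accepted extensions are assumptions made for illustration, not something the project defines.

```python
import shutil
from pathlib import Path

# File extensions treated as images here; an assumption, not taken from the project.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def stage_images(source_dir, demo_dir="CenterHMR/demo/images"):
    """Copy image files from source_dir into demo_dir and return the copied paths."""
    target = Path(demo_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(Path(source_dir).iterdir()):
        # Skip sub-folders and files whose extension is not in the image list.
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            copied.append(Path(shutil.copy2(path, target / path.name)))
    return copied
```

After staging the images, run sh run.sh from CenterHMR/src as described above.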

You can also run the code on arbitrary internet videos.

To do this, first change input_video_path in src/configs/basic_test_video.yml to /path/to/your/video. For example, set

video_or_frame: True

input_video_path: '../demo/sample_video.mp4' # None

then run

cd CenterHMR/src

CUDA_VISIBLE_DEVICES=0 python core/test.py --gpu=0 --configs_yml=configs/basic_test_video.yml

Results will be displayed on your screen.
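If you switch between several videos, the two config lines shown above can be updated programmatically instead of by hand. The following is a minimal sketch that works on the config text line by line with the standard library only; the helper name `set_yaml_value` is hypothetical, and it assumes each key appears on its own `key: value` line. It discards any trailing comment on the line it rewrites.

```python
import re


def set_yaml_value(yaml_text, key, value):
    """Replace the value on the first `key:` line, keeping its indentation."""
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}[ \t]*:).*$", flags=re.MULTILINE)
    # A function replacement avoids backslash escapes in `value` being reinterpreted.
    new_text, count = pattern.subn(lambda m: f"{m.group(1)} {value}", yaml_text, count=1)
    if count == 0:
        raise KeyError(f"{key!r} not found in config text")
    return new_text
```

For example, read src/configs/basic_test_video.yml into a string, call `set_yaml_value(text, "input_video_path", "'/path/to/your/video'")`, and write the result back before running the command above.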

We also provide webcam demo code, which can run in real time on a 1070Ti GPU / remote server.

Currently, limited by the visualization pipeline, the webcam visualization code only supports a single-person mesh.

To do this you just need to run

cd CenterHMR/src

CUDA_VISIBLE_DEVICES=0 python core/test.py --gpu=0 --configs_yml=configs/basic_webcam.yml

# or set TEST_MODE=0 and WEBCAM_MODE=1 in run.sh, then run

sh run.sh

Press Up/Down to end the demo. Please refer to config_guide.md for setting the mesh color or camera id.

If you wish to run the webcam demo using a remote server, please refer to config_guide.md.

To test the FPS of CenterHMR on your device, please set configs/basic_test.yml as below

save_visualization_on_img: False

demo_image_folder: '../demo/videos/Messi_1'

then run

cd CenterHMR/src

CUDA_VISIBLE_DEVICES=0 python core/test.py --gpu=0 --configs_yml=configs/basic_test.yml

The code will be gradually open sourced according to the schedule:

- demo code for internet images / videos / webcam

- runtime optimization

- benchmark evaluation

- training

Please consider citing

@inproceedings{CenterHMR,

title = {CenterHMR: Multi-Person Center-based Human Mesh Recovery},

author = {Yu, Sun and Qian, Bao and Wu, Liu and Yili, Fu and Black, Michael J. and Tao, Mei},

booktitle = {arxiv:2008.12272},

month = {August},

year = {2020}

}

We thank Peng Cheng for his constructive comments on Center map training.

Here are some great resources we benefit from:

- The SMPL models and layer are borrowed from the MPII SMPL-X model.

- Webcam pipeline is borrowed from minimal-hand.

- Some functions are borrowed from HMR-pytorch.

- Some functions for data augmentation are borrowed from SPIN.

- Synthetic occlusion is borrowed from synthetic-occlusion.

- The evaluation code for the 3DPW dataset is taken from 3dpw-eval.

- For fair comparison, the GT annotations of the 3DPW dataset are taken from VIBE.