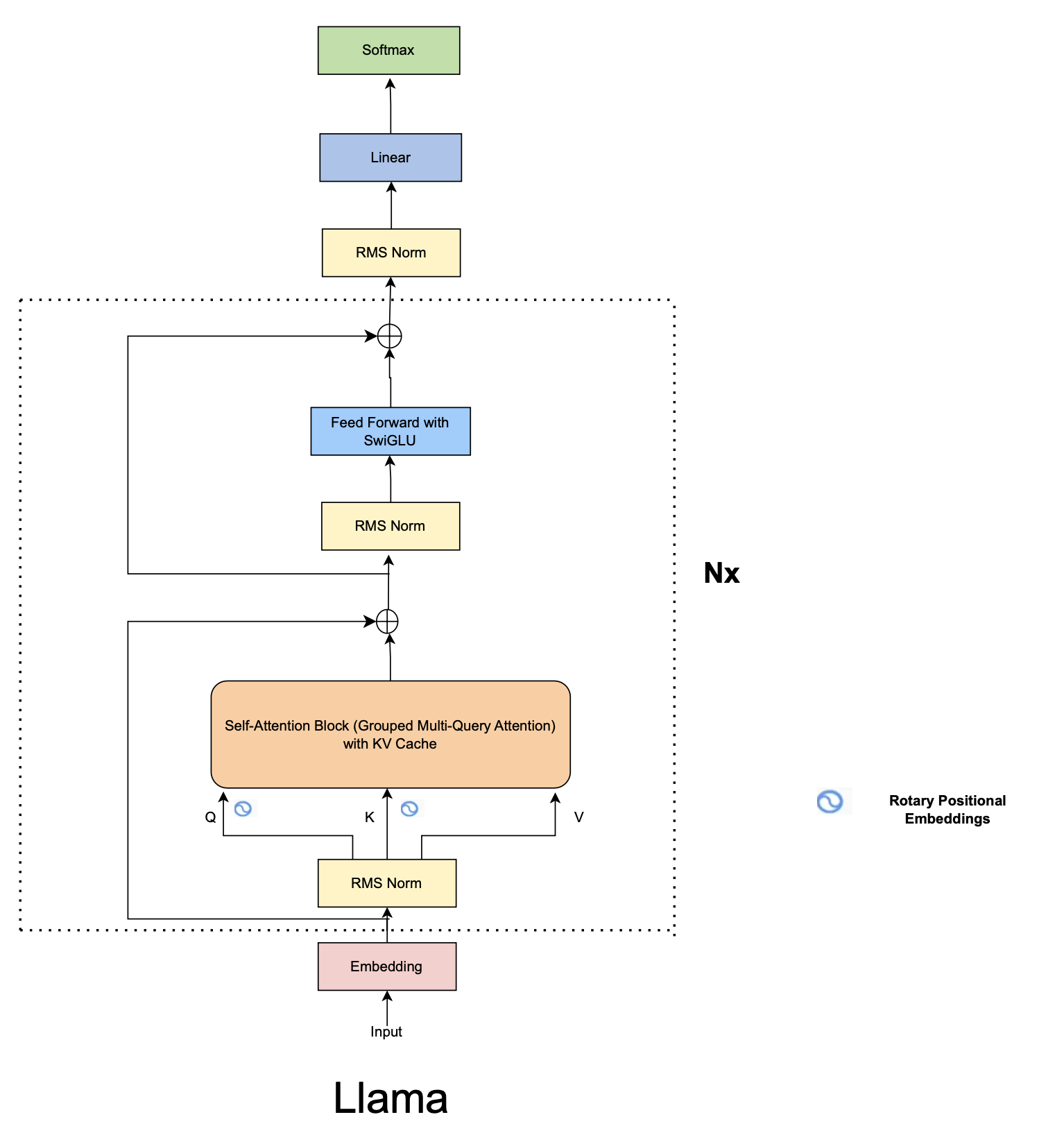

Exploring all the building blocks (the "Lego pieces") needed to build the Llama model, covering both the code and the concepts. Part of a course taken during my IIITH days.

- Architectural differences between the vanilla Transformer and Llama

- RMSNorm (review LayerNorm from the papers first)

- Rotary Positional Embeddings

- KV-Cache

- Multi-Query Attention

- Grouped-Query Attention

- SwiGLU Activation Function
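As a companion to the list above, here is a minimal sketch of three of these blocks written on plain Python lists so the math stays visible without a tensor library: RMSNorm, rotary positional embeddings (RoPE) and the SwiGLU feed-forward. It assumes a single token vector instead of batched tensors, `eps = 1e-6`, a RoPE base of 10000 with adjacent dimensions paired for rotation, and uses illustrative helper names (`rms_norm`, `apply_rope`, `swiglu_ffn`, `matvec`, `dot`) and weight names (`w1`, `w2`, `w3`) that are hypothetical and not taken from any particular codebase.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: one row per output feature


def rms_norm(x: Vector, weight: Vector, eps: float = 1e-6) -> Vector:
    """RMSNorm: divide by the root-mean-square of x, then apply a learned gain.
    Unlike LayerNorm there is no mean subtraction and no bias term."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for w, v in zip(weight, x)]


def apply_rope(x: Vector, position: int, base: float = 10000.0) -> Vector:
    """Rotary positional embedding for one query/key vector (assumed pairing:
    adjacent dims). Pair (x[2i], x[2i+1]) is rotated by position * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out = [0.0] * d
    for i in range(d // 2):
        angle = position * base ** (-2.0 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


def silu(v: float) -> float:
    """SiLU (Swish with beta = 1): v * sigmoid(v), written to avoid exp overflow."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)


def matvec(m: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product, standing in for a bias-free linear layer."""
    return [sum(w * v for w, v in zip(row, x)) for row in m]


def dot(u: Vector, v: Vector) -> float:
    """Dot product, used here as an (unscaled) attention score q . k."""
    return sum(a * b for a, b in zip(u, v))


def swiglu_ffn(x: Vector, w1: Matrix, w3: Matrix, w2: Matrix) -> Vector:
    """SwiGLU feed-forward: w2( silu(w1 x) * (w3 x) ), an element-wise gated product."""
    gate = [silu(v) for v in matvec(w1, x)]
    up = matvec(w3, x)
    return matvec(w2, [g * u for g, u in zip(gate, up)])


if __name__ == "__main__":
    x = [1.0, 2.0, 3.0, 4.0]
    h = rms_norm(x, weight=[1.0] * len(x))
    print("RMSNorm:", [round(v, 4) for v in h])

    # RoPE: the score depends only on the relative offset (2 in both calls below).
    q, k = [0.5, -1.0, 2.0, 0.3], [1.5, 0.2, -0.7, 1.0]
    print("RoPE scores:", dot(apply_rope(q, 5), apply_rope(k, 3)),
          dot(apply_rope(q, 2), apply_rope(k, 0)))

    eye = [[1.0, 0.0], [0.0, 1.0]]
    print("SwiGLU:", swiglu_ffn([1.0, -1.0], eye, eye, eye))
```

Running it prints the normalized vector, two numerically equal attention scores (RoPE rotates q and k so that their dot product depends only on the distance between positions), and the SwiGLU output for identity weights. KV-cache, multi-query and grouped-query attention are not covered by this sketch.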

- Request access to download the model weights from Meta.

- Install the Llama CLI:

```shell
pip install llama-toolchain
```

- Choose the model to download and pass its ID in place of `CHOSEN_MODEL_ID`:

```shell
llama download --source meta --model-id CHOSEN_MODEL_ID
```

- For example, the model IDs are listed in the email under "Model weights available":
  - `Llama-2-7b` is the model ID for the 7-billion-parameter model.
  - `Llama-2-13b` is the model ID for the 13-billion-parameter model.

- When prompted for the signed URL, paste the one received in the email.