MADS-RAG: A Helpful Chatbot for Master’s of Applied Data Science Students at the University of Michigan

Team 28: Patrick Sollars (psollars@umich.edu), Aaron Newman (newmanar@umich.edu), and Tawfiq Zureiq (tawfiqz@umich.edu)

MADS-RAG is developed to enhance the remote learning experience for MADS students, providing quick and accurate answers to queries about course schedules, policies, and content. MADS-RAG leverages a Retrieval-Augmented Generation (RAG) pipeline, integrating the latest course and program information dynamically.

- Dynamic Content Retrieval: Pulls in the most relevant and up-to-date information from various sources like course syllabi, handbooks, and transcripts.

- Familiar Interface: Easy to use interface built with Streamlit for an intuitive user experience.

- No External APIs: Runs locally (with a powerful computer), ensuring data privacy and cost efficiency.

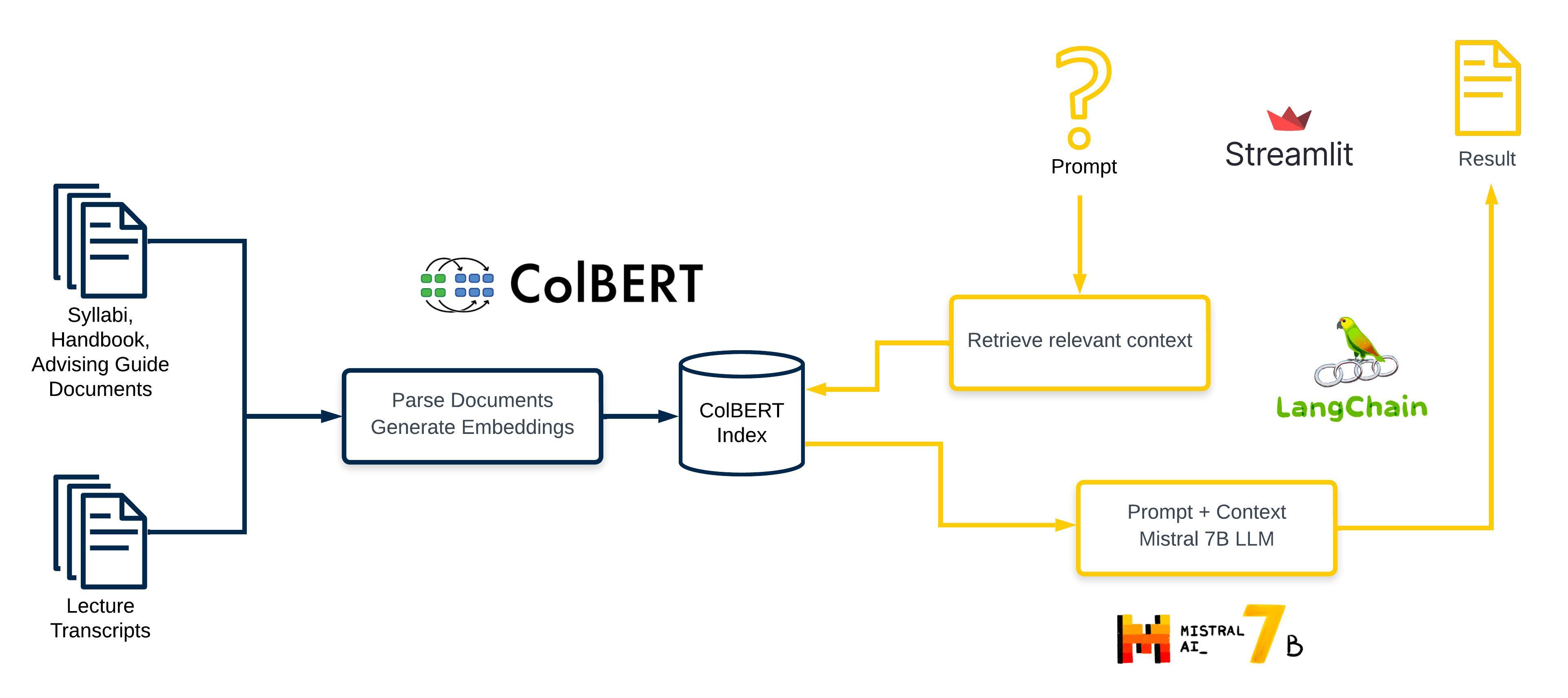

High-level architecture of the MADS-RAG chatbot
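The retrieve-then-generate flow in the diagram can be illustrated with a toy sketch. This is not the project's pipeline (the final chatbot uses ColBERT for retrieval and a quantized LLM for generation). The token-overlap scoring, the function names, and the placeholder passages below are all assumptions made up for illustration.

```python
from collections import Counter


def score(query: str, passage: str) -> int:
    """Count query tokens that also appear in the passage (toy relevance score)."""
    query_tokens = Counter(query.lower().split())
    passage_tokens = Counter(passage.lower().split())
    return sum((query_tokens & passage_tokens).values())


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages with the highest toy relevance score."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augment the user question with the retrieved passages."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Placeholder passages invented for this example, not real program data.
passages = [
    "course a covers data manipulation",
    "late assignments lose points each day",
    "office hours are held online each week",
]

question = "what is the policy for late assignments"
prompt = build_prompt(question, retrieve(question, passages, k=1))
print(prompt)
```

In a real pipeline the prompt would then be sent to the language model; only the retrieval and prompt-building steps are shown here.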


With all dependencies, models, and source data installed, the chatbot can be started by:

- Navigate to the chatbot directory:

cd 04_chatbot

- Start the chatbot client:

pipenv run streamlit run ./chatbot.py --server.port 8501

- If your machine can't handle it, you can use mock_chatbot.py, which uses cached responses with minimal resources (not the RAG LLM)

- Navigate to http://localhost:8501 where you can:

- Enter your prompt

- View the response generated from the MADS-RAG pipeline

- Interact with links in the response or source information

llama.cpp can be configured to run with hardware acceleration for your particular machine. See the documentation for more information on installation configuration for this package.

cd 04_chatbot

pipenv run python -m llama_cpp.server --config_file llama_cpp_config.json

# In a new shell...

pipenv run streamlit run ./chatbot_llama_cpp.py --server.port 8501

MADS-RAG chatbot interface using Streamlit
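For readers who have not used the llama_cpp.server config file before, here is a hedged sketch of what a file like llama_cpp_config.json might contain, generated from Python. The keys follow the layout described in the llama-cpp-python server documentation, but the repo's actual file may differ. The model path is a hypothetical placeholder, and the port is assumed to match the http://localhost:7999 address that chatbot_llama_cpp.py connects to.

```python
import json


def make_server_config(
    model_path: str,
    port: int = 7999,
    n_gpu_layers: int = 0,
    n_ctx: int = 2048,
) -> dict:
    """Build a config dict for the llama-cpp-python server (assumed layout)."""
    return {
        "host": "localhost",
        "port": port,
        "models": [
            {
                # Path to a local GGUF file; replace with your own download.
                "model": model_path,
                # 0 keeps every layer on the CPU; -1 offloads all layers.
                "n_gpu_layers": n_gpu_layers,
                # Context window size in tokens.
                "n_ctx": n_ctx,
            }
        ],
    }


# "models/your-model.gguf" is a placeholder, not a file in this repo.
config = make_server_config("models/your-model.gguf")
config_json = json.dumps(config, indent=2)
print(config_json)
```

Saving the printed JSON to a file and passing it with --config_file should start the server with those settings, assuming the installed llama-cpp-python version supports the same keys.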

This contains relevant code to load and split the documents. Sources were later pulled from the MADS program website and other public sources and were parsed using Marker. Some of these files were manually cleaned, so the steps weren't documented in this code. The output of all document collection is in the documents directory at the root of this repo.
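As a rough illustration of the splitting step, the sketch below cuts a document into fixed-size word windows with overlap. It is a generic technique, not necessarily the splitter used in these notebooks, and the function name and default sizes are assumptions.

```python
def split_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of chunk_size words, each sharing overlap words
    with the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once a chunk reaches the end, to avoid a redundant tail chunk.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(str(i) for i in range(10))
print(split_text(sample, chunk_size=4, overlap=2))
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of a larger index.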

Many notebooks were used here to test different retrieval strategies and models. Each notebook ends with a report or visualization of the performance of that particular configuration. The components selected for the final chatbot are highlighted in bold.

- Retrievers

  - ChromaDB (mmr, similarity_score, similarity_score_threshold)

  - TF/IDF

  - BM25

  - Ensemble (chroma, tfidf, bm25)

  - ColBERT

- Models

  - Llama2 (7B & 13B)

  - Mistral7B

  - Starling7B

- Evaluation Strategies

  - BERTScore

  - BLEU

  - ROUGE

  - METEOR

  - Ragas

  - Just using our eyes ¯\_(ツ)_/¯
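One common way to combine several retrievers into an ensemble is reciprocal rank fusion. The sketch below shows that general idea in plain Python. It is not taken from the notebooks, which may weight or combine results differently. The document ids and the constant k=60 are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge ranked lists of document ids into a single ranking.

    Each document earns 1 / (k + rank) from every list it appears in,
    so documents ranked highly by several retrievers rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical top-3 results from three retrievers.
chroma_hits = ["a", "b", "c"]
tfidf_hits = ["b", "a", "d"]
bm25_hits = ["b", "c", "a"]

fused = reciprocal_rank_fusion([chroma_hits, tfidf_hits, bm25_hits])
print(fused)
```

Rank-based fusion avoids having to normalize scores that live on different scales, such as cosine similarity and BM25.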

Document chunking analysis and topic modeling visualizations for the report.

Notebooks, scripts, and configuration relevant to the UI or “productionizing” the finished product. There are several ways to run the chatbot:

- chatbot.py: The standard chatbot with Streamlit UI

pipenv run streamlit run ./chatbot.py --server.port 8501

- chatbot_llama_cpp.py: Same Streamlit UI, but this connects to an instance of the LLM API running at http://localhost:7999

- mock_chatbot.py: Loops through a list of canned responses, used to develop and test the UI quickly

- semantic_routing_gradio_ui: This notebook provides an alternative UI and includes an optional implementation of the router

- Other Notebooks: These run the startup commands if you prefer not to use the shell. They also offer tunneling to your local instance if you'd like to (temporarily) share the app.

  - run_chatbot_localtunnel

  - run_chatbot_ngrok (requires NGROK_AUTH_TOKEN)
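To show what talking to the LLM API at http://localhost:7999 could look like, here is a minimal client sketch using only the standard library. It assumes the server exposes the OpenAI-compatible /v1/chat/completions route that llama_cpp.server provides. The actual client code in chatbot_llama_cpp.py may differ, and the function names here are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint: host and port from this README, route from the
# OpenAI-compatible API served by llama_cpp.server.
API_URL = "http://localhost:7999/v1/chat/completions"


def build_request(prompt: str, url: str = API_URL, max_tokens: int = 256) -> urllib.request.Request:
    """Create a POST request carrying a single-turn chat completion payload."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the running server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("When does the term start?")` would return the model's reply as a string. Without a running server the call raises a connection error.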

This is the primary retrieval index. Only "documents" are stored in this repo. See Data Access for details on proprietary transcript data in the capstone-protected repo.

Clone the colbertv2.0 model into the root of this project. This requires git lfs to be installed and configured on your machine.

These are the raw source files in plain text markdown format. They can be fed to our embedding notebooks to create document indexes.

This directory contains chunked documents and metadata after the initial loading with ChromaDB. Many evaluation notebooks will reference these cached embeddings, but the final chatbot uses ColBERT as the retriever.

Various quantized models were tested for this project. These are very large files that can be downloaded directly from Hugging Face.

- https://huggingface.co/TheBloke/Llama-2-7B-GGUF

- https://huggingface.co/TheBloke/Mistral-7B-Instruct-v0.2-GGUF

- https://huggingface.co/TheBloke/Starling-LM-7B-alpha-GGUF
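If you would rather script the download than use the browser, one option is the huggingface_hub package. The sketch below is an assumption about how that could be done, not something this repo ships: huggingface_hub may not be among the project dependencies, the models directory name is hypothetical, and you must supply the exact GGUF filename from the repository's file list yourself.

```python
from pathlib import Path
from urllib.parse import urlparse

# Repository URLs listed in this README.
MODEL_REPO_URLS = [
    "https://huggingface.co/TheBloke/Llama-2-7B-GGUF",
    "https://huggingface.co/TheBloke/Mistral-7B-Instruct-v0.2-GGUF",
    "https://huggingface.co/TheBloke/Starling-LM-7B-alpha-GGUF",
]


def repo_id_from_url(url: str) -> str:
    """Turn a Hugging Face repo URL into the 'owner/name' repo id."""
    return urlparse(url).path.strip("/")


def download_model(repo_url: str, filename: str, models_dir: str = "models") -> str:
    """Download one file from a model repo and return its local path.

    filename must be copied from the repo's file list; models_dir is a
    hypothetical target directory.
    """
    # Imported lazily so the helpers above work without the package installed.
    from huggingface_hub import hf_hub_download

    Path(models_dir).mkdir(parents=True, exist_ok=True)
    return hf_hub_download(
        repo_id=repo_id_from_url(repo_url),
        filename=filename,
        local_dir=models_dir,
    )


for url in MODEL_REPO_URLS:
    print(repo_id_from_url(url))
```

Each repository hosts several quantization levels, so pick the file that fits your machine's memory before calling download_model.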

This repo uses pipenv to manage dependencies. If you don't already have it installed you can do so with:

$ pip install --user pipenv

# -or-

$ brew install pipenv

Or, view the complete installation documentation. Then navigate to the repo and run these commands to install project dependencies.

# Recommended to keep the venv local to the repository

export PIPENV_VENV_IN_PROJECT=1

pipenv shell

pipenv install --dev

NOTE: You might also prefer to set PIPENV_VENV_IN_PROJECT=1 in your .env or .bashrc/.zshrc (or other shell configuration file) for creating the virtualenv inside your project’s directory.

Some notebooks require API keys to run. These should be stored in secrets.py, a .gitignored file in the root of this repo.

OPENAI_API_KEY = "your_secret_key" # only for evaluation

NGROK_AUTH_TOKEN = "your_secret_key"

All syllabus documents used in this project are publicly accessible through the Master of Applied Data Science Curriculum page.

Additional source documents are referenced below:

Proprietary transcripts from MADS course lectures were pulled from Coursera using coursera-dl. These exist in a private repo at the following paths. This project references data at these paths which will need to be manually copied from the private repo.

- colbert_index/colbert/indexes/combined

- colbert_index/colbert/indexes/transcripts

- documents/transcripts

- embeddings/transcripts.pickle
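After copying the data from the private repo, a quick check like the one below can confirm that nothing was missed. This is a convenience sketch, not a script included in the repo, and the function name is hypothetical. The paths are the ones listed above, relative to the repo root.

```python
from pathlib import Path

# Paths from this README that must be copied from the private repo.
REQUIRED_PATHS = [
    "colbert_index/colbert/indexes/combined",
    "colbert_index/colbert/indexes/transcripts",
    "documents/transcripts",
    "embeddings/transcripts.pickle",
]


def missing_paths(repo_root: str = ".") -> list[str]:
    """Return the required paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    if missing:
        print("Missing transcript data:")
        for path in missing:
            print(f"  {path}")
    else:
        print("All transcript data is in place.")
```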

Access to these transcripts is restricted to the Winter 2024 Capstone course students and instructors. If you would like access to this repo, please reach out to psollars@umich.edu with your request.

Quantized large language models were sourced from public Hugging Face repositories: