The official code implementation of "Late-Constraint Diffusion Guidance for Controllable Image Synthesis".

[Paper] / [Project] / [Model Weights] / [Demo]

- We have uploaded the training and testing code of LCDG. Pre-trained model weights and an interactive demo will be released later. Star the project to get notified!

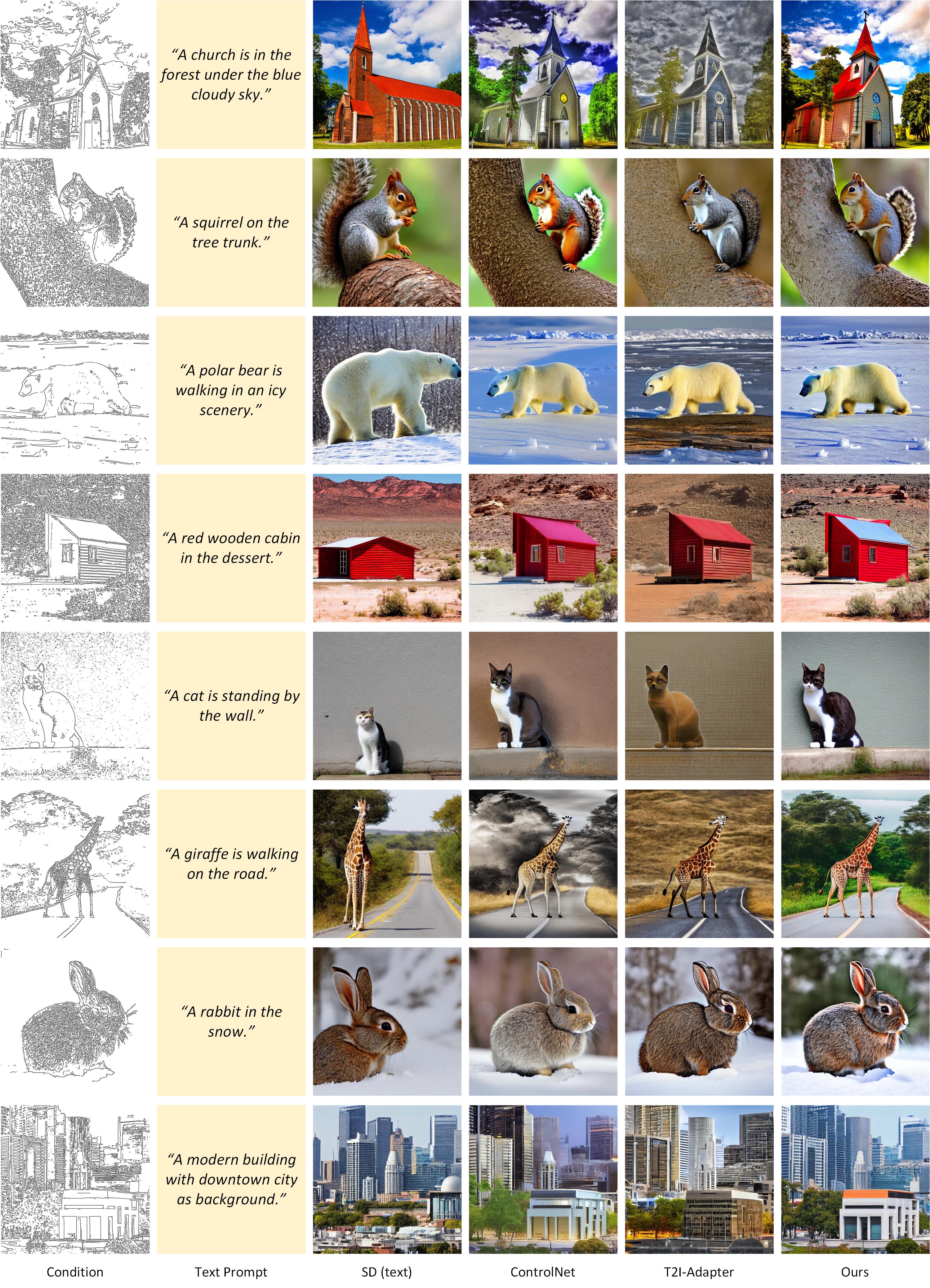

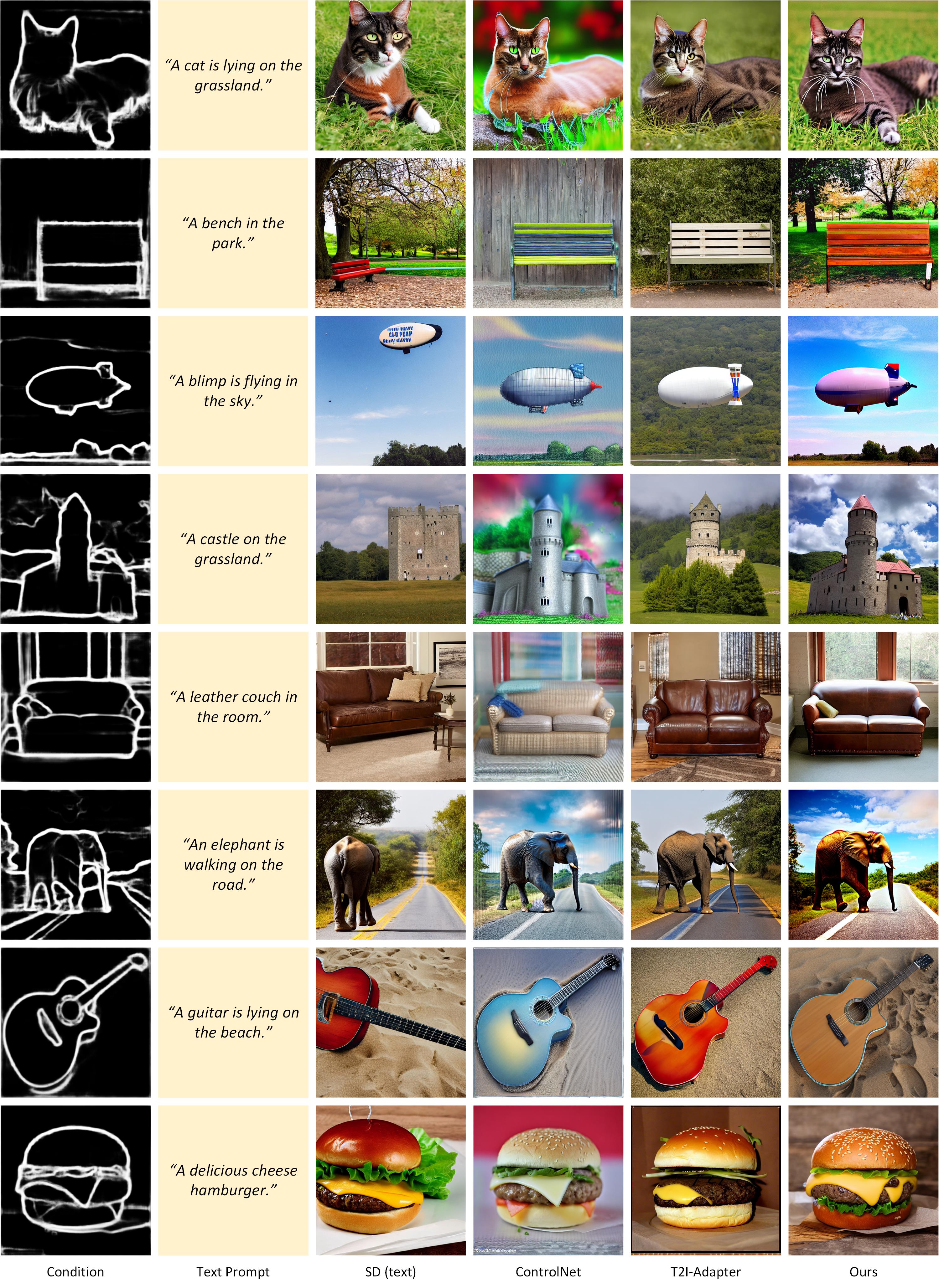

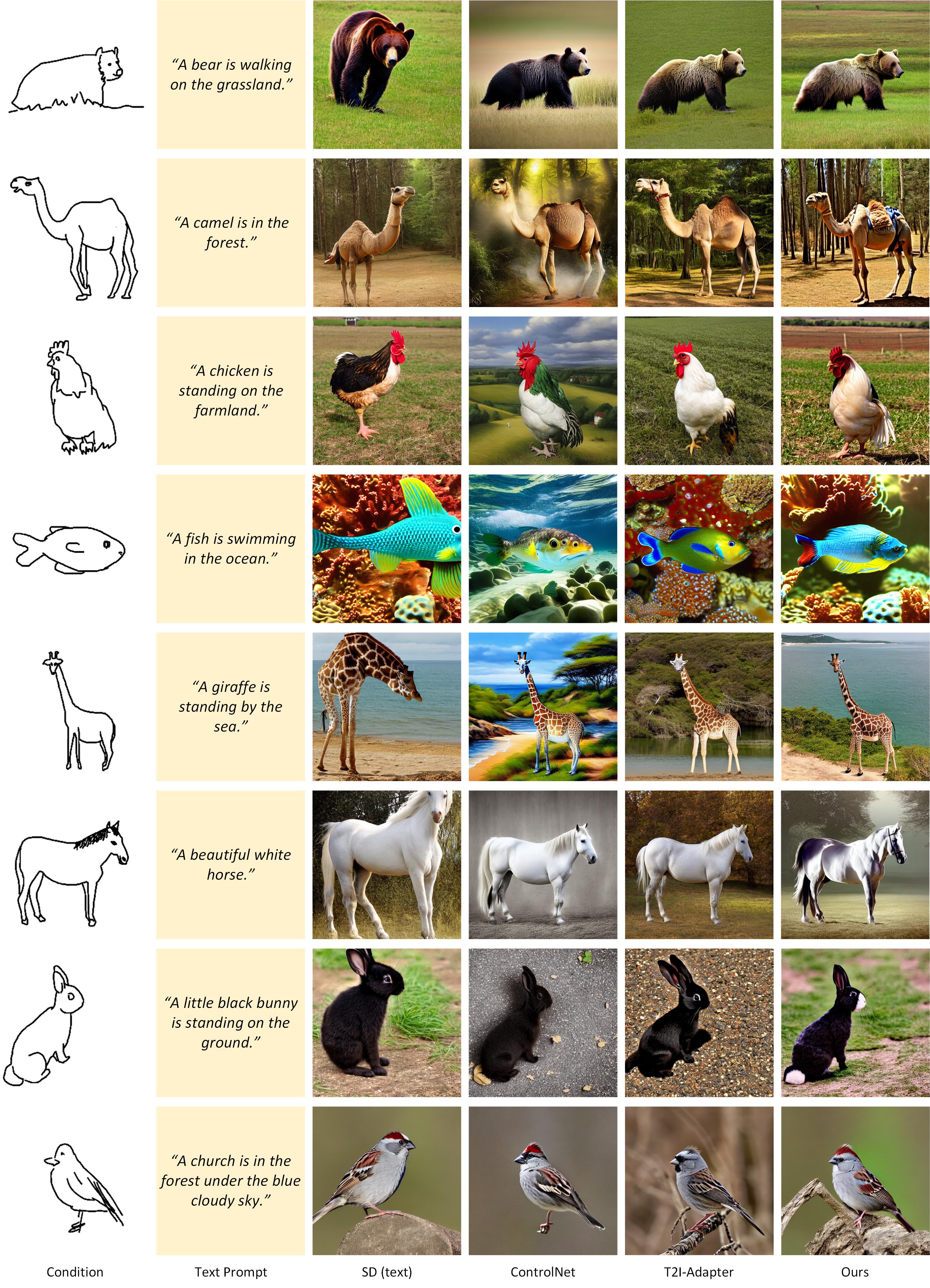

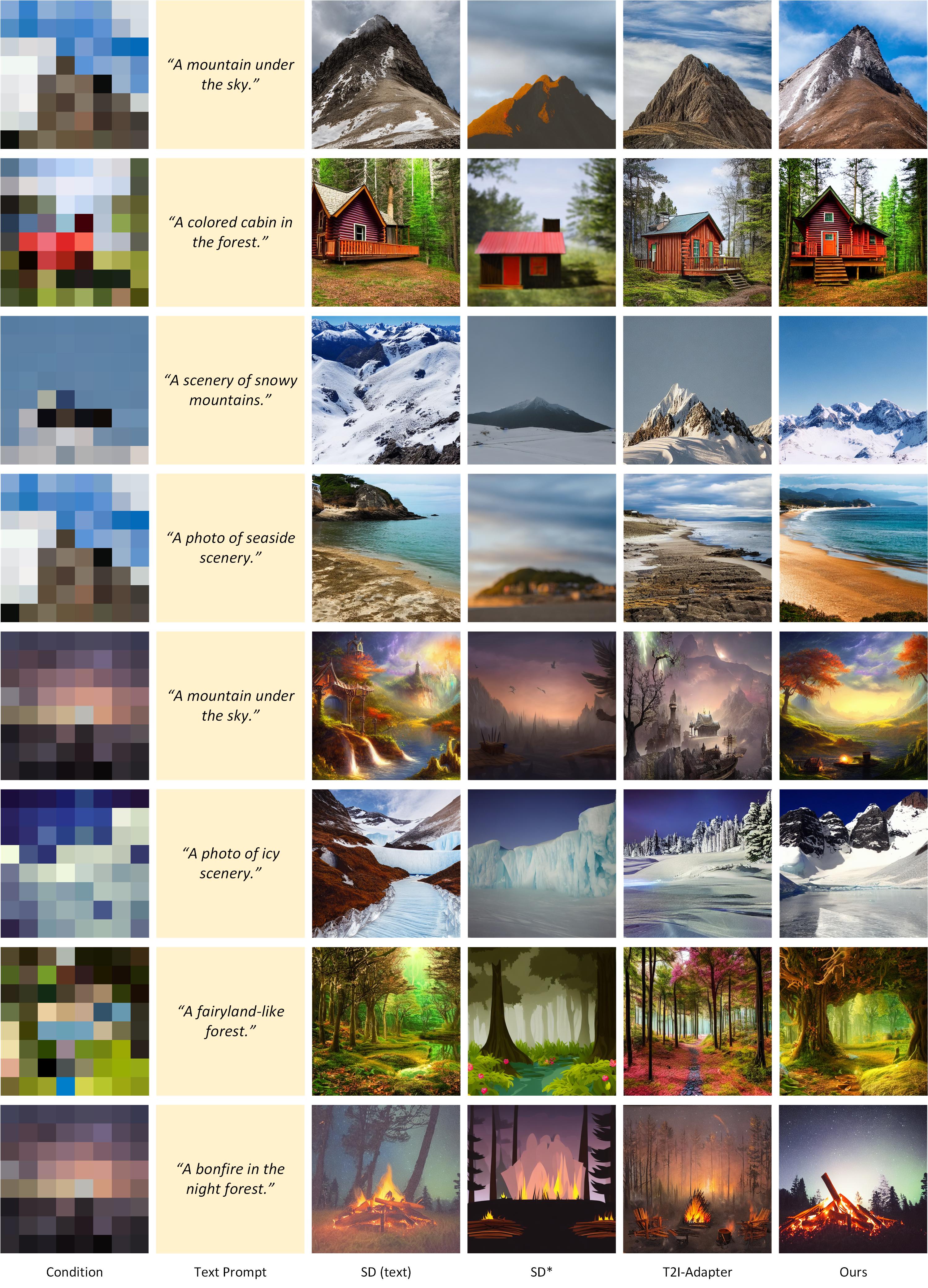

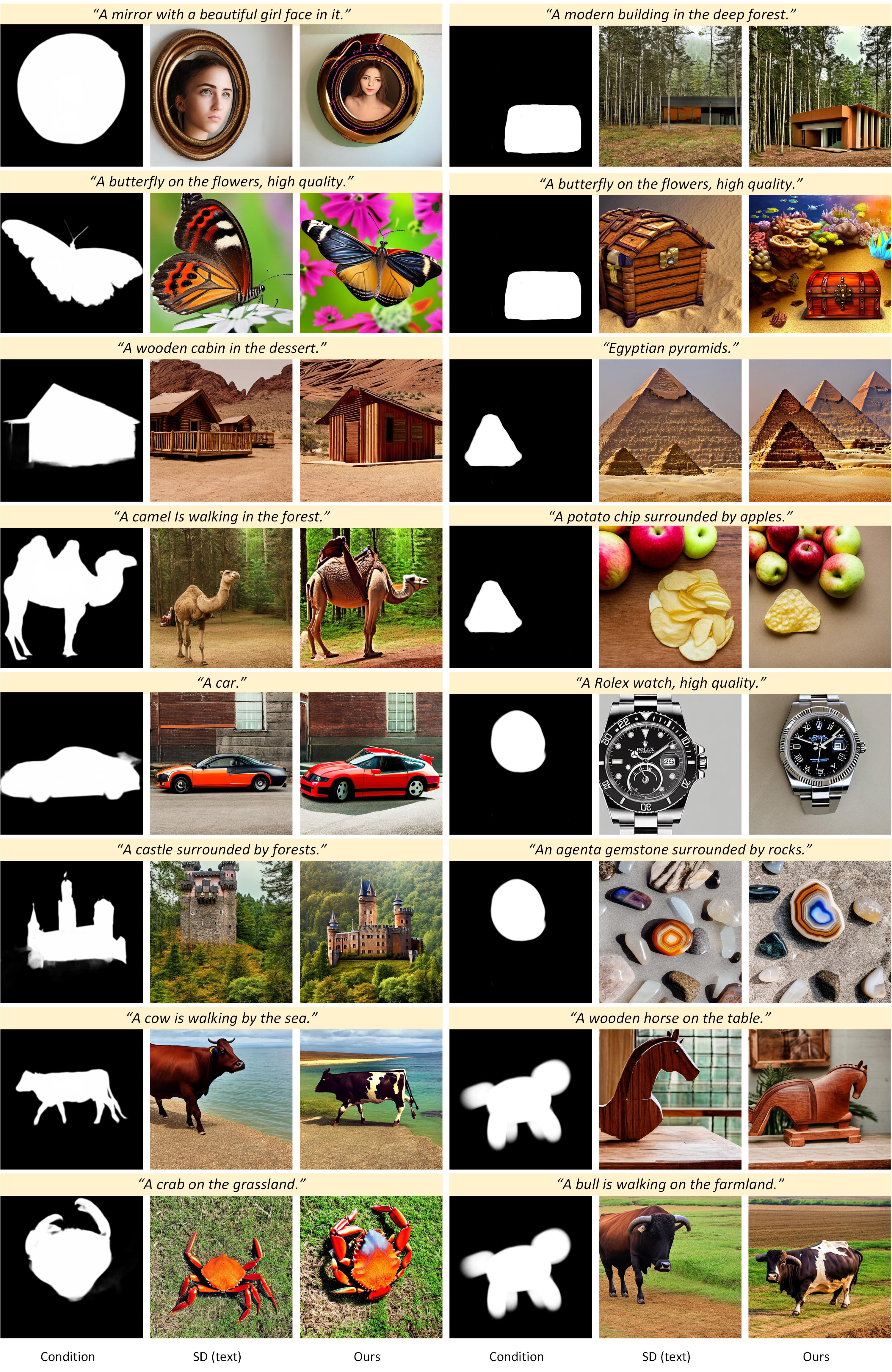

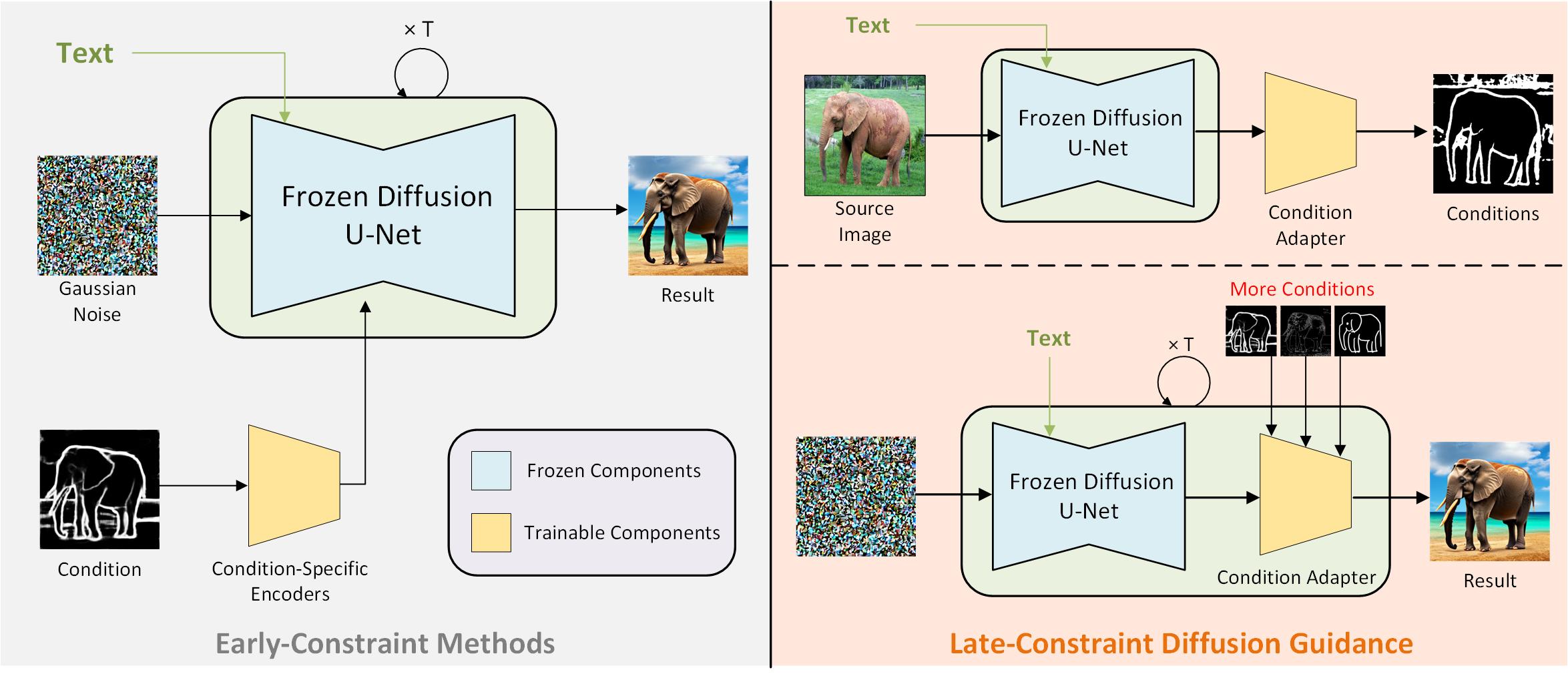

Diffusion models, with or without text conditioning, have demonstrated an impressive capability to synthesize photorealistic images from a few words or none at all. These models may not fully satisfy user needs, however, because everyday users and artists want to control the synthesized images with specific guidance such as overall layout, color, structure, and object shape. To adapt diffusion models for controllable image synthesis, several methods have been proposed that incorporate the required conditions as regularization on the intermediate features of the diffusion denoising network. These methods, referred to as early-constraint methods in this paper, have difficulty handling multiple conditions with a single solution. They tend to train a separate model for each specific condition, which incurs a high training cost and results in non-generalizable solutions. To address these difficulties, we propose a new approach, late-constraint: we leave the diffusion network unchanged but constrain its output to be aligned with the required conditions. Specifically, we train a lightweight condition adapter to establish the correlation between external conditions and the internal representations of diffusion models. During the iterative denoising process, the conditional guidance is fed into the corresponding condition adapter to steer the sampling process using the established correlation. We further equip the late-constraint strategy with a timestep resampling method and an early stopping technique, which boost the quality of the synthesized images while complying with the guidance. Our method outperforms existing early-constraint methods and generalizes better to unseen conditions.
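To make the late-constraint idea more concrete, here is a minimal toy sketch rather than the LCDG implementation. It assumes the guidance acts like a gradient step that reduces the distance between the adapter's predicted condition and the required condition; the linear `adapter`, the squared-distance objective, and the `scale` parameter are all hypothetical stand-ins chosen so the example runs with the standard library only. In a real pipeline the gradient would presumably be obtained by automatic differentiation through the adapter and the frozen denoising network.

```python
# Toy illustration only: a linear "adapter" and hand-written gradients stand in
# for a learned condition adapter and automatic differentiation.

def adapter(weights, features):
    """Predict a condition vector from internal features (hypothetical linear adapter)."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def condition_distance(weights, features, target):
    """Squared distance between the predicted condition and the required one."""
    prediction = adapter(weights, features)
    return sum((p - t) ** 2 for p, t in zip(prediction, target))


def guidance_gradient(weights, features, target):
    """Gradient of the squared distance w.r.t. the features: 2 * W^T (W f - c)."""
    residual = [p - t for p, t in zip(adapter(weights, features), target)]
    return [
        2.0 * sum(weights[i][j] * residual[i] for i in range(len(weights)))
        for j in range(len(features))
    ]


def guided_step(weights, features, target, scale=0.1):
    """Move the sample against the gradient; the denoiser itself is never modified."""
    gradient = guidance_gradient(weights, features, target)
    return [f - scale * g for f, g in zip(features, gradient)]


if __name__ == "__main__":
    weights = [[1.0, 0.0], [0.0, 2.0]]  # assumed adapter parameters
    sample = [1.0, 1.0]                 # stands in for the current noisy sample
    target = [0.0, 0.0]                 # stands in for the required condition
    for step in range(3):
        print(step, condition_distance(weights, sample, target))
        sample = guided_step(weights, sample, target)
```

The point of the sketch is only the division of labor: the adapter measures how far the current sample is from the required condition, and that mismatch steers sampling while the diffusion network stays unchanged.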


- Official instructions of installation and usage of LCDG.

- Testing code of LCDG.

- Online demo of LCDG.

- Pre-trained model weights.

- Training code of LCDG.

The basic environment for running both the training and testing code is set up in environment.sh, which uses pip as the package manager. Running bash environment.sh installs the required packages.

Before running the code, prepare the pre-trained weights of the diffusion models and the corresponding VQ models locally. For the v1.4 or CelebA model weights, refer to the GitHub repository of Stable Diffusion and execute the provided download scripts by running:

bash scripts/download_first_stage.sh

bash scripts/download_models.sh

We provide example configuration files for the edge, color and mask conditions in configs/lcdg. Modify line 5 and line 43 in these configuration files with the corresponding paths of the pre-trained model weights.

As reported in our paper, the condition adapter can be trained well with 10,000 randomly collected images. Organize your data directory in the following structure:

collected_dataset/

├── bdcn_edges

├── captions

└── images

For the edge condition, we use bdcn to generate the supervision signals from source images. For the mask condition, we use u2net to detect saliency masks as supervision. For the color condition, we use simulated color strokes as supervision and have incorporated the corresponding code in condition_adaptor_src/condition_adaptor_dataset.py.

After the training data is ready, modify lines 74 to 76 with the corresponding paths of the training data. If evaluation is required, also modify lines 77 to 79 with the corresponding paths of the split validation data.
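If you do not yet have a validation split, one simple option is to hold out a random fraction of the collected samples. The following sketch is an illustration under assumptions, not the procedure used in the paper: the function name, the 10% default and the fixed seed are hypothetical, and it only partitions sample names; how the two subsets are stored on disk depends on the paths expected by your configuration.

```python
import random


def split_train_val(stems, val_fraction=0.1, seed=0):
    """Shuffle sample names reproducibly and split them into (train, val) lists."""
    if not 0.0 <= val_fraction < 1.0:
        raise ValueError("val_fraction must be in [0, 1)")
    shuffled = sorted(stems)               # sort first so the result is order-independent
    random.Random(seed).shuffle(shuffled)  # local generator, global random state untouched
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


if __name__ == "__main__":
    names = [f"{i:05d}" for i in range(10000)]
    train, val = split_train_val(names)
    print(len(train), len(val))
```

Using a fixed seed keeps the split stable across runs, so evaluation numbers stay comparable between training sessions.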

You can now start training by executing condition_adaptor_train.py, for example:

python condition_adaptor_train.py -b /path/to/config/file -l /path/to/output/path

After hours of training, you can try sampling images with the trained condition adapter. We provide an example command as follows:

python sample_single_image.py --base /path/to/config/path --indir /path/to/target/condition --caption "text prompt" --outdir /path/to/output/path --resume /path/to/condition/adapter/weights

This work is licensed under the MIT license. See the LICENSE file for details.

If you find our work enlightening or the codebase helpful to your work, please cite our paper:

@misc{liu2023lateconstraint,

title={Late-Constraint Diffusion Guidance for Controllable Image Synthesis},

author={Chang Liu and Dong Liu},

year={2023},

eprint={2305.11520},

archivePrefix={arXiv},

primaryClass={cs.CV}

}