contact: robbiebarrat (at) gmail (dot) com

Modified version of Soumith Chintala's Torch implementation of DCGAN, with a focus on generating artworks.

The pre-trained networks are each over 100 MB, which is too large to host on GitHub, so you'll have to follow a link to download them, but it's worth it. Below are some of them, with examples of what they can generate. When using any outputs of the models, credit me. Don't sell the outputs of the pre-trained models, modified or not. If you have any questions, email me before doing anything.

There is no download for the abstract landscapes network yet. The "resume from checkpoint" section below explains how to train your own from the regular landscapes network (it involves switching the dataset towards the end of training).

-

Doubled image size - now 128x128 instead of 64x64 (added a layer in both networks)

-

Ability to resume training from checkpoints (simply pass netG=[path_to_network] and netD=[path_to_network]). Besides being convenient, this allows for experimenting with training on one set of images and then, later in training, shifting to another set. For example, you can train a landscape network and then switch to abstract art for a very short time to get abstract landscapes (see the example in the "resume from checkpoint" section below), which acts as a sort of style transfer for GANs.

-

Included a simple shell script that keeps the checkpoints folder from filling up; it is meant to be left running while a GAN trains. By default it keeps the 5 most recent checkpoints of both the discriminator and the generator for each experiment name.
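The retention rule described above can be sketched in Python. This is not the included shell script, only an illustration of the same policy; it assumes checkpoint files are named `<name>_<epoch>_net_G.t7` and `<name>_<epoch>_net_D.t7` (the pattern Soumith's dcgan.torch uses), and `checkpoints_to_delete` is a hypothetical helper.

```python
import re
from collections import defaultdict

# Assumed naming pattern (from Soumith's dcgan.torch): <name>_<epoch>_net_<G|D>.t7
CHECKPOINT_RE = re.compile(r"^(?P<name>.+)_(?P<epoch>\d+)_net_(?P<net>[GD])\.t7$")


def checkpoints_to_delete(filenames, keep=5):
    """Return the checkpoint files outside the `keep` most recent epochs
    for each (experiment name, network) pair."""
    groups = defaultdict(list)
    for fname in filenames:
        match = CHECKPOINT_RE.match(fname)
        if match is None:
            continue  # not a recognised checkpoint; never touch it
        key = (match.group("name"), match.group("net"))
        groups[key].append((int(match.group("epoch")), fname))

    doomed = []
    for entries in groups.values():
        entries.sort(reverse=True)  # newest epoch first
        doomed.extend(fname for _, fname in entries[keep:])
    return sorted(doomed)
```

It only returns file names; actually deleting them (for example with `os.remove`) is left to the caller, which makes a dry run easy.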

-

Added a Python 3 script (utils/genre-scraper.py) that makes it easy to scrape images from WikiArt into the format the GAN can load.

-

Added a script (utils/gpu2cpu.lua) that converts checkpoints trained on a GPU into models that can run on a CPU.

-

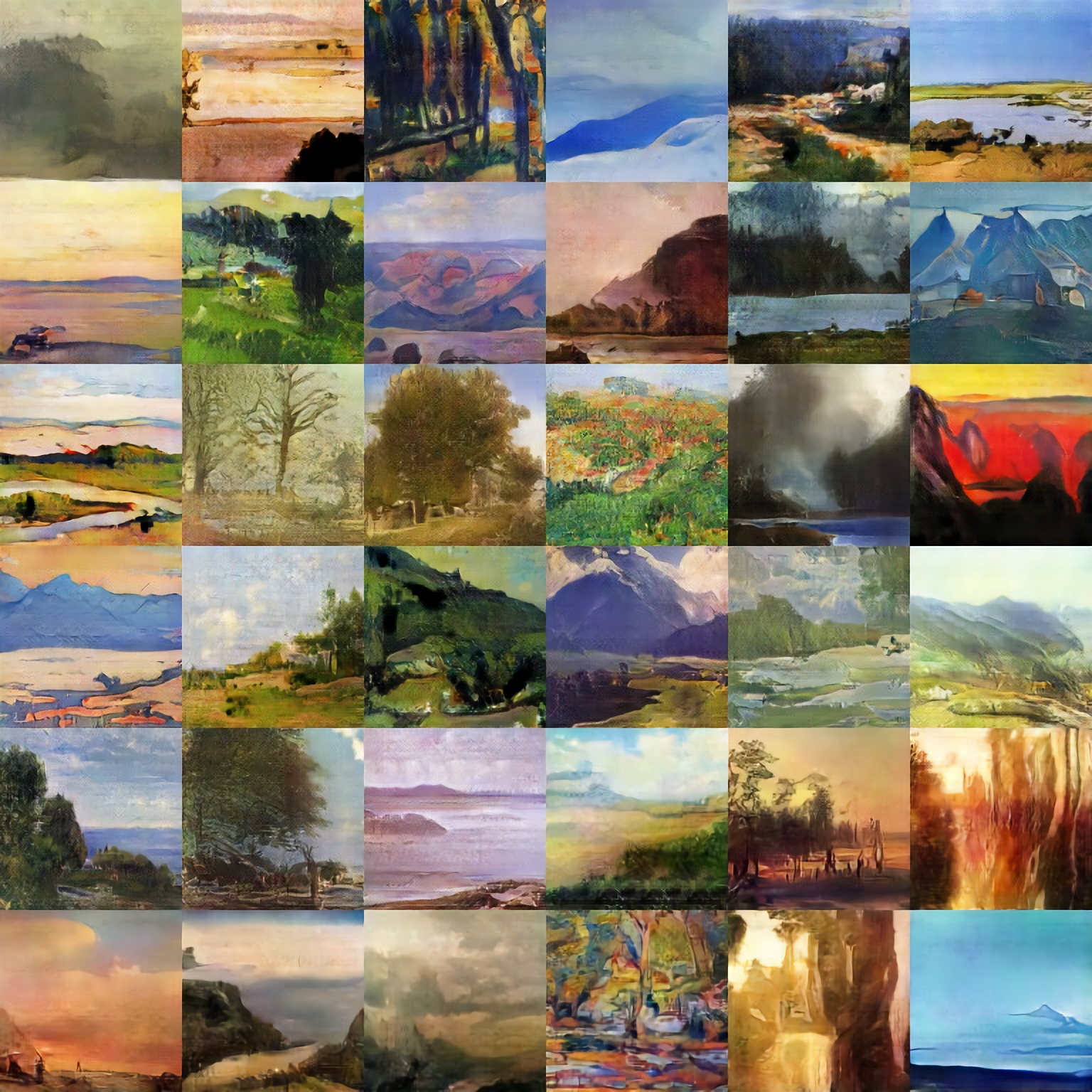

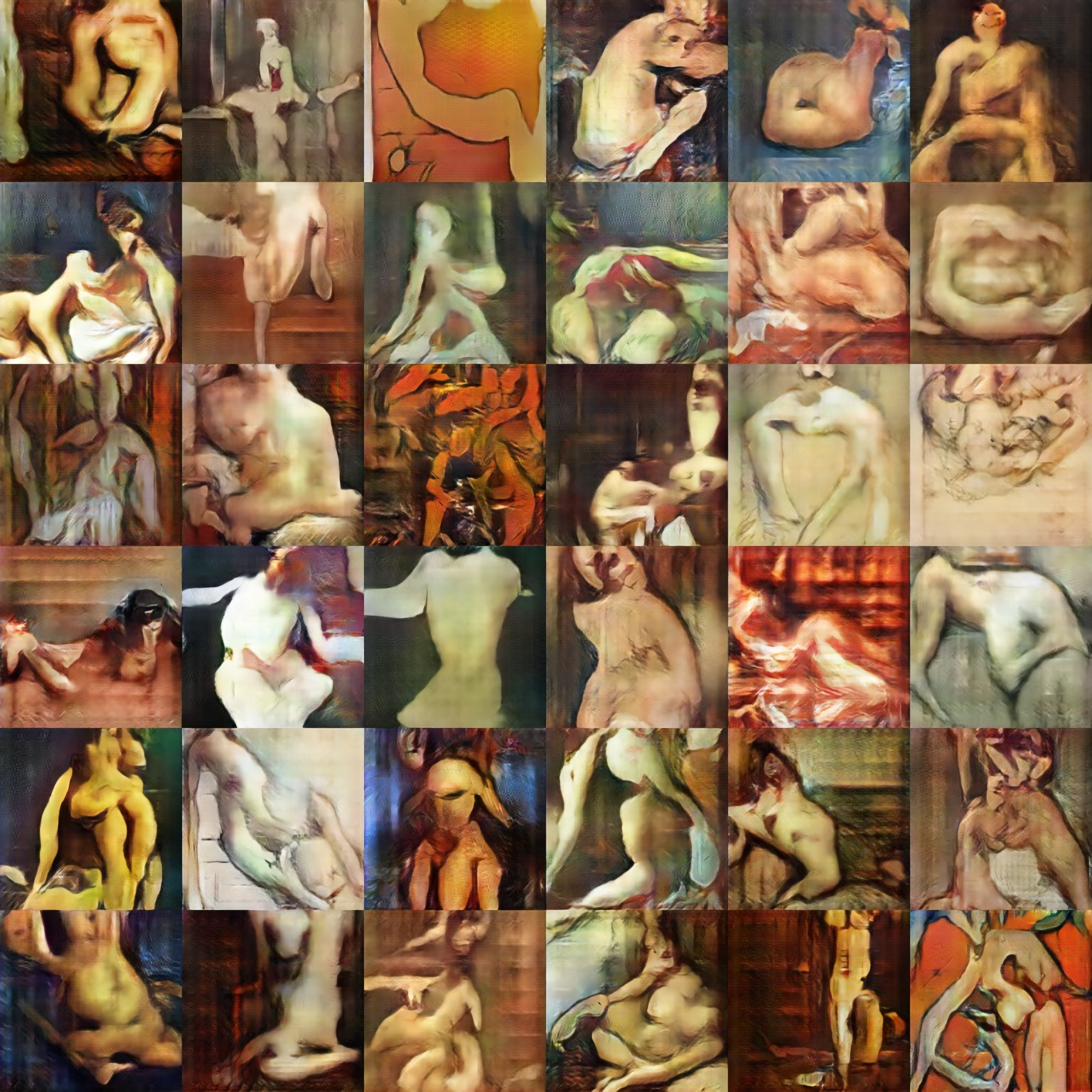

Includes multiple pre-trained GANs (.t7 files) that can generate various types of images, including 128x128 landscape oil paintings, 128x128 nude oil paintings, and others highlighted below.

See INSTALL.md

Usage is identical to Soumith's, except for loading from a checkpoint and the artwork scraper included with this project.

genre-scraper.py will allow you to scrape artworks from wikiart based on their genres. The usage is quite simple.

In genre-scraper.py there is a variable called genre_to_scrape. Simply change it to any of the genres listed on this page, or to any of the values in the long list of comments right after genre_to_scrape is defined.

Run the program with python3, and a folder named after your genre will be created, with a subdirectory "images/" containing all of the JPEGs. Point your GAN at the folder named after your genre (for example, to get landscapes I'd set genre_to_scrape to "landscape" and then run my GAN with DATA_ROOT=landscape).
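Before training, it can help to check that the scraped folder has the layout the `dataset=folder` loader expects: the DATA_ROOT directory holds one or more subdirectories (here `images/`) containing the images. A minimal sketch, assuming that layout; `images_per_subfolder` is a hypothetical helper, and the set of accepted extensions is my assumption.

```python
from pathlib import Path

# Assumed image extensions; the scraper itself saves .jpg files.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def images_per_subfolder(data_root):
    """Count image files in each immediate subdirectory of data_root.

    Files sitting directly in data_root are ignored, since (by assumption)
    the folder loader only looks inside subdirectories."""
    root = Path(data_root)
    counts = {}
    for sub in sorted(p for p in root.iterdir() if p.is_dir()):
        counts[sub.name] = sum(
            1 for f in sub.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts
```

After scraping landscapes, `images_per_subfolder("landscape")` should report a single `images` entry with the number of downloaded files; an empty result means DATA_ROOT points at the wrong level.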

```
DATA_ROOT=myimages dataset=folder ndf=50 ngf=150 th main.lua
```

You can adjust ndf (number of filters in the discriminator's first layer) and ngf (number of filters in the generator's first layer) freely, although it's recommended that the generator have ~2x as many filters as the discriminator. The generator has a much harder job, so this keeps the discriminator from overpowering it.

Keep in mind that you can also pass these arguments when training:

```
batchSize=64        -- batch size; I didn't get very good results with values over 128
noise=normal        -- normal or uniform (pass ONE of these); normal seems to work a lot better
nz=100              -- number of dimensions of Z
nThreads=1          -- number of data-loading threads
gpu=1               -- GPU to use
name=experiment1    -- changes the checkpoint filenames, so you don't overwrite anything cool
```
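The `KEY=value th main.lua` form just sets environment variables that main.lua reads (as in Soumith's version), so a training run can also be launched from Python. A sketch, assuming `th` is on your PATH and you run it from the repository root; `training_command` and `run_training` are hypothetical helpers, and defaulting ngf to 2x ndf simply applies the rule of thumb above.

```python
import os
import subprocess


def training_command(data_root, ndf=50, ngf=None, noise="normal", **extra):
    """Build the (argv, env) pair equivalent to `KEY=value ... th main.lua`."""
    if noise not in ("normal", "uniform"):
        raise ValueError("noise must be 'normal' or 'uniform'")
    if ngf is None:
        ngf = 2 * ndf  # rule of thumb: generator gets ~2x the discriminator's filters
    options = {"DATA_ROOT": data_root, "dataset": "folder",
               "ndf": ndf, "ngf": ngf, "noise": noise, **extra}
    env = dict(os.environ)
    # Environment variables must be strings.
    env.update({key: str(value) for key, value in options.items()})
    return ["th", "main.lua"], env


def run_training(data_root, **kwargs):
    """Launch training (requires Torch's `th` to be installed)."""
    argv, env = training_command(data_root, **kwargs)
    subprocess.run(argv, env=env, check=True)
```

For example, `run_training("landscape", ndf=50, ngf=150, batchSize=64, name="experiment1")` corresponds to the shell command at the top of this section with those extra options added.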

```
DATA_ROOT=myimages dataset=folder netD=checkpoints/your_discriminator_net.t7 netG=checkpoints/your_generator_net.t7 th main.lua
```

Passing ndf and ngf has no effect here, since the networks are loaded from the checkpoints. Resuming from a checkpoint and training on different data can have very interesting effects. Below, a GAN trained to generate landscapes is trained on abstract art for half an epoch.
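When switching datasets mid-training, you usually want the newest generator/discriminator pair from an experiment. A sketch of picking it, assuming the `checkpoints/<name>_<epoch>_net_<G|D>.t7` naming from Soumith's dcgan.torch; `latest_checkpoint_pair` is a hypothetical helper.

```python
import re

# Assumed naming pattern (from Soumith's dcgan.torch): <name>_<epoch>_net_<G|D>.t7
CHECKPOINT_RE = re.compile(r"^(?P<name>.+)_(?P<epoch>\d+)_net_(?P<net>[GD])\.t7$")


def latest_checkpoint_pair(filenames, name, folder="checkpoints"):
    """Return (netD, netG) paths for the newest epoch of `name` with both nets saved."""
    epochs = {"G": set(), "D": set()}
    for fname in filenames:
        match = CHECKPOINT_RE.match(fname)
        if match and match.group("name") == name:
            epochs[match.group("net")].add(int(match.group("epoch")))
    common = epochs["G"] & epochs["D"]  # only epochs where both nets exist
    if not common:
        raise FileNotFoundError(f"no matching G/D checkpoint pair for {name!r}")
    epoch = max(common)
    return (f"{folder}/{name}_{epoch}_net_D.t7",
            f"{folder}/{name}_{epoch}_net_G.t7")
```

Pass the two returned paths as netD= and netG=, with DATA_ROOT pointing at the new dataset (for example, your abstract-art folder).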

```
net=your_generator_net.t7 th generate.lua
```

Very straightforward... I hope. Keep in mind that you can also pass these when generating images:

```
batchSize=36        -- how many images to generate; keep it a multiple of six for unpadded output
imsize=1            -- how large the image(s) should be (not in pixels!)
noisemode=normal    -- normal, line, or linefull (pass ONE of these); for line, also pass batchSize > 1 and imsize=1
name=generation1    -- changes the output filename, so you don't overwrite anything cool
```
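Because the generator is fully convolutional, imsize sets the spatial size of the noise map rather than the pixel size, and each transposed convolution maps a side length `s` to `(s - 1) * stride - 2 * pad + kernel`. A worked sketch, assuming the 128x128 generator is Soumith's DCGAN stack (one 4x4, stride-1, pad-0 layer, then 4x4, stride-2, pad-1 layers) with one extra upsampling layer; `generated_side` is a hypothetical helper.

```python
def full_conv_out(size, kernel, stride, pad):
    """Spatial output size of a transposed ("full") convolution."""
    return (size - 1) * stride - 2 * pad + kernel


def generated_side(imsize, upsampling_layers=5):
    """Pixel side length produced from an imsize x imsize noise map.

    Assumed architecture: one 4x4/stride-1/pad-0 layer followed by
    `upsampling_layers` 4x4/stride-2/pad-1 layers (5 for 128px, 4 for 64px)."""
    size = full_conv_out(imsize, kernel=4, stride=1, pad=0)
    for _ in range(upsampling_layers):
        size = full_conv_out(size, kernel=4, stride=2, pad=1)
    return size
```

Under that assumption, imsize=1 gives 128 pixels and each step up adds 32 pixels, so the side length is `(imsize + 3) * 32`.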

#### There are more arguments you can pass on the unmodified network's page; I think I included the more important ones here, though.
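The line noise mode gives a walk through latent space: each image in the batch gets a point on the straight segment between two random noise vectors. A plain-Python sketch of that idea, assuming linear interpolation between two standard-normal vectors (my reading of Soumith's generate.lua); `line_noise` is a hypothetical helper, and the real script builds a Torch tensor instead of lists.

```python
import random


def line_noise(batch_size, nz=100, seed=None):
    """Return batch_size noise vectors evenly spaced on the segment between
    two random standard-normal vectors."""
    rng = random.Random(seed)
    left = [rng.gauss(0.0, 1.0) for _ in range(nz)]
    right = [rng.gauss(0.0, 1.0) for _ in range(nz)]
    batch = []
    for i in range(batch_size):
        # t runs from 0 (pure `right`) to 1 (pure `left`) across the batch.
        t = i / (batch_size - 1) if batch_size > 1 else 0.0
        batch.append([l * t + r * (1 - t) for l, r in zip(left, right)])
    return batch
```

Feeding consecutive vectors to the generator yields images that morph smoothly from one to the next, which is why this mode needs batchSize > 1.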

- Pre-trained networks: flower paintings, cityscapes (comment in the open issue if you have suggestions!)

- Creating animated GIFs of walks through latent space

- A GUI for this whole thing... maybe...