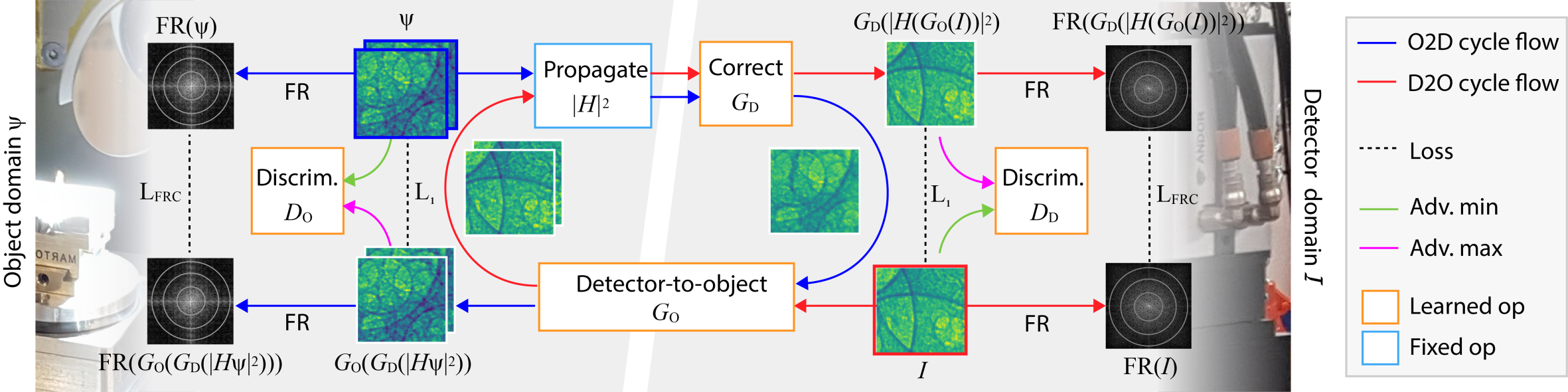

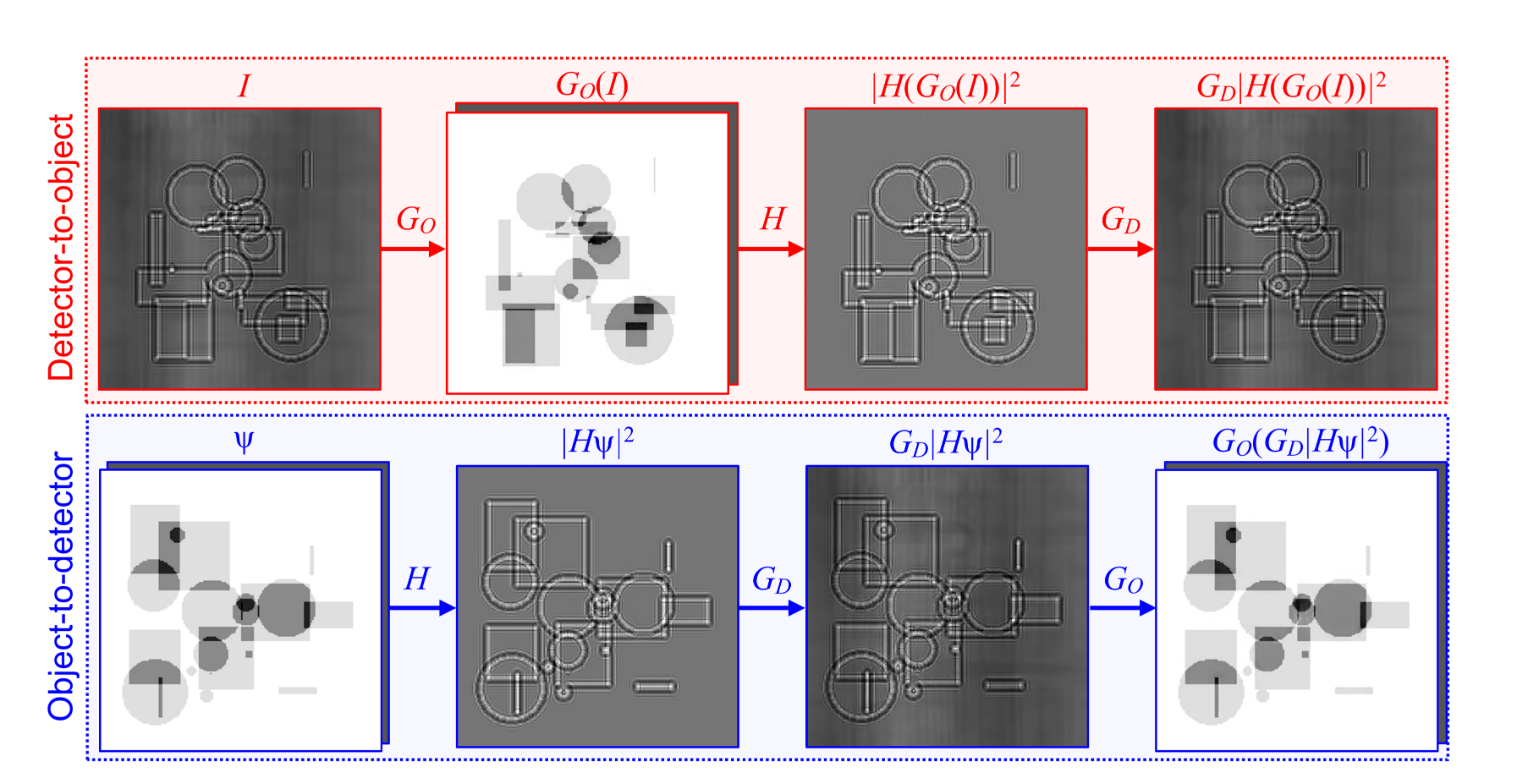

PhaseGAN is a deep-learning phase-retrieval approach that allows the use of unpaired datasets and includes the physics of image formation. For more details about PhaseGAN training and performance, please refer to the PhaseGAN paper.
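To make "the physics of image formation" more concrete: in propagation-based X-ray phase-contrast imaging, the detector records the intensity of the object's exit wave after free-space propagation. The sketch below assumes a 1D near-field (paraxial Fresnel) transfer-function propagator and uses a naive DFT so it runs with the standard library alone. It only illustrates the kind of forward model involved; it is not the operator implemented in this repository, and the function names and parameter values are illustrative.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(n^2) DFT; the inverse includes the 1/n factor (NumPy's convention)."""
    n = len(values)
    sign = 1 if inverse else -1
    result = []
    for k in range(n):
        total = sum(
            v * cmath.exp(sign * 2j * math.pi * k * m / n) for m, v in enumerate(values)
        )
        result.append(total / n if inverse else total)
    return result


def fresnel_intensity(field, wavelength, distance, pixel_size):
    """Detector intensity |P_z(field)|^2 for a 1D complex exit wave.

    Assumes the paraxial transfer function H(f) = exp(-i*pi*wavelength*distance*f^2);
    the global phase exp(i*k*distance) is dropped because it does not change intensity.
    """
    n = len(field)
    spectrum = dft(field)
    filtered = []
    for k, value in enumerate(spectrum):
        # Frequency of bin k in standard FFT ordering (same as numpy.fft.fftfreq).
        index = k if k < (n + 1) // 2 else k - n
        freq = index / (n * pixel_size)
        filtered.append(value * cmath.exp(-1j * math.pi * wavelength * distance * freq**2))
    return [abs(v) ** 2 for v in dft(filtered, inverse=True)]


# Example: a weak, pure-phase object (uniform amplitude 1) with a sinusoidal phase.
n = 64
phase = [0.1 * math.sin(2 * math.pi * 4 * m / n) for m in range(n)]
exit_wave = [cmath.exp(1j * p) for p in phase]
intensity = fresnel_intensity(exit_wave, wavelength=1e-10, distance=1.0, pixel_size=1e-6)
print(f"contrast after propagation: {max(intensity) - min(intensity):.3f}")
```

At `distance=0` the computed intensity is uniform, so a pure phase object is invisible in contact; the contrast that appears after propagation is the signal that phase retrieval has to invert.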

PhaseGAN tutorial: Google Colab | Code

Prerequisites:

- Linux (not tested on macOS or Windows)

- Python 3

- NVIDIA GPU (not tested on CPU)
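A quick way to check these prerequisites before installing is a small preflight script. This is a hypothetical helper, not part of the repository. It uses only the Python standard library and treats the presence of `nvidia-smi` on the PATH as a rough proxy for an installed NVIDIA driver; that does not guarantee PyTorch can actually use the GPU.

```python
import platform
import shutil
import sys


def check_prerequisites():
    """Hypothetical preflight check mirroring the prerequisites listed above."""
    return {
        # Linux is the only operating system the authors tested.
        "linux": platform.system() == "Linux",
        "python3": sys.version_info.major == 3,
        # Rough proxy only: NVIDIA drivers usually ship the nvidia-smi tool.
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```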

Clone this repo:

git clone https://github.com/pvilla/PhaseGAN.git

cd PhaseGAN

To install the required Python packages:

pip install -r requirements.txt

For training, the provided data loader Dataset2channel.py supports loading data in HDF5 format. An example of the dataset structure can be found in the Example dataset folder and in the PhaseGAN validation dataset (Google Drive). Please note that ground-truth images are provided for validation purposes only; they are never used as references during unpaired training.

We used the HDF5 data format for the original training. To train with other data formats, you may need to write a custom data loader.
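If you write your own loader, the key property for unpaired training is that a measurement and an object are never served as a matched pair. The sketch below is a hypothetical, framework-free example loosely in the spirit of the unaligned dataset in pytorch-CycleGAN-and-pix2pix: it implements `__len__` and `__getitem__` (the interface of a PyTorch map-style dataset) and draws the second-domain sample at random. The class name, the `"A"`/`"B"` keys and the in-memory lists are assumptions; a real loader would read your files inside `__getitem__` and must return whatever structure `train.py` expects from `Dataset2channel.py`.

```python
import random


class UnpairedDataset:
    """Hypothetical map-style dataset that combines two domains without pairing them."""

    def __init__(self, measurements, objects, seed=None):
        if not measurements or not objects:
            raise ValueError("both domains need at least one sample")
        # Placeholders for your own data, e.g. intensity images and object maps.
        self.measurements = list(measurements)
        self.objects = list(objects)
        self.rng = random.Random(seed)

    def __len__(self):
        # One epoch visits every sample of the larger domain once.
        return max(len(self.measurements), len(self.objects))

    def __getitem__(self, index):
        a = self.measurements[index % len(self.measurements)]
        # The other domain is sampled independently, so no correspondence is assumed.
        b = self.objects[self.rng.randrange(len(self.objects))]
        return {"A": a, "B": b}


dataset = UnpairedDataset(["I0", "I1", "I2"], ["obj0", "obj1"], seed=0)
print(len(dataset), dataset[0])
```

Because the object is drawn at random on every access, the same measurement is combined with different objects across epochs, which is what an unpaired setup requires.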

To run the training:

python3 train.py

For more training options, please check out:

python3 train.py --help

The training results will be saved in ./results/fig/run_name/train.

The training parameters and losses will be logged to a text file: ./results/fig/run_name/log.txt.

The models will be saved in ./results/fig/run_name/save.
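To inspect a run after training, a small helper can resolve the three output locations listed above and print the end of the log. This is a hypothetical convenience script, not part of the repository; `run_name` stands for whatever name your run was given, and the code assumes nothing about the contents of `log.txt` beyond it being a text file.

```python
from collections import deque
from pathlib import Path


def run_outputs(run_name, root="results/fig"):
    """Map each documented output of a training run to (path, exists)."""
    run_dir = Path(root) / run_name
    locations = {
        "train_figures": run_dir / "train",
        "log": run_dir / "log.txt",
        "models": run_dir / "save",
    }
    return {name: (path, path.exists()) for name, path in locations.items()}


def tail_log(run_name, lines=5, root="results/fig"):
    """Return the last `lines` lines of the run's log.txt, or [] if it is missing."""
    log_path = Path(root) / run_name / "log.txt"
    if not log_path.is_file():
        return []
    with log_path.open() as handle:
        # deque with maxlen keeps only the final lines without loading the whole file.
        return [line.rstrip("\n") for line in deque(handle, maxlen=lines)]


if __name__ == "__main__":
    name = "run_name"  # replace with the name of your run
    for key, (path, exists) in run_outputs(name).items():
        print(f"{key:14s} {'found' if exists else 'missing':7s} {path}")
    print("\n".join(tail_log(name)))
```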

If you use this code for your research, please cite our paper.

@article{zhang2020phasegan,
  title={PhaseGAN: A deep-learning phase-retrieval approach for unpaired datasets},
  author={Zhang, Yuhe and Noack, Mike Andreas and Vagovic, Patrik and Fezzaa, Kamel and Garcia-Moreno, Francisco and Ritschel, Tobias and Villanueva-Perez, Pablo},
  journal={arXiv preprint arXiv:2011.08660},
  year={2020}
}

Our code is based on pytorch-CycleGAN-and-pix2pix and TernausNet.