- Install Python 3.11

- Run `pip install -r requirements.txt`

- Install Tilt

- Make sure you have a local Kubernetes cluster, e.g. via Docker Desktop, Rancher Desktop, or minikube

- Run `tilt up`
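
The prerequisites above can be checked with a short script before running `tilt up`. This is a hypothetical helper and not part of the project. It checks two things:

- The interpreter is Python 3.11 or newer. The README names 3.11, so accepting newer versions is an assumption.
- The `tilt`, `docker`, and `npm` executables used elsewhere in this README are on `PATH`.

It does not verify that the local Kubernetes cluster is actually running.

```python
import shutil
import sys

# The README names Python 3.11; treating newer versions as acceptable is an assumption.
REQUIRED_PYTHON = (3, 11)
# Executables this README relies on: tilt (setup), docker (backend), npm (frontend).
REQUIRED_TOOLS = ("tilt", "docker", "npm")


def python_version_ok(version_info=sys.version_info, required=REQUIRED_PYTHON):
    """True if version_info is at least the required (major, minor) version."""
    return tuple(version_info[:2]) >= required


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the names from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def check_prerequisites():
    """Collect human-readable problems; an empty list means every check passed.
    This does not check that the local Kubernetes cluster is running."""
    problems = []
    if not python_version_ok():
        found = ".".join(str(part) for part in sys.version_info[:3])
        problems.append(f"Python {REQUIRED_PYTHON[0]}.{REQUIRED_PYTHON[1]}+ required, found {found}")
    problems.extend(f"'{tool}' not found on PATH" for tool in missing_tools())
    return problems


if __name__ == "__main__":
    # Print one line per problem; no output means all checks passed.
    for problem in check_prerequisites():
        print(problem)
```

Type annotations are deliberately omitted. Without them, the script still runs on an older interpreter and can report the version mismatch instead of crashing.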

First, you need a Google Cloud credentials JSON key for the Compute Engine default service account. If you don't have one, create it in the Google Cloud console.

The key file must be named `key.json` and placed in the `backend` directory.
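
A quick sanity check of `backend/key.json` can catch a wrong or truncated download before the backend starts. The sketch below is hypothetical and not part of this project. It checks two things:

- The fields `type`, `project_id`, `private_key`, and `client_email`, which Google Cloud service account key files contain.
- Whether the email matches the Compute Engine default service account pattern `PROJECT_NUMBER-compute@developer.gserviceaccount.com`.

The default path assumes the script is run from the repository root.

```python
import json
from pathlib import Path

# A few fields found in every Google Cloud service account key file (not exhaustive).
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")
# The Compute Engine default service account is named
# PROJECT_NUMBER-compute@developer.gserviceaccount.com.
DEFAULT_SA_SUFFIX = "-compute@developer.gserviceaccount.com"


def check_key_data(data: dict) -> list[str]:
    """Return problems found in parsed key data; an empty list means it looks fine."""
    problems = [f"missing field '{name}'" for name in REQUIRED_FIELDS if name not in data]
    key_type = data.get("type")
    if key_type is not None and key_type != "service_account":
        problems.append(f"'type' should be 'service_account', got '{key_type}'")
    email = data.get("client_email", "")
    if email and not email.endswith(DEFAULT_SA_SUFFIX):
        problems.append(f"{email} is not the Compute Engine default service account")
    return problems


def validate_service_account_key(path: str = "backend/key.json") -> list[str]:
    """Load the key file and return any problems found. The default path is an
    assumption: it expects the repository root as the working directory."""
    key_path = Path(path)
    if not key_path.is_file():
        return [f"{key_path} does not exist"]
    try:
        data = json.loads(key_path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as exc:
        return [f"{key_path} is not valid JSON: {exc}"]
    if not isinstance(data, dict):
        return [f"{key_path} does not contain a JSON object"]
    return check_key_data(data)
```

For example, `for problem in validate_service_account_key(): print(problem)` prints nothing when the key looks usable. Splitting out `check_key_data` keeps the field checks independent of the file system.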

- Go to Vertex AI Model Registry

- Select the newest version of the model

- Go to the 'Deploy & Test' tab

- Click 'Deploy to Endpoint'

- Enter a name for the new endpoint

- Under 'Model Settings', select 'n1-standard-2' as the machine type

- Click 'Deploy'

- Go to Vertex AI Endpoints and copy the ID of the newly created endpoint

- Go to Kubeflow Pipelines

- Click 'Configure'

- Create a new cluster: select `europe-west1-c` as the zone and tick the 'Allow access to the following Cloud APIs' checkbox

- Once the cluster has been created, click 'Deploy'

- After deployment, go to AI Platform Pipelines

- Click 'Open Pipelines Dashboard' next to your newly created instance

- Copy the dashboard URL

- Open `backend/Dockerfile`

- Set `ENDPOINT_ID` to the Vertex AI endpoint ID

- Set `KUBEFLOW_URL` to the Kubeflow Pipelines dashboard URL

- Start the Docker container
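
The two values set in `backend/Dockerfile` presumably reach the backend as environment variables, e.g. via `ENV` instructions. The backend's actual code isn't shown here, so `load_config` and `BackendConfig` below are hypothetical names. As a sketch, a Python backend could read and check both values at startup, so that a missing or mistyped value fails immediately rather than at the first prediction or pipeline call. It accepts either a bare endpoint ID or a full resource name of the form `projects/PROJECT/locations/LOCATION/endpoints/ENDPOINT_ID`, in case the full name was copied.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical names: the backend's real configuration code may differ.
REQUIRED_VARS = ("ENDPOINT_ID", "KUBEFLOW_URL")


@dataclass(frozen=True)
class BackendConfig:
    endpoint_id: str
    kubeflow_url: str


def normalize_endpoint_id(value: str) -> str:
    """Return the bare endpoint ID, also accepting a full resource name of the
    form projects/PROJECT/locations/LOCATION/endpoints/ENDPOINT_ID."""
    value = value.strip()
    if "/endpoints/" in value:
        value = value.rsplit("/endpoints/", 1)[1]
    if not value or "/" in value:
        raise ValueError(f"not a Vertex AI endpoint ID: {value!r}")
    return value


def normalize_kubeflow_url(value: str) -> str:
    """Require an absolute http(s) URL and drop any trailing slash."""
    value = value.strip()
    parsed = urlparse(value)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an absolute http(s) URL: {value!r}")
    return value.rstrip("/")


def load_config(environ: Mapping[str, str] = os.environ) -> BackendConfig:
    """Read ENDPOINT_ID and KUBEFLOW_URL and fail fast if either is missing
    or malformed."""
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return BackendConfig(
        endpoint_id=normalize_endpoint_id(environ["ENDPOINT_ID"]),
        kubeflow_url=normalize_kubeflow_url(environ["KUBEFLOW_URL"]),
    )
```

At startup, the backend could call `config = load_config()` and pass `config.endpoint_id` and `config.kubeflow_url` to whatever Vertex AI and Kubeflow clients it uses. Taking `environ` as a parameter keeps the function testable without touching the real process environment.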

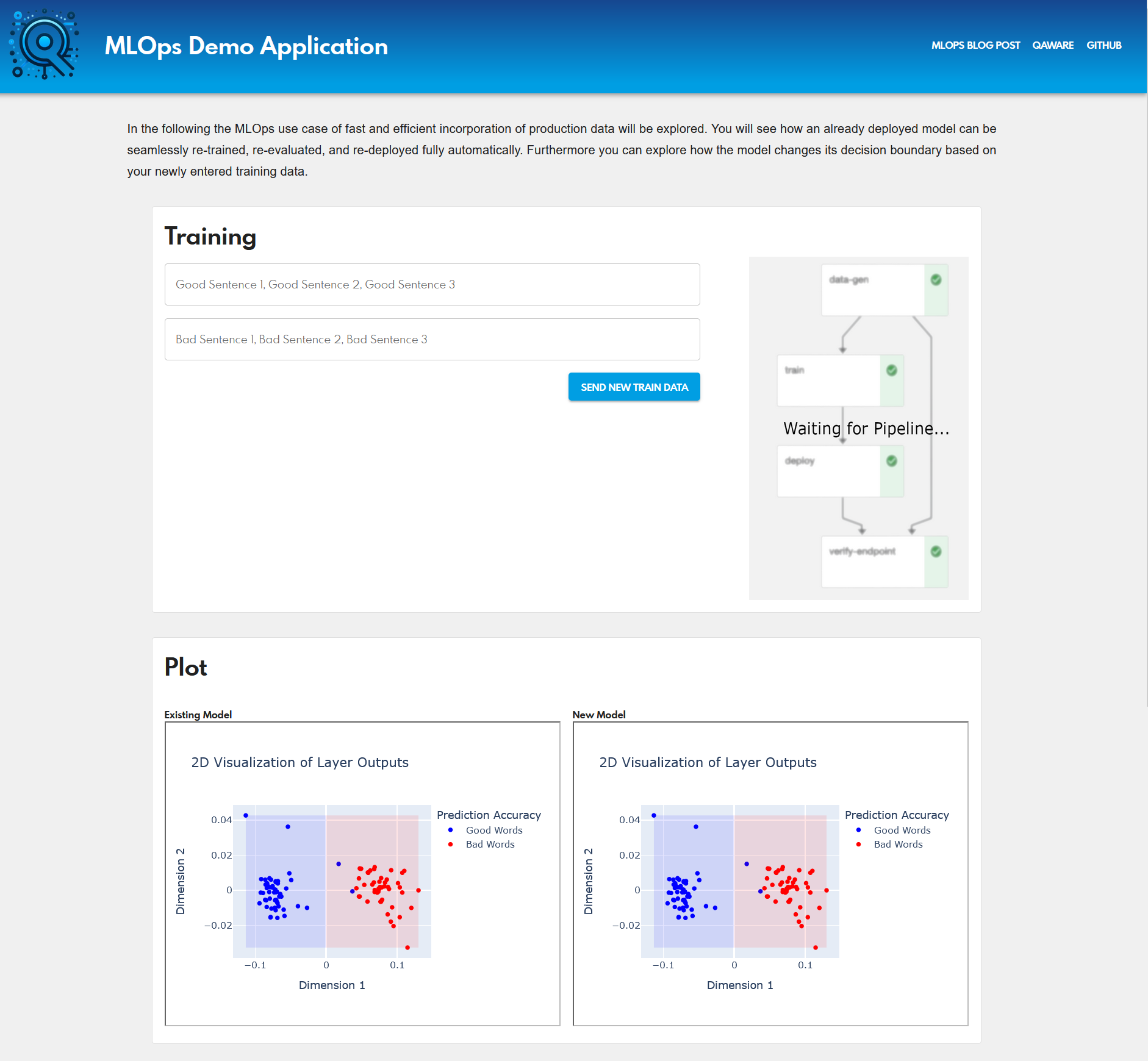

Once the preparation is complete, start the web app by running `npm start` in the `frontend` directory.