A collection of computer vision (CV) implementations using PyTorch and OpenCV. More will be uploaded over time.

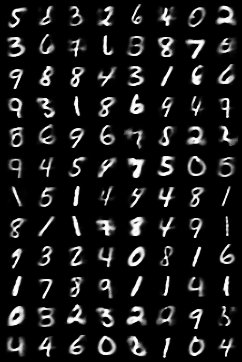

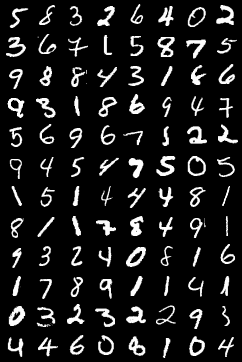

After passing through the autoencoder, the reconstructed images are blurrier than the originals. Image quality seems a little better than with the linear autoencoder.

VAE with a CNN. Unlike the vanilla VAE above, the bottleneck is rather small (Batch_size * 2 * 2). The resulting images clearly show the model struggling to generate a clear image because of the bottleneck.

GANs training over time on MNIST data

Same network architecture as the vanilla GAN, but with a least-squares loss.
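As a rough illustration of what swapping in a least-squares loss can look like, here is a minimal sketch. It assumes the common convention of target 1 for real samples and target 0 for generated ones; the function names `lsgan_d_loss` and `lsgan_g_loss` are hypothetical and may not match the code in this repo.

```python
import torch


def lsgan_d_loss(d_real: torch.Tensor, d_fake: torch.Tensor) -> torch.Tensor:
    # Discriminator: push scores on real samples toward 1 and on fakes toward 0.
    # Assumption: d_real and d_fake are raw (non-sigmoid) discriminator outputs.
    return 0.5 * ((d_real - 1.0) ** 2).mean() + 0.5 * (d_fake ** 2).mean()


def lsgan_g_loss(d_fake: torch.Tensor) -> torch.Tensor:
    # Generator: push the discriminator's scores on fakes toward 1.
    return 0.5 * ((d_fake - 1.0) ** 2).mean()
```

Compared with the binary cross-entropy loss of a vanilla GAN, only these two functions change; the generator and discriminator networks can stay the same.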

Same cost function as the vanilla GAN, but with deep convolutional layers. Produces clearer images than the vanilla GAN.

Same architecture as the DCGAN, but with a least-squares loss.

Generating images with specific labels
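One common way to generate images for a specific label is to feed the label to the generator alongside the noise vector. The sketch below assumes one-hot labels concatenated to a 100-dimensional noise vector; both the helper name `conditional_input` and the noise size are illustrative assumptions, not necessarily what this repo does.

```python
import torch
import torch.nn.functional as F


def conditional_input(noise: torch.Tensor, labels: torch.Tensor,
                      num_classes: int = 10) -> torch.Tensor:
    # Turn integer class labels into one-hot vectors (labels must be int64).
    one_hot = F.one_hot(labels, num_classes=num_classes).to(noise.dtype)
    # Concatenate along the feature dimension so the generator sees both.
    return torch.cat([noise, one_hot], dim=1)


# Usage: ask for a "3" and an "8" (noise size of 100 is an assumption).
z = torch.randn(2, 100)
y = torch.tensor([3, 8])
g_in = conditional_input(z, y)
```

The discriminator is usually conditioned the same way, so that it judges whether an image is both realistic and consistent with the requested label.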

CGAN with a least-squares (LS) loss

For this reconstruction task, MNIST images were cropped to keep only the middle 4 columns of pixel values, and the CVAE model was asked to reconstruct the original image using the cropped images as inputs.

Cropped Images / Reconstructed Images / Original Images
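The cropping step for this task can be sketched as follows. This version assumes the cropped input keeps the original image size and zeroes out everything outside the middle columns; the repo may slice the columns out instead. The helper name `keep_middle_columns` is hypothetical.

```python
import torch


def keep_middle_columns(images: torch.Tensor, width: int = 4) -> torch.Tensor:
    # images: batch of shape (N, C, H, W), e.g. (N, 1, 28, 28) for MNIST.
    w = images.shape[-1]
    start = (w - width) // 2  # for W=28 and width=4 this is column 12
    masked = torch.zeros_like(images)
    # Copy only the middle `width` columns; everything else stays zero.
    masked[..., start:start + width] = images[..., start:start + width]
    return masked


# Usage with a dummy batch standing in for MNIST images.
batch = torch.ones(2, 1, 28, 28)
cropped = keep_middle_columns(batch)
```

The masked batch serves as the conditioning input, while the untouched batch is the reconstruction target.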

Adversarial Autoencoder, which combines an AE with a GAN. This PyTorch implementation uses a VAE instead of a vanilla AE.

The model was trained on the edgeToShoes dataset. Training takes about 6 hours per epoch and uses a little more than 5 GB of GPU memory on my 1080 Ti. The model was trained for only 5 epochs in total, so the results are not great. The GIF is only here to illustrate that training does improve the model over time. Shoes->Edge seems much easier for the model to learn than Edge->Shoes.

Edge / Shoes->Edge / Shoe / Edge->Shoe

Edge / Shoes->Edge / Shoe / Edge->Shoe

Content / Style / Transformed Image

Content / Style / Transformed Image

How changing alpha changes the amount of style that is transferred.
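To make the role of alpha concrete, here is a minimal sketch that assumes an AdaIN-style setup, in which alpha interpolates between the content features and the style-normalized features before decoding. The source does not state which style transfer method is used, so treat `adain` and `blend` as hypothetical helpers.

```python
import torch


def adain(content_feat: torch.Tensor, style_feat: torch.Tensor,
          eps: float = 1e-5) -> torch.Tensor:
    # Per-channel statistics over the spatial dimensions of (N, C, H, W) maps.
    c_mean = content_feat.mean(dim=(2, 3), keepdim=True)
    c_std = content_feat.std(dim=(2, 3), keepdim=True) + eps
    s_mean = style_feat.mean(dim=(2, 3), keepdim=True)
    s_std = style_feat.std(dim=(2, 3), keepdim=True) + eps
    # Normalize the content features, then rescale to the style statistics.
    return s_std * (content_feat - c_mean) / c_std + s_mean


def blend(content_feat: torch.Tensor, style_feat: torch.Tensor,
          alpha: float = 1.0) -> torch.Tensor:
    # alpha = 0 keeps the content features; alpha = 1 applies full style.
    stylized = adain(content_feat, style_feat)
    return alpha * stylized + (1.0 - alpha) * content_feat
```

Under this assumption, intermediate values such as alpha = 0.5 give a partial transfer, because the decoder receives features halfway between the two extremes.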

Turning MNIST digits into an 8.