This repo is the official implementation of "Scale-Aware Modulation Meet Transformer".

- 14 Jul, 2023: Related code for detection and segmentation is coming soon. The paper will be available soon.
- 14 Jul, 2023: SMT is accepted to ICCV 2023!

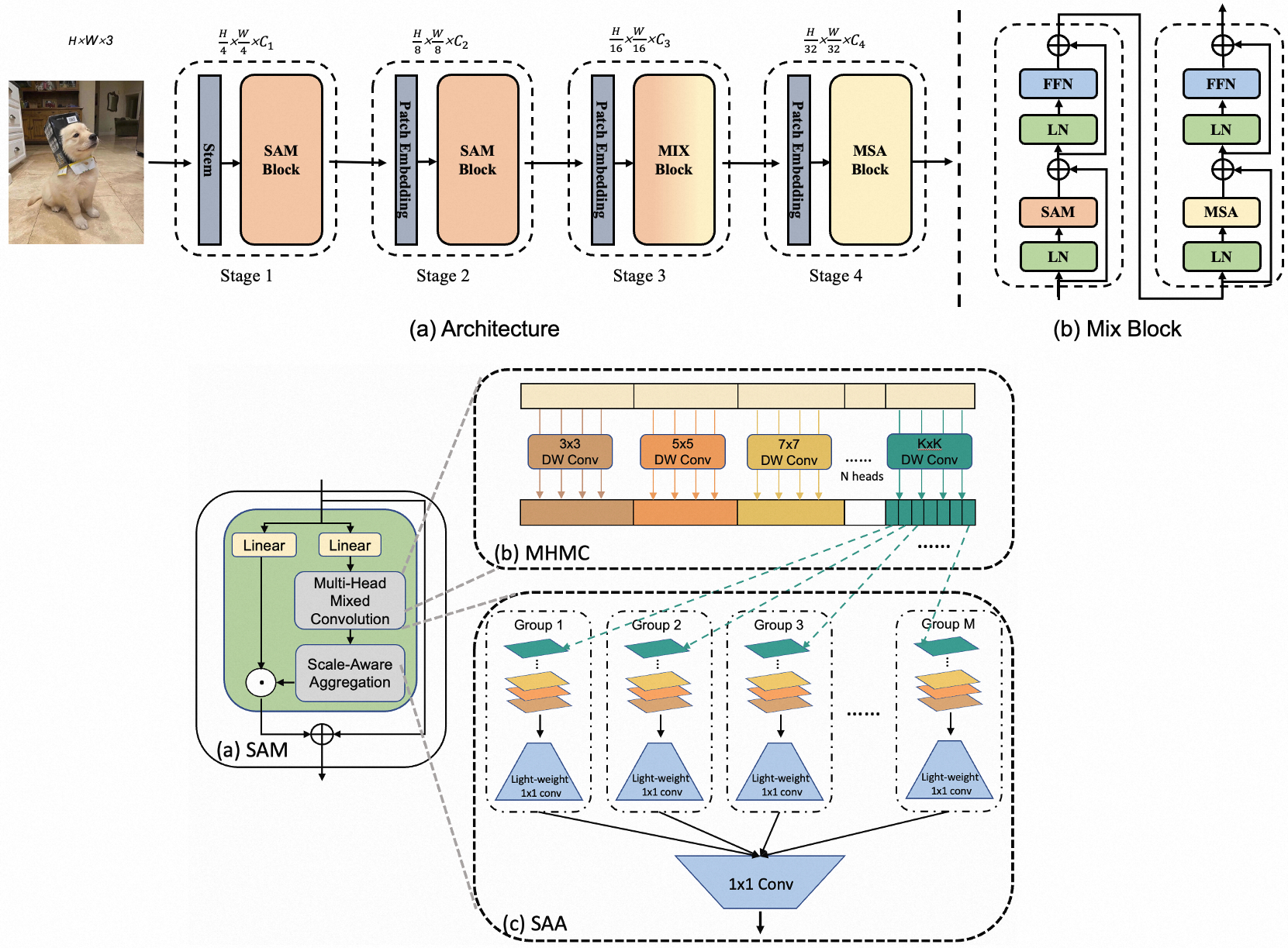

SMT serves as a promising new generic backbone for efficient visual modeling.

It is a new hybrid ConvNet and vision Transformer backbone, which can effectively simulate the transition from local to global dependencies as the network goes deeper, resulting in superior performance over both ConvNets and Transformers.

ImageNet-1K and ImageNet-22K Pretrained SMT Models

| name | pretrain | resolution | acc@1 | acc@5 | #params | FLOPs | 22K model | 1K model |
|---|---|---|---|---|---|---|---|---|
| SMT-T | ImageNet-1K | 224x224 | 82.2 | 96.0 | 12M | 2.4G | - | github/config/ |
| SMT-S | ImageNet-1K | 224x224 | 83.7 | 96.5 | 21M | 4.7G | - | github/config |
| SMT-B | ImageNet-1K | 224x224 | 84.3 | 96.9 | 32M | 7.7G | - | github/config |
| SMT-L | ImageNet-22K | 224x224 | 87.1 | 98.1 | 81M | 17.6G | github/config | github/config |
| SMT-L | ImageNet-22K | 384x384 | 88.1 | 98.4 | 81M | 51.6G | github/config | github/config |
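As a rough sanity check on the two SMT-L rows, the sketch below estimates the 384x384 cost from the 224x224 figure. It assumes FLOPs grow roughly in proportion to the number of input pixels, which is only an approximation for a hybrid backbone. The helper name `scaled_flops` is hypothetical and not part of this repository.

```python
def scaled_flops(base_gflops: float, base_res: int, new_res: int) -> float:
    """Estimate GFLOPs at new_res, assuming cost scales with the pixel count."""
    return base_gflops * (new_res / base_res) ** 2


# SMT-L is listed at 17.6G FLOPs for 224x224 inputs.
estimate = scaled_flops(17.6, 224, 384)
print(f"estimated at 384x384: {estimate:.1f}G (table reports 51.6G)")
```

The estimate lands close to the reported value, which suggests the higher-resolution cost is dominated by the larger input rather than by any architectural change.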

COCO Object Detection (2017 val)

| Backbone | Method | pretrain | Lr Schd | box mAP | mask mAP | #params | FLOPs |
|---|---|---|---|---|---|---|---|
| SMT-S | Mask R-CNN | ImageNet-1K | 3x | 49.0 | 43.4 | 40M | 265G |
| SMT-B | Mask R-CNN | ImageNet-1K | 3x | 49.8 | 44.0 | 52M | 328G |
| SMT-S | Cascade Mask R-CNN | ImageNet-1K | 3x | 51.9 | 44.7 | 78M | 744G |
| SMT-S | RetinaNet | ImageNet-1K | 3x | 47.3 | - | 30M | 247G |
| SMT-S | Sparse R-CNN | ImageNet-1K | 3x | 50.2 | - | 102M | 171G |
| SMT-S | ATSS | ImageNet-1K | 3x | 49.9 | - | 28M | 214G |
| SMT-S | DINO | ImageNet-1K | 4scale | 54.0 | - | 40M | 309G |

ADE20K Semantic Segmentation (val)

| Backbone | Method | pretrain | Crop Size | Lr Schd | mIoU (ss) | mIoU (ms) | #params | FLOPs |
|---|---|---|---|---|---|---|---|---|
| SMT-S | UperNet | ImageNet-1K | 512x512 | 160K | 49.2 | 50.2 | 50M | 935G |
| SMT-B | UperNet | ImageNet-1K | 512x512 | 160K | 49.6 | 50.6 | 62M | 1004G |

- Clone this repo:

git clone https://github.com/Afeng-x/SMT.git

cd SMT

- Create a conda virtual environment and activate it:

conda create -n smt python=3.8 -y

conda activate smt

- Install PyTorch>=1.10.0 with CUDA>=10.2:

pip3 install torch==1.10 torchvision torchaudio --index-url https://download.pytorch.org/whl/cu113

- Install timm==0.4.12:

pip install timm==0.4.12

- Install other requirements:

pip install opencv-python==4.4.0.46 termcolor==1.1.0 yacs==0.1.8 pyyaml scipy ptflops thop

To evaluate a pre-trained SMT on ImageNet val, run:

python -m torch.distributed.launch --nproc_per_node 1 --master_port 12345 main.py --eval \
--cfg configs/smt/smt_base_224.yaml --resume /path/to/ckpt.pth \
--data-path /path/to/imagenet-1k

To train an SMT on ImageNet from scratch, run:

python -m torch.distributed.launch --master_port 4444 --nproc_per_node 8 main.py \
--cfg configs/smt/smt_tiny_224.yaml \
--data-path /path/to/imagenet-1k --batch-size 128

For example, to pre-train an SMT-Large model on ImageNet-22K:

python -m torch.distributed.launch --nproc_per_node 8 --master_port 12345 main.py \
--cfg configs/smt/smt_large_224_22k.yaml --data-path /path/to/imagenet-22k \
--batch-size 128 --accumulation-steps 4

To fine-tune an SMT-Large model pre-trained on ImageNet-22K:

python -m torch.distributed.launch --nproc_per_node 8 --master_port 12345 main.py \
--cfg configs/smt/smt_large_384_22kto1k_finetune.yaml \
--pretrained /path/to/pretrain_ckpt.pth --data-path /path/to/imagenet-1k \
--batch-size 64 [--use-checkpoint]
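When adapting the training commands above to a different number of GPUs, it can help to work out the effective batch size first. The sketch below assumes that `--batch-size` is the per-GPU batch size (as in the Swin Transformer training script this repository builds on) and that each accumulation step processes one per-GPU batch. The helper `effective_batch_size` is hypothetical and not part of this repository.

```python
def effective_batch_size(per_gpu_batch: int, num_gpus: int,
                         accumulation_steps: int = 1) -> int:
    """Samples contributing to one optimizer step (assumes per-GPU --batch-size)."""
    return per_gpu_batch * num_gpus * accumulation_steps


# ImageNet-22K pre-training: --batch-size 128, 8 GPUs, --accumulation-steps 4
pretrain = effective_batch_size(128, 8, 4)
# Fine-tuning: --batch-size 64, 8 GPUs, no accumulation
finetune = effective_batch_size(64, 8)

print(f"pre-training: {pretrain}, fine-tuning: {finetune}")
```

Under these assumptions, halving the GPU count while doubling `--accumulation-steps` keeps the effective batch size unchanged.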

To measure the throughput, run:

python -m torch.distributed.launch --nproc_per_node 1 --master_port 12345 main.py \
--cfg <config-file> --data-path <imagenet-path> --batch-size 64 --throughput --disable_amp

This repository is built on top of the timm library and the official Swin Transformer repository.