This repository is used to teach and provide examples for basic and intermediate concepts regarding Machine Learning and Deep Learning.

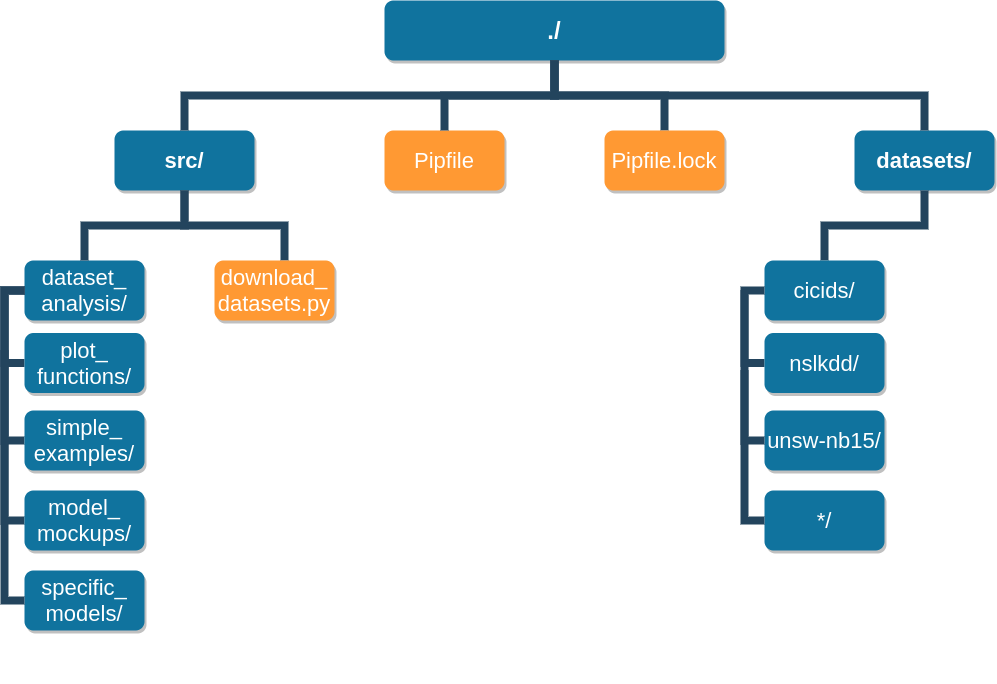

Contains a few examples of common operations performed in almost every project, such as data loading, statistical analysis, simple data pre-processing, visualization, scaling, and feature selection.
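The sketch below illustrates one of these operations: standardizing features to zero mean and unit variance with NumPy. The helper name `standardize` and the toy arrays are hypothetical. The mean and standard deviation are computed on the training data only and then reused for the test data, so no test-set information leaks into pre-processing.

```python
import numpy as np


def standardize(train, test):
    """Z-score scaling using statistics computed on the training split only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    # Replace zero std (constant features) with 1 to avoid division by zero.
    std = np.where(std == 0, 1.0, std)
    return (train - mean) / std, (test - mean) / std


# Hypothetical toy data: 3 training samples and 1 test sample, 2 features each.
x_train = np.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
x_test = np.array([[4.0, 40.0]])
x_train_scaled, x_test_scaled = standardize(x_train, x_test)
```

scikit-learn's `StandardScaler` follows the same pattern: `fit` it on the training data, then `transform` both splits.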

Contains a few implementations of learning models that are classically used for didactic purposes, such as neural networks trained on the MNIST dataset.

A few code snippets for plotting useful figures, such as commonly used activation functions.
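The following is a minimal example of this kind of snippet, assuming NumPy and matplotlib. The selection of functions (sigmoid, tanh, ReLU, Leaky ReLU), the `alpha=0.01` slope and the helper name `plot_activations` are illustrative choices and not necessarily what the repository's scripts use.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def relu(x):
    return np.maximum(0.0, x)


def leaky_relu(x, alpha=0.01):
    # Small slope alpha for negative inputs instead of a hard zero.
    return np.where(x > 0, x, alpha * x)


ACTIVATIONS = {
    "sigmoid": sigmoid,
    "tanh": np.tanh,
    "ReLU": relu,
    "Leaky ReLU": leaky_relu,
}


def plot_activations(x_min=-5.0, x_max=5.0, num=500):
    """Plot every function in ACTIVATIONS on a single set of axes."""
    # Imported lazily so the functions above can be used without matplotlib.
    import matplotlib.pyplot as plt

    x = np.linspace(x_min, x_max, num)
    fig, ax = plt.subplots()
    for name, fn in ACTIVATIONS.items():
        ax.plot(x, fn(x), label=name)
    ax.axhline(0.0, color="gray", linewidth=0.5)
    ax.axvline(0.0, color="gray", linewidth=0.5)
    ax.set_xlabel("x")
    ax.set_ylabel("f(x)")
    ax.legend()
    plt.show()
```

Calling `plot_activations()` opens one figure containing all four curves.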

Learning models applied to well-known datasets, usually from the computer networks field.

Scripts developed to analyse real, specific datasets.

Download the following datasets (.csv files only): CICIDS, NSL-KDD and UNSW-NB15.

When downloading UNSW-NB15, "-1 / unknown" may be printed in the terminal. This is not an error; it happens because wget cannot estimate the remaining time for large files. Wait until the program stops running.

Some example files were taken directly from the corresponding library's documentation and may contain code that is not appropriate for hyperparameter tuning, in particular some examples from the Keras documentation.

As pointed out in this issue, some examples use a test set (named as such) for validation. Although that code is not used for hyperparameter tuning, mixing the test and validation nomenclature should have been avoided, and this is currently being addressed (as of May 7th, 2020). Be aware that hyperparameter tuning requires setting the test set aside and using it only after the model has been fully tuned.

## This should be avoided:

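```python
from keras.datasets import cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

# ...

model.fit_generator(datagen.flow(x_train, y_train,
                                 batch_size=batch_size),
                    epochs=epochs,
                    # Problem: the test set is used as validation data.
                    validation_data=(x_test, y_test),
                    workers=4)

# ...

# The same test set is then used again for the final evaluation.
scores = model.evaluate(x_test, y_test, verbose=1)
```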
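One way to avoid this pattern is to carve a validation subset out of the training data and keep the test set untouched until tuning is finished. The sketch below assumes the data are NumPy arrays (as returned by `cifar10.load_data()`); the helper name `split_validation`, the 20% fraction and the toy arrays are hypothetical.

```python
import numpy as np


def split_validation(x_train, y_train, val_frac=0.1, seed=0):
    """Hold out a random validation subset from the training data.

    The test set is never touched here; it stays reserved for the final
    evaluation, after all hyperparameters have been chosen.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x_train))
    n_val = int(len(x_train) * val_frac)
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return ((x_train[train_idx], y_train[train_idx]),
            (x_train[val_idx], y_train[val_idx]))


# Toy stand-ins for the training arrays: row i of x_train is [2i, 2i + 1].
x_train = np.arange(100).reshape(50, 2)
y_train = np.arange(50)
(x_tr, y_tr), (x_val, y_val) = split_validation(x_train, y_train, val_frac=0.2)

# With a Keras model, (x_val, y_val) rather than (x_test, y_test) would be
# passed as validation_data, and model.evaluate(x_test, y_test) would be
# called only once, after tuning is finished.
```

For in-memory arrays, Keras's `Model.fit` also accepts a `validation_split` argument that holds out the last fraction of the training samples for validation.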