Densely Connected CNN with Multi-scale Feature Attention for Text Classification

Densely Connected CNN with Multi-scale Feature Attention for Text Classification was first described in an IJCAI-ECAI 2018 paper. It proposes a new CNN architecture that produces variable n-gram features. It is worth noting that:

- It uses dense connections to build shortcut paths between upstream and downstream convolutional blocks. These paths let the model compose larger-scale features from smaller-scale ones and thus produce variable n-gram features.

- A multi-scale feature attention mechanism is developed to adaptively select features of different scales for classification.

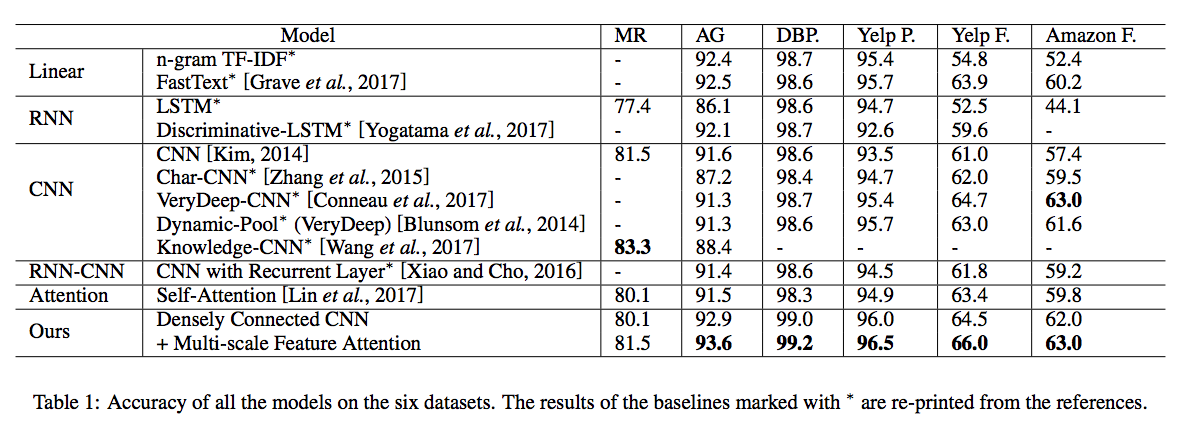

- It obtains competitive performance against state-of-the-art baselines on six benchmark datasets.
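The two ideas in the list above, scale growth through stacked convolutions and attention over scales, can be illustrated with a small dependency-free sketch. It assumes stride-1, undilated 1-D convolutions of a single kernel width stacked with DenseNet-style connections, and it scores each scale with a plain dot product against a hypothetical learned `query` vector. The paper's actual scoring function and layer configuration may differ, and none of these function names come from the repository.

```python
import math
from typing import List, Tuple


def receptive_fields(num_layers: int, kernel_width: int) -> List[int]:
    """Receptive field (in tokens) of the embeddings and of each conv layer's output.

    Assumes stride-1, undilated 1-D convolutions of one kernel width. Each layer
    widens the field by kernel_width - 1 tokens. With dense connections the block
    output concatenates all of these, so every scale stays available downstream.
    """
    fields = [1]  # word embeddings see a single token (unigram)
    for _ in range(num_layers):
        fields.append(fields[-1] + kernel_width - 1)
    return fields


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_over_scales(
    scale_features: List[List[float]], query: List[float]
) -> Tuple[List[float], List[float]]:
    """Generic soft attention over scales (a stand-in, not the paper's exact form).

    scale_features holds one equal-length feature vector per scale. Each scale is
    scored by a dot product with a hypothetical learned query vector; the scores
    are normalised with softmax and used to take a weighted sum of the features.
    """
    scores = [sum(f * q for f, q in zip(feat, query)) for feat in scale_features]
    weights = softmax(scores)
    dim = len(scale_features[0])
    fused = [
        sum(w * feat[i] for w, feat in zip(weights, scale_features))
        for i in range(dim)
    ]
    return weights, fused


if __name__ == "__main__":
    print(receptive_fields(num_layers=3, kernel_width=3))  # [1, 3, 5, 7]
    weights, fused = attend_over_scales([[1.0, 0.0], [0.0, 1.0]], query=[2.0, 0.0])
    print(weights, fused)
```

With three layers of width 3, the block output carries 1-, 3-, 5- and 7-gram features side by side; the attention step then decides how much each scale contributes to the final representation.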

If you find these models useful in your research, please consider citing:

@inproceedings{Wang2018Densely,
  title={Densely Connected CNN with Multi-scale Feature Attention for Text Classification},
  author={Wang, Shiyao and Huang, Minlie and Deng, Zhidong},
  booktitle={IJCAI-ECAI 2018, Stockholm, Sweden},
  year={2018}
}

- Please clone the Densely-Connected-CNN-with-Multiscale-Feature-Attention repository. We refer to the cloned directory as `${DenseAttention_ROOT}`.

- Please clone Caffe from caffe_for_text and build it. It is a modified version of the official Caffe repository.

- Please download the text classification benchmark datasets released by Zhang et al., 2015. AGNews, stored in `${DenseAttention_ROOT}/data/ag_news_csv`, is included in this repo as an example dataset.

- Please download the pretrained word vectors from GloVe.

- Prepare the training and testing data from the original datasets and the pretrained word vectors, using the tools in `${DenseAttention_ROOT}/data/gen_data.py`.

- Generate the training and testing prototxt files using the tools in `${DenseAttention_ROOT}/script/gen_model.py`.

- To perform experiments, run the script with the corresponding config file as input. For example, to train and test, run `cd ${DenseAttention_ROOT}/experiment` followed by `./train.sh`. This example uses 4 NVIDIA Titan X Pascal GPUs, and the trained model and logs are saved in `${DenseAttention_ROOT}/experiment`.

- Please find more details in the config files and in our code.
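As a rough illustration of the data-preparation step, the following dependency-free sketch reads AGNews-style CSV rows (class index, title, description) and GloVe's plain-text vectors, and turns each sample into a fixed-length sequence of word ids. It is not the repository's `gen_data.py`: the function names, the lowercase/whitespace tokenization, `max_len`, and the reserved padding and unknown ids are all assumptions made for illustration.

```python
import csv
import io
from typing import Dict, Iterable, List, Tuple


def read_ag_news(rows: Iterable[str]) -> List[Tuple[int, str]]:
    """Parse AGNews-style CSV rows ("class","title","description") into
    (label, text) pairs, shifting the 1-based class index to 0-based."""
    samples = []
    for row in csv.reader(rows):
        label = int(row[0]) - 1
        text = " ".join(row[1:])  # title and description joined with a space
        samples.append((label, text))
    return samples


def read_glove(lines: Iterable[str]) -> Dict[str, List[float]]:
    """Parse GloVe's plain-text format: a word followed by space-separated floats."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        if len(parts) > 1:  # skip blank lines
            vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def encode(
    text: str, vocab: Dict[str, int], max_len: int, pad_id: int = 0, unk_id: int = 1
) -> List[int]:
    """Lowercase, split on whitespace, map to ids, then truncate/pad to max_len.

    pad_id and unk_id are hypothetical reserved ids chosen for this sketch.
    """
    ids = [vocab.get(tok, unk_id) for tok in text.lower().split()]
    ids = ids[:max_len]
    return ids + [pad_id] * (max_len - len(ids))


if __name__ == "__main__":
    # Made-up, in-memory stand-ins for a dataset row and two GloVe lines.
    csv_text = '"3","Example title","An example description."\n'
    glove_text = "example 0.1 0.2\ntitle 0.3 0.4\n"
    samples = read_ag_news(io.StringIO(csv_text))
    glove = read_glove(io.StringIO(glove_text))
    # ids 0 and 1 are reserved for padding and unknown words (hypothetical choice)
    vocab = {word: i + 2 for i, word in enumerate(glove)}
    label, text = samples[0]
    print(label, encode(text, vocab, max_len=8))
```

A complete pipeline would also build the embedding matrix from `glove` in the same id order and write the result in whatever format the modified Caffe expects; this sketch stops before that point.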
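Similarly, the dense connectivity can be written out as Caffe prototxt. The sketch below is a hypothetical generator, not the repository's `gen_model.py`. It assumes the embedded sentence is stored in a blob named `embed` with the embedding dimensions as channels (N × D × L × 1), uses only the standard Caffe `Convolution`, `ReLU` and `Concat` layer types, and sets `pad_h` so that odd kernel heights keep the sequence length unchanged. The layer names and the `growth` parameter (output channels per conv layer) are illustrative, and the modified Caffe in caffe_for_text may use different layouts or custom layers.

```python
from typing import List, Tuple


def conv_relu(name: str, bottom: str, num_output: int, kernel_h: int) -> str:
    """A Convolution layer followed by an in-place ReLU."""
    pad_h = (kernel_h - 1) // 2  # "same" padding along the sequence for odd kernel_h
    return (
        f'layer {{\n  name: "{name}"\n  type: "Convolution"\n'
        f'  bottom: "{bottom}"\n  top: "{name}"\n'
        f"  convolution_param {{\n    num_output: {num_output}\n"
        f"    kernel_h: {kernel_h}\n    kernel_w: 1\n"
        f"    pad_h: {pad_h}\n    pad_w: 0\n  }}\n}}\n"
        f'layer {{\n  name: "{name}_relu"\n  type: "ReLU"\n'
        f'  bottom: "{name}"\n  top: "{name}"\n}}\n'
    )


def concat(name: str, bottoms: List[str]) -> str:
    """Concatenate blobs along the channel axis (axis 1)."""
    bottom_lines = "".join(f'  bottom: "{b}"\n' for b in bottoms)
    return (
        f'layer {{\n  name: "{name}"\n  type: "Concat"\n{bottom_lines}'
        f'  top: "{name}"\n  concat_param {{\n    axis: 1\n  }}\n}}\n'
    )


def dense_block(
    input_blob: str, num_layers: int, growth: int, kernel_h: int = 3
) -> Tuple[str, str]:
    """Return (prototxt text, name of the block's output blob)."""
    parts = []
    features = [input_blob]  # every blob produced so far, starting with the input
    current = input_blob     # input of the next conv layer
    for i in range(1, num_layers + 1):
        conv = f"conv{i}"
        parts.append(conv_relu(conv, current, growth, kernel_h))
        features.append(conv)
        current = f"concat{i}"
        # The next conv layer sees the input plus all earlier conv outputs.
        parts.append(concat(current, features))
    return "".join(parts), current


if __name__ == "__main__":
    text, top = dense_block("embed", num_layers=2, growth=100)
    print(text)
    print("block output:", top)
```

Feeding each `Concat` into the next `Convolution` is what makes the connectivity dense: layer i reads the embeddings plus every earlier layer's output. The price is that the channel count of each `Concat` grows linearly with depth (D + i × `growth`).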