🏆 The 1st Place Solution to The 8th NVIDIA AI City Challenge (2024) Track 2: CityLLaVA: Efficient Fine-Tuning for VLMs in City Scenario.

| TeamName | MRR Score | Rank |
|---|---|---|
| AliOpenTrek(Ours) | 33.4308 | 1 |
| AIO_ISC | 32.8877 | 2 |
| Lighthouse | 32.3006 | 3 |

- Install Package

conda create -n cityllava python=3.10 -y

conda activate cityllava

cd AICITY2024_Track2_AliOpenTrek_CityLLaVA/

pip install --upgrade pip # enable PEP 660 support

pip install -e .

pip install flash-attn --no-build-isolation

First, change to the data_preprocess directory and create the data directory.

cd data_preprocess

mkdir ./data

Download the wts-dataset and put it under ./data. After unzipping the datasets, the directory structure should look like this:

.

├── data

│ ├── BDD_PC_5k

│ │ ├── annotations

│ │ │ ├── bbox_annotated

│ │ │ └── caption

│ │ ├── bbox_global # BDD global views

│ │ │ ├── train

│ │ │ └── val

│ │ ├── bbox_local # BDD local views

│ │ │ ├── train

│ │ │ └── val

│ │ └── videos

│ ├── WTS

│ │ ├── annotations

│ │ │ ├── bbox_annotated

│ │ │ ├── bbox_generated

│ │ │ └── caption

│ │ ├── bbox_global # WTS global views

│ │ │ ├── train

│ │ │ └── val

│ │ ├── bbox_local # WTS local views

│ │ │ ├── train

│ │ │ └── val

│ │ └── videos

│ └── test_part

│ ├── WTS_DATASET_PUBLIC_TEST

│ │ ├──bbox_global/test/public # WTS Test Images

│ │ ├──bbox_local/test/public

│ │ └──external/BDD_PC_5K

│ │ ├──bbox_global/test/public # BDD Test Images

│ │ └──bbox_local/test/public

│ └── WTS_DATASET_PUBLIC_TEST_BBOX

├── processed_anno

│ ├── frame_bbox_anno

│ │ ├── bdd_test_all_video_with_bbox_anno_first_frame.json

│ │ ├── bdd_train_all_video_with_bbox_anno_first_frame.json

│ │ ├── bdd_val_all_video_with_bbox_anno_first_frame.json

│ │ ├── wts_test_all_video_with_bbox_anno_first_frame.json

│ │ ├── wts_train_all_video_with_bbox_anno_first_frame.json

│ │ └── wts_val_all_video_with_bbox_anno_first_frame.json

│ ├── llava_format

│ │ ├── wts_bdd_train.json

│ │ └── wts_bdd_val.json

│ ├──best_view_for_test.json

│ └──perspective_test_images.json

└── ... # python and shell scripts
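Before processing the annotations, it can help to confirm that the unzipped data matches the tree above. The following is a minimal sketch, not part of the repository: the helper name `missing_dirs` and the list `EXPECTED_DIRS` are hypothetical, and the list covers only the directories under ./data shown in the tree.

```python
from pathlib import Path

# Directories copied from the tree above, relative to data_preprocess/.
# This list is an illustration; extend it if your copy of the dataset differs.
EXPECTED_DIRS = [
    "data/BDD_PC_5k/annotations/bbox_annotated",
    "data/BDD_PC_5k/annotations/caption",
    "data/BDD_PC_5k/bbox_global",
    "data/BDD_PC_5k/bbox_local",
    "data/BDD_PC_5k/videos",
    "data/WTS/annotations/bbox_annotated",
    "data/WTS/annotations/bbox_generated",
    "data/WTS/annotations/caption",
    "data/WTS/bbox_global",
    "data/WTS/bbox_local",
    "data/WTS/videos",
    "data/test_part/WTS_DATASET_PUBLIC_TEST",
    "data/test_part/WTS_DATASET_PUBLIC_TEST_BBOX",
]


def missing_dirs(root=".", expected=EXPECTED_DIRS):
    """Return the expected directories that do not exist under `root`."""
    root = Path(root)
    return [d for d in expected if not (root / d).is_dir()]
```

Calling `missing_dirs()` from inside data_preprocess returns an empty list when every listed directory is present, and otherwise the relative paths that still need to be downloaded or unzipped.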

Then run the following script to process the annotations:

bash prepare_data.sh

The processed annotations can then be found under ./processed_anno, and the training JSON is:

'./data/processed_anno/llava_format/wts_bdd_llava_qa_train_stage_filted_checked.json'
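A quick sanity check of the generated training file can catch preprocessing problems before fine-tuning. The sketch below assumes the file follows the usual LLaVA convention, that is, a JSON list of samples where each sample carries an `image` field and a `conversations` list. That layout is an assumption here, not something this README states, and `summarize_llava_json` is a hypothetical helper name.

```python
import json
from collections import Counter


def summarize_llava_json(path):
    """Summarize a LLaVA-style annotation file (assumed: a JSON list of dicts)."""
    with open(path, "r", encoding="utf-8") as f:
        samples = json.load(f)

    turns = Counter()       # number of conversation turns -> sample count
    missing_image = 0       # samples without an "image" field
    for sample in samples:
        turns[len(sample.get("conversations", []))] += 1
        if "image" not in sample:
            missing_image += 1

    return {
        "num_samples": len(samples),
        "turns_histogram": dict(turns),
        "missing_image": missing_image,
    }
```

Pass the training JSON path given above to `summarize_llava_json` and check that the sample count is non-zero and that no sample lacks an image reference.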

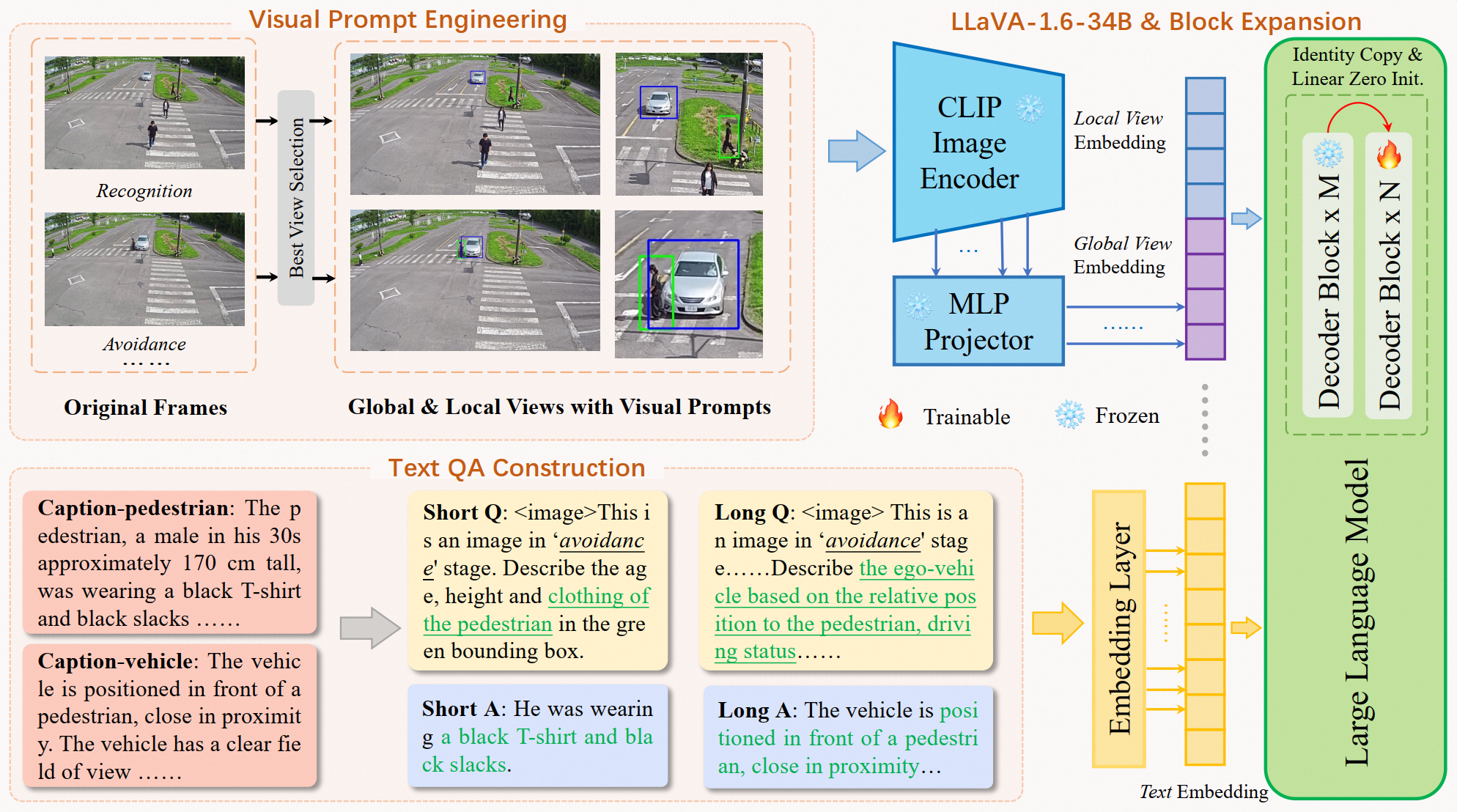

We use [block expansion](https://github.com/TencentARC/LLaMA-Pro.git) to fine-tune the VLMs. We suggest 8 to 16 blocks to balance performance and efficiency. We add 12 blocks to the original llava-1.6-34b. The llava-1.6-34b-12block model can be created with these steps:

- Download the llava-1.6-34b model to ./models, and add the blocks with this script:

python block_expansion_llava_1_6.py

- Copy the *.json and tokenizer.model from ./models/llava-v1.6-34b to ./models/llava-v1.6-34b-12block;

- Modify num_hidden_layers=72 (new_layer_nums = original_layer_nums + block_layer_nums) in config.json of the llava-1.6-34b-12block model.
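The copy and config steps above can be sketched in Python. This is an illustration under stated assumptions, not the repository's code: `expansion_plan` and `prepare_expanded_dir` are hypothetical helpers, `num_hidden_layers` is assumed to be a top-level key in config.json, and the interleaving rule (one new block after each equal-sized group of original layers) follows the LLaMA-Pro convention, which block_expansion_llava_1_6.py may or may not implement exactly. With 12 added blocks and a target of 72 layers, the original model has 60 layers.

```python
import json
import shutil
from pathlib import Path


def expansion_plan(num_original, num_added):
    """List the expanded layer stack as (kind, source_layer_index) pairs.

    Assumes the LLaMA-Pro convention: the original layers are split into
    `num_added` equal groups and one new block (a copy of the group's last
    layer) is placed after each group.
    """
    if num_added <= 0 or num_original % num_added != 0:
        raise ValueError("num_original must be a positive multiple of num_added")
    group = num_original // num_added
    plan = []
    for i in range(num_original):
        plan.append(("original", i))
        if (i + 1) % group == 0:
            plan.append(("new", i))
    return plan


def prepare_expanded_dir(src_dir, dst_dir, num_added):
    """Copy *.json and tokenizer.model, then set num_hidden_layers in dst."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for f in list(src.glob("*.json")) + [src / "tokenizer.model"]:
        target = dst / f.name
        # Do not overwrite files that already exist in the expanded directory.
        if f.is_file() and not target.exists():
            shutil.copy2(f, target)

    # Always derive the new depth from the original config, so reruns are safe.
    src_cfg = json.loads((src / "config.json").read_text(encoding="utf-8"))
    dst_cfg_path = dst / "config.json"
    dst_cfg = json.loads(dst_cfg_path.read_text(encoding="utf-8"))
    dst_cfg["num_hidden_layers"] = src_cfg["num_hidden_layers"] + num_added
    dst_cfg_path.write_text(json.dumps(dst_cfg, indent=2), encoding="utf-8")
    return dst_cfg["num_hidden_layers"]
```

For example, `prepare_expanded_dir("./models/llava-v1.6-34b", "./models/llava-v1.6-34b-12block", 12)` would write `num_hidden_layers` as the original depth plus 12, and `len(expansion_plan(60, 12))` gives the same total of 72.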

We use 8xA100 GPUs for fine-tuning. Training takes approximately 8 hours with this script:

bash scripts/finetune_block_bigsmall.sh

The fine-tuned model can be downloaded here.

First, check the parameters defined in ./scripts/inference.sh and ensure that all essential files exist.

Note that you should modify the path on line 8 of ./llava/serve/batch_inference_block.py (sys.path.append).
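As an alternative to editing a hard-coded path, the path can be derived from the script's own location. The sketch below is a suggestion, not the repository's code: `add_repo_root` is a hypothetical helper, and it assumes the appended path is meant to be the repository root so that the `llava` package can be imported.

```python
import sys
from pathlib import Path


def add_repo_root(script_path, levels_up=2):
    """Append the directory `levels_up` levels above the script's folder to sys.path.

    For <repo>/llava/serve/batch_inference_block.py, levels_up=2 resolves
    to <repo> (assumed here to be the directory that should be importable).
    """
    root = Path(script_path).resolve().parents[levels_up]
    root_str = str(root)
    if root_str not in sys.path:
        sys.path.append(root_str)
    return root
```

Inside batch_inference_block.py this would be called as `add_repo_root(__file__)` before the `llava` imports, which avoids machine-specific paths.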

Now you can do inference on WTS_TEST_SET:

bash scripts/inference.sh

We use the wts-dataset for evaluation.

If you find CityLLaVA useful for your research and applications, please cite using this BibTeX:

@article{duan2024cityllava,

title={CityLLaVA: Efficient Fine-Tuning for VLMs in City Scenario},

url={https://github.com/qingchunlizhi/AICITY2024_Track2_AliOpenTrek_CityLLaVA},

author={Zhizhao Duan and Hao Cheng and Duo Xu and Xi Wu and Xiangxie Zhang and Xi Ye and Zhen Xie},

month={April},

year={2024}

}