Guanjun Wu 1*, Taoran Yi 2*,

Jiemin Fang 3‡, Lingxi Xie 3 ,

Xiaopeng Zhang 3 , Wei Wei 1 ,Wenyu Liu 2 , Qi Tian 3 , Xinggang Wang 2‡✉

1 School of CS, HUST 2 School of EIC, HUST 3 Huawei Inc.

* Equal Contributions. ‡ Project Lead. ✉ Corresponding Author.

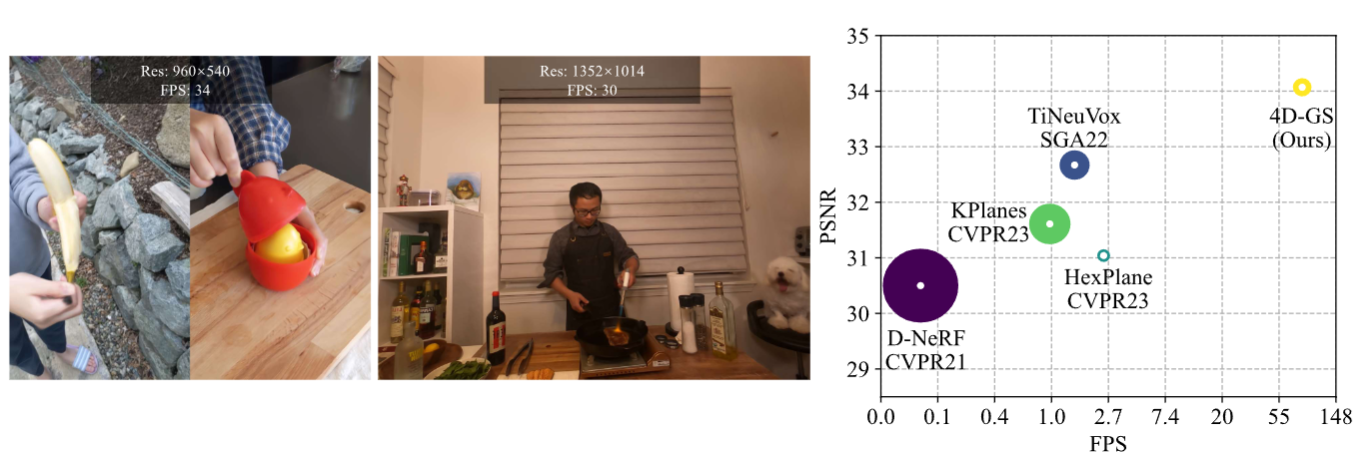

Our method converges very quickly and achieves real-time rendering speed.


New Colab demo: (Thanks Tasmay-Tibrewal.)

Old Colab demo: (Thanks camenduru.)

Light Gaussian implementation: This link (Thanks pablodawson)

2024.6.25: We cleaned the code and added an explanation of the parameters.

2024.3.25: Update guidance for hypernerf and dynerf dataset.

2024.03.04: We change the hyperparameters of the Neu3D dataset, corresponding to our paper.

2024.02.28: Update SIBR viewer guidance.

2024.02.27: Accepted by CVPR 2024. We deleted some logging settings used for debugging; the corrected training time is only 8 mins (previously 20 mins) on the D-NeRF datasets and 30 mins (previously 1 hour) on the HyperNeRF datasets. The rendering quality is not affected.

Please follow the 3D-GS instructions to install the required packages.

git clone https://github.com/hustvl/4DGaussians

cd 4DGaussians

git submodule update --init --recursive

conda create -n Gaussians4D python=3.7

conda activate Gaussians4D

pip install -r requirements.txt

pip install -e submodules/depth-diff-gaussian-rasterization

pip install -e submodules/simple-knn

In our environment, we use pytorch=1.13.1+cu116.
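To compare a local install against the version quoted above, a minimal sketch follows. The helper name `split_torch_version` is hypothetical, and it only assumes that PyTorch version strings follow the `<release>+<build tag>` pattern seen in `1.13.1+cu116`.

```python
def split_torch_version(version):
    """Split a PyTorch version string into (release, build_tag).

    build_tag is None when the string has no "+<tag>" suffix.
    """
    release, _, build_tag = version.partition("+")
    return release, build_tag or None


if __name__ == "__main__":
    try:
        import torch  # only needed for the live check below
    except ImportError:
        print("PyTorch is not installed in this environment.")
    else:
        release, build_tag = split_torch_version(torch.__version__)
        print("release:", release, "build tag:", build_tag)
        # Compare with the environment mentioned in this README.
        print("matches 1.13.1+cu116:", (release, build_tag) == ("1.13.1", "cu116"))
```

Other versions may work as well; the check only reports whether the local install differs from the one used here.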

For synthetic scenes: The dataset provided in D-NeRF is used. You can download the dataset from dropbox.

#!/bin/bash

# Set the download URL

URL="https://www.dropbox.com/scl/fi/cdcmkufncwcikk1dzbgb4/data.zip?rlkey=n5m21i84v2b2xk6h7qgiu8nkg&e=1&st=73y3kcei&dl=1"

# Set the download directory (adjust to your own path)

DOWNLOAD_DIR="/home/qingpo.wuwu1/Project_2_3dGS_Cars/datasets/3_4DGS/dner"

# Set the output file name

OUTPUT_FILE="$DOWNLOAD_DIR/data.zip"

# Make sure the download directory exists

mkdir -p "$DOWNLOAD_DIR"

# Download the file with wget

wget --continue -O "$OUTPUT_FILE" "$URL"

# Check whether the download succeeded

if [ $? -eq 0 ]; then

echo "Download succeeded: $OUTPUT_FILE"

else

echo "Download failed"

fi

For real dynamic scenes: The dataset provided in HyperNeRF is used. You can download scenes from the HyperNeRF Dataset and organize them following Nerfies.

#!/bin/bash

# Set the proxy (including credentials)

export http_proxy="http://username:password@127.0.0.1:7890"

export https_proxy="http://username:password@127.0.0.1:7890"

# Change to the target directory

cd /home/qingpo.wuwu1/Project_2_3dGS_Cars/datasets/3_4DGS/hypernerf

# Create a file containing all URLs

cat << EOF > urls.txt

https://github.com/google/hypernerf/releases/download/v0.1/interp_aleks-teapot.zip

https://github.com/google/hypernerf/releases/download/v0.1/interp_chickchicken.zip

https://github.com/google/hypernerf/releases/download/v0.1/interp_cut-lemon.zip

https://github.com/google/hypernerf/releases/download/v0.1/interp_hand.zip

https://github.com/google/hypernerf/releases/download/v0.1/interp_slice-banana.zip

https://github.com/google/hypernerf/releases/download/v0.1/interp_torchocolate.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_americano.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_cross-hands.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_espresso.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_keyboard.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_oven-mitts.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_split-cookie.zip

https://github.com/google/hypernerf/releases/download/v0.1/misc_tamping.zip

https://github.com/google/hypernerf/releases/download/v0.1/vrig_3dprinter.zip

https://github.com/google/hypernerf/releases/download/v0.1/vrig_broom.zip

https://github.com/google/hypernerf/releases/download/v0.1/vrig_chicken.zip

https://github.com/google/hypernerf/releases/download/v0.1/vrig_peel-banana.zip

https://github.com/google/hypernerf/archive/refs/tags/v0.1.zip

https://github.com/google/hypernerf/archive/refs/tags/v0.1.tar.gz

EOF

# Download in parallel with GNU Parallel, showing progress

parallel --bar --eta --progress \
-j 4 \
wget -c --tries=10 --wait=5 --progress=bar:force {} :::: urls.txt

# Delete the URL file once the downloads finish

rm urls.txt

Meanwhile, the Plenoptic Dataset can be downloaded from its official website. To save memory, extract the frames of each video and then organize your dataset as follows.

├── data
│   | dnerf
│     ├── mutant
│     ├── standup
│     ├── ...
│   | hypernerf
│     ├── interp
│     ├── misc
│     ├── virg
│   | dynerf
│     ├── cook_spinach
│       ├── cam00
│           ├── images
│               ├── 0000.png
│               ├── 0001.png
│               ├── 0002.png
│               ├── ...
│       ├── cam01
│           ├── images
│               ├── 0000.png
│               ├── 0001.png
│               ├── ...
│     ├── cut_roasted_beef
│     ├── ...
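Before training on a dynerf scene, it can help to confirm that the extracted frames match the layout above. The following is a minimal sketch, not part of the repository: the function name `check_dynerf_scene` is hypothetical, and it assumes camera folders are named `cam*` and frames are four-digit `.png` files, as in the tree.

```python
import re
from pathlib import Path

# Frame names such as 0000.png, following the tree above (an assumption).
FRAME_NAME = re.compile(r"^\d{4}\.png$")


def check_dynerf_scene(scene_dir):
    """Return a list of problems found in one dynerf scene folder (empty if none)."""
    scene = Path(scene_dir)
    problems = []
    # Only directories count as cameras; files such as raw videos are skipped.
    cams = sorted(p for p in scene.glob("cam*") if p.is_dir())
    if not cams:
        problems.append("no cam* folders in {}".format(scene))
    for cam in cams:
        images = cam / "images"
        if not images.is_dir():
            problems.append("{}: missing images/ folder".format(cam.name))
            continue
        frames = [p.name for p in images.iterdir() if FRAME_NAME.match(p.name)]
        if not frames:
            problems.append("{}: no NNNN.png frames in images/".format(cam.name))
    return problems


if __name__ == "__main__":
    for problem in check_dynerf_scene("data/dynerf/cook_spinach"):
        print(problem)
```

An empty result means every camera folder has an `images` subfolder with at least one frame; it does not check that all cameras have the same number of frames.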

For multipleviews scenes: If you want to train on your own multipleviews dataset, organize it as follows:

├── data
│   | multipleview
│     | (your dataset name)
│       | cam01
│         ├── frame_00001.jpg
│         ├── frame_00002.jpg
│         ├── ...
│       | cam02
│         ├── frame_00001.jpg
│         ├── frame_00002.jpg
│         ├── ...
│       | ...

After that, you can use the multipleviewprogress.sh script we provide to generate the pose and point cloud data. Use it as follows:

bash multipleviewprogress.sh (your dataset name)

Make sure the data folder is organized as follows after running multipleviewprogress.sh:

├── data
│   | multipleview
│     | (your dataset name)
│       | cam01
│         ├── frame_00001.jpg
│         ├── frame_00002.jpg
│         ├── ...
│       | cam02
│         ├── frame_00001.jpg
│         ├── frame_00002.jpg
│         ├── ...
│       | ...
│       | sparse_
│         ├── cameras.bin
│         ├── images.bin
│         ├── ...
│       | points3D_multipleview.ply
│       | poses_bounds_multipleview.npy
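The expected result of multipleviewprogress.sh can be checked with a few lines of Python. This is a minimal sketch under the assumption that the three generated entries sit directly inside the dataset folder, as the tree above shows; the function name `missing_multipleview_outputs` is hypothetical.

```python
from pathlib import Path

# Entries expected after running multipleviewprogress.sh, per the tree above.
EXPECTED_ENTRIES = (
    "sparse_",
    "points3D_multipleview.ply",
    "poses_bounds_multipleview.npy",
)


def missing_multipleview_outputs(dataset_dir):
    """Return the expected entries that are absent from a multipleview dataset folder."""
    root = Path(dataset_dir)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


if __name__ == "__main__":
    # Replace the placeholder with your own dataset name.
    missing = missing_multipleview_outputs("data/multipleview/(your dataset name)")
    print("missing entries:", missing if missing else "none")
```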

For training synthetic scenes such as bouncingballs, run

python train.py -s data/dnerf/bouncingballs --port 6017 --expname "dnerf/bouncingballs" --configs arguments/dnerf/bouncingballs.py

This command generates the /point_cloud/iteration_{num}/point_cloud.ply file and the cfg_args file, along with the deformation.pth, deformation_table.pth, and deformation_accum.pth files.
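To train several synthetic scenes in a row, the command above can be assembled programmatically. The sketch below uses only the flags shown in this README; the helper name `build_train_command` is hypothetical, and it assumes each scene has a config file named after it under `arguments/<dataset>/`, which holds for the D-NeRF example here but may not for every dataset.

```python
def build_train_command(dataset, scene, port=6017):
    """Assemble the train.py invocation for data/<dataset>/<scene> as an argument list."""
    return [
        "python", "train.py",
        "-s", "data/{}/{}".format(dataset, scene),
        "--port", str(port),
        "--expname", "{}/{}".format(dataset, scene),
        "--configs", "arguments/{}/{}.py".format(dataset, scene),
    ]


if __name__ == "__main__":
    # Print the commands; pass each list to subprocess.run(...) to launch training.
    for scene in ["bouncingballs", "lego"]:
        print(" ".join(build_train_command("dnerf", scene)))
```

Returning a list rather than a single string lets the arguments be handed to `subprocess.run` without shell quoting.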

For training dynerf scenes such as cut_roasted_beef, run

# (1) Extract the frames from each video

python scripts/preprocess_dynerf.py --datadir data/dynerf/cut_roasted_beef

# (2) Generate point clouds from the input data

bash colmap.sh data/dynerf/cut_roasted_beef llff

# (3) Downsample the point clouds obtained in (2)

python scripts/downsample_point.py data/dynerf/cut_roasted_beef/colmap/dense/workspace/fused.ply data/dynerf/cut_roasted_beef/points3D_downsample2.ply

# (4) train.

python train.py -s data/dynerf/cut_roasted_beef --port 6017 --expname "dynerf/cut_roasted_beef" --configs arguments/dynerf/cut_roasted_beef.py

For training hypernerf scenes such as virg/broom: point clouds pregenerated by COLMAP are provided here. Just download them and put them in the corresponding folder, and you can skip the first two steps below. Alternatively, you can run the commands directly.

# (1) Compute dense point clouds with COLMAP

bash colmap.sh /home/qingpo.wuwu1/Project_2_3dGS_Cars/datasets/3_4DGS/hypernerf/virg/broom2 hypernerf

# (2) Downsample the point clouds obtained in (1)

python scripts/downsample_point.py data/hypernerf/virg/broom2/colmap/dense/workspace/fused.ply data/hypernerf/virg/broom2/points3D_downsample2.ply

# (3) train.

python train.py -s data/hypernerf/virg/broom2/ --port 6017 --expname "hypernerf/broom2" --configs arguments/hypernerf/broom2.py

For training multipleviews scenes, you need to create a configuration file named (your dataset name).py under "./arguments/multipleview". After that, run

python train.py -s data/multipleview/(your dataset name) --port 6017 --expname "multipleview/(your dataset name)" --configs arguments/multipleview/(your dataset name).py

For your custom datasets, install nerfstudio and follow their COLMAP pipeline. You should install COLMAP first, then:

pip install nerfstudio

# computing camera poses by colmap pipeline

ns-process-data images --data data/your-data --output-dir data/your-ns-data

cp -r data/your-ns-data/images data/your-ns-data/colmap/images

python train.py -s data/your-ns-data/colmap --port 6017 --expname "custom" --configs arguments/hypernerf/default.py

You can customize your training config through the config files.

You can also train your model with checkpoints. Save one with:

python train.py -s data/dnerf/bouncingballs --port 6017 --expname "dnerf/bouncingballs" --configs arguments/dnerf/bouncingballs.py --checkpoint_iterations 200 # change it.

Then load the checkpoint with:

python train.py -s data/dnerf/bouncingballs --port 6017 --expname "dnerf/bouncingballs" --configs arguments/dnerf/bouncingballs.py --start_checkpoint "output/dnerf/bouncingballs/chkpnt_coarse_200.pth"

# fine stage: --start_checkpoint "output/dnerf/bouncingballs/chkpnt_fine_200.pth"

Run the following script to render the images.

python render.py --model_path "output/dnerf/bouncingballs/" --skip_train --configs arguments/dnerf/bouncingballs.py

This command generates the test and video folders.

video_rgb_bouncingbox.mp4

You can just run the following script to evaluate the model.

python metrics.py --model_path "output/dnerf/bouncingballs/"

This command generates per_view.json and results.json.
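The generated results.json can be read back for comparison across experiments. Its exact layout is not described here, so the sketch below only assumes that the file is written directly inside the folder passed as --model_path and that it contains JSON objects that may be nested; the function names are hypothetical.

```python
import json
from pathlib import Path


def flatten_metrics(results, prefix=""):
    """Flatten nested dicts into {"outer/inner": value} pairs."""
    flat = {}
    for key, value in results.items():
        name = "{}/{}".format(prefix, key) if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten_metrics(value, name))
        else:
            flat[name] = value
    return flat


def load_results(model_path):
    """Read results.json from a model folder and return the flattened metrics."""
    with open(str(Path(model_path) / "results.json")) as f:
        return flatten_metrics(json.load(f))


if __name__ == "__main__":
    for name, value in sorted(load_results("output/dnerf/bouncingballs/").items()):
        print(name, value)
```

Flattening keeps the sketch independent of how deeply the metrics are nested.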

There are some helpful scripts, please feel free to use them.

export_perframe_3DGS.py:

Get the 3D Gaussian point clouds at every timestamp.

usage:

python export_perframe_3DGS.py --iteration 14000 --configs arguments/dnerf/lego.py --model_path output/dnerf/lego

You will find a set of 3D Gaussians saved in output/dnerf/lego/gaussian_pertimestamp. As can be seen, after running the command above, 20 time_{num}.ply files were saved to the gaussian_pertimestamp folder.
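When loading the exported files into a viewer or another pipeline, they usually need to be ordered by timestamp rather than alphabetically. A minimal sketch, assuming `{num}` in `time_{num}.ply` is a non-negative integer; the function name `list_perframe_plys` is hypothetical.

```python
import re
from pathlib import Path

# Matches names such as time_0.ply or time_00012.ply (integer index assumed).
TIME_PLY = re.compile(r"^time_(\d+)\.ply$")


def list_perframe_plys(folder):
    """Return the time_{num}.ply paths in a folder, ordered by numeric index."""
    indexed = []
    for path in Path(folder).iterdir():
        match = TIME_PLY.match(path.name)
        if match:
            indexed.append((int(match.group(1)), path))
    indexed.sort(key=lambda item: item[0])
    return [path for _, path in indexed]


if __name__ == "__main__":
    for ply in list_perframe_plys("output/dnerf/lego/gaussian_pertimestamp"):
        print(ply.name)
```

Sorting on the parsed integer avoids placing `time_10.ply` before `time_2.ply` when the indices are not zero-padded.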

weight_visualization.ipynb:

visualize the weight of Multi-resolution HexPlane module.

merge_many_4dgs.py:

merge your trained 4dgs.

usage:

export exp_name="dynerf"

python merge_many_4dgs.py --model_path output/$exp_name/sear_steak

python merge_many_4dgs.py --model_path output/lego/sear_steak

colmap.sh:

generate point clouds from input data

bash colmap.sh data/hypernerf/virg/vrig-chicken hypernerf

bash colmap.sh data/dynerf/sear_steak llff

The Blender format does not seem to work. Pull requests to fix it are welcome.

downsample_point.py: downsample the point clouds generated by SfM.

python scripts/downsample_point.py data/dynerf/sear_steak/colmap/dense/workspace/fused.ply data/dynerf/sear_steak/points3D_downsample2.ply

In my paper, I always use colmap.sh to generate dense point clouds and downsample them to fewer than 40000 points.
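The point budget can be illustrated with uniform random subsampling. This is a stand-in technique for illustration only, not a description of what scripts/downsample_point.py does, and the function name `random_downsample` is hypothetical. Points are assumed to be held in a plain Python list.

```python
import random


def random_downsample(points, max_points=40000, seed=0):
    """Return fewer than max_points points, drawn uniformly without replacement.

    Inputs that are already below the budget are returned unchanged.
    """
    points = list(points)
    if len(points) < max_points:
        return points
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible
    return rng.sample(points, max_points - 1)


if __name__ == "__main__":
    # Synthetic points for demonstration only.
    cloud = [(float(i), 0.0, 0.0) for i in range(100000)]
    print(len(random_downsample(cloud)))
```

Random subsampling preserves the overall density distribution of the cloud, whereas grid-based methods even it out; which behaves better for initialization is not evaluated here.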

Here is some code that may be useful but was never adopted in my paper; feel free to try it.

Welcome to also check out these awesome concurrent/related works, including but not limited to

Deformable 3D Gaussians for High-Fidelity Monocular Dynamic Scene Reconstruction

SC-GS: Sparse-Controlled Gaussian Splatting for Editable Dynamic Scenes

MD-Splatting: Learning Metric Deformation from 4D Gaussians in Highly Deformable Scenes

4DGen: Grounded 4D Content Generation with Spatial-temporal Consistency

Diffusion4D: Fast Spatial-temporal Consistent 4D Generation via Video Diffusion Models

DreamGaussian4D: Generative 4D Gaussian Splatting

EndoGaussian: Real-time Gaussian Splatting for Dynamic Endoscopic Scene Reconstruction

EndoGS: Deformable Endoscopic Tissues Reconstruction with Gaussian Splatting

Endo-4DGS: Endoscopic Monocular Scene Reconstruction with 4D Gaussian Splatting

This project is still under development. Please feel free to raise issues or submit pull requests to contribute to our codebase.

Some source code of ours is borrowed from 3DGS, K-planes, HexPlane, TiNeuVox, Depth-Rasterization. We sincerely appreciate the excellent works of these authors.

We would like to express our sincere gratitude to @zhouzhenghong-gt for his revisions to our code and discussions on the content of our paper.

Some insights about neural voxel grids and dynamic scene reconstruction originate from TiNeuVox. If you find this repository/work helpful in your research, please consider citing these papers and giving a ⭐.

@InProceedings{Wu_2024_CVPR,

author = {Wu, Guanjun and Yi, Taoran and Fang, Jiemin and Xie, Lingxi and Zhang, Xiaopeng and Wei, Wei and Liu, Wenyu and Tian, Qi and Wang, Xinggang},

title = {4D Gaussian Splatting for Real-Time Dynamic Scene Rendering},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2024},

pages = {20310-20320}

}

@inproceedings{TiNeuVox,

author = {Fang, Jiemin and Yi, Taoran and Wang, Xinggang and Xie, Lingxi and Zhang, Xiaopeng and Liu, Wenyu and Nie\ss{}ner, Matthias and Tian, Qi},

title = {Fast Dynamic Radiance Fields with Time-Aware Neural Voxels},

year = {2022},

booktitle = {SIGGRAPH Asia 2022 Conference Papers}

}