This work is still under development

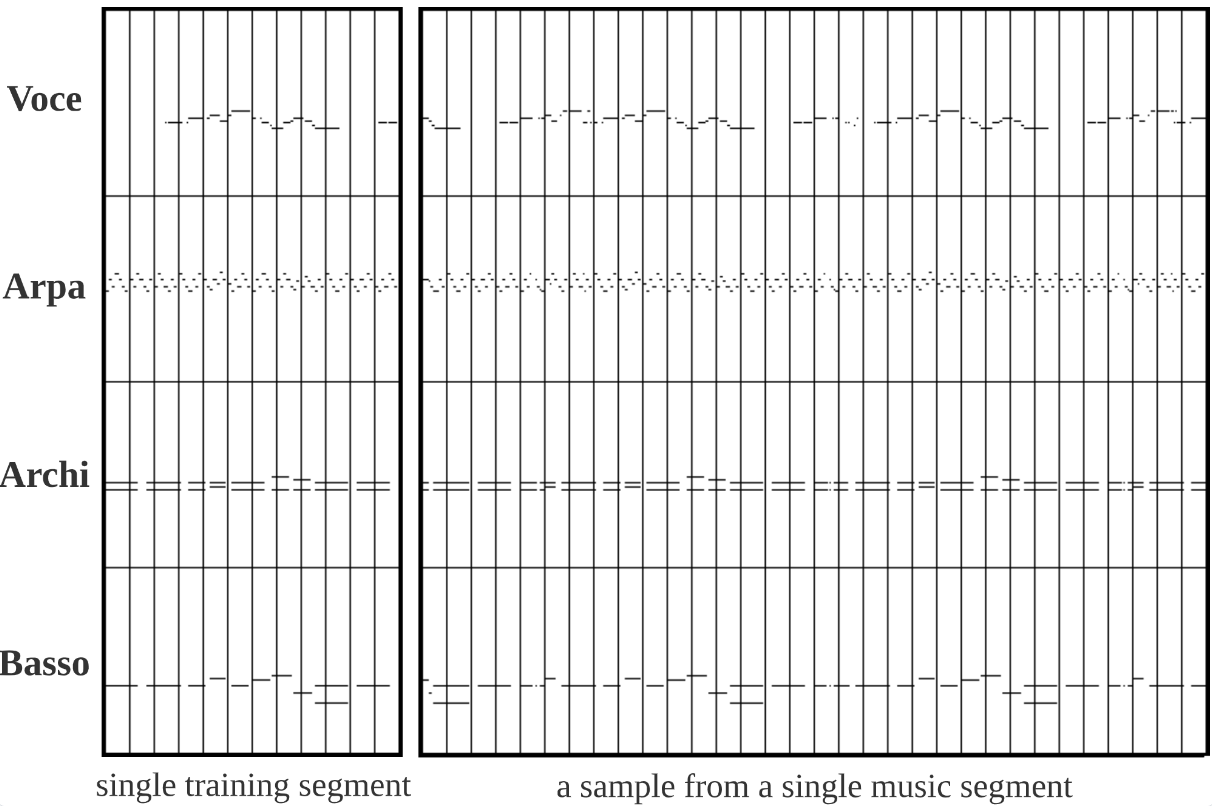

Torch implementation of an auto-regressive sequential generative model that learns from a single multi-track music segment and generates coherent, aesthetic, and varied polyphonic music for multiple instruments over an arbitrary number of bars. Training data can be, for example:

- The JSB Chorales dataset, as pickle or MIDI files.

- Other multi-track MIDI files.

Put the training files under `train_data/`, for example `train_data/JSB-Chorales-dataset/*.pkl` and `train_data/midi/*.mid`.
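Before training, it can help to check that the files are where the commands expect them. The snippet below is a minimal sketch, not part of this repository: the helper name `list_training_files` is hypothetical, and it assumes only the `train_data/midi/` and `train_data/JSB-Chorales-dataset/` layout described above.

```python
from pathlib import Path


def list_training_files(root="train_data"):
    """Return (midi_files, pickle_files) found under the layout described above."""
    root = Path(root)
    midi_dir = root / "midi"
    jsb_dir = root / "JSB-Chorales-dataset"

    # Missing directories simply yield empty lists instead of raising.
    midi_files = sorted(midi_dir.glob("*.mid")) if midi_dir.is_dir() else []

    # Accept both .pkl and .pickle (an assumption about how the files are named).
    pickle_files = (
        sorted(p for p in jsb_dir.iterdir() if p.suffix in {".pkl", ".pickle"})
        if jsb_dir.is_dir()
        else []
    )
    return midi_files, pickle_files


if __name__ == "__main__":
    midis, pickles = list_training_files()
    print(f"{len(midis)} MIDI file(s), {len(pickles)} pickle file(s)")
```

The file names it prints are the values to pass as `FILE=` in the commands, with `DIR=` set to the sub-directory name.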

- Train and evaluate with MIDI:

make train CUDA=0 NAME=test TYPE=xl N=400 DIR=midi FILE=xxx.mid

make test CUDA=0 NAME=test TYPE=xl DIR=midi FILE=xxx.mid

- Train and evaluate with pickle (JSB Chorales dataset):

make train_pickle CUDA=0 NAME=test TYPE=xl N=400 DIR=JSB-Chorales-dataset FILE=jsb-chorales-16th.pkl INDEX=0

make test_pickle CUDA=0 NAME=test TYPE=xl DIR=JSB-Chorales-dataset FILE=jsb-chorales-16th.pkl INDEX=0
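To see what a single training segment from the pickle might look like, here is a hedged sketch. It is not this repository's loader. It assumes the pickle holds a dict of splits (such as `"train"`), each a list of chorales, with each chorale a list of time steps carrying one pitch per voice. It also assumes `INDEX` selects one chorale from a split, which the commands above do not confirm. The names `load_segment`, `to_tracks`, and the `split` parameter are hypothetical.

```python
import pickle


def load_segment(path, index=0, split="train"):
    """Load one chorale from a JSB Chorales pickle (layout is an assumption)."""
    with open(path, "rb") as f:
        # encoding="latin1" lets Python 3 read pickles written by Python 2.
        data = pickle.load(f, encoding="latin1")
    return data[split][index]


def to_tracks(segment):
    """Turn a list of per-step pitch tuples into one pitch list per voice."""
    return [list(voice) for voice in zip(*segment)]


if __name__ == "__main__":
    segment = load_segment(
        "train_data/JSB-Chorales-dataset/jsb-chorales-16th.pkl", index=0
    )
    tracks = to_tracks(segment)
    print(f"{len(tracks)} voices, {len(segment)} time steps")
```

Splitting the segment into per-voice tracks mirrors the multi-track view of the music. The model's actual input encoding may differ.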

If you use this code for your research, please cite our paper:

@inproceedings{sintra2021,

title={Sin{T}ra: Learning an inspiration model from a single multi-track music segment},

author={Qingwei Song and Qiwei Sun and Dongsheng Guo and Haiyong Zheng},

booktitle={Proceedings of the 22nd Conference of the International Society for Music Information Retrieval},

year={2021}

}

Feel free to contact me! songqingyu@stu.ouc.edu.cn