Manage Kubernetes in the lightest and most convenient way


✨ Create Cluster

- Supports online deployment, proxy deployment, offline deployment

- Frequently-used mirror repository management

- Create clusters / install plugins from templates

- Supports multi-version K8S and CRI deployments

- NFS storage support

🎈 Cluster hosting

- kubeadm Cluster hosting

- Cluster plug-in installation/uninstallation

- Real-time logs during cluster operations

- Access to cluster kubectl web console

- Edit clusters (metadata, etc.)

- Adding / removing cluster nodes

- Cluster backup and restore, scheduled backups

- Cluster backup space management

- Remove cluster from KubeClipper

☸️ Cluster Management

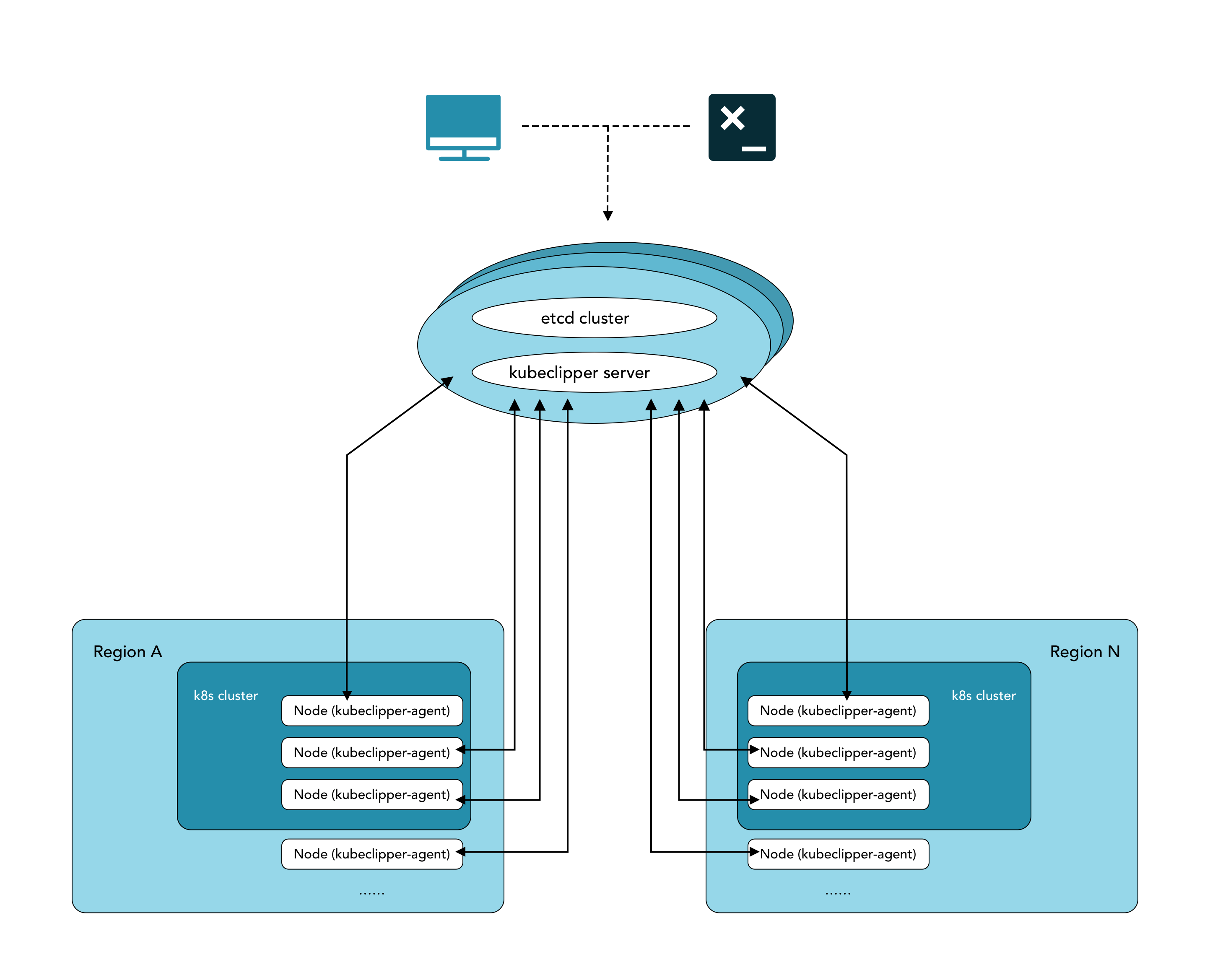

- Multi-region, multi-cluster management

- Cluster plug-in installation/uninstallation

- Access to cluster kubectl web console

- Real-time logs during cluster operations

- Edit clusters (metadata, etc.)

- Deleting clusters

- Adding / removing cluster nodes

- Retry from breakpoint after creation failure

- Cluster backup and restore, scheduled backups

- Cluster version upgrade

- Save entire cluster / individual plugins as templates

- Cluster backup space management

🌐 Region & Node Management

- Adding agent nodes and specifying regions (kcctl)

- Node status management

- Connect node terminal

- Node enable/disable

- View the list of nodes and clusters under a region

🚪 Access control

- User and role management

- Custom role management

- OIDC integration

If you are new to KubeClipper and want to get started quickly, use the All-in-One (AIO) installation mode, which deploys KubeClipper with zero configuration.

KubeClipper itself does not use many resources, but to leave room for the Kubernetes clusters it will run, the hardware should meet at least the minimum requirements below.

You only need to prepare one host that meets the following hardware and operating system requirements.

- Make sure your machine meets the minimum hardware requirements: CPU >= 2 cores, RAM >= 2GB.

- Operating System: CentOS 7.x / Ubuntu 18.04 / Ubuntu 20.04.

- Nodes must be able to connect via SSH.

- You can use the sudo/curl/wget/tar commands on this node.

It is recommended that your operating system be in a clean state (no additional software installed); otherwise, conflicts may occur.
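The requirements above can be checked before installing with a small preflight script. This is a minimal sketch, not part of kcctl: the function names are hypothetical, it assumes a Linux host with `nproc` and `/proc/meminfo`, and the memory threshold is set a little below 2 GB because `MemTotal` excludes memory reserved by the kernel.

```shell
#!/usr/bin/env bash
# Preflight sketch for the requirements listed above (hypothetical helper, not part of kcctl).

MIN_CPUS=2
# Assumption: roughly 2 GB, with headroom because MemTotal is slightly below installed RAM.
MIN_MEM_KB=1800000

# Succeeds when the value ($1) is at least the minimum ($2).
meets_minimum() {
  [ "$1" -ge "$2" ]
}

# Prints, one per line, the given commands that are not found in PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# Reports every unmet requirement and returns non-zero if any check failed.
preflight() {
  local cpus mem_kb missing status=0
  cpus=$(nproc)
  mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  meets_minimum "$cpus" "$MIN_CPUS" || { echo "CPU cores: $cpus (need >= $MIN_CPUS)"; status=1; }
  meets_minimum "$mem_kb" "$MIN_MEM_KB" || { echo "RAM: $mem_kb kB (need >= $MIN_MEM_KB kB)"; status=1; }
  missing=$(missing_commands sudo curl wget tar)
  [ -z "$missing" ] || { echo "Missing commands: $missing"; status=1; }
  return "$status"
}

# Usage:
#   preflight && echo "Host meets the minimum requirements"
```

The script does not check the operating system version or SSH connectivity; those still need to be verified by hand.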

KubeClipper provides a command-line tool, 🔧 kcctl, to simplify operations.

You can download the latest version of kcctl directly with the following command:

# Install latest release

curl -sfL https://oss.kubeclipper.io/kcctl.sh | bash -

# In China, set the environment variable KC_REGION=cn; registry.aliyuncs.com/google_containers is then used instead of k8s.gcr.io

curl -sfL https://oss.kubeclipper.io/kcctl.sh | KC_REGION=cn bash -

# The latest release is downloaded by default. Set KC_VERSION to download a specific version, for example the master development version

curl -sfL https://oss.kubeclipper.io/kcctl.sh | KC_REGION=cn KC_VERSION=master bash -

It is highly recommended that you install the latest release to experience more features.

You can also download a specific version from the GitHub Release page.

Check if the installation is successful with the following command:

kcctl version

In this quick start tutorial, you only need to run one command for installation.

To install in AIO mode:

# install default release

kcctl deploy

# Use KC_VERSION to install a specific version; the default is the latest release

KC_VERSION=master kcctl deploy

To install across multiple nodes, run kcctl deploy -h for more information.

After you run this command, kcctl checks your installation environment and, if the requirements are met, starts the installation.

The installation is complete once the KubeClipper banner is printed.

_ __ _ _____ _ _

| | / / | | / __ \ (_)

| |/ / _ _| |__ ___| / \/ |_ _ __ _ __ ___ _ __

| \| | | | '_ \ / _ \ | | | | '_ \| '_ \ / _ \ '__|

| |\ \ |_| | |_) | __/ \__/\ | | |_) | |_) | __/ |

\_| \_/\__,_|_.__/ \___|\____/_|_| .__/| .__/ \___|_|

| | | |

|_| |_|

When deployed successfully, you can open a browser and visit http://$IP to enter the KubeClipper console.

You can log in with the default account and password: admin / Thinkbig1.

You may need to configure port forwarding rules and open ports in security groups for external users to access the console.

Once KubeClipper is deployed successfully, you can use either the kcctl tool or the console to create a k8s cluster. This quick start tutorial uses kcctl.

First, log in with the default account and password to obtain a token, which kcctl uses for subsequent requests to kc-server.

# This example assumes the kc-server node IP is 192.168.234.3;

# replace 192.168.234.3 with your kc-server node IP

kcctl login -H http://192.168.234.3:8080 -u admin -p Thinkbig1

Then create a k8s cluster with the following command:

NODE=$(kcctl get node -o yaml|grep ipv4DefaultIP:|sed 's/ipv4DefaultIP: //')

kcctl create cluster --master $NODE --name demo --untaint-master

Cluster creation completes in about 3 minutes. You can check the cluster status with the following command:

kcctl get cluster -o yaml|grep status -A5

You can also enter the console to view real-time logs.

Once the cluster enters the Running state, creation is complete. You can use the kubectl get cs command to view the cluster status.
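The create-and-wait steps above can be scripted. The following is a minimal sketch under stated assumptions: `first_node_ip` and `wait_for_running` are hypothetical helpers, not kcctl features; the sketch assumes the node YAML contains `ipv4DefaultIP:` lines as in the command above, and that the word `Running` appears in the status output only once the cluster is ready. With more than one cluster registered, any cluster in the Running state would satisfy the check.

```shell
#!/usr/bin/env bash
# Sketch: choose a node IP and wait for the cluster to report Running.
# Both helpers are hypothetical and only wrap the commands shown above.

# Reads YAML on stdin and prints the first ipv4DefaultIP value,
# without the leading indentation that a plain sed substitution would keep.
first_node_ip() {
  sed -n 's/^[[:space:]]*ipv4DefaultIP:[[:space:]]*//p' | head -n 1
}

# Runs the given status command until its output contains "Running".
#   $1 = maximum number of attempts, $2 = seconds between attempts,
#   remaining arguments = the command to run.
wait_for_running() {
  local attempts=$1 interval=$2 i=0
  shift 2
  while [ "$i" -lt "$attempts" ]; do
    if "$@" 2>/dev/null | grep -q 'Running'; then
      return 0
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  return 1
}

# Usage (requires a prior `kcctl login`):
#   NODE=$(kcctl get node -o yaml | first_node_ip)
#   kcctl create cluster --master "$NODE" --name demo --untaint-master
#   wait_for_running 36 10 kcctl get cluster -o yaml && echo "cluster is Running"
```

Taking only the first IP avoids passing several addresses to `--master` when more than one node is registered; adjust the selection if you want a specific node.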

- fork repo and clone

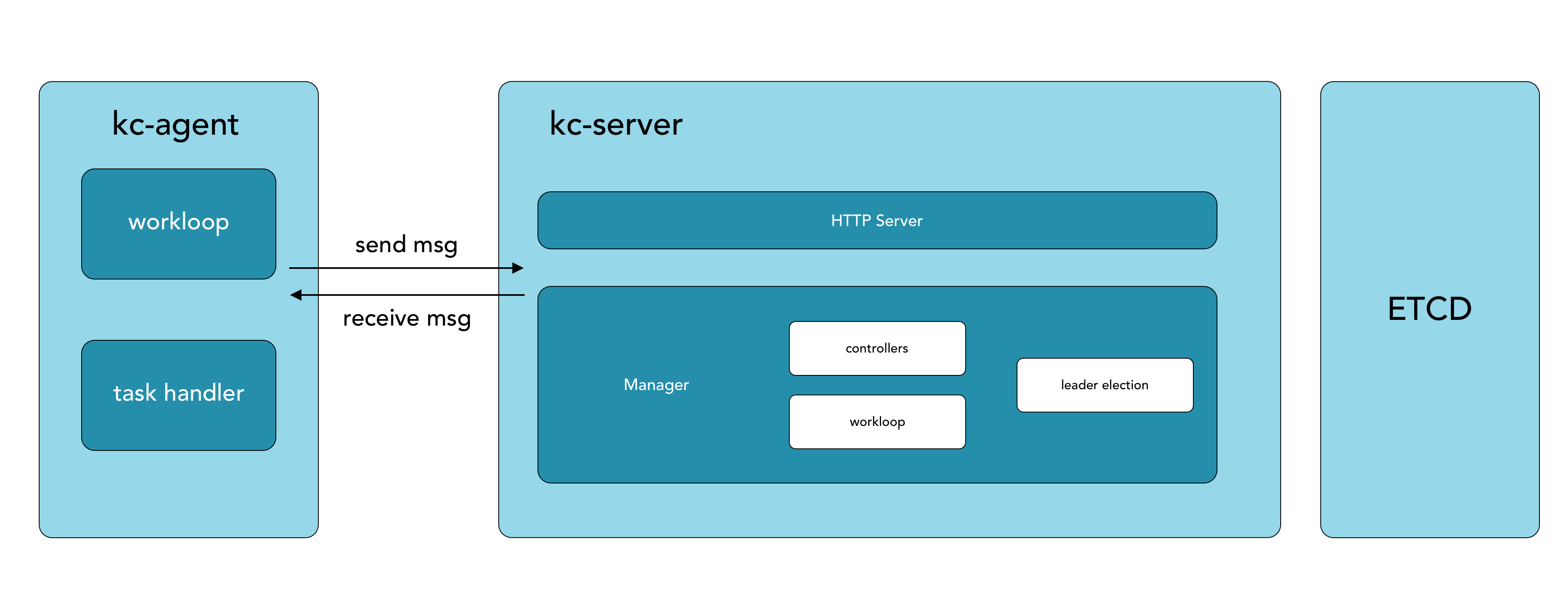

- run etcd locally, usually use docker / podman to run etcd container

export HostIP="Your-IP"
docker run -d \
  --net host \
  k8s.gcr.io/etcd:3.5.0-0 etcd \
  --advertise-client-urls http://${HostIP}:2379 \
  --initial-advertise-peer-urls http://${HostIP}:2380 \
  --initial-cluster=infra0=http://${HostIP}:2380 \
  --listen-client-urls http://${HostIP}:2379,http://127.0.0.1:2379 \
  --listen-metrics-urls http://127.0.0.1:2381 \
  --listen-peer-urls http://${HostIP}:2380 \
  --name infra0 \
  --snapshot-count=10000 \
  --data-dir=/var/lib/etcd

- change etcd.serverList in kubeclipper-server.yaml to point to your local etcd cluster

- make build

- ./dist/kubeclipper-server serve
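Before starting the server, it can help to confirm that the local etcd container is actually answering. The sketch below polls etcd's `/health` HTTP endpoint on the client URL configured above. The helper names are hypothetical, and the check assumes the endpoint returns a JSON body containing `"health":"true"` when etcd is healthy.

```shell
#!/usr/bin/env bash
# Sketch: wait for the local etcd to report healthy before starting kubeclipper-server.
# Hypothetical helpers; assumes curl is installed.

# Succeeds when the JSON body ($1) from /health reports "health":"true".
is_healthy() {
  printf '%s' "$1" | grep -q '"health"[[:space:]]*:[[:space:]]*"true"'
}

# Polls $1/health once per second, up to $2 attempts.
wait_for_etcd() {
  local url=$1 attempts=$2 i=0 body
  while [ "$i" -lt "$attempts" ]; do
    body=$(curl -sf "$url/health" 2>/dev/null) && is_healthy "$body" && return 0
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Usage:
#   wait_for_etcd http://127.0.0.1:2379 30 && ./dist/kubeclipper-server serve
```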

Please follow Community to join us.