This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

Please use one of the two installation options, either native or docker installation.

Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

If using a virtual machine to install Ubuntu, use the following configuration as a minimum:

- 2 CPUs

- 2 GB system memory

- 25 GB of free hard drive space

The Udacity-provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using it.

Follow these instructions to install ROS

- ROS Kinetic if you have Ubuntu 16.04.

- ROS Indigo if you have Ubuntu 14.04.

Install the Dataspeed DBW SDK

- Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

Download the Udacity Simulator.

Build the docker container

docker build . -t capstone

Run the docker file

docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone

To set up port forwarding, please refer to the instructions from term 2

- Clone the project repository

git clone https://github.com/udacity/CarND-Capstone.git

- Install Python dependencies

cd CarND-Capstone

pip install -r requirements.txt

- Make and run styx

cd ros

catkin_make

source devel/setup.sh

roslaunch launch/styx.launch

- Run the simulator

- Download the training bag that was recorded on the Udacity self-driving car.

- Unzip the file

unzip traffic_light_bag_file.zip

- Play the bag file

rosbag play -l traffic_light_bag_file/traffic_light_training.bag

- Launch your project in site mode

cd CarND-Capstone/ros

roslaunch launch/site.launch

- Confirm that traffic light detection works on real-life images

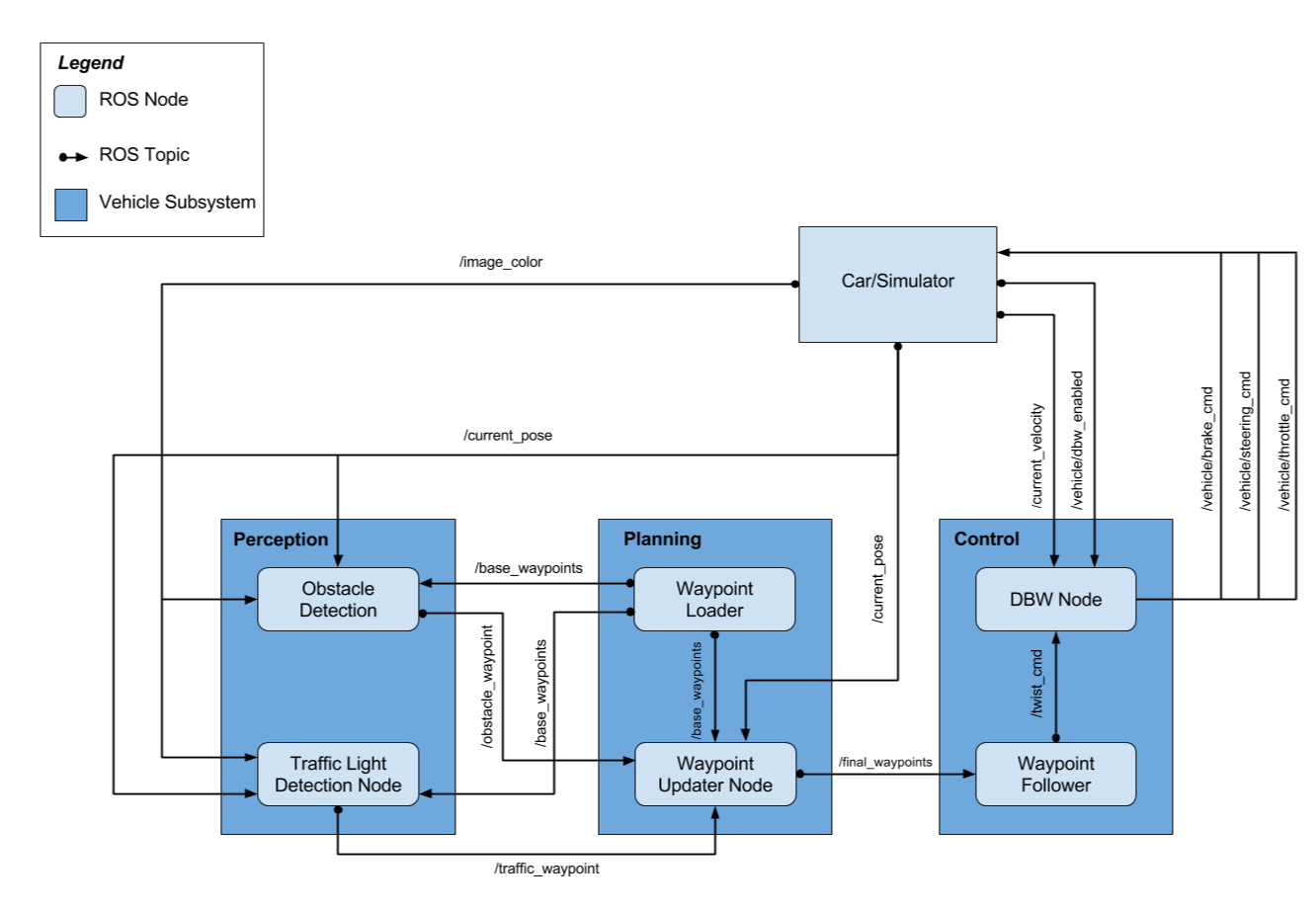

The code comes from terzakig/SelfDrivingCar3-Integration. The topics and message flow:

1. Obstacle detection

This code does not include the obstacle detection part. In the future, obstacle positions could be obtained from lidar data (for example, the 3D-deepBox method) or from image-based object detection (for example, YOLO, Fast R-CNN, or SSD).

2. Traffic light detection

This code uses base_waypoints to get an accurate 3D map and the light locations. To support planning, the code uses the RGB color space to identify red, yellow, and green lights. In the future, a deep network (such as a CNN) could be used to identify light color and shape in complex real-world scenes.
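The RGB-based color identification described above could look roughly like the following. This is a minimal sketch, not the repository's actual classifier: the function names `classify_pixel` and `classify_light`, the thresholds `bright` and `dark`, and the `min_votes` cutoff are all hypothetical values chosen for illustration, and the input is assumed to be an already-cropped list of `(r, g, b)` pixels around the light.

```python
from collections import Counter


def classify_pixel(r, g, b, bright=200, dark=100):
    """Label one RGB pixel as 'red', 'yellow', 'green', or None.

    The thresholds are assumed values, not taken from the project code.
    """
    if r >= bright and g >= bright and b <= dark:
        return "yellow"  # red and green channels both saturated
    if r >= bright and g <= dark and b <= dark:
        return "red"
    if g >= bright and r <= dark and b <= dark:
        return "green"
    return None


def classify_light(pixels, min_votes=3):
    """Return the dominant light color among (r, g, b) pixels, or 'unknown'."""
    labels = (classify_pixel(*p) for p in pixels)
    votes = Counter(label for label in labels if label is not None)
    if not votes:
        return "unknown"
    color, count = votes.most_common(1)[0]
    # Require a few agreeing pixels so isolated noise does not trigger a stop.
    return color if count >= min_votes else "unknown"


# Hypothetical crop: five bright red pixels on a dark housing.
crop = [(250, 20, 30)] * 5 + [(40, 40, 40)] * 20
print(classify_light(crop))  # prints "red"
```

Fixed RGB thresholds are sensitive to lighting and camera exposure, which is one reason a learned classifier may be preferable in real scenes.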

1. Waypoint updater

A KDTree is used to find the closest waypoint in the track list. This determines when the car should stop and when it should ignore the traffic light detection.
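To illustrate the nearest-waypoint lookup, here is a small self-contained 2D k-d tree sketch. It is written in pure Python so it runs without extra packages; a real node would more likely use an existing library implementation. The helper names (`build_kdtree`, `nearest`, `waypoints_to_stop_line`, `should_stop`) and the `lookahead` value are hypothetical, and waypoints are assumed to be `(x, y)` tuples ordered along the track.

```python
def build_kdtree(waypoints, indices=None, depth=0):
    """Build a 2D k-d tree over waypoint indices, splitting on x then y."""
    if indices is None:
        indices = list(range(len(waypoints)))
    if not indices:
        return None
    axis = depth % 2
    indices = sorted(indices, key=lambda i: waypoints[i][axis])
    mid = len(indices) // 2
    return {
        "index": indices[mid],
        "axis": axis,
        "left": build_kdtree(waypoints, indices[:mid], depth + 1),
        "right": build_kdtree(waypoints, indices[mid + 1:], depth + 1),
    }


def nearest(node, waypoints, target, best=None):
    """Return (squared_distance, index) of the waypoint closest to target."""
    if node is None:
        return best
    point = waypoints[node["index"]]
    d2 = (point[0] - target[0]) ** 2 + (point[1] - target[1]) ** 2
    if best is None or d2 < best[0]:
        best = (d2, node["index"])
    diff = target[node["axis"]] - point[node["axis"]]
    near, far = ("left", "right") if diff < 0 else ("right", "left")
    best = nearest(node[near], waypoints, target, best)
    # Only search the far side if the splitting plane is closer than the best hit.
    if diff * diff < best[0]:
        best = nearest(node[far], waypoints, target, best)
    return best


def waypoints_to_stop_line(tree, waypoints, car_xy, stop_line_xy):
    """Index gap between the car's closest waypoint and the stop line's."""
    car_idx = nearest(tree, waypoints, car_xy)[1]
    stop_idx = nearest(tree, waypoints, stop_line_xy)[1]
    return stop_idx - car_idx


def should_stop(light_is_red, gap, lookahead=50):
    """Stop only for a red light that is ahead and within the lookahead window."""
    return light_is_red and 0 <= gap < lookahead


# Hypothetical straight track with one waypoint per meter.
waypoints = [(float(i), 0.0) for i in range(100)]
tree = build_kdtree(waypoints)
gap = waypoints_to_stop_line(tree, waypoints, (10.2, 0.5), (30.0, 0.0))
print(gap, should_stop(True, gap))  # prints "20 True"
```

A negative gap means the stop line is already behind the car, and a gap beyond `lookahead` means the light is too far away to matter yet; in both cases the detection is ignored.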

1. For real scenes, we need to consider how to fuse data from different sensors for good planning and routing (for example, with a lattice method).

2. We need to balance the running time of the different models against the car's speed.
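The trade-off between model running time and vehicle speed can be made concrete with simple arithmetic: while a model is still computing, the car keeps moving. The sketch below uses hypothetical function names and example numbers that are not measurements from this project.

```python
def distance_during_latency(speed_kmh, latency_s):
    """Meters traveled while one perception/planning cycle is still running."""
    return speed_kmh / 3.6 * latency_s  # km/h -> m/s, then times seconds


def max_latency_for_budget(speed_kmh, budget_m):
    """Longest cycle time (seconds) that keeps 'blind' travel under budget_m."""
    return budget_m / (speed_kmh / 3.6)


# At 72 km/h (20 m/s), a 100 ms model moves the car about 2 m per inference.
print(round(distance_during_latency(72, 0.1), 3))
# To stay under 1 m of travel per cycle at 72 km/h, a cycle must take about 50 ms.
print(round(max_latency_for_budget(72, 1.0), 3))
```

Doubling the speed halves the time available for the same distance budget, so a detector that is adequate at low speed in the simulator may be too slow at higher speeds.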