A no-code workflow executor with a built-in web UI

It executes DAGs (directed acyclic graphs) defined in a simple YAML format.

- Contents

- Why not Airflow or Prefect?

- How does it work?

- Example

- Quick start

- Command Line User Interface

- Web User Interface

- YAML format

- Admin Configuration

- FAQ

- License

- Contributors

Popular workflow engines such as Airflow and Prefect are powerful and valuable tools, but they require writing Python code to run workflows. In many cases, there are already hundreds of thousands of lines of existing code written in other languages, such as shell scripts or Perl. Adding another layer of Python on top of these would make things more complicated, and rewriting everything in Python is often not feasible. So we decided to develop a new workflow engine, Dagu, which allows you to define DAGs in a simple YAML format. It is self-contained, has no dependencies, and does not require a DBMS.

- Self-contained - A single binary with no dependencies. No DBMS or cloud service is required.

- Simple - It executes DAGs defined in a simple declarative YAML format. Existing programs can be used without any modification.

The simple workflow below creates a SQL file and runs it.

name: create and run sql
steps:
  - name: create sql file
    command: "bash"
    script: |
      echo "select * from table;" > select.sql
  - name: run the sql file
    command: "psql -U username -d myDataBase -a -f select.sql"
    stdout: output.txt
    depends:
      - create sql file

Download the latest binary from the Releases page and place it in your $PATH. For example, you can place it in /usr/local/bin.

Start the server with dagu server and browse to http://127.0.0.1:8000 to explore the Web UI.

Create a workflow by clicking the New DAG button on the top page of the web UI. Enter example.yaml in the dialog.

Go to the workflow detail page and click the Edit button in the Config Tab. Copy and paste from this example YAML and click the Save button.

You can execute the example by pressing the Start button.

- dagu start [--params=<params>] <file> - Runs the workflow
- dagu status <file> - Displays the current status of the workflow
- dagu retry --req=<request-id> <file> - Re-runs the specified workflow run
- dagu stop <file> - Stops the workflow execution by sending TERM signals
- dagu dry [--params=<params>] <file> - Dry-runs the workflow
- dagu server - Starts the web server for the web UI

-

DAGs: Overview of all DAGs (workflows).

DAGs page displays all workflows and real-time status. To create a new workflow, you can click the button in the top-right corner.

-

Detail: Realtime status of the workflow.

The detail page displays the real-time status, logs, and all workflow configurations.

-

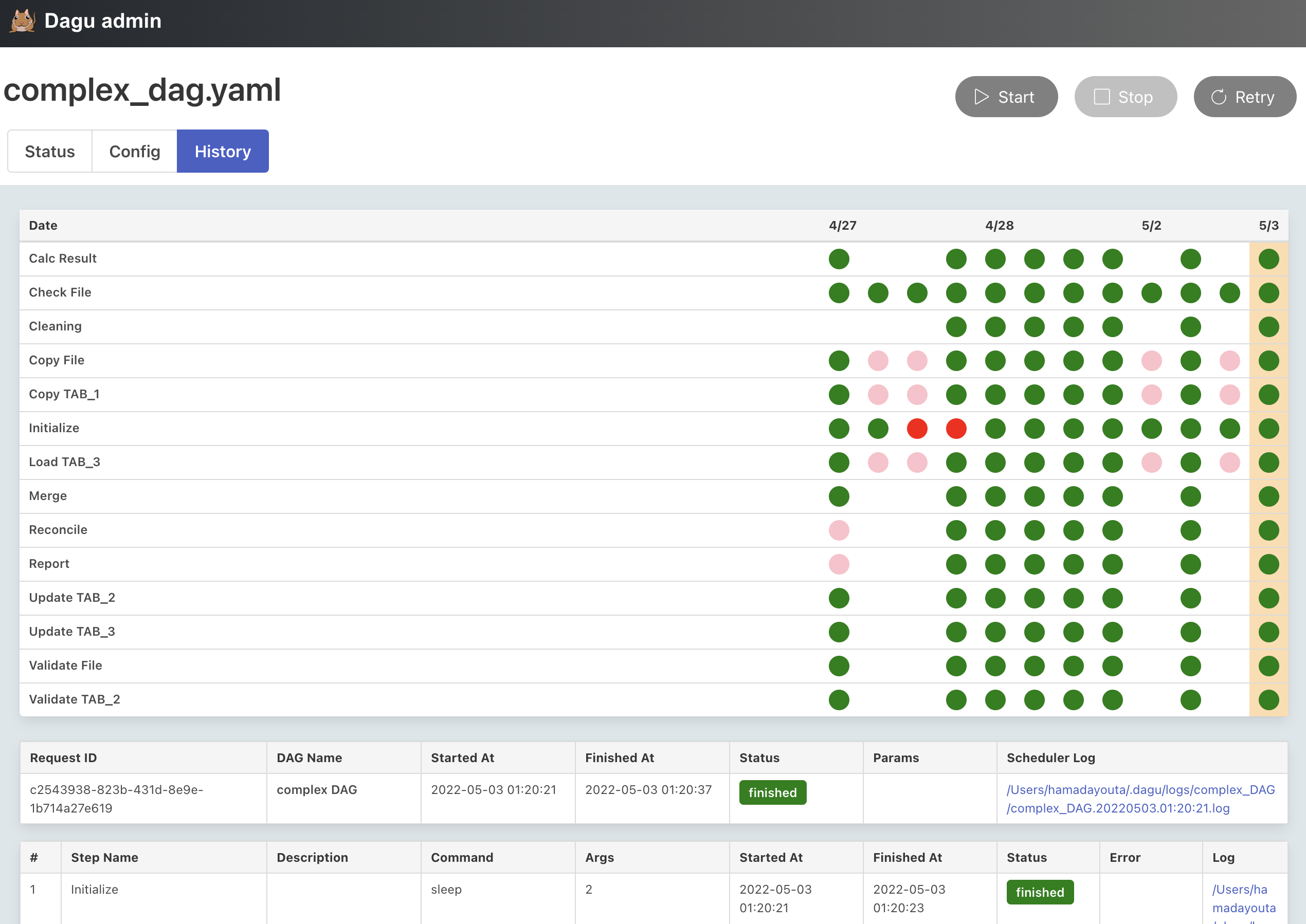

History: History of the execution of the workflow.

The history page allows you to check past execution results and logs.

name: hello world
steps:
  - name: step 1
    command: bash
    script: |
      echo ${USER}
    output: YOUR_NAME
  - name: step 2
    command: "echo hello world, ${YOUR_NAME}!"
    stdout: /tmp/hello-world.txt
    depends:
      - step 1

You can define environment variables with the env field and refer to them elsewhere in the workflow.

name: example
env:
  - SOME_DIR: ${HOME}/batch
  - SOME_FILE: ${SOME_DIR}/some_file
steps:
  - name: some task in some dir
    dir: ${SOME_DIR}
    command: python main.py ${SOME_FILE}

You can define parameters using the params field and refer to each parameter as $1, $2, etc. Parameters can also be command substitutions or environment variables. They can be overridden with the --params= option of the start command.

name: example
params: param1 param2
steps:
  - name: some task with parameters
    command: python main.py $1 $2

You can use command substitution in field values. That is, a string enclosed in backquotes (`) is evaluated as a command and replaced with the result of its standard output.

name: example
env:
  TODAY: "`date '+%Y%m%d'`"
steps:
  - name: hello
    command: "echo hello, today is ${TODAY}"

Sometimes you have parts of a workflow that you only want to run under certain conditions. You can use the preconditions field to add conditional branches to your workflow.

For example, the task below runs only on the first day of each month.

name: example
steps:
  - name: A monthly task
    command: monthly.sh
    preconditions:
      - condition: "`date '+%d'`"
        expected: "01"

If you want the workflow to continue to the next step regardless of the step's conditional check result, you can use the continueOn field:

name: example
steps:
  - name: A monthly task
    command: monthly.sh
    preconditions:
      - condition: "`date '+%d'`"
        expected: "01"
    continueOn:
      skipped: true

The script field provides a way to run arbitrary snippets of code in any language.

name: example
steps:
  - name: step 1
    command: "bash"
    script: |
      cd /tmp
      echo "hello world" > hello
      cat hello
    output: RESULT
  - name: step 2
    command: echo ${RESULT} # hello world
    depends:
      - step 1

The output field can be used to set an environment variable from a step's standard output. Leading and trailing whitespace is trimmed automatically. The environment variable can be used in subsequent steps.

name: example
steps:
  - name: step 1
    command: "echo foo"
    output: FOO # will contain "foo"

The stdout field can be used to write standard output to a file.

name: example
steps:
  - name: create a file
    command: "echo hello"
    stdout: "/tmp/hello" # the content will be "hello\n"

It is often desirable to take action when a specific event happens, for example, when a workflow fails. To achieve this, you can use the handlerOn field.

name: example
handlerOn:
  failure:
    command: notify_error.sh
  exit:
    command: cleanup.sh
steps:
  - name: A task
    command: main.sh

If you want a task to repeat at regular intervals, you can use the repeatPolicy field. To stop a repeating task, use the stop command, which stops it gracefully.

name: example
steps:
  - name: A task
    command: main.sh
    repeatPolicy:
      repeat: true
      intervalSec: 60

Combining these settings gives you granular control over how the workflow runs.

name: all configuration # DAG's name
description: run a DAG # DAG's description
env: # Environment variables
  - LOG_DIR: ${HOME}/logs
  - PATH: /usr/local/bin:${PATH}
logDir: ${LOG_DIR} # Log directory to write standard output
histRetentionDays: 3 # Execution history retention days (not for log files)
delaySec: 1 # Interval seconds between steps
maxActiveRuns: 1 # Maximum number of steps running in parallel
params: param1 param2 # Default parameters for the DAG that can be referred to by $1, $2, and so on
preconditions: # Preconditions for whether the DAG is allowed to run
  - condition: "`echo $2`" # Command or variables to evaluate
    expected: "param2" # Expected value for the condition
mailOn:
  failure: true # Send a mail when the DAG failed
  success: true # Send a mail when the DAG finished
MaxCleanUpTimeSec: 300 # The maximum amount of time to wait after sending a TERM signal to running steps before killing them
handlerOn: # Handlers on Success, Failure, Cancel, and Exit
  success:
    command: "echo succeed" # Command to execute when the DAG execution succeeded
  failure:
    command: "echo failed" # Command to execute when the DAG execution failed
  cancel:
    command: "echo canceled" # Command to execute when the DAG execution was canceled
  exit:
    command: "echo finished" # Command to execute when the DAG execution finished
steps:
  - name: some task # Step's name
    description: some task # Step's description
    dir: ${HOME}/logs # Working directory
    command: bash # Command and parameters
    stdout: /tmp/outfile
    output: RESULT_VARIABLE
    script: |
      echo "any script"
    mailOn:
      failure: true # Send a mail when the step failed
      success: true # Send a mail when the step finished
    continueOn:
      failure: true # Continue to the next step regardless of whether the step failed
      skipped: true # Continue to the next step regardless of whether the preconditions are met
    retryPolicy: # Retry policy for the step
      limit: 2 # Retry up to 2 times when the step failed
    repeatPolicy: # Repeat policy for the step
      repeat: true # Boolean whether to repeat this step
      intervalSec: 60 # Interval time to repeat the step in seconds
    preconditions: # Preconditions for whether the step is allowed to run
      - condition: "`echo $1`" # Command or variables to evaluate
        expected: "param1" # Expected value for the condition

The global configuration file ~/.dagu/config.yaml is useful for gathering common settings, such as logDir or env.

You can customize the admin web UI with environment variables.

- DAGU__DATA - path to directory for internal use by dagu (default: ~/.dagu/data)
- DAGU__LOGS - path to directory for logging (default: ~/.dagu/logs)
- DAGU__ADMIN_PORT - port number for web URL (default: 8000)
- DAGU__ADMIN_NAVBAR_COLOR - navigation header color for web UI (optional)
- DAGU__ADMIN_NAVBAR_TITLE - navigation header title for web UI (optional)

Please create ~/.dagu/admin.yaml.

host: <hostname for web UI address> # default value is 127.0.0.1
port: <port number for web UI address> # default value is 8000
dags: <the location of DAG configuration files> # default value is current working directory
command: <Absolute path to the dagu binary> # [optional] required if the dagu command not in $PATH
isBasicAuth: <true|false> # [optional] basic auth config
basicAuthUsername: <username for basic auth of web UI> # [optional] basic auth config
basicAuthPassword: <password for basic auth of web UI> # [optional] basic auth config

Creating a global configuration ~/.dagu/config.yaml is a convenient way to organize shared settings.

logDir: <path-to-write-log> # log directory to write standard output
histRetentionDays: 3 # history retention days
smtp: # [optional] mail server configuration to send notifications
  host: <smtp server host>
  port: <smtp server port>
errorMail: # [optional] mail configuration for error-level
  from: <from address>
  to: <to address>
  prefix: <prefix of mail subject>
infoMail: # [optional] mail configuration for info-level
  from: <from address>
  to: <to address>
  prefix: <prefix of mail subject>

Feel free to contribute in any way you want. Share ideas, ask questions, submit issues, and create pull requests. Take a look at this TODO list. Thanks!

Dagu stores execution history data in the directory specified by the DAGU__DATA environment variable. The default location is $HOME/.dagu/data.

Dagu stores log files in the directory specified by the DAGU__LOGS environment variable. The default location is $HOME/.dagu/logs. You can override the setting with the logDir field in a YAML file.

The default retention period for execution history is seven days. You can override it with the histRetentionDays field in a YAML file.
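As a minimal sketch of the two overrides described above, a DAG file could set logDir and histRetentionDays alongside its steps. The directory path, the 14-day value, and the step itself are illustrative assumptions, not defaults.

```yaml
name: example
logDir: ${HOME}/logs/example # illustrative path, used instead of the DAGU__LOGS location
histRetentionDays: 14 # illustrative value, used instead of the seven-day default
steps:
  - name: A task
    command: main.sh
```

Placing the same two fields in ~/.dagu/config.yaml instead would share them across workflows.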

To retry a workflow from a specific task, you can change the status of that task to a failed state. Then, when you retry the workflow, it will execute the failed task and any subsequent tasks.

Dagu does not have scheduler functionality. It is meant to be used with cron or other schedulers.

Dagu uses Unix sockets to communicate with running processes.

This project is licensed under the GNU GPLv3. See the LICENSE.md file for details.

Made with contrib.rocks.