Reducing Spurious Correlations in Aspect-based Sentiment Analysis with Explanation from Large Language Models

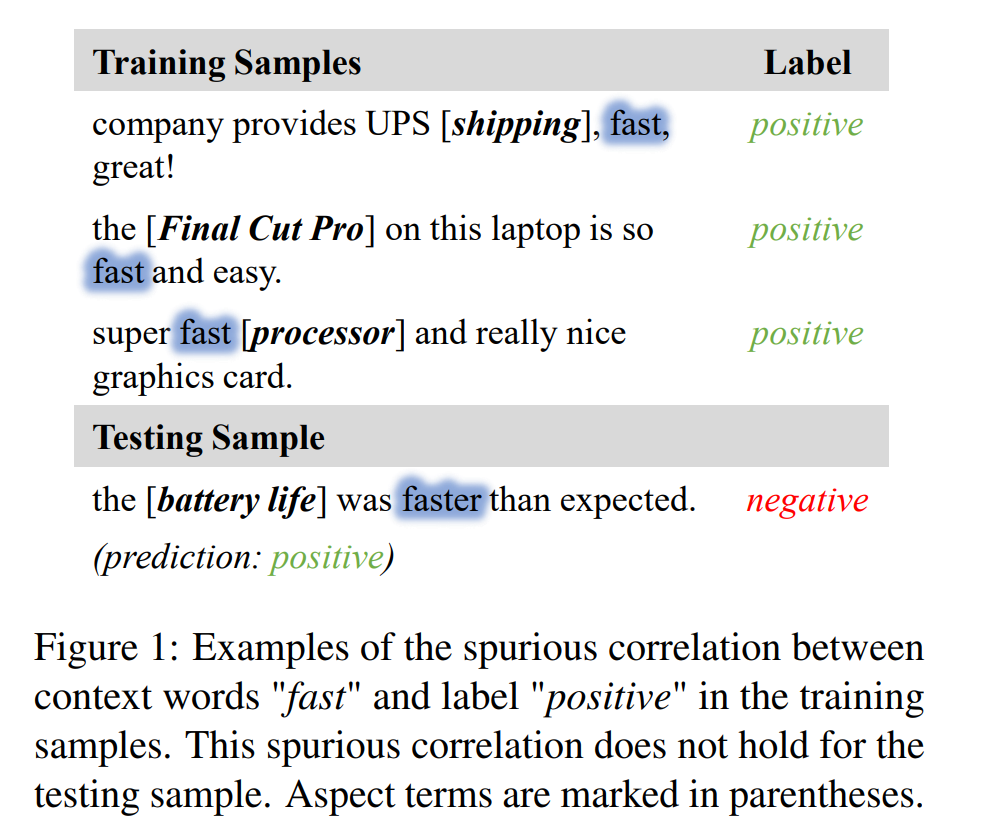

Recently, aspect-based sentiment analysis (ABSA) models have yielded promising results. However, they are susceptible to learning spurious correlations between certain words of the input text and output labels while modeling the sentiment feature of the aspect. Such spurious correlations can undermine the performance of ABSA models.

One direct solution for this problem is to make the model see and learn an explanation of sentiment expression rather than certain words. Motivated by this, we exploit explanations for the sentiment polarity of each aspect from large language models (LLMs) to reduce spurious correlations in ABSA.

- First, we formulate a prompt template that wraps the sentence, an aspect, and the sentiment label. This template is utilized to prompt LLMs to generate an appropriate explanation that states the sentiment cause.

- Then, we propose two straightforward yet effective methods to leverage the explanation for preventing the learning of spurious correlations.

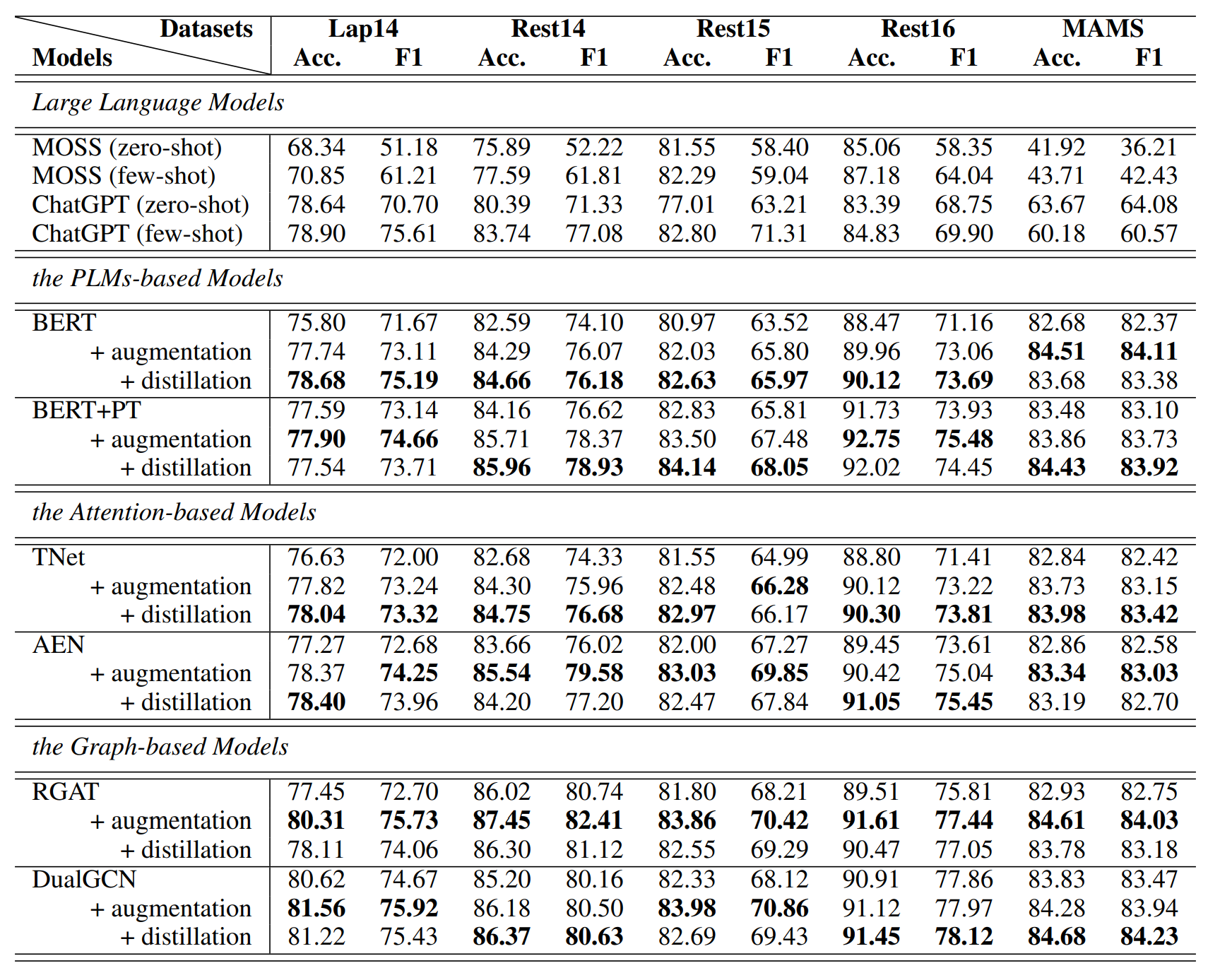

We conducted extensive comparative experiments on five datasets by integrating our methods with several representative ABSA models.

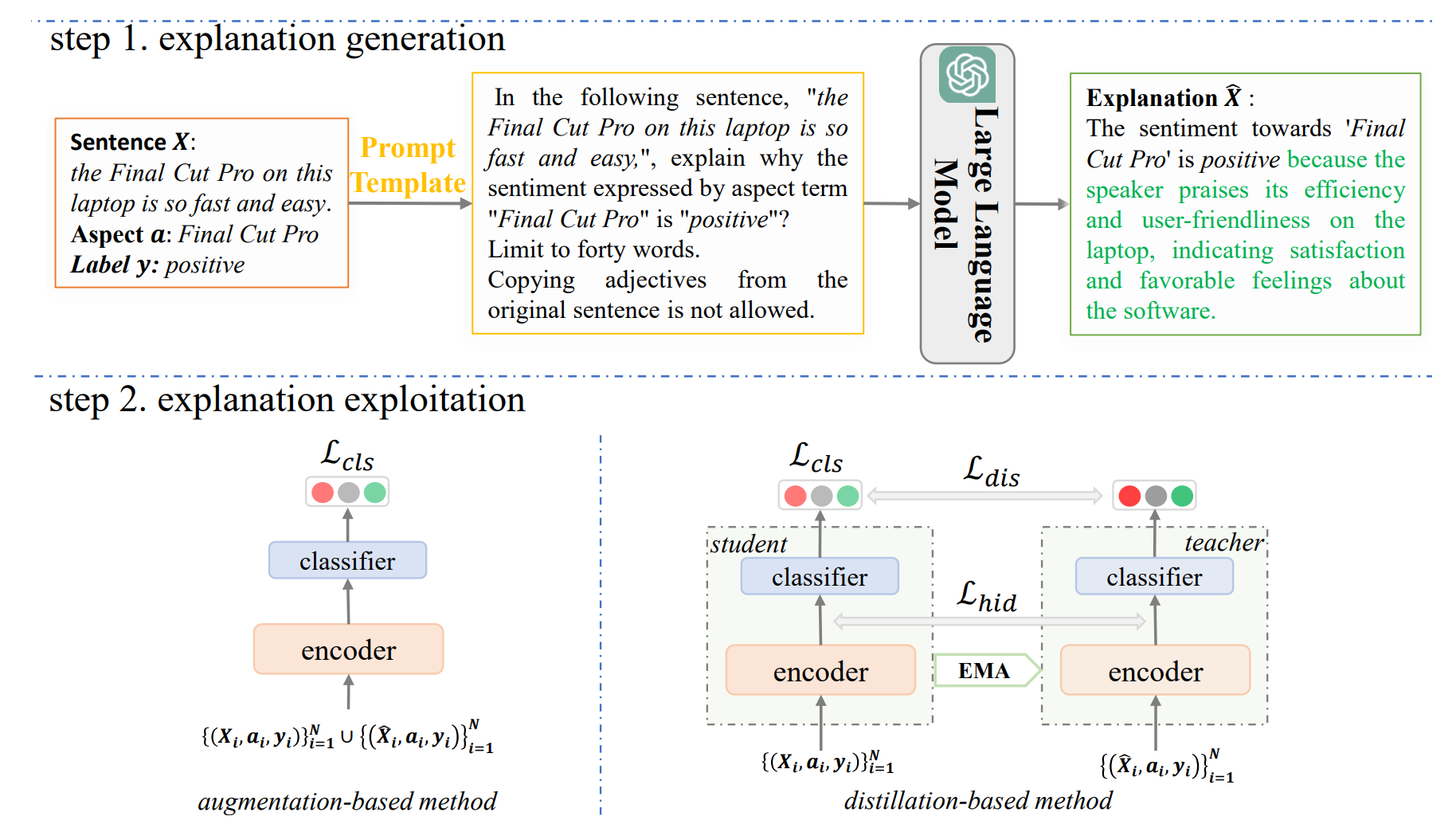

As shown in the figure above, our framework consists of two steps.

- The first step is explanation generation. For each training sample, we use a prompt template that encapsulates the sentence, the aspect, and its sentiment to prompt the LLM to generate an explanation stating the corresponding sentiment cause.

- The second step is explanation exploitation. Here, we propose two simple yet effective methods to exploit explanations for alleviating the spurious correlations in ABSA.
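The explanation-generation step can be illustrated with a minimal sketch. The template wording, the `AbsaSample` class, and the `build_explanation_prompt` function below are hypothetical names chosen for illustration; the prompt actually used in this repository may differ.

```python
from dataclasses import dataclass

# Hypothetical template wording (an assumption, not the repository's prompt).
# It wraps the three pieces named above: sentence, aspect, and sentiment label.
PROMPT_TEMPLATE = (
    'In the following sentence, the sentiment polarity towards the aspect '
    '"{aspect}" is {label}. Explain the reason for this sentiment '
    'in one short sentence.\n'
    'Sentence: "{sentence}"'
)


@dataclass
class AbsaSample:
    """One training sample: a sentence, an aspect in it, and its gold label."""
    sentence: str
    aspect: str
    label: str  # e.g. "positive", "negative", or "neutral"


def build_explanation_prompt(sample: AbsaSample) -> str:
    """Fill the template with one sample to obtain the text sent to the LLM."""
    return PROMPT_TEMPLATE.format(
        sentence=sample.sentence,
        aspect=sample.aspect,
        label=sample.label,
    )


if __name__ == "__main__":
    example = AbsaSample(
        sentence="The food was great but the service was slow.",
        aspect="service",
        label="negative",
    )
    print(build_explanation_prompt(example))
```

The resulting string would then be sent to an LLM such as MOSS or ChatGPT, and the returned explanation stored alongside the training sample for the exploitation step.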

- download pre-trained model weights from huggingface, and put them in the `./pretrain_models` directory
- download data according to references
- use large models to generate explanations of the data (some code can be found in MOSS and ChatGPT)
- install packages (see `requirements.txt`)
- run `.run_bash/*.sh`

@inproceedings{wang-etal-2023-reducing,
  title = "Reducing Spurious Correlations in Aspect-based Sentiment Analysis with Explanation from Large Language Models",
  author = "Wang, Qianlong and Ding, Keyang and Liang, Bin and Yang, Min and Xu, Ruifeng",
  booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2023",
  month = dec,
  year = "2023",
  address = "Singapore",
  publisher = "Association for Computational Linguistics",
  pages = "2930--2941",
}