PetNet is a deep neural network designed for the semantic segmentation of images containing cats and dogs, as well as the classification of their respective breeds. This documentation provides an overview of PetNet, its network architecture, model training and evaluation processes, inference details, and testing instructions.

PetNet aims to:

- Detect the presence of cats, dogs, or both in an image.

- Classify the breed of the detected animal.

- Generate a binary mask that separates pixels belonging to a pet (cat or dog) from background pixels.

The network is trained on 7,270 images of cats and dogs and validated on a separate set of 1,818 images. Each image contains at most one cat and at most one dog.

PetNet is based on the U-Net architecture, with a pre-trained ResNet-18 network as its backbone. It has two output heads: one for multi-label classification (pet type and breed, 39 labels in total) and one for semantic segmentation. The network is designed to detect at most one cat and one dog per image.
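In a multi-label head, each output is typically scored independently with a sigmoid rather than through a single softmax. The sketch below shows one way the 39 logits could be decoded into at most one cat breed and one dog breed. It assumes a hypothetical label layout (index 0 = cat, index 1 = dog, indices 2 to 38 = 37 breeds; the demo treats indices 2 to 13 as cat breeds and 14 to 38 as dog breeds) and a 0.5 threshold. PetNet's actual label order and decoding rule may differ.

```python
import math
from typing import Dict, Optional, Sequence

# Hypothetical label layout (an assumption, not taken from the PetNet code):
# index 0 = "cat", index 1 = "dog", indices 2..38 = the 37 breeds.
NUM_LABELS = 39


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def decode(
    logits: Sequence[float],
    cat_breeds: Sequence[int],
    dog_breeds: Sequence[int],
    threshold: float = 0.5,
) -> Dict[str, Optional[int]]:
    """Turn 39 multi-label logits into at most one cat breed and one dog breed.

    Each label gets its own sigmoid probability. A species counts as present
    if its pet-type probability exceeds `threshold`; the breed reported for it
    is the highest-scoring breed index belonging to that species.
    """
    if len(logits) != NUM_LABELS:
        raise ValueError(f"expected {NUM_LABELS} logits, got {len(logits)}")
    probs = [sigmoid(v) for v in logits]

    def best_breed(species_idx: int, breeds: Sequence[int]) -> Optional[int]:
        if probs[species_idx] <= threshold:
            return None  # species not detected in the image
        return max(breeds, key=lambda i: probs[i])

    return {"cat": best_breed(0, cat_breeds), "dog": best_breed(1, dog_breeds)}


if __name__ == "__main__":
    demo = [-5.0] * NUM_LABELS
    demo[0] = 3.0  # strong "cat" logit
    demo[5] = 2.0  # strongest cat-breed logit
    print(decode(demo, cat_breeds=range(2, 14), dog_breeds=range(14, 39)))
    # → {'cat': 5, 'dog': None}
```

Restricting the breed search to the detected species is what enforces the "at most one cat and one dog" constraint in this sketch.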

To train the model, follow these steps:

- Download the dataset from this link. Place the `data` folder and the `pets_dataset_info.csv` file inside the project's `dataset` folder.

- Build the Docker image:

```bash
docker build --platform linux/amd64 -t petnet:latest .
```

- To run the Docker image and train the model, use the following commands:

```bash
docker run -it petnet:latest
python3 src/train.py
```

Hyperparameters can be adjusted in `src/config.py`. The best-performing model is saved as `weights/model.pth`.
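Saving only the best-performing model usually means tracking a validation metric across epochs and overwriting the checkpoint whenever it improves. The sketch below illustrates that pattern with a hypothetical `BestCheckpoint` class. The metric PetNet monitors is not stated here, so the direction (`mode`) is a parameter, and the `save_fn` callback stands in for the real save call (for example `torch.save(model.state_dict(), path)` in PyTorch).

```python
import math
from typing import Callable


class BestCheckpoint:
    """Hypothetical helper: save a model only when a validation metric improves."""

    def __init__(self, save_fn: Callable[[str], None],
                 path: str = "weights/model.pth", mode: str = "min") -> None:
        if mode not in ("min", "max"):
            raise ValueError("mode must be 'min' (e.g. loss) or 'max' (e.g. IoU)")
        self.save_fn = save_fn  # e.g. lambda p: torch.save(model.state_dict(), p)
        self.path = path
        self.mode = mode
        # Start from the worst possible value so the first epoch always saves.
        self.best = math.inf if mode == "min" else -math.inf

    def update(self, value: float) -> bool:
        """Record one epoch's metric; save and return True if it is a new best."""
        improved = value < self.best if self.mode == "min" else value > self.best
        if improved:
            self.best = value
            self.save_fn(self.path)
        return improved
```

In a training loop, `ckpt.update(val_loss)` would be called once per epoch after validation.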

Training and validation losses and metrics are logged with Weights & Biases. The recorded metrics include mean classification precision and recall, segmentation IoU, and loss, for both the training and validation sets. The evaluation report is available here.

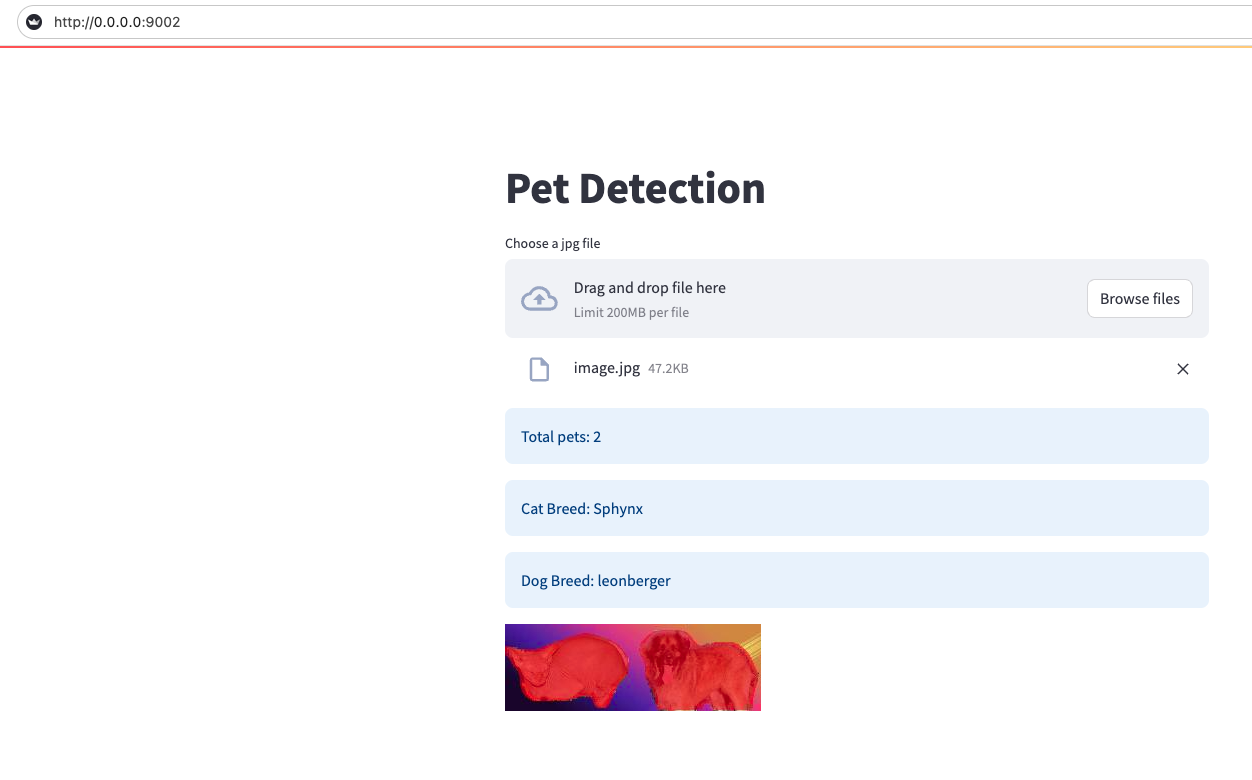
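For reference, the sketch below computes binary-mask IoU (intersection over union of pet pixels) and multi-label precision and recall in plain Python. It assumes masks and label vectors are nested lists of 0/1 values and that "mean" precision and recall are unweighted averages over labels. The project's own metric code, including how it handles empty denominators, may differ.

```python
from typing import List, Sequence, Tuple


def binary_iou(pred: Sequence[Sequence[int]], target: Sequence[Sequence[int]]) -> float:
    """IoU of two same-shaped binary masks (1 = pet pixel, 0 = background)."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += int(bool(p) and bool(t))
            union += int(bool(p) or bool(t))
    # Convention used here: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def macro_precision_recall(y_true: Sequence[Sequence[int]],
                           y_pred: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Macro-averaged precision and recall over the labels of 0/1 vectors.

    A label whose precision or recall has an empty denominator scores 0.0.
    """
    num_labels = len(y_true[0])
    precisions: List[float] = []
    recalls: List[float] = []
    for j in range(num_labels):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t[j] and p[j])
        fp = sum(1 for t, p in zip(y_true, y_pred) if not t[j] and p[j])
        fn = sum(1 for t, p in zip(y_true, y_pred) if t[j] and not p[j])
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return sum(precisions) / num_labels, sum(recalls) / num_labels
```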

PetNet exposes a REST API, served with FastAPI, for model inference. A Streamlit user interface provides a convenient way to interact with the API. To deploy the API, follow these steps:

- Build the Docker image as described in the model training steps above.

- Launch the service:

```bash
docker compose up
```

- Access the UI at http://0.0.0.0:9002. You can upload images and receive predictions, including pet type, breed, and the segmentation mask.
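Besides the UI, any HTTP client can post an image to the API. The standard-library sketch below sends an image as `multipart/form-data` and parses a JSON reply. The URL `http://localhost:8000/predict`, the form field name `file`, and the JSON response format are all hypothetical; check the FastAPI app (FastAPI serves interactive docs at `/docs` by default) for the real route, port, and schema.

```python
import json
import os
import urllib.request
import uuid
from typing import Tuple

# Hypothetical endpoint: the API's host, port and route are not documented here.
API_URL = "http://localhost:8000/predict"


def build_multipart(field: str, filename: str, data: bytes,
                    content_type: str = "image/jpeg") -> Tuple[bytes, str]:
    """Encode one file as a multipart/form-data body; return (body, Content-Type)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def predict(image_path: str, url: str = API_URL, field: str = "file") -> dict:
    """POST an image to the (assumed) prediction route and decode a JSON reply."""
    with open(image_path, "rb") as f:
        body, ctype = build_multipart(field, os.path.basename(image_path), f.read())
    req = urllib.request.Request(url, data=body, headers={"Content-Type": ctype},
                                 method="POST")
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())
```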

Alternatively, to run inference locally without Docker:

- Download the trained model from this link and place it in the `weights` directory as `model.pth`.

- Create and activate a virtual environment, then install the required packages:

```bash
pip install -r requirements.txt
```

- With the environment active, run the following command in a terminal to perform inference on an image file:

```bash
python src/inference.py --img_path /path/to/image.jpg
```

The script prints the predicted labels in the terminal and saves the segmentation mask as `/path/to/image_predicted_mask.jpg`.
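The command-line behavior described above can be approximated as follows. This is a hypothetical sketch, not the contents of `src/inference.py`: it parses `--img_path` with `argparse` and derives the mask path by appending `_predicted_mask` to the file stem, matching the documented example. Keeping the input's extension for non-JPEG inputs is an assumption.

```python
import argparse
from pathlib import Path
from typing import List, Optional


def mask_output_path(img_path: str) -> Path:
    """Derive the mask path: /path/to/image.jpg -> /path/to/image_predicted_mask.jpg."""
    p = Path(img_path)
    # Assumption: the mask keeps the input's extension.
    return p.with_name(f"{p.stem}_predicted_mask{p.suffix}")


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse the single documented flag, --img_path."""
    parser = argparse.ArgumentParser(description="Run PetNet inference on one image.")
    parser.add_argument("--img_path", required=True, help="path to the input image")
    return parser.parse_args(argv)
```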

Run the unit tests by executing `pytest` from the root directory of the project.
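By default, pytest collects files named `test_*.py` or `*_test.py` and runs functions whose names start with `test`, using plain `assert` statements. A minimal test in that style is sketched below; the file name and the `binarize_mask` helper are hypothetical and not part of the PetNet codebase.

```python
# tests/test_mask.py (hypothetical file name)
from typing import List, Sequence


def binarize_mask(probs: Sequence[Sequence[float]], threshold: float = 0.5) -> List[List[int]]:
    """Hypothetical helper: map per-pixel pet probabilities to a 0/1 mask."""
    return [[1 if p > threshold else 0 for p in row] for row in probs]


def test_binarize_mask_thresholds_each_pixel():
    # Values exactly at the threshold are treated as background.
    assert binarize_mask([[0.9, 0.2], [0.5, 0.51]]) == [[1, 0], [0, 1]]


def test_binarize_mask_preserves_shape():
    mask = binarize_mask([[0.0] * 3 for _ in range(2)])
    assert len(mask) == 2 and all(len(row) == 3 for row in mask)
```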

The exploratory data analysis (EDA) notebook is available here.