This repo is the official implementation of the two-stage data association approach presented in the paper “A Two-Stage Data Association Approach for 3D Multi-Object Tracking”.

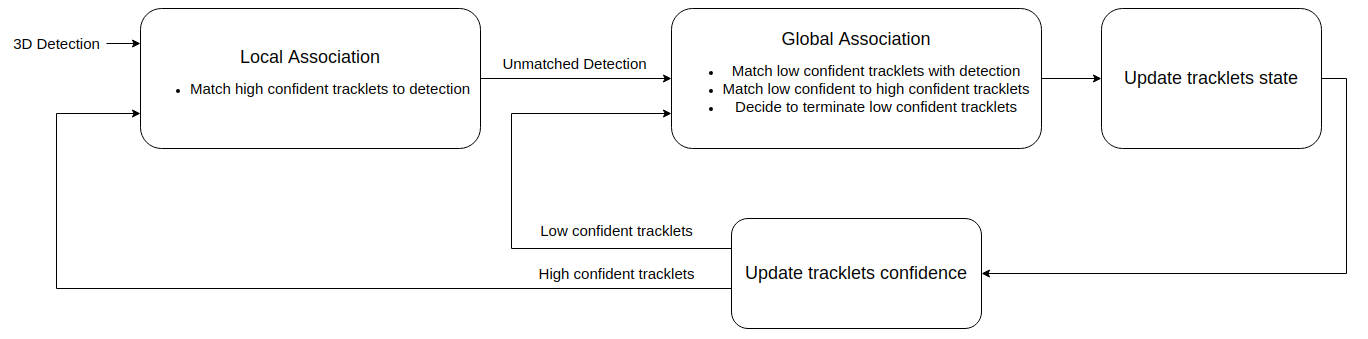

This tracking algorithm follows the tracking-by-detection paradigm. At every time step, it receives a set of 3D bounding boxes as input and assigns an ID to each box, where each ID denotes a unique trajectory. An overview of the algorithm is shown in Figure 1.

Figure 1. The pipeline of the two-stage data association. The first stage, local association, establishes correspondences between tracklets and the detections at the current time step. Then, the global association stage matches each low-confidence tracklet with either a high-confidence tracklet or a left-over detection, or terminates it.
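
To make the two stages easier to follow, here is a minimal, simplified sketch in Python. It is not the method from the paper. The cost (Euclidean distance between box centers), the greedy matcher, the gate values local_gate and global_gate, the rule "a tracklet is high-confidence if and only if it was matched in the local stage", and the Tracklet class are all hypothetical choices made only for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Hashable, List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Tracklet:  # HYPOTHETICAL container, not a class from this repo
    track_id: int
    centers: List[Point] = field(default_factory=list)  # history of box centers


def greedy_match(costs: Dict[Tuple[Hashable, Hashable], float], max_cost: float):
    """Pick the cheapest (row, col) pairs first; each row and col is used at most once."""
    pairs, used_rows, used_cols = [], set(), set()
    for (row, col), cost in sorted(costs.items(), key=lambda kv: kv[1]):
        if cost > max_cost:
            break  # all remaining pairs are at least as expensive
        if row in used_rows or col in used_cols:
            continue
        pairs.append((row, col))
        used_rows.add(row)
        used_cols.add(col)
    return pairs


def two_stage_step(tracklets: List[Tracklet], detections: List[Point],
                   local_gate: float = 2.0, global_gate: float = 4.0):
    # Stage 1 (local association): existing tracklets vs. current detections.
    local_costs = {(i, j): math.dist(t.centers[-1], d)
                   for i, t in enumerate(tracklets) for j, d in enumerate(detections)}
    local_pairs = greedy_match(local_costs, local_gate)
    for i, j in local_pairs:
        tracklets[i].centers.append(detections[j])

    # ASSUMPTION: a tracklet is high-confidence iff it was matched in stage 1.
    high = [i for i, _ in local_pairs]
    high_set = set(high)
    matched_dets = {j for _, j in local_pairs}
    low = [i for i in range(len(tracklets)) if i not in high_set]
    leftover = [j for j in range(len(detections)) if j not in matched_dets]

    # Stage 2 (global association): each low-confidence tracklet vs. the
    # high-confidence tracklets and the left-over detections.
    global_costs = {}
    for i in low:
        last = tracklets[i].centers[-1]
        for k in high:
            global_costs[(i, ("track", k))] = math.dist(last, tracklets[k].centers[-1])
        for j in leftover:
            global_costs[(i, ("det", j))] = math.dist(last, detections[j])

    # Unmatched low-confidence tracklets are terminated by default.
    decisions = {tracklets[i].track_id: ("terminate", None) for i in low}
    used_dets = set()
    for i, (kind, idx) in greedy_match(global_costs, global_gate):
        if kind == "track":
            decisions[tracklets[i].track_id] = ("merge_with_track", tracklets[idx].track_id)
        else:
            tracklets[i].centers.append(detections[idx])
            decisions[tracklets[i].track_id] = ("extend_with_det", idx)
            used_dets.add(idx)

    # Left-over detections not claimed in stage 2 would start new tracklets.
    new_track_dets = [j for j in leftover if j not in used_dets]
    return decisions, new_track_dets
```

A real tracker would more likely use 3D IoU or a Mahalanobis distance as the cost and an optimal (Hungarian) assignment instead of the greedy one; the greedy version is kept here only so the sketch has no dependencies.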

A demo on a sequence of KITTI is shown below:

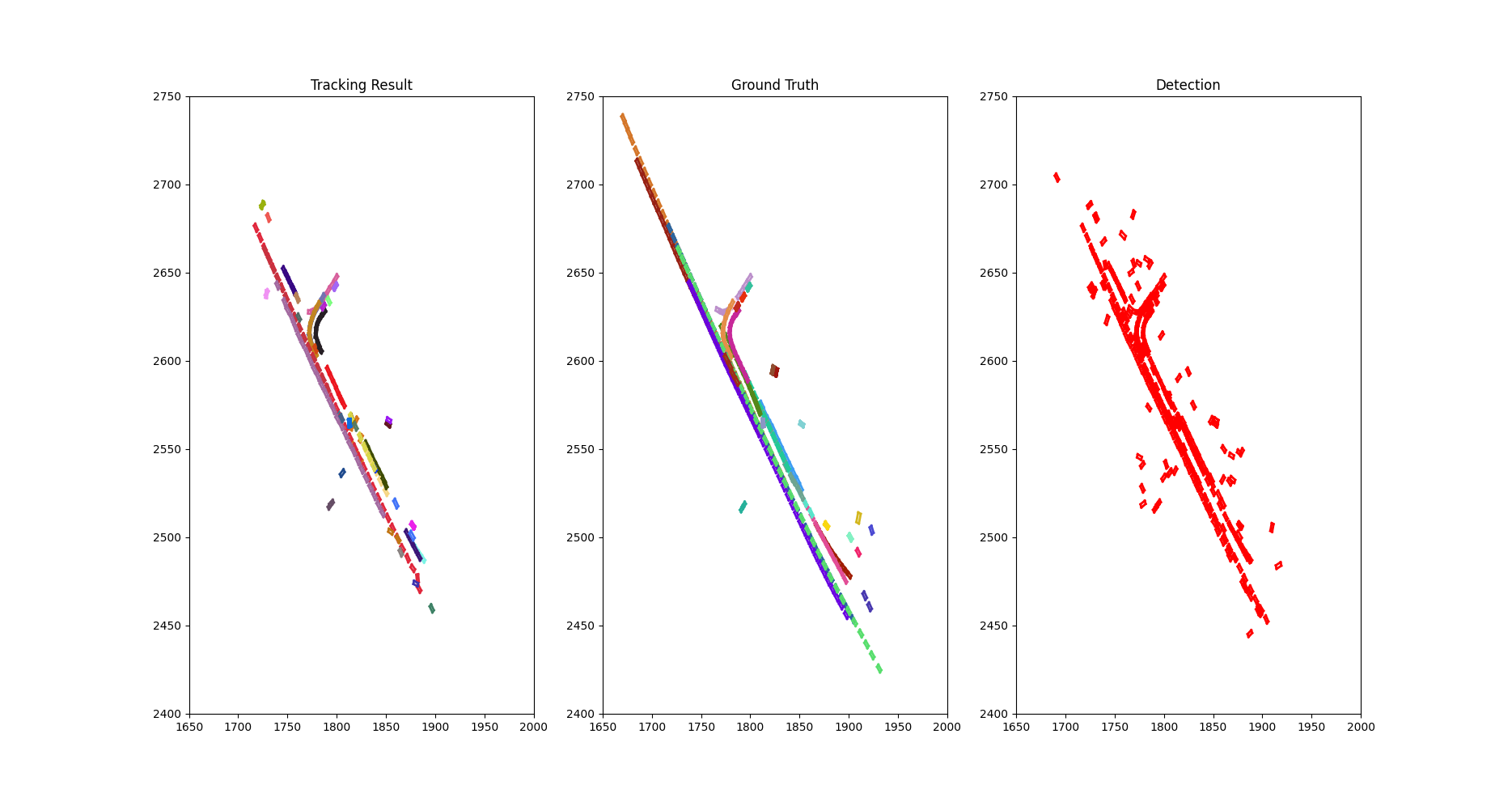

In addition, qualitative performance on NuScenes is shown in Figure 2 by accumulating the ground truth, the tracking results, and the object detection results (generated by MEGVII) across a scene. In this figure, boxes with the same color belong to the same trajectory.

Figure 2. Bird's-eye view of the tracking results for class car compared with the ground truth of scene 0796 (NuScenes), accumulated through time. Each rectangle represents a car, and each color is associated with a track ID.

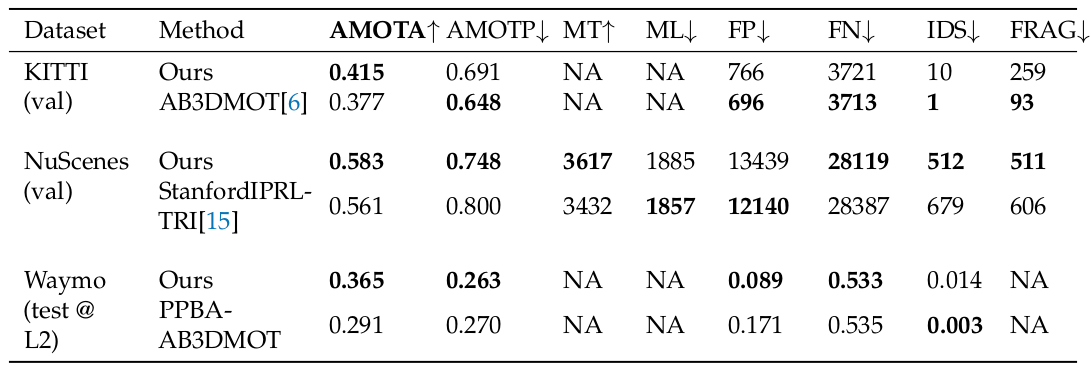
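
The accumulation behind Figure 2 amounts to grouping every box of a scene by its track ID and giving each ID one color. A minimal sketch, assuming a hypothetical input format in which each frame is a list of (track_id, box) pairs and the color palette is supplied by the caller; this is not the plotting code of this repo.

```python
from typing import Dict, Hashable, List, Sequence, Tuple


def accumulate_by_track(frames: Sequence[Sequence[Tuple[Hashable, object]]]) -> Dict[Hashable, list]:
    """Group the boxes of a scene by track ID, preserving the order of first appearance."""
    tracks: Dict[Hashable, list] = {}
    for frame in frames:  # frames are assumed to be in temporal order
        for track_id, box in frame:
            tracks.setdefault(track_id, []).append(box)
    return tracks


def assign_colors(track_ids, palette: List[str]) -> Dict[Hashable, str]:
    """One color per track ID; colors repeat once the palette is exhausted."""
    return {tid: palette[k % len(palette)] for k, tid in enumerate(track_ids)}
```

With a palette shorter than the number of tracks, distinct trajectories will share a color, so a plot like Figure 2 needs a reasonably large palette.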

The performance of MOT algorithms is measured quantitatively by AMOTA (average multi-object tracking accuracy). Table 1 compares our tracking algorithm with the AB3DMOT family of trackers on this metric and on other secondary metrics.

Table 1. Quantitative performance of our model on the KITTI validation set, the NuScenes validation set, and the Waymo test set.
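
AMOTA averages MOTA over several recall thresholds. In the AB3DMOT-style definition, AMOTA = (1/L) Σ over r in {1/L, 2/L, ..., 1} of (1 - (FP_r + FN_r + IDS_r) / num_gt), where FP_r, FN_r, and IDS_r are the false positives, false negatives, and identity switches at the operating point whose recall is r. The sketch below only illustrates this averaging, assuming the per-recall counts are already given; the NuScenes variant additionally rescales and clips MOTA at each recall, and reported numbers should always come from the official evaluation code.

```python
from typing import Sequence, Tuple


def mota_at_recall(fp: int, fn: int, ids: int, num_gt: int) -> float:
    """MOTA at one operating point: 1 - (FP + FN + IDS) / num_gt."""
    return 1.0 - (fp + fn + ids) / num_gt


def amota(stats: Sequence[Tuple[int, int, int]], num_gt: int) -> float:
    """Average MOTA over L operating points (recall 1/L, 2/L, ..., 1).

    stats[k] holds (FP, FN, IDS) measured at the confidence threshold whose
    recall is (k + 1) / L; finding those thresholds is out of scope here.
    """
    if not stats:
        raise ValueError("need at least one recall threshold")
    return sum(mota_at_recall(fp, fn, ids, num_gt) for fp, fn, ids in stats) / len(stats)
```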

The Python packages required to run the code in this repo are listed in requirements.txt and can be installed with:

    $ pip install -r requirements.txt

This repo supports three datasets: KITTI, NuScenes, and Waymo. The code assumes the following structure for the dataset directory:

    dataset
    |
    |__ kitti
    |   |___ tracking
    |   |   |___ data_tracking_calib
    |   |   |   |___ training
    |   |   |   |   |___ calib
    |   |   |   |   |   |___ 0000.txt
    |   |   |   |___ testing
    |   |   |___ data_tracking_image_2
    |   |   |   |___ training
    |   |   |   |   |___ image_02
    |   |   |   |   |   |___ 0000
    |   |   |   |___ testing
    |   |   |___ data_tracking_label_2
    |   |   |   |___ training
    |   |   |   |   |___ label_02
    |   |   |   |   |   |___ 0000.txt
    |   |   |___ data_tracking_oxts
    |   |   |   |___ training
    |   |   |   |   |___ oxts
    |   |   |   |   |   |___ 0000.txt
    |   |   |   |___ testing
    |
    |__ nuscenes
    |   |___ v1.0-mini
    |   |   |___ maps
    |   |   |___ samples
    |   |   |___ sweeps
    |   |   |___ v1.0-mini
    |   |___ v1.0-trainval
    |   |   |___ maps
    |   |   |___ samples
    |   |   |___ sweeps
    |   |   |___ v1.0-trainval
    |   |___ nusc-detection
    |   |   |___ detection-megvii
    |   |   |   |___ megvii_test.json
    |   |   |   |___ megvii_train.json
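
A quick way to catch path mistakes before running anything is to check for a few sample files from the layout above. The sketch below does that; the list of sample paths is a hypothetical selection (sequence 0000 and the mini split), not something the repo's own code reads, so adjust it to the datasets and sequences you actually downloaded.

```python
from pathlib import Path
from typing import List, Sequence

# HYPOTHETICAL sample entries taken from the directory layout above.
EXPECTED = [
    "kitti/tracking/data_tracking_calib/training/calib/0000.txt",
    "kitti/tracking/data_tracking_image_2/training/image_02/0000",
    "kitti/tracking/data_tracking_oxts/training/oxts/0000.txt",
    "nuscenes/v1.0-mini/v1.0-mini",
    "nuscenes/nusc-detection/detection-megvii/megvii_train.json",
]


def missing_paths(dataset_root: str, expected: Sequence[str] = EXPECTED) -> List[str]:
    """Return the expected relative paths that do not exist under dataset_root."""
    root = Path(dataset_root)
    return [rel for rel in expected if not (root / rel).exists()]
```

For example, missing_paths("dataset") returns an empty list when every sample path is present; if you only use one dataset, the entries of the other one will simply be reported as missing.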

To use this repo on the KITTI tracking dataset, please download the dataset from http://www.cvlibs.net/datasets/kitti/eval_tracking.php. Specifically, the following parts are required:

- GPS/IMU data

- Training labels of the tracking dataset (needed to run the evaluation on KITTI)

- Camera calibration matrices

The 3D detections for the validation and test sets are provided in data/kitti/pointrcnn_detection, so images and point clouds are not necessary. However, if you wish to run kitti_demo_tracking.py, please download the left color images of the tracking dataset, so that tracking results can be visualized by projecting the 3D objects onto the images. Usage of kitti_demo_tracking.py is as follows:

    $ python kitti_demo_tracking.py sequence_name tracked_obj_class
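
For reference, the projection used in such a visualization can be sketched as follows, assuming KITTI's label conventions: a box is given by its bottom-face center (x, y, z) in rectified camera coordinates, its dimensions (h, w, l), and its yaw ry about the camera Y axis, and P is the 3x4 P2 matrix from the sequence's calibration file. The function names are hypothetical, and this is not necessarily how kitti_demo_tracking.py does it.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def box_corners_camera(x: float, y: float, z: float,
                       h: float, w: float, l: float, ry: float) -> List[Point3]:
    """8 corners of a KITTI-style box in camera coordinates (x right, y down, z forward)."""
    xs = [l / 2, l / 2, -l / 2, -l / 2, l / 2, l / 2, -l / 2, -l / 2]
    ys = [0.0, 0.0, 0.0, 0.0, -h, -h, -h, -h]  # y points down, so the top face is at -h
    zs = [w / 2, -w / 2, -w / 2, w / 2, w / 2, -w / 2, -w / 2, w / 2]
    c, s = math.cos(ry), math.sin(ry)
    # Rotate about the Y axis, then translate to the box location.
    return [(c * xo + s * zo + x, yo + y, -s * xo + c * zo + z)
            for xo, yo, zo in zip(xs, ys, zs)]


def project_to_image(points: Sequence[Point3],
                     P: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Project 3D camera-frame points to pixels with a 3x4 projection matrix P."""
    pixels = []
    for X, Y, Z in points:
        u, v, depth = (P[r][0] * X + P[r][1] * Y + P[r][2] * Z + P[r][3] for r in range(3))
        if depth <= 0:
            raise ValueError("point is behind the camera")
        pixels.append((u / depth, v / depth))
    return pixels
```

Drawing the box then only requires connecting the projected corners, for example the first four (bottom face) and the last four (top face).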

To generate tracking results on KITTI’s validation set and evaluate them with the AMOTA metric, you can use kitti_run.sh. Please remember to set the object class of interest (Car, Pedestrian, or Cyclist) in this file before running it.

The NuScenes devkit (the nuscenes-devkit Python package) is required to interface with the NuScenes dataset. Please install it in addition to the packages required by this repo.

Once the devkit is installed, you can run nuscenes_demo_tracking.py to see a demonstration of tracking on a NuScenes scene; for this, downloading the mini partition of the NuScenes dataset is sufficient. Usage of nuscenes_demo_tracking.py is as follows:

    $ python nuscenes_demo_tracking.py scene_index

To generate tracking results on the NuScenes validation or test set, you need to download the detection files (available in the Baseline section of https://www.nuscenes.org/tracking?externalData=all&mapData=all&modalities=Any) or provide your own detections. After placing the detection files in the directory structure shown above, you can run nuscenes_generate_tracking.py by invoking nuscenes_run.sh.
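
The MEGVII files follow the NuScenes detection result format: a JSON object whose "results" field maps each sample_token to a list of boxes with fields such as translation, size, rotation, velocity, detection_name, and detection_score. Detections of your own are presumably easiest to plug in if they use the same format. The sketch below loads such a file and keeps one class above a score threshold; load_detections is a hypothetical helper, and exactly which fields this repo consumes is an assumption not confirmed here.

```python
import json
from collections import defaultdict
from typing import Dict, List


def load_detections(path: str, class_name: str, min_score: float = 0.0) -> Dict[str, List[dict]]:
    """Return {sample_token: [box, ...]} for one class, dropping low-score boxes.

    Samples with no box of class_name are left out of the result.
    """
    with open(path) as f:
        results = json.load(f)["results"]
    per_sample: Dict[str, List[dict]] = defaultdict(list)
    for sample_token, boxes in results.items():
        for box in boxes:
            if box["detection_name"] == class_name and box["detection_score"] >= min_score:
                per_sample[sample_token].append(box)
    return dict(per_sample)
```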


[1] https://github.com/eddyhkchiu/mahalanobis_3d_multi_object_tracking

[2] https://github.com/xinshuoweng/AB3DMOT