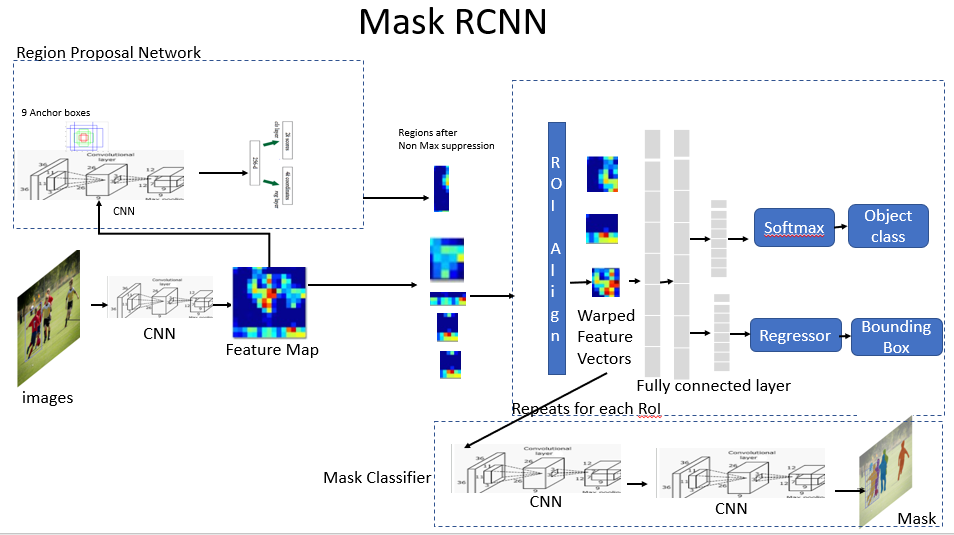

This is an implementation of Mask R-CNN on Python 3, Keras, and TensorFlow, based on Matterport's version. The model generates bounding boxes and segmentation masks for each instance of an object in the image, using a Feature Pyramid Network (FPN) with a ResNet-101 backbone.

- Mask R-CNN implementation built on TensorFlow and Keras.

- Model training with data augmentation and various configurations.

- Custom mAP callback during the training process for initial evaluation.

- Training with 5-fold cross-validation strategy.

- Evaluation with mean Average Precision (mAP) using the COCO metric `AP@.50:.05:.95` and the PASCAL VOC metric `AP@.50`. For more information, read here.

- Jupyter notebook examples to visualize the detection pipeline at every step and understand more about Mask R-CNN.

- Convert predicted results to the VGG annotation format to expand the dataset for further training. Achieved finer, more accurate masks with less labeling time than manual annotation (~3 quarters at large scale).
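As a rough illustration of the two metrics listed above, the sketch below computes average precision at a single IoU threshold (the PASCAL VOC style `AP@.50`) and then averages it over the thresholds 0.50 to 0.95 in steps of 0.05 (the COCO style `AP@.50:.05:.95`). It is a simplified example under stated assumptions: one class, one image, precomputed IoU values, and all-point interpolation of the precision-recall curve. The helper names `average_precision` and `coco_style_ap` are hypothetical and are not taken from `evaluation.py`.

```python
def average_precision(ious, scores, num_gt, iou_threshold):
    """AP for one class at a single IoU threshold (simplified sketch).

    ious[i][j] is the IoU between prediction i and ground-truth instance j;
    scores[i] is the confidence of prediction i.
    """
    if num_gt == 0:
        return 0.0
    # Visit predictions from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    matched = set()
    hits = []  # 1 = true positive, 0 = false positive, in score order
    for i in order:
        # Greedily match to the best still-unmatched ground truth.
        best_j, best_iou = None, iou_threshold
        for j in range(num_gt):
            if j not in matched and ious[i][j] >= best_iou:
                best_j, best_iou = j, ious[i][j]
        if best_j is None:
            hits.append(0)
        else:
            matched.add(best_j)
            hits.append(1)

    # Precision and recall after each prediction.
    precisions, recalls, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / rank)
        recalls.append(tp / num_gt)

    # Make precision non-increasing as recall grows.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Area under the stepwise precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def coco_style_ap(ious, scores, num_gt):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [(50 + 5 * k) / 100 for k in range(10)]
    return sum(average_precision(ious, scores, num_gt, t) for t in thresholds) / len(thresholds)


# Two predictions, two ground-truth instances (made-up IoU values).
ious = [[0.92, 0.0], [0.0, 0.62]]
scores = [0.9, 0.8]
print(average_precision(ious, scores, num_gt=2, iou_threshold=0.5))  # AP@.50
print(coco_style_ap(ious, scores, num_gt=2))                         # AP@.50:.05:.95
```

The stricter thresholds penalize masks that overlap the ground truth only loosely, which is why the COCO style score is usually lower than `AP@.50` for the same predictions.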

It is recommended to keep the dataset folder, the testing image/video folder, and the model weights under the same root folder, following the structure below:

    ├── notebooks                         # several notebooks from Matterport's Mask R-CNN
    ├── dataset                           # place the dataset here
    │   └── <dataset_name>
    │       ├── train
    │       │   ├── <image_file 1>        # accepts .jpg or .jpeg files
    │       │   ├── <image_file 2>
    │       │   ├── ...
    │       │   └── via_export_json.json  # the single corresponding annotation file; must use this exact name
    │       ├── val
    │       └── test
    ├── logs                              # log folder
    ├── mrcnn                             # model folder
    ├── test                              # test folder
    │   ├── image
    │   └── video
    ├── trained_weight                    # pre-trained model and trained weight folder
    │   ...
    ├── environment.yml                   # environment setup file
    ├── README.md
    ├── dataset.py                        # dataset configuration
    ├── evaluation.py                     # weight evaluation
    └── training.py                       # training model
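Before training, it can help to confirm that a dataset folder matches the layout above. The following is a minimal sketch and not part of this repository: `check_dataset_layout` is a hypothetical helper, and it assumes that `val` follows the same convention as `train` (images plus a `via_export_json.json` file), which the tree above does not state explicitly.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg"}         # image types named in the tree above
ANNOTATION_FILE = "via_export_json.json"   # required annotation file name


def check_dataset_layout(dataset_dir, subsets=("train", "val")):
    """Return a list of problems found; an empty list means the layout looks fine."""
    root = Path(dataset_dir)
    problems = []
    for subset in subsets:
        subset_dir = root / subset
        if not subset_dir.is_dir():
            problems.append(f"missing folder: {subset_dir}")
            continue
        if not (subset_dir / ANNOTATION_FILE).is_file():
            problems.append(f"missing annotation file: {subset_dir / ANNOTATION_FILE}")
        # Case-insensitive match on the file extension.
        images = [p for p in subset_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
        if not images:
            problems.append(f"no .jpg/.jpeg images in: {subset_dir}")
    return problems


if __name__ == "__main__":
    # Placeholder path; replace with dataset/<dataset_name>.
    for problem in check_dataset_layout("dataset/my_dataset"):
        print(problem)
```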

- Conda environment setup:

      conda env create -f environment.yml
      conda activate mask-rcnn

- Training:

  * Train a new model starting from pre-trained weights:

        python3 training.py --dataset=/path/to/dataset --weight=/path/to/pretrained/weight.h5

  * Resume training a model:

        python3 training.py --dataset=/path/to/dataset --continue_train=/path/to/latest/weights.h5

- Evaluating:

      python3 evaluation.py --dataset=/path/to/dataset --weights=/path/to/pretrained/weight.h5

- Testing:

  * Image:

        python3 image_detection.py --dataset=/path/to/dataset --weights=/path/to/pretrained/weight.h5 --image=/path/to/image/directory

  * Video (update the weight path and dataset path in `mrcnn.visualize_cv2` first):

        python3 video_detection.py --video_path=/path/to/testing/video/dir/

- Annotation generating:

      python3 annotating_generation.py --dataset=/path/to/dataset --weights=/path/to/pretrained/weight.h5 --image=/path/to/image/directory

- View training plot:

      tensorboard --logdir=logs/path/to/trained/dir

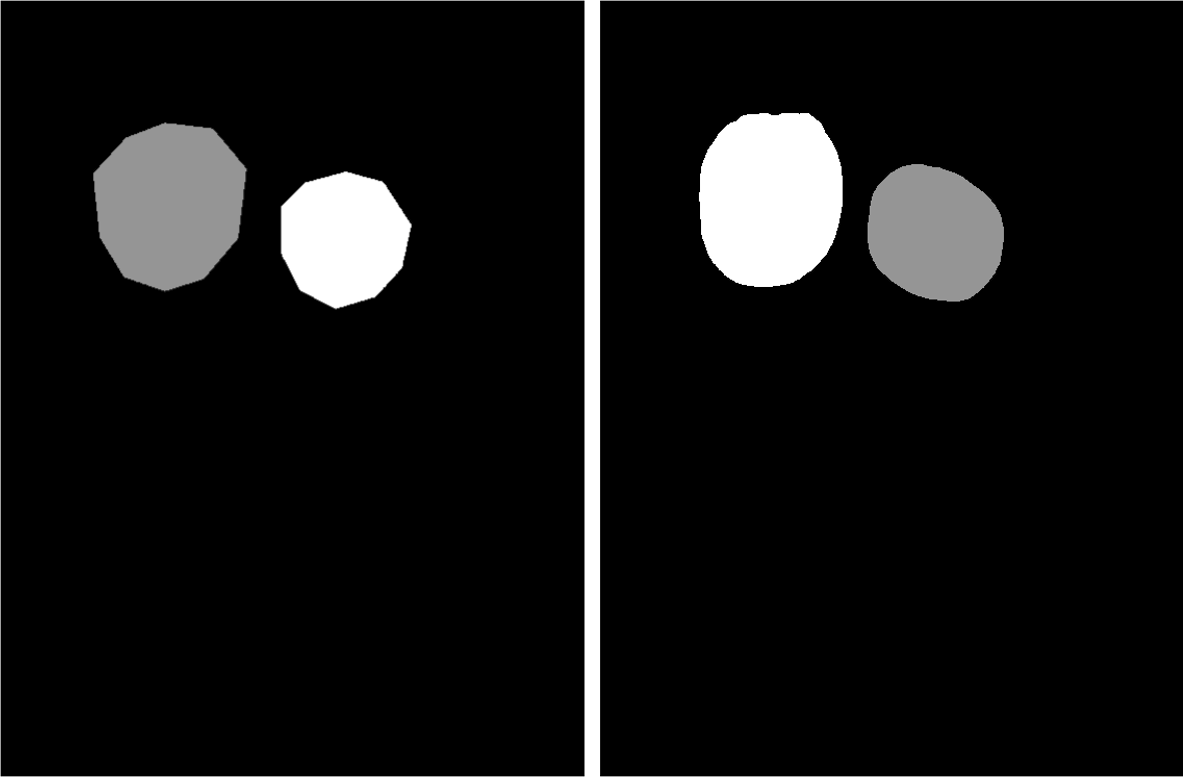

Annotated images for this implementation are created with VGG Image Annotator, using the following format:

    {
        'filename': '<image_name>.jpg',
        'regions': {
            '0': {
                'region_attributes': {},
                'shape_attributes': {
                    'all_points_x': [...],
                    'all_points_y': [...],
                    'name': <class_name>
                }
            },
            ... more regions ...
        },
        'size': <image_size>
    }
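To make the structure above concrete, here is a small sketch that builds one entry in this format from polygon outlines and reads it back as points plus a bounding box. The helpers `make_via_entry` and `read_regions` are hypothetical names, not functions from this repository. The sketch assumes each region stores its class name under `shape_attributes['name']`, as shown above, and passes `size` through unchanged.

```python
import json


def make_via_entry(filename, size, polygons, class_name):
    """Build one annotation entry; polygons is a list of [(x, y), ...] outlines."""
    regions = {}
    for index, points in enumerate(polygons):
        # Regions are keyed by their index as a string: '0', '1', ...
        regions[str(index)] = {
            "region_attributes": {},
            "shape_attributes": {
                "all_points_x": [int(x) for x, _ in points],
                "all_points_y": [int(y) for _, y in points],
                "name": class_name,
            },
        }
    return {"filename": filename, "regions": regions, "size": size}


def read_regions(entry):
    """Yield (class_name, points, bbox) per region; bbox is (x_min, y_min, x_max, y_max)."""
    for region in entry["regions"].values():
        shape = region["shape_attributes"]
        xs, ys = shape["all_points_x"], shape["all_points_y"]
        points = list(zip(xs, ys))
        bbox = (min(xs), min(ys), max(xs), max(ys))
        yield shape["name"], points, bbox


# Illustrative values only: one triangular outline for a class called "object".
entry = make_via_entry("example.jpg", 12345, [[(10, 20), (40, 20), (40, 60)]], "object")
print(json.dumps(entry, indent=2))
for name, points, bbox in read_regions(entry):
    print(name, bbox)
```

Keeping the x and y coordinates in two parallel lists mirrors the annotation format, so an entry produced this way can be serialized with `json.dumps` without further conversion.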