Abstract: We implemented a simpler KAN network in no more than 30 lines of core code. It is 20 times faster and two orders of magnitude more accurate than the original KAN.

We've added a new parameter to ReLU-KAN, train_se. Setting it to true makes the start and end points of each basis function trainable parameters, which further improves the model's fitting ability.
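To make the idea of trainable start and end points concrete, here is a minimal pure-Python sketch. It is not the repository's code: the basis form (a squared product of two ReLUs, scaled to peak at 1), the helper names `basis`, `loss`, and `fit_endpoints`, and the use of finite-difference gradients in place of autograd are all assumptions made for illustration. Only the flag name `train_se` comes from this README.

```python
def relu(z):
    return z if z > 0.0 else 0.0

def basis(x, s, e):
    # Assumed ReLU-based bump on [s, e], scaled so its peak value is 1.
    return (relu(e - x) * relu(x - s)) ** 2 * 16.0 / (e - s) ** 4

def loss(x, y, s, e):
    # Squared error of a single basis function against a target y.
    return (basis(x, s, e) - y) ** 2

def fit_endpoints(x, y, s, e, train_se=False, lr=0.1, steps=50, h=1e-6):
    """Gradient descent on the endpoints (s, e); a no-op when train_se is False."""
    if not train_se:
        return s, e
    for _ in range(steps):
        # Central finite differences stand in for autograd in this sketch.
        grad_s = (loss(x, y, s + h, e) - loss(x, y, s - h, e)) / (2 * h)
        grad_e = (loss(x, y, s, e + h) - loss(x, y, s, e - h)) / (2 * h)
        s, e = s - lr * grad_s, e - lr * grad_e
    return s, e
```

With `train_se=False` the endpoints come back unchanged. With `train_se=True` the interval shifts so that the bump matches the target more closely, which is the extra fitting freedom described above.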

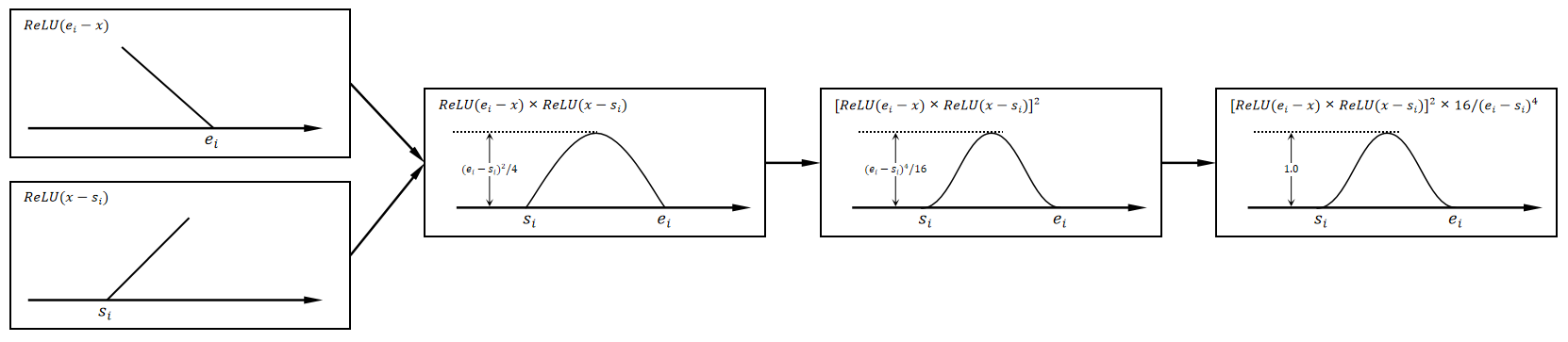

We use the simpler function

where,

Like

Based on this, we define a convolutional operation:

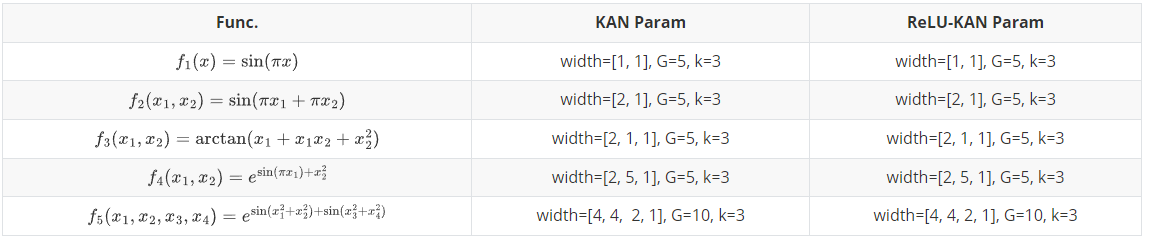

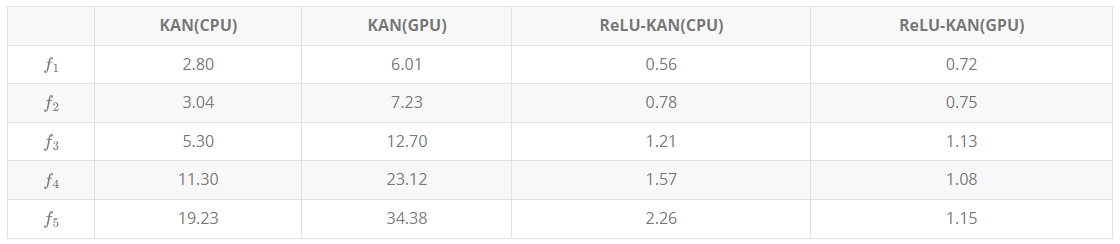
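The formulas themselves are not reproduced in this text, so the following is only a sketch of what a ReLU-based basis function and a layer built from it might look like. It assumes the basis is the squared product of two ReLUs on an interval [s, e], scaled by 16 / (e - s)^4 so that it peaks at 1. The grid layout in `make_grid` and the plain weighted sum in `layer_output` (standing in for the convolution mentioned above) are hypothetical as well.

```python
def relu(z):
    return z if z > 0.0 else 0.0

def relu_basis(x, s, e):
    """Assumed basis: (ReLU(e - x) * ReLU(x - s))^2 * 16 / (e - s)^4."""
    return (relu(e - x) * relu(x - s)) ** 2 * 16.0 / (e - s) ** 4

def make_grid(g, k):
    """Hypothetical grid: g + k overlapping intervals of width (k + 1) / g."""
    starts = [(i - k) / g for i in range(g + k)]
    ends = [s + (k + 1) / g for s in starts]
    return starts, ends

def layer_output(x, starts, ends, weights):
    # Weighted sum of all basis functions evaluated at a scalar input x.
    return sum(w * relu_basis(x, s, e) for w, s, e in zip(weights, starts, ends))
```

Under these assumptions each basis function is zero outside its interval and bell-shaped inside it, and it needs only subtraction, multiplication, and ReLU to evaluate.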

We compare the training speed before and after improvement on five functions:

The training times of both on CPU and GPU are shown in the following table:

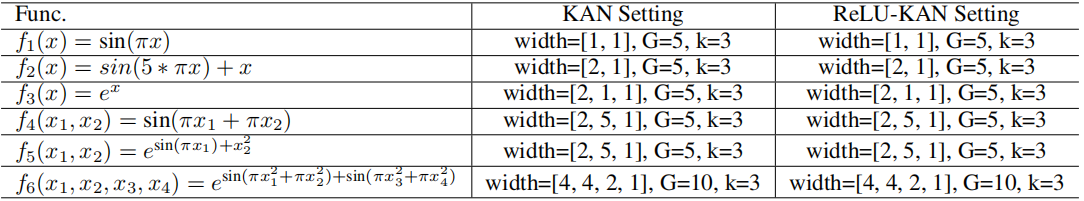

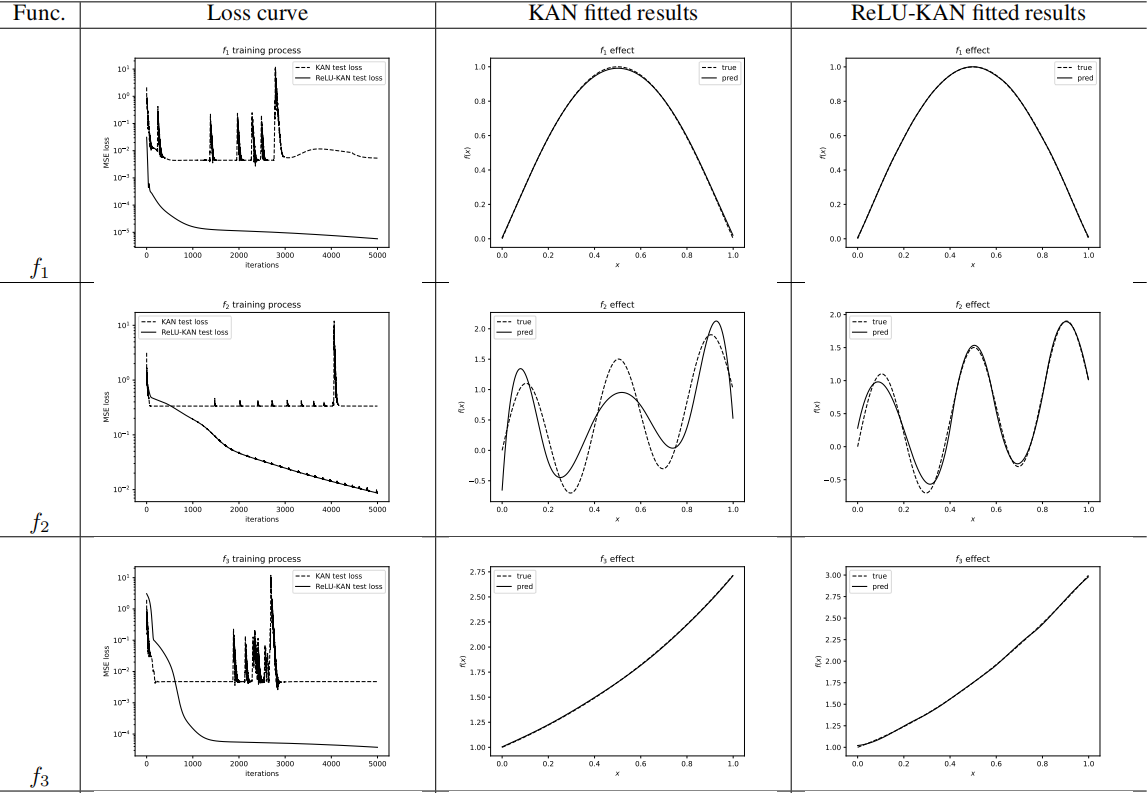

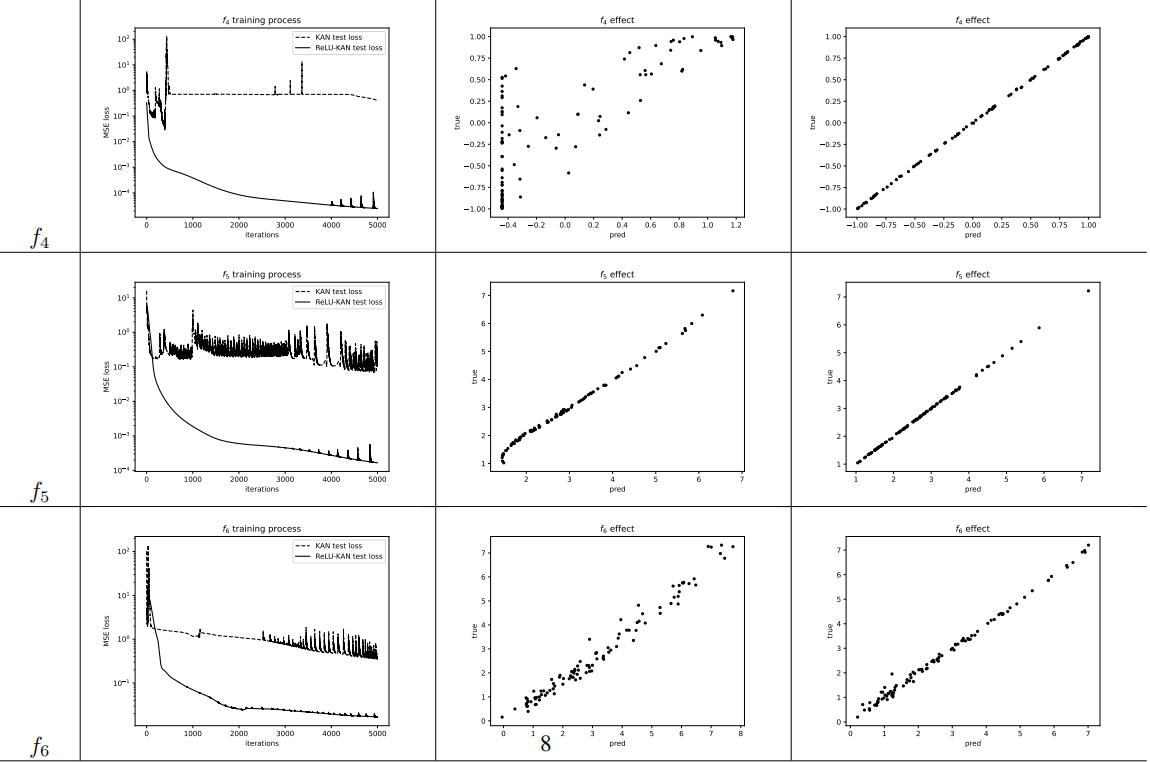
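For readers who want to reproduce this kind of comparison, here is a minimal sketch of a wall-clock timing harness. It is not taken from exp_speed.py: `time_training` and `dummy_training` are hypothetical names, and the dummy workload only stands in for a real training loop.

```python
import time

def time_training(train_fn, repeats=3):
    """Return the best wall-clock time in seconds over several runs of train_fn."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        train_fn()
        best = min(best, time.perf_counter() - start)
    return best

def dummy_training():
    # Placeholder workload standing in for a real training loop.
    total = 0.0
    for i in range(10000):
        total += (i % 7) * 0.5
    return total

if __name__ == "__main__":
    print(f"best of 3 runs: {time_training(dummy_training):.6f} s")
```

When timing on a GPU, work is usually launched asynchronously, so the device should be synchronized before the clock is read. Otherwise the measured time may cover only the kernel launches.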

We conducted fitting experiments on the following six functions,

and the fitting results are shown in the following table:

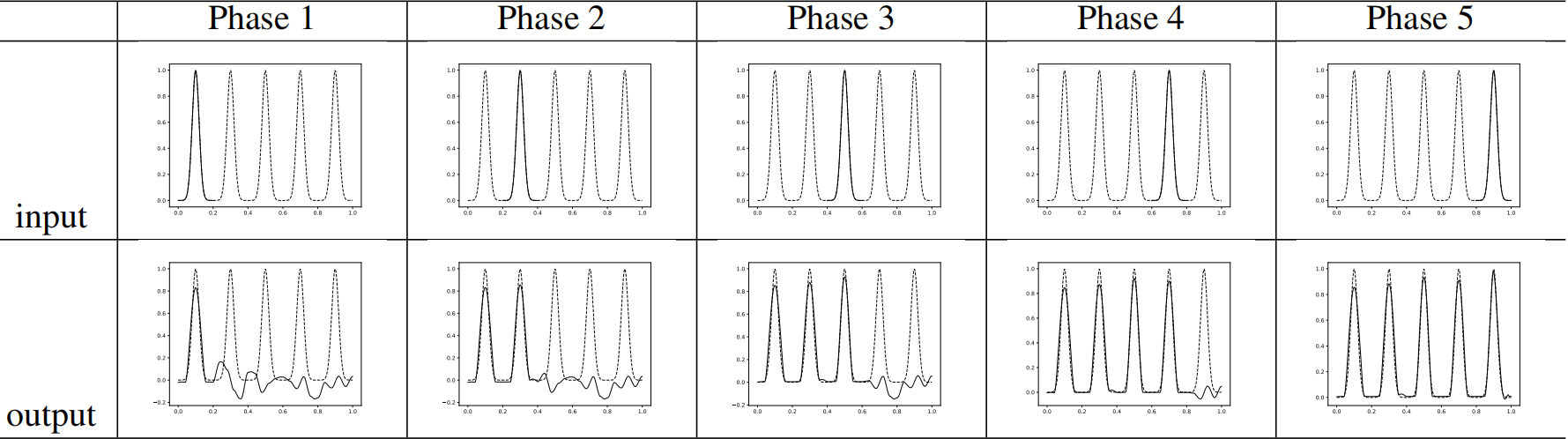

Like the original KAN, the new KAN can avoid catastrophic forgetting.
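One plausible reason is that each basis function is nonzero only on its own interval, so a training sample changes only the weights whose intervals contain it. The sketch below illustrates that effect under assumptions: the ReLU-based basis form, the four non-overlapping intervals, and the `sgd_step` and `train_two_phases` helpers are all hypothetical and are not taken from catastrophic_forgetting.py.

```python
def relu(z):
    return z if z > 0.0 else 0.0

def basis(x, s, e):
    # Assumed ReLU-based bump that is exactly zero outside [s, e].
    return (relu(e - x) * relu(x - s)) ** 2 * 16.0 / (e - s) ** 4

def sgd_step(weights, starts, ends, x, y, lr=0.1):
    """One squared-error SGD step on the weights of a single-layer model."""
    feats = [basis(x, s, e) for s, e in zip(starts, ends)]
    err = sum(w * f for w, f in zip(weights, feats)) - y
    # A weight only moves if its basis function is nonzero at x.
    return [w - lr * 2.0 * err * f for w, f in zip(weights, feats)]

def train_two_phases():
    starts = [0.0, 0.25, 0.5, 0.75]
    ends = [0.25, 0.5, 0.75, 1.0]
    weights = [0.0, 0.0, 0.0, 0.0]
    # Phase 1: samples from the left region only, target +1.
    for x in [0.05, 0.1, 0.15, 0.2]:
        weights = sgd_step(weights, starts, ends, x, 1.0)
    after_phase1 = list(weights)
    # Phase 2: samples from the right region only, target -1.
    for x in [0.8, 0.85, 0.9, 0.95]:
        weights = sgd_step(weights, starts, ends, x, -1.0)
    return after_phase1, weights
```

In this toy setup, the weight learned on the left region in phase 1 is untouched by phase 2, because every phase 2 sample falls outside its interval.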

Run fitting_example.py to see an example of ReLU-KAN fitting a unary function.

exp_speed.py: Experimental code for speed.

exp_fitting.py: Experimental code for fitting ability.

catastrophic_forgetting.py: Experimental code for catastrophic forgetting.