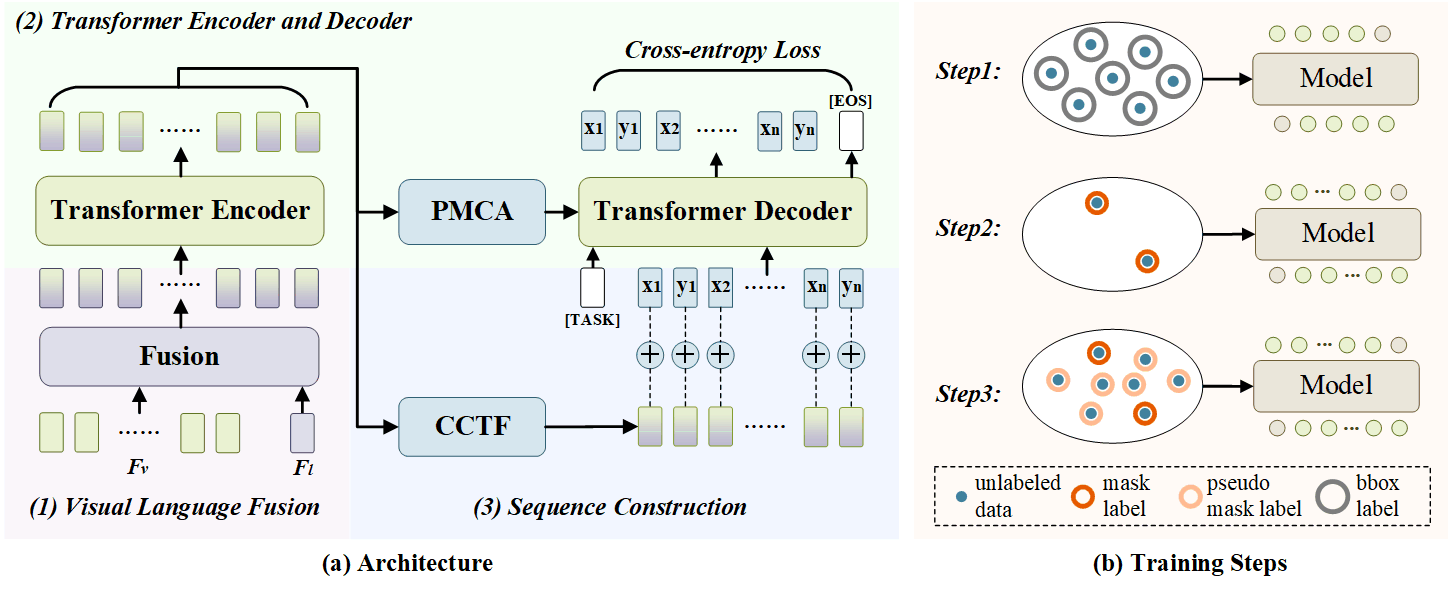

This GitHub repository contains the code implementation for Learning to Segment Every Referring Object Point by Point. The paper introduces a partially supervised training paradigm for Referring Expression Segmentation (RES), aiming to reduce the requirement for extensive pixel-level annotations. The proposed approach utilizes abundant referring bounding boxes and a limited number of pixel-level referring masks (e.g., 1%) to train the model.

pip install -r requirements.txt

wget https://github.com/explosion/spacy-models/releases/download/en_vectors_web_lg-2.1.0/en_vectors_web_lg-2.1.0.tar.gz -O en_vectors_web_lg-2.1.0.tar.gz

pip install en_vectors_web_lg-2.1.0.tar.gz

Then install the SeqTR package in editable mode:

pip install -e .

- Download the DarkNet-53 model weights trained on the MS-COCO object detection task.

- Download the train2014 images from Joseph Redmon's mscoco mirror.

- Download our randomly sampled data with 1%, 5%, and 10% mask annotations.

- Download the trained REC model weights from SeqTR, including RefCOCO_REC, RefCOCO+_REC, and RefCOCOg_REC.

The project structure should look like the following:

    |-- Partial-RES
        |-- data
        |   |-- annotations
        |   |   |-- refcoco-unc
        |   |   |   |-- instances.json
        |   |   |   |-- instances_sample_1.json
        |   |   |   |-- instances_sample_5.json
        |   |   |   |-- instances_sample_10.json
        |   |   |   |-- ix_to_token.pkl
        |   |   |   |-- token_to_ix.pkl
        |   |   |   |-- word_emb.npz
        |   |   |-- refcocoplus-unc
        |   |   |-- refcocog-umd
        |   |-- weights
        |   |   |-- darknet.weights
        |   |   |-- yolov3.weights
        |   |-- images
        |   |   |-- mscoco
        |   |   |   |-- train2014
        |   |   |   |   |-- COCO_train2014_000000000072.jpg
        |   |   |   |   |-- ...
        |-- checkpoint
        |   |-- refcoco-unc
        |   |   |-- det_best.pth
        |   |-- refcocoplus-unc
        |   |   |-- det_best.pth
        |   |-- refcocog-umd
        |   |   |-- det_best.pth
        |-- configs
        |-- seqtr
        |-- tools
        |-- teaser
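Before training, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: the function names `expected_paths` and `missing_paths` are hypothetical, and it assumes that `refcocoplus-unc` and `refcocog-umd` contain an `instances.json` just as `refcoco-unc` does.

```python
from pathlib import Path

# Dataset folder names as they appear in the project structure.
DATASETS = ("refcoco-unc", "refcocoplus-unc", "refcocog-umd")


def expected_paths(root, datasets=DATASETS):
    """List the files and folders the README's layout calls for."""
    root = Path(root)
    paths = [
        root / "data" / "weights" / "darknet.weights",
        root / "data" / "weights" / "yolov3.weights",
        root / "data" / "images" / "mscoco" / "train2014",
    ]
    for name in datasets:
        # Assumption: every dataset folder mirrors refcoco-unc.
        paths.append(root / "data" / "annotations" / name / "instances.json")
        paths.append(root / "checkpoint" / name / "det_best.pth")
    return paths


def missing_paths(root, datasets=DATASETS):
    """Return the expected paths that do not exist under root."""
    return [p for p in expected_paths(root, datasets) if not p.exists()]


if __name__ == "__main__":
    # Run from the Partial-RES root directory.
    for path in missing_paths("."):
        print(f"missing: {path}")
```

Run from the repository root, the script prints one line per missing item and prints nothing when everything is in place.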

In practice, we initialize training from the REC model trained in SeqTR. The REC model weights for the three datasets go in the ./checkpoint folder; see item 4 of Data Preparation for the download link.

In this step, we train the model on the RES task using only 1%, 5%, or 10% of the labeled mask data.

python tools/train.py \
    configs/seqtr/segmentation/seqtr_segm_[DATASET_NAME].py \
    --cfg-options \
    ema=True \
    data.samples_per_gpu=128 \
    data.train.annsfile='./data/annotations/[DATASET_NAME]/instances_sample_5.json' \
    scheduler_config.max_epoch=90 \
    --finetune-from checkpoint/[DATASET_NAME]/det_best.pth

If you need multiple GPUs to train the model, run:

python -m torch.distributed.run \
    --nproc_per_node=2 \
    --use_env tools/train.py \
    configs/seqtr/segmentation/seqtr_segm_[DATASET_NAME].py \
    --launcher pytorch \
    --cfg-options \
    ema=True \
    data.samples_per_gpu=64 \
    data.train.annsfile='./data/annotations/[DATASET_NAME]/instances_sample_5.json' \
    scheduler_config.max_epoch=90 \
    --finetune-from checkpoint/[DATASET_NAME]/det_best.pth
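Since the training commands differ only in dataset name, sampling ratio, and GPU count, they can be assembled programmatically. The sketch below is illustrative only: `build_train_command` is a hypothetical helper, not something the repository provides, and it simply mirrors the paths and options shown in the commands above.

```python
import shlex


def build_train_command(dataset, ratio, num_gpus=1, samples_per_gpu=128,
                        max_epoch=90, anns_name=None):
    """Assemble the training command as an argument list."""
    if ratio not in (1, 5, 10):
        raise ValueError("ratio must be 1, 5, or 10")
    config = f"configs/seqtr/segmentation/seqtr_segm_{dataset}.py"
    # Default to the sampled mask annotations for the chosen ratio.
    if anns_name is None:
        anns_name = f"instances_sample_{ratio}.json"
    anns = f"./data/annotations/{dataset}/{anns_name}"

    if num_gpus > 1:
        # Distributed launch through torch.distributed.run.
        cmd = ["python", "-m", "torch.distributed.run",
               f"--nproc_per_node={num_gpus}", "--use_env",
               "tools/train.py", config, "--launcher", "pytorch"]
    else:
        cmd = ["python", "tools/train.py", config]

    cmd += ["--cfg-options",
            "ema=True",
            f"data.samples_per_gpu={samples_per_gpu}",
            f"data.train.annsfile={anns}",
            f"scheduler_config.max_epoch={max_epoch}",
            "--finetune-from", f"checkpoint/{dataset}/det_best.pth"]
    return cmd


if __name__ == "__main__":
    # Print a copy-pasteable command line for RefCOCO with 5% masks.
    print(shlex.join(build_train_command("refcoco-unc", 5)))
```

The returned list can be passed directly to `subprocess.run`, which avoids shell quoting issues with the annotation path.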

- Generate initial pseudo points with the RES model trained in Step 2.

python tools/test.py \
    configs/seqtr/segmentation/seqtr_segm_[DATASET_NAME].py \
    --load-from \
    work_dir/[CHECKPOINT_DIR]/segm_best.pth \
    --cfg-options \
    ema=True \
    data.samples_per_gpu=128 \
    data.val.which_set='train'

- Select pseudo points with the RPP strategy. --ratio is the sampling ratio and must be one of 1, 5, or 10.

python choose_pseudo_data.py --ratio 5 --dataset refcocog-umd

This script generates instances_sample_5_box_pick.json in the ./data/annotations/refcocog-umd/ folder.

- Retrain with the selected pseudo points.
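The selection criterion used by `choose_pseudo_data.py` is not spelled out here. Purely as an illustration of what a box-based filter could look like (suggested only by the `box_pick` suffix in the output filename), the sketch below keeps a pseudo-labeled sample when most of its predicted points fall inside the referring bounding box. Everything in it is an assumption: the function names, the `(x0, y0, x1, y1)` box format, the `points` and `bbox` keys, and the 0.9 threshold are hypothetical and should not be read as the repository's logic.

```python
def fraction_inside(points, box):
    """Fraction of (x, y) points lying inside box = (x0, y0, x1, y1)."""
    if not points:
        return 0.0
    x0, y0, x1, y1 = box
    inside = sum(1 for x, y in points if x0 <= x <= x1 and y0 <= y <= y1)
    return inside / len(points)


def pick_samples(samples, min_fraction=0.9):
    """Keep samples whose pseudo points mostly fall inside their box.

    Each sample is a dict with hypothetical keys "points" and "bbox".
    """
    return [
        s for s in samples
        if fraction_inside(s["points"], s["bbox"]) >= min_fraction
    ]


if __name__ == "__main__":
    samples = [
        {"id": 0, "points": [(1, 1), (5, 5), (9, 9)], "bbox": (0, 0, 10, 10)},
        {"id": 1, "points": [(1, 1), (20, 20)], "bbox": (0, 0, 10, 10)},
    ]
    kept = pick_samples(samples)
    print([s["id"] for s in kept])
```

In this toy input the second sample has only half of its points inside the box, so a 0.9 threshold discards it.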

python tools/train.py \
    configs/seqtr/segmentation/seqtr_segm_[DATASET_NAME].py \
    --cfg-options \
    ema=True \
    data.samples_per_gpu=128 \
    data.train.annsfile='./data/annotations/[DATASET_NAME]/instances_sample_5_box_pick.json' \
    scheduler_config.max_epoch=90 \
    --finetune-from checkpoint/[DATASET_NAME]/det_best.pth

python tools/test.py \
    configs/seqtr/segmentation/seqtr_segm_[DATASET_NAME].py \
    --load-from \
    work_dir/[CHECKPOINT_DIR]/segm_best.pth \
    --cfg-options \
    ema=True \
    data.samples_per_gpu=128

If this work is helpful to you, please cite it as follows:

@inproceedings{qu2023learning,
  title={Learning to Segment Every Referring Object Point by Point},
  author={Qu, Mengxue and Wu, Yu and Wei, Yunchao and Liu, Wu and Liang, Xiaodan and Zhao, Yao},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={3021--3030},
  year={2023}
}

Our code is built on the SeqTR, mmcv, mmdetection and detectron2 libraries.