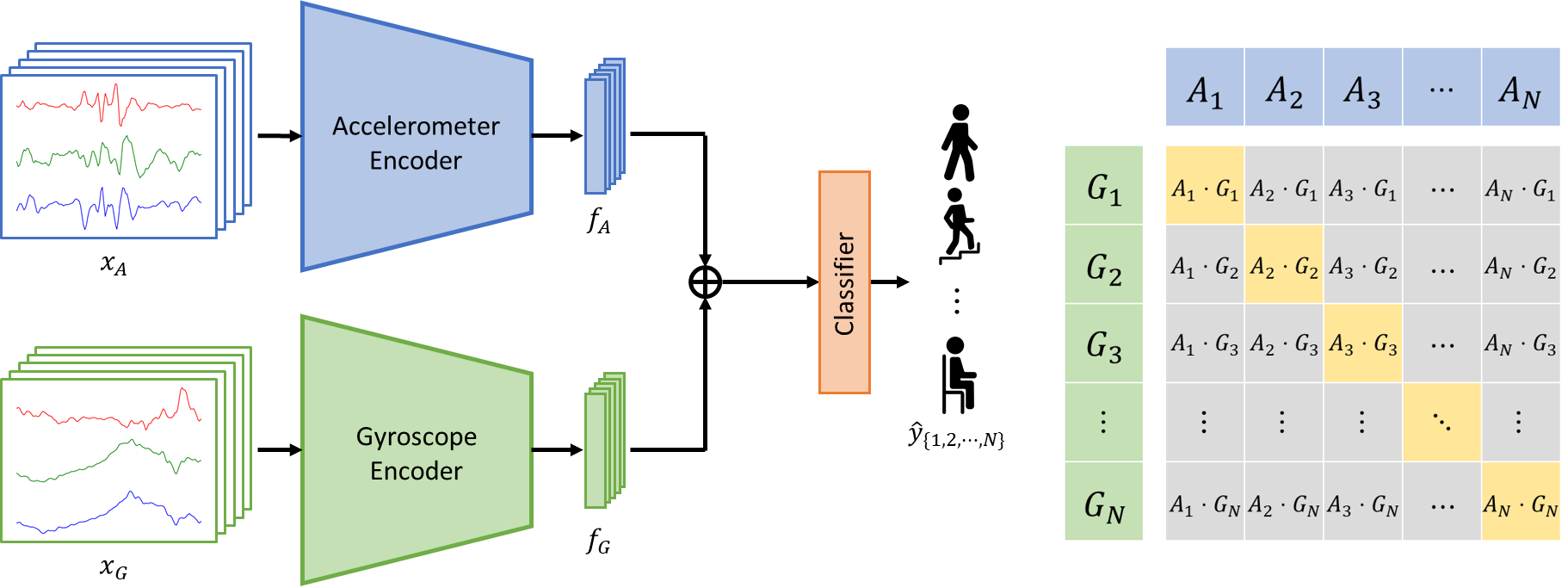

Contrastive Accelerometer-Gyroscope Embedding Model for Human Activity Recognition

Inyong Koo, Yeonju Park, Minki Jeong, Changick Kim

This repository provides a PyTorch implementation of the paper "Contrastive Accelerometer-Gyroscope Embedding Model for Human Activity Recognition" (IEEE Sensors Journal, 2022).

- Download the datasets (UCI-HAR, mHealth, MobiAct, PAMAP2) in

data/data

├── UCI HAR Dataset

├── MHEALTHDATASET

├── PAMAP2_Dataset

└── MobiAct_Dataset_v2.0

- Although they are not covered in the paper, the framework also supports the Opportunity and USC-HAD datasets.

- Create processed data splits for all datasets with

python create_dataset.py
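Before running `create_dataset.py`, it can help to confirm that the four dataset folders listed above are in place. The following is a minimal sketch, not part of the repository: the helper name `missing_datasets` is hypothetical, and the root directory `data` is an assumption that should be adjusted to wherever the datasets were actually downloaded.

```python
from pathlib import Path

# Folder names copied from the directory listing in this README.
EXPECTED_DIRS = [
    "UCI HAR Dataset",
    "MHEALTHDATASET",
    "PAMAP2_Dataset",
    "MobiAct_Dataset_v2.0",
]


def missing_datasets(root):
    """Return the expected dataset folders that are not present under root."""
    root = Path(root)
    return [name for name in EXPECTED_DIRS if not (root / name).is_dir()]


if __name__ == "__main__":
    # "data" is an assumed root directory, not confirmed by the repository.
    missing = missing_datasets("data")
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All expected dataset folders found.")
```

The Opportunity and USC-HAD folders are left out on purpose, since this README does not state their expected folder names.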

To train the Contrastive Accelerometer-Gyroscope Embedding (CAGE) model, run

python train_CAGE.py --dataset {Dataset}

We also provide baseline models, including DeepConvLSTM (Ordóñez et al., 2016), LSTM-CNN (Xia et al., 2020), and ConvAE (Haresamudram et al., 2019). To train a baseline, run

python train_baseline.py --dataset {Dataset} --model {Model}
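To run CAGE and several baselines over more than one dataset, the two commands above can be wrapped in a small driver script. This is a sketch under stated assumptions: `build_commands` and `run_all` are hypothetical helpers, not part of the repository, and the strings accepted by `--dataset` and `--model` are not listed in this README, so they must be supplied by the caller.

```python
import subprocess
import sys


def build_commands(datasets, models):
    """Build one train_CAGE.py command per dataset and one
    train_baseline.py command per (dataset, model) pair."""
    commands = []
    for dataset in datasets:
        commands.append([sys.executable, "train_CAGE.py", "--dataset", dataset])
        for model in models:
            commands.append(
                [sys.executable, "train_baseline.py",
                 "--dataset", dataset, "--model", model]
            )
    return commands


def run_all(datasets, models):
    """Run every command in order; stop at the first one that fails."""
    for cmd in build_commands(datasets, models):
        subprocess.run(cmd, check=True)
```

Calling `run_all` from the repository root with the dataset and model names that the training scripts accept runs the jobs one after another. Because `check=True` is set, a failed run raises `subprocess.CalledProcessError` instead of silently moving on to the next job.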