Mamba-UNet: UNet-like Pure Visual Mamba for Medical Image Segmentation

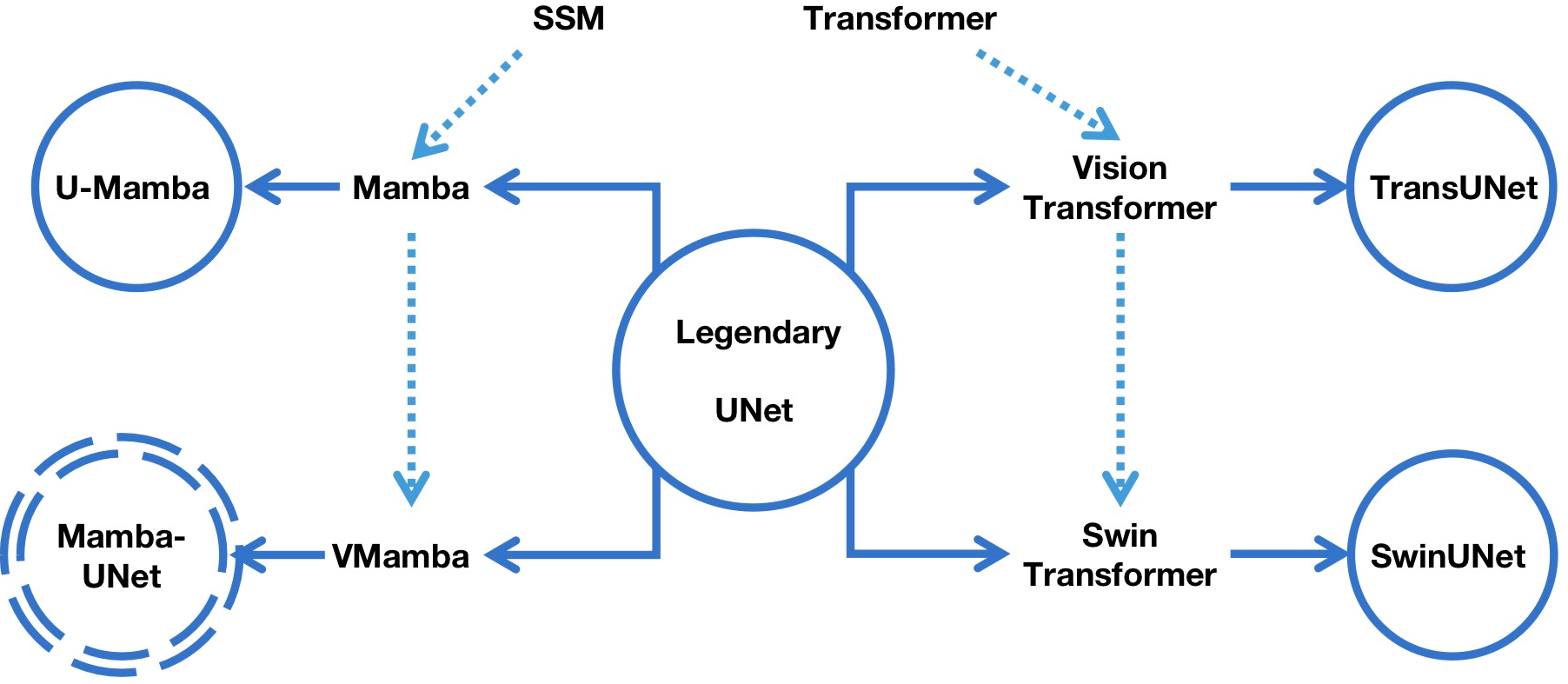

The position of Mamba-UNet

Semi-Mamba-UNet: Pixel-Level Contrastive Cross-Supervised Visual Mamba-based UNet for Semi-Supervised Medical Image Segmentation

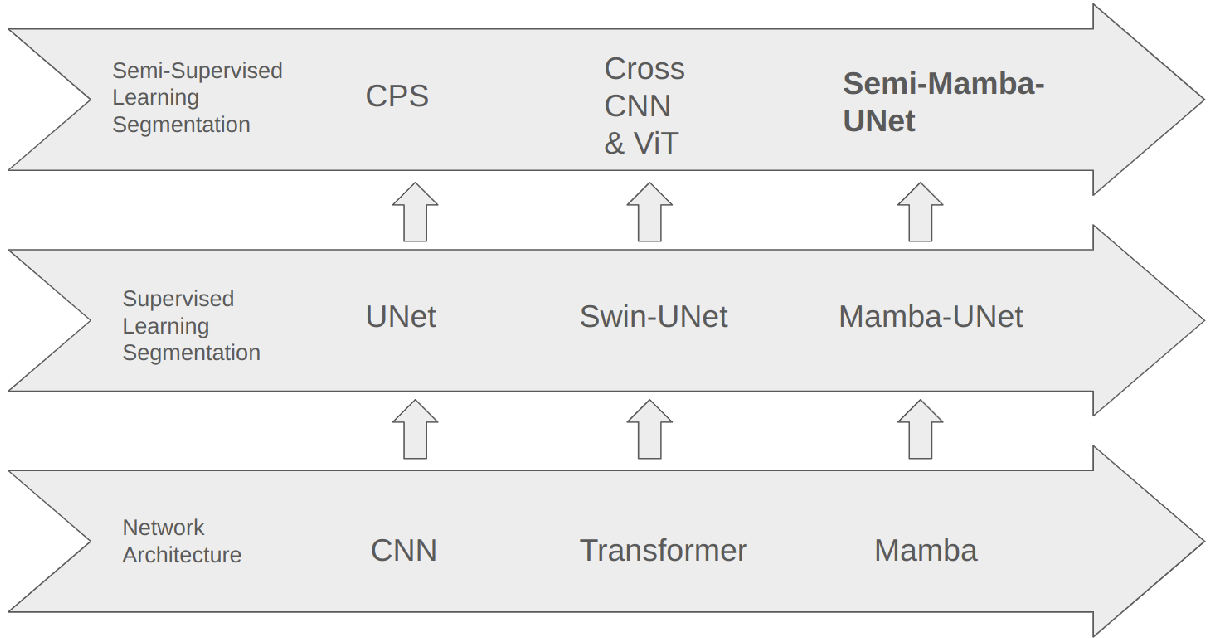

The position of Semi-Mamba-UNet

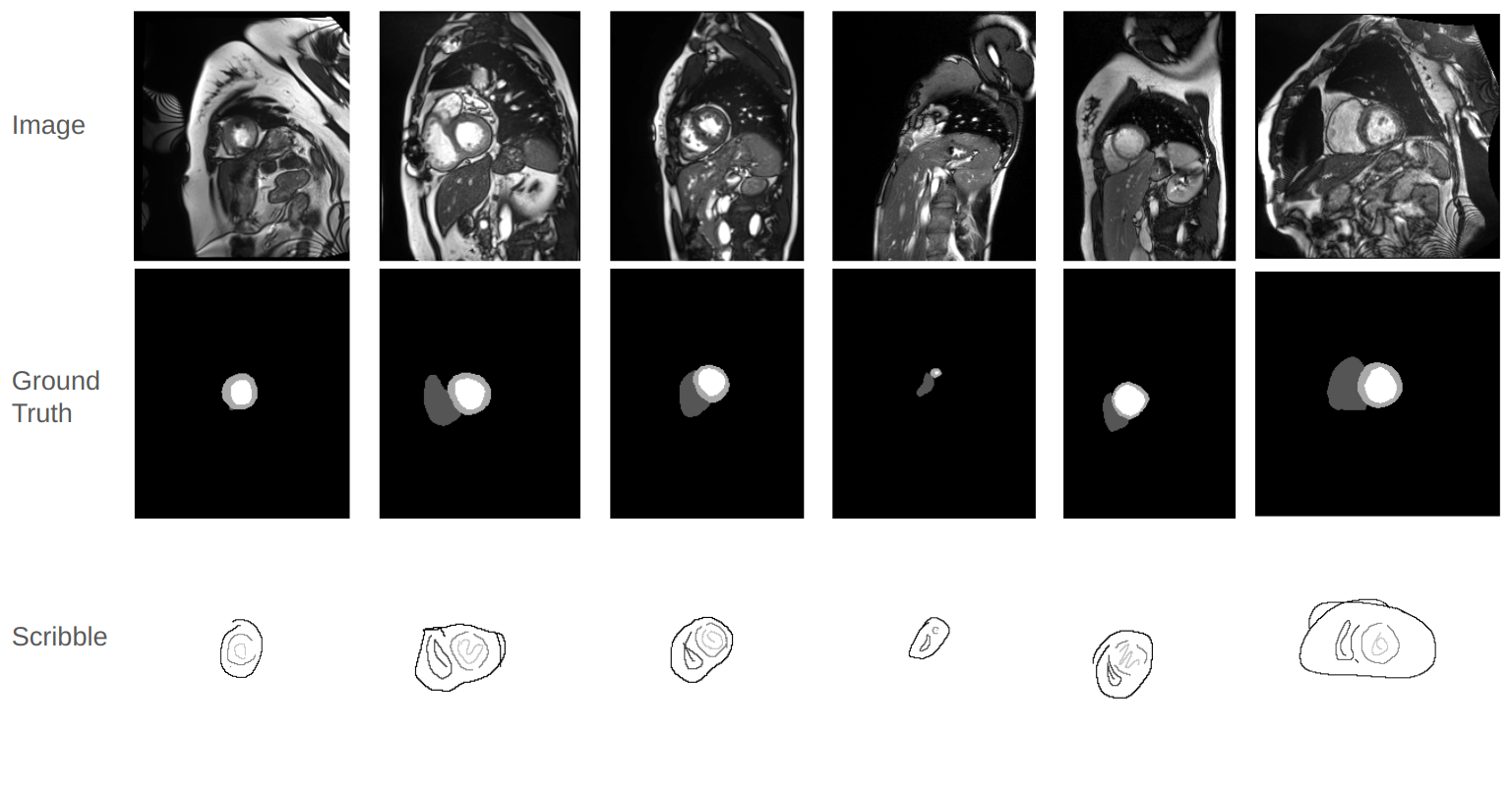

Weak-Mamba-UNet: Visual Mamba Makes CNN and ViT Work Better for Scribble-based Medical Image Segmentation

The introduction of Scribble Annotation

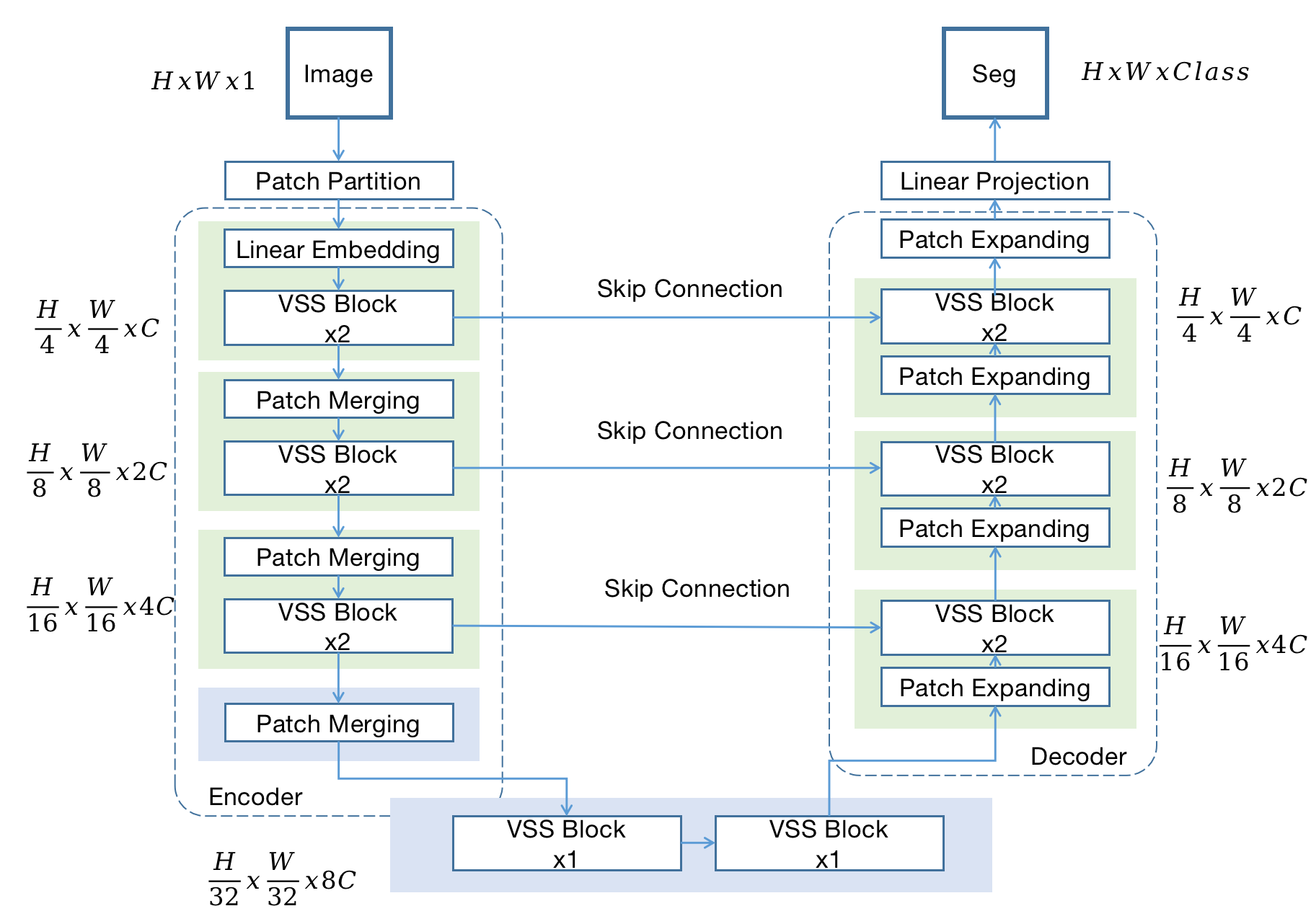

Mamba-UNet

Mamba-UNet -> [Paper Link] Released on 6 February 2024.

Semi-Mamba-UNet -> [Paper Link] Released on 10 February 2024.

Weak-Mamba-UNet -> [Paper Link] Released on 16 February 2024.

3D Mamba-UNet -> TBA

MambaMorph -> TBA

- PyTorch, MONAI

- Some basic Python packages: TorchIO, NumPy, scikit-image, SimpleITK, SciPy, MedPy, nibabel, tqdm, etc.

cd casual-conv1d

python setup.py install

cd ../mamba

python setup.py install
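After building both packages, a quick way to confirm that everything listed above is importable is sketched below. This is a hypothetical helper (`missing_modules` is not part of this repository), and it assumes the usual import names, e.g. `skimage` for scikit-image, `mamba_ssm` for the mamba build, and `causal_conv1d` for causal-conv1d.

```python
import importlib.util

# Display name -> import name. The import names are assumptions based on how
# these packages are usually installed (e.g. the mamba build provides mamba_ssm).
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "MONAI": "monai",
    "TorchIO": "torchio",
    "NumPy": "numpy",
    "scikit-image": "skimage",
    "SimpleITK": "SimpleITK",
    "SciPy": "scipy",
    "MedPy": "medpy",
    "nibabel": "nibabel",
    "tqdm": "tqdm",
    "causal-conv1d": "causal_conv1d",
    "mamba": "mamba_ssm",
}


def missing_modules(required=REQUIRED_MODULES):
    """Return the display names whose top-level module cannot be found."""
    return [name for name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

`find_spec` only locates the module without importing it, so the check is cheap; a successful lookup does not guarantee that the CUDA extensions actually load on your GPU.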

- Clone the repo:

git clone https://github.com/ziyangwang007/Mamba-UNet.git

cd Mamba-UNet

- Download Pretrained Model

Download through Google Drive for SwinUNet and Google Drive for Mamba-UNet, and save the checkpoints in `code/pretrained_ckpt`.

- Download Dataset

Download the dataset through Google Drive and save it in `data/ACDC`.

- Train 2D UNet (run this and the following training and test commands from the `code` directory)

python train_fully_supervised_2D.py --root_path ../data/ACDC --exp ACDC/unet --model unet --max_iterations 10000 --batch_size 24

- Train SwinUNet

python train_fully_supervised_2D_ViT.py --root_path ../data/ACDC --exp ACDC/swinunet --model swinunet --max_iterations 10000 --batch_size 24

- Train Mamba-UNet

python train_fully_supervised_2D_VIM.py --root_path ../data/ACDC --exp ACDC/VIM --model mambaunet --max_iterations 10000 --batch_size 24
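To run the three fully supervised baselines back to back, a small wrapper can build the same commands. This is a hypothetical sketch (`train_command` and `run_all` are illustrative names, not repository code); it reproduces only the flags shown above and assumes it is launched from the directory containing the training scripts, which `--root_path ../data/ACDC` suggests is `code/`.

```python
import shlex
import subprocess

# --model value -> (training script, --exp name), copied from the commands above.
FULLY_SUPERVISED = {
    "unet": ("train_fully_supervised_2D.py", "ACDC/unet"),
    "swinunet": ("train_fully_supervised_2D_ViT.py", "ACDC/swinunet"),
    "mambaunet": ("train_fully_supervised_2D_VIM.py", "ACDC/VIM"),
}


def train_command(model, root_path="../data/ACDC", max_iterations=10000, batch_size=24):
    """Return the argument list for one fully supervised training run."""
    script, exp = FULLY_SUPERVISED[model]
    return [
        "python", script,
        "--root_path", root_path,
        "--exp", exp,
        "--model", model,
        "--max_iterations", str(max_iterations),
        "--batch_size", str(batch_size),
    ]


def run_all(dry_run=True):
    """Print every command; launch them one after another unless dry_run is True."""
    commands = [train_command(model) for model in FULLY_SUPERVISED]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return commands


if __name__ == "__main__":
    run_all(dry_run=True)
```

Keeping `max_iterations` and `batch_size` as shared defaults makes it harder to accidentally compare models trained under different settings.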

- Train Semi-Mamba-UNet with 5% labeled data

python train_Semi_Mamba_UNet.py --root_path ../data/ACDC --exp ACDC/Semi_Mamba_UNet --max_iterations 30000 --labeled_num 3 --batch_size 16 --labeled_bs 8

- Train Semi-Mamba-UNet with 10% labeled data

python train_Semi_Mamba_UNet.py --root_path ../data/ACDC --exp ACDC/Semi_Mamba_UNet --max_iterations 30000 --labeled_num 7 --batch_size 16 --labeled_bs 8
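The flags `--batch_size 16 --labeled_bs 8` suggest that each mini-batch mixes labeled and unlabeled samples. Below is a minimal sketch of such a two-stream sampler, assuming `labeled_bs` is the number of labeled samples per batch (as in SSL4MIS-style samplers); `two_stream_batches` is a hypothetical name and this is an illustration, not the repository's sampler.

```python
import random


def two_stream_batches(labeled_idxs, unlabeled_idxs, batch_size=16, labeled_bs=8, seed=0):
    """Yield mixed batches: `labeled_bs` labeled indices followed by
    `batch_size - labeled_bs` unlabeled indices.

    One pass over the (larger) unlabeled pool is one epoch; the small labeled
    pool is reshuffled and cycled as often as needed. An incomplete final
    unlabeled chunk is dropped so every batch has exactly `batch_size` items.
    """
    labeled = list(labeled_idxs)
    unlabeled = list(unlabeled_idxs)
    if not labeled:
        raise ValueError("at least one labeled index is required")
    if not 0 < labeled_bs < batch_size:
        raise ValueError("labeled_bs must satisfy 0 < labeled_bs < batch_size")
    unlabeled_bs = batch_size - labeled_bs
    rng = random.Random(seed)
    rng.shuffle(unlabeled)

    def cycle_labeled():
        # Endless stream over the labeled pool, reshuffled on every pass.
        while True:
            rng.shuffle(labeled)
            yield from labeled

    labeled_iter = cycle_labeled()
    for start in range(0, len(unlabeled) - unlabeled_bs + 1, unlabeled_bs):
        batch_labeled = [next(labeled_iter) for _ in range(labeled_bs)]
        yield batch_labeled + unlabeled[start:start + unlabeled_bs]
```

With this layout, a training loop can compute the supervised loss on the first `labeled_bs` items of each batch and an unsupervised term on the rest (or on the whole batch).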

- Train UNet with Mean Teacher with 5% labeled data

python train_mean_teacher_2D.py --root_path ../data/ACDC --model unet --exp ACDC/Mean_Teacher --max_iterations 30000 --labeled_num 3 --batch_size 16 --labeled_bs 8

- Train SwinUNet with Mean Teacher with 5% labeled data

python train_mean_teacher_ViT.py --root_path ../data/ACDC --model swinunet --exp ACDC/Mean_Teacher_ViT --max_iterations 30000 --labeled_num 3 --batch_size 16 --labeled_bs 8

- Train UNet with Mean Teacher with 10% labeled data

python train_mean_teacher_2D.py --root_path ../data/ACDC --model unet --exp ACDC/Mean_Teacher --max_iterations 30000 --labeled_num 7 --batch_size 16 --labeled_bs 8

- Train SwinUNet with Mean Teacher with 10% labeled data

python train_mean_teacher_ViT.py --root_path ../data/ACDC --model swinunet --exp ACDC/Mean_Teacher_ViT --max_iterations 30000 --labeled_num 7 --batch_size 16 --labeled_bs 8
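The Mean Teacher baselines rely on a teacher model whose weights are an exponential moving average (EMA) of the student's weights. A minimal framework-free sketch of that update follows, using dicts of float lists in place of model parameters. The decay `alpha=0.99` and the warm-up rule `min(1 - 1/(step + 1), alpha)` follow the original Mean Teacher formulation and are assumptions here, not values read from `train_mean_teacher_2D.py`; `update_ema` is a hypothetical name.

```python
def update_ema(teacher, student, step, alpha=0.99):
    """In-place EMA update: teacher <- a * teacher + (1 - a) * student.

    `teacher` and `student` map parameter names to lists of floats (a stand-in
    for model tensors). Early in training `a` is smaller, so the teacher tracks
    the student closely before the decay settles at `alpha`.
    """
    a = min(1.0 - 1.0 / (step + 1), alpha)
    for name, s_values in student.items():
        t_values = teacher[name]
        for i, s in enumerate(s_values):
            t_values[i] = a * t_values[i] + (1.0 - a) * s
    return a
```

With PyTorch modules, the same loop would typically run over `zip(teacher.parameters(), student.parameters())` inside `torch.no_grad()`, and only the student receives gradient updates.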

- Test (replace `xxx` with the experiment name and model name used during training)

python test_2D_fully.py --root_path ../data/ACDC --exp ACDC/xxx --model xxx

- Q: Performance: I find my results are slightly lower than your reported results.

A: Please do not worry. Performance depends on many factors, such as how the data is split, how the network is initialized, how the evaluation code for Dice, Accuracy, Precision, Sensitivity, and Specificity is written, and even the type of GPU used. What I want to emphasize is that you should keep your hyper-parameter settings fixed and test some other baseline methods under the same settings (a fair comparison). If method A has a lower/higher Dice Coefficient than the reported number, methods B and C will likely also have lower/higher Dice Coefficients than the numbers reported in the paper.
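Because part of such a gap can come from the evaluation code itself, it helps to write the metric definitions down explicitly. The sketch below is one common convention, computed from confusion-matrix counts on binary masks with NumPy; it is not necessarily what `test_2D_fully.py` does (for example, how empty masks are scored varies between papers), and `binary_metrics` / `per_class_dice` are hypothetical names. The default `classes=(1, 2, 3)` assumes three foreground labels, as in ACDC; adjust it for other datasets.

```python
import numpy as np


def binary_metrics(pred, target):
    """Dice, accuracy, precision, sensitivity and specificity for binary masks.

    Ratios with a zero denominator are returned as NaN, so the caller decides
    explicitly how to treat empty structures.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)
    tn = np.sum(~pred & ~target)
    fp = np.sum(pred & ~target)
    fn = np.sum(~pred & target)

    def ratio(num, den):
        return float(num) / float(den) if den > 0 else float("nan")

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


def per_class_dice(pred_labels, target_labels, classes=(1, 2, 3)):
    """One-vs-rest Dice for each foreground label of a multi-class label map."""
    pred_labels = np.asarray(pred_labels)
    target_labels = np.asarray(target_labels)
    return {c: binary_metrics(pred_labels == c, target_labels == c)["dice"]
            for c in classes}
```

Whatever convention you pick, apply the same code to every method you compare; that matters more than matching the exact numbers in the paper.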

- Q: Concurrent Work: I found similar work about the integration of Mamba into UNet.

A: I am glad to see, and happy to acknowledge, similar work. Mamba is a novel architecture, and it is clearly valuable to explore integrating Mamba into segmentation, detection, registration, etc. I am pleased that we all find Mamba efficient in some cases. This GitHub repository was developed on 6 February 2024, and I would not be surprised if people have proposed similar work from the end of 2023 onward. Also, I have only tested a limited number of baseline methods on a single dataset. Please make sure to read other related work on Mamba/Visual Mamba with UNet/VGG/ResNet, etc.

- Q: Other Datasets: I want to try MambaUNet on other segmentation datasets. Do you have any suggestions?

A: I recommend starting with UNet, as it often proves to be the most efficient architecture. In my experience with various segmentation datasets, UNet can outperform alternatives such as TransUNet and SwinUNet, so it should be your first choice. Transformer-based UNet variants, which depend on pretraining, have shown promising results, especially on larger datasets, although such large datasets are uncommon in medical imaging. In my view, MambaUNet offers not only greater efficiency but also more promising performance than Transformer-based UNets. However, remember that MambaUNet, like Transformer-based UNets, requires pretraining (e.g. on ImageNet).

- Q: Collaboration: Could I discuss other topics with you, such as image registration, human pose estimation, or image fusion?

A: I would also like to do some amazing work. Connect with me via ziyang [dot] wang17 [at] gmail [dot] com.

@article{wang2024mamba,
  title={Mamba-UNet: UNet-Like Pure Visual Mamba for Medical Image Segmentation},
  author={Wang, Ziyang and others},
  journal={arXiv preprint arXiv:2402.05079},
  year={2024}
}

@article{wang2024semimamba,
  title={Semi-Mamba-UNet: Pixel-Level Contrastive Cross-Supervised Visual Mamba-based UNet for Semi-Supervised Medical Image Segmentation},
  author={Wang, Ziyang and others},
  journal={arXiv preprint arXiv:2402.07245},
  year={2024}
}

@article{wang2024weakmamba,
  title={Weak-Mamba-UNet: Visual Mamba Makes CNN and ViT Work Better for Scribble-based Medical Image Segmentation},
  author={Wang, Ziyang and others},
  journal={arXiv preprint arXiv:xxxx.xxxxx},
  year={2024}
}

SSL4MIS Link, Segmamba Link, SwinUNet Link, Visual Mamba Link.