Kubernetes is a widely used system to manage containerised applications.

Google Cloud Platform (GCP) is Google's cloud computing platform.

This tutorial demonstrates how to use Infrastructure as Code (IaC) to automate the provisioning of a Kubernetes cluster running on GCP.

The solutions presented here are intended for learning purposes; do not treat them as production-ready.

Automations:

- Provisioning Kubernetes

- Deploying an application

- Securing an application

- Deploying a stateful application

Use the Table of Contents on the top-left corner to explore all options.

Ansible is the tool of choice for implementing the automation. It is one of the most widely used automation tools and lets us build the automation needed to run the Kubernetes cluster.

Requirements:

- Installing Python

- Installing Ansible

- Ansible Google Cloud Platform Guide

- Installing gcloud cli

- Installing gke-gcloud-auth-plugin

- google-auth Python package

- requests Python package

- kubernetes Python package

- Ansible Kubernetes Module requirements
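
Before running any playbook, it can help to confirm that the command-line tools from the list above are on your PATH. The sketch below is a hypothetical helper (`check_tools` is not part of the repository) that reports each missing tool and returns a non-zero status if any are absent.

```shell
# Hypothetical helper: report which of the given command-line tools are
# missing from PATH. Prints one "missing: <tool>" line per absent tool and
# returns non-zero if at least one tool is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

A possible invocation is `check_tools python3 ansible-playbook gcloud gke-gcloud-auth-plugin kubectl`. The Python packages can be checked separately with `python3 -c "import google.auth, requests, kubernetes"`, which fails with an ImportError if one of them is not installed.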

The local environment used to test the scripts had the following software:

| Software | Version |
|---|---|
| macOS Ventura | 13.2.1 |
| ansible-core | 2.14.13 |
| Python | 3.9.6 |
| Python lib requests | 2.28.2 |
| Python lib google-auth | 2.16.2 |
| Python lib kubernetes | 26.1.0 |
| gcloud sdk | 422.0.0 |

    .
    ├── LICENSE                          # license file
    ├── README.md                        # main documentation file
    └── ansible                          # Ansible top-level folder
        ├── ansible.cfg                  # Ansible config file
        ├── create-k8s.yml               # Ansible playbook to provision env
        ├── deploy-app-k8s.yml           # Ansible playbook to deploy a Nginx web-server
        ├── destroy-k8s.yml              # Ansible playbook to destroy env
        ├── undeploy-app-k8s.yml         # Ansible playbook to remove the Nginx web-server
        ├── inventory
        │   └── gcp.yml                  # Ansible inventory file
        └── roles
            ├── destroy_k8s              # Ansible role to remove k8s cluster
            │   └── tasks
            │       └── main.yml
            ├── destroy_k8s_deployment   # Ansible role to remove the Nginx web-server
            │   └── tasks
            │       └── main.yml
            ├── destroy_k8s_policies     # Ansible role to remove the k8s network policies
            │   └── tasks
            │       └── main.yml
            ├── destroy_network          # Ansible role to remove VPC
            │   └── tasks
            │       └── main.yml
            ├── k8s                      # Ansible role to create k8s cluster
            │   └── tasks
            │       └── main.yml
            ├── k8s-deployment           # Ansible role to deploy a Nginx web-server
            │   ├── tasks
            │   │   └── main.yml
            │   └── vars
            │       └── main.yml
            ├── k8s-policies             # Ansible role to configure k8s network policies
            │   └── tasks
            │       └── main.yml
            ├── k8s-statefulset          # Ansible role to deploy a Kafka cluster
            │   └── tasks
            │       └── main.yml
            └── network                  # Ansible role to create VPC
                └── tasks
                    └── main.yml

Create a YAML file in the ansible/inventory folder to allow Ansible to interact with your GCP environment.

Here is a sample file:

    all:
      vars:
        # use this section to enter GCP related information
        zone: europe-west2-c
        region: europe-west2
        project_id: <gcp-project-id>
        # path relative to your home directory; credentials_file prepends $HOME
        gcloud_sa_path: "gcp-credentials/service-account.json"
        credentials_file: "{{ lookup('env','HOME') }}/{{ gcloud_sa_path }}"
        gcloud_service_account: service-account@project-id.iam.gserviceaccount.com
        # use the section below to enter k8s cluster related information
        cluster_name: <name for your k8s cluster>
        initial_node_count: 1
        disk_size_gb: 100
        disk_type: pd-ssd
        machine_type: n1-standard-2
        # use the section below to enter k8s namespaces to manage
        # this namespace is used in the Deploying an Application section
        namespace: nginx

Refer to the Ansible documentation on How to build your inventory for more information.
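
A quick sanity check of the inventory file can catch a forgotten variable before a playbook fails halfway through. The sketch below is a hypothetical helper (`check_inventory` is not part of the repository). Its key list is copied from the sample file and is an assumption, since the playbooks may read more or fewer variables.

```shell
# Hypothetical helper: verify that an inventory file sets each variable
# used in the sample inventory. The key list is an assumption taken from
# that sample, not from the playbooks themselves.
check_inventory() {
  inventory=$1
  missing=0
  for key in zone region project_id credentials_file cluster_name namespace; do
    # A key counts as present when it has a non-empty value on the same line.
    if ! grep -Eq "^[[:space:]]*${key}:[[:space:]]*[^[:space:]]" "$inventory"; then
      echo "missing or empty: $key"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_inventory ansible/inventory/gcp.yml` prints nothing and returns zero when every key is set. To see how Ansible itself parses the file, `ansible-inventory -i ansible/inventory/gcp.yml --list` prints the resolved variables.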

Execute the following command to provision the Kubernetes cluster:

    ansible-playbook ansible/create-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [create infra] ****************************************************************

    TASK [network : create GCP network] ************************************************
    changed: [localhost]

    TASK [k8s : create k8s cluster] ****************************************************
    changed: [localhost]

    TASK [k8s : create k8s node pool] **************************************************
    changed: [localhost]

    PLAY RECAP *************************************************************************
    localhost: ok=3 changed=3 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Use the gcloud command-line tool to connect to the Kubernetes cluster:

    gcloud container clusters get-credentials <cluster_name> --zone <zone> --project <project_id>

Note: replace the placeholders with the values used in the inventory file. You can also copy this command from the Kubernetes cluster page in the GCP console.

Output:

    Fetching cluster endpoint and auth data.
    kubeconfig entry generated for devops-platform.
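
If you work with several clusters, it is worth confirming that kubectl now points at the new one. gcloud conventionally names the kubeconfig context for a zonal cluster `gke_<project_id>_<zone>_<cluster_name>`. The sketch below uses two hypothetical helpers (`gke_context_name` and `check_context`, neither of which is part of the repository) to compare that name with the active context.

```shell
# Build the context name that gcloud conventionally writes for a zonal
# GKE cluster: gke_<project>_<zone>_<cluster>.
gke_context_name() {
  printf 'gke_%s_%s_%s\n' "$1" "$2" "$3"
}

# Compare the active kubectl context with the expected one.
# Arguments: project_id zone cluster_name (the values from the inventory).
check_context() {
  expected=$(gke_context_name "$1" "$2" "$3")
  current=$(kubectl config current-context)
  if [ "$current" = "$expected" ]; then
    echo "kubectl is pointing at $expected"
  else
    echo "kubectl context is '$current', expected '$expected'"
    return 1
  fi
}
```

Call it with the inventory values, for example `check_context <project_id> europe-west2-c <cluster_name>`.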

After connecting to the cluster, use the kubectl command-line tool to control it:

    kubectl get nodes

Output:

    NAME                                         STATUS   ROLES    AGE   VERSION
    gke-<cluster_name>-node-pool-e058a106-zn2b   Ready    <none>   10m   v1.18.12-gke.1210
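
Nodes can take a little while to become Ready after the cluster is created. Rather than re-running `kubectl get nodes` by hand, a script can block until they are ready. The sketch below wraps `kubectl wait` in a hypothetical helper (`wait_for_nodes`); the 300-second default is an arbitrary assumption.

```shell
# Block until every node reports the Ready condition, or fail once the
# timeout in seconds (first argument, default 300) has elapsed.
wait_for_nodes() {
  timeout=${1:-300}
  kubectl wait --for=condition=Ready nodes --all --timeout="${timeout}s"
}
```

Running `wait_for_nodes 600` waits up to ten minutes and returns non-zero if any node is still not Ready.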

The role k8s-deployment contains an example of how to deploy an Nginx container in the Kubernetes cluster.

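The role will create the resources named in the playbook output below: a Kubernetes namespace and a service that exposes Nginx.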

Looking at the role's directory structure, you will notice a vars folder. Variables set in a role's vars folder are used by that role and are not overridden by inventory variables. Refer to the Ansible Using Variables documentation for more details.

Execute the following command to deploy the Nginx web-server:

    ansible-playbook ansible/deploy-app-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [deploy application] **********************************************************

    TASK [k8s-deployment : Create a k8s namespace] *************************************
    changed: [localhost]

    TASK [k8s-deployment : Create a k8s service to expose nginx] ***********************
    changed: [localhost]

    PLAY RECAP *************************************************************************
    localhost: ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Execute the following commands, then access Nginx at http://localhost:8080 (the local port that the port-forward command binds):

    export POD_NAME=$(kubectl get pods --namespace nginx -l "app=nginx" -o jsonpath="{.items[0].metadata.name}")
    kubectl --namespace nginx port-forward $POD_NAME 8080:80

Now that we have a running cluster and a working application, the next step is to secure the traffic flow to our Nginx pod. We will do that using Kubernetes Network Policies.

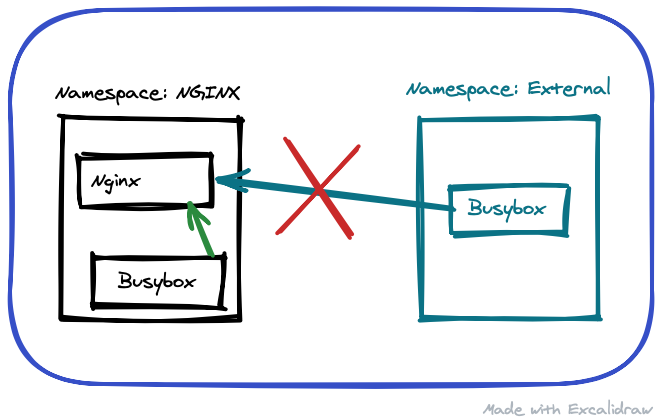

As we can see in the diagram above, we will allow communication from other pods in the same namespace as the Nginx pod while denying connection from the external namespace.

The role k8s-policies contains the manifest files to enable this configuration. It will create the external namespace and deploy a couple of busybox containers to help us demonstrate the policies.
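
To make the idea concrete, the sketch below prints a NetworkPolicy manifest that allows ingress to the pods labelled `app=nginx` only from pods in the same namespace. It is an illustration, not the manifest shipped in the role, which may differ. The helper `render_policy` and the policy name `allow-same-namespace` are hypothetical.

```shell
# Print a NetworkPolicy manifest for the given namespace. Pods labelled
# app=nginx accept ingress only from pods in that same namespace; traffic
# from other namespaces is dropped once the policy selects the pod.
# The policy name is a hypothetical choice.
render_policy() {
  namespace=$1
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace
  namespace: ${namespace}
spec:
  podSelector:
    matchLabels:
      app: nginx
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector: {}
EOF
}
```

The manifest can be applied by hand with `render_policy nginx | kubectl apply -f -`. An empty `podSelector` under `from` matches every pod in the policy's own namespace, which is what restricts access to same-namespace traffic. Note that GKE only enforces NetworkPolicy objects when network policy enforcement (or Dataplane V2) is enabled on the cluster.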

Execute the following command to apply the network policies:

    ansible-playbook ansible/secure-app-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [deploy application] **********************************************************************

    TASK [k8s-policies : Create busybox pod on Nginx namespace] ************************************
    ok: [localhost]

    TASK [k8s-policies : Create external namespace] ************************************************
    ok: [localhost]

    TASK [k8s-policies : Create busybox pod on External namespace] *********************************
    ok: [localhost]

    TASK [k8s-policies : Create network policy to deny ingress] ************************************
    changed: [localhost]

    PLAY RECAP *************************************************************************************
    localhost: ok=4 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Retrieve the IP address of the Nginx pod:

    kubectl get pods --namespace nginx -l "app=nginx" -o jsonpath="{.items[0].status.podIP}"

Use the busybox container in the nginx namespace to connect to the Nginx pod, replacing the IP address with the one returned by the previous command:

    kubectl -n nginx exec busybox -- wget --spider 10.40.1.10

Output:

    Connecting to 10.40.1.10 (10.40.1.10:80)
    remote file exists

Repeat the test from the busybox container in the external namespace, again using your pod's IP address:

    kubectl -n external exec busybox -- wget --spider 10.40.1.13

Output:

    Connecting to 10.40.1.13 (10.40.1.13:80)
    wget: can't connect to remote host (10.40.1.13): Connection timed out
    command terminated with exit code 1

This is the expected behaviour because our goal is to only allow access from pods in the nginx namespace.
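
The two manual checks above can be folded into one small script, which is handy when re-testing after a policy change. The sketch below defines a hypothetical helper (`probe_from`, not part of the repository). It assumes the busybox pods are named `busybox`, as in the commands above, and uses the BusyBox `wget -T` option to cap the wait at five seconds.

```shell
# Probe an IP address from the busybox pod in the given namespace and
# print whether the connection succeeded.
# Arguments: namespace pod_ip
probe_from() {
  ns=$1
  ip=$2
  if kubectl -n "$ns" exec busybox -- wget --spider -T 5 "$ip" >/dev/null 2>&1; then
    echo "$ns: reachable"
  else
    echo "$ns: blocked"
  fi
}
```

For example, store the pod IP with `NGINX_IP=$(kubectl get pods --namespace nginx -l "app=nginx" -o jsonpath="{.items[0].status.podIP}")`, then run `probe_from nginx "$NGINX_IP"` and `probe_from external "$NGINX_IP"`. With the policy in place, the first call should report reachable and the second blocked.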

Execute the following playbook to remove the Network Policy, then re-run the wget command from the external namespace and see what happens!

    ansible-playbook ansible/unsecure-app-k8s.yml -i ansible/inventory/<your-inventory-filename>

The role k8s-statefulset contains an example of how to deploy a Kafka broker in the Kubernetes cluster.

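The role will create the resources named in the playbook output below: the zookeeper and kafka namespaces, the ZooKeeper headless and regular services, Apache ZooKeeper itself, the Kafka broker service, and the first Kafka broker.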

Execute the following command to deploy the Kafka cluster:

    ansible-playbook ansible/deploy-statefulset-k8s.yml -i ansible/inventory/gcp.yml

Output:

    PLAY [deploy statefulset application] **********************************************************

    TASK [k8s-statefulset : create namespace zookeeper] ********************************************
    changed: [localhost]

    TASK [k8s-statefulset : create zookeeper-headless service] *************************************
    changed: [localhost]

    TASK [k8s-statefulset : create zookeeper service] **********************************************
    changed: [localhost]

    TASK [k8s-statefulset : deploy apache zookeeper] ***********************************************
    changed: [localhost]

    TASK [k8s-statefulset : wait for zookeeper pods to be running] *********************************
    ok: [localhost]

    TASK [k8s-statefulset : create namespace kafka] ************************************************
    changed: [localhost]

    TASK [k8s-statefulset : create kafka service for Broker] ***************************************
    changed: [localhost]

    TASK [k8s-statefulset : deploy apache kafka Broker 1] ******************************************
    changed: [localhost]

    PLAY RECAP *************************************************************************************
    localhost: ok=8 changed=7 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
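
The playbook waits for the ZooKeeper pods, but its output shows no equivalent wait for the Kafka broker, so the broker pod may still be starting when the playbook finishes. The sketch below wraps `kubectl rollout status` in a hypothetical helper (`wait_for_statefulset`). The StatefulSet name `kafka-broker` is an assumption inferred from the pod name `kafka-broker-0`, since StatefulSet pods are named `<statefulset>-<ordinal>`.

```shell
# Wait until a StatefulSet has finished rolling out.
# Arguments: namespace statefulset_name [timeout_seconds, default 300]
wait_for_statefulset() {
  kubectl --namespace "$1" rollout status "statefulset/$2" --timeout="${3:-300}s"
}
```

For example, `wait_for_statefulset kafka kafka-broker` returns once the broker pod is ready, or fails after five minutes.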

Connect to the broker pod, then create and list a topic:

    kubectl exec -it -n kafka kafka-broker-0 -- bash
    /opt/bitnami/kafka/bin/kafka-topics.sh --zookeeper zookeeper-headless.zookeeper:2181 --create --topic test-topic --partitions 1 --replication-factor 1
    /opt/bitnami/kafka/bin/kafka-topics.sh --bootstrap-server localhost:9092 --list

Execute the following command to destroy the Kubernetes cluster:

    ansible-playbook ansible/destroy-k8s.yml -i ansible/inventory/<your-inventory-filename>

Output:

    PLAY [destroy infra] *********************************************************************

    TASK [destroy_k8s : destroy k8s cluster] *************************************************
    changed: [localhost]

    TASK [destroy_network : destroy GCP network] *********************************************
    changed: [localhost]

    PLAY RECAP *******************************************************************************
    localhost: ok=2 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
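
Because a forgotten cluster keeps costing money, it may be worth double-checking that it is really gone. The sketch below uses two hypothetical helpers (`remaining_clusters` and `cluster_is_gone`, neither of which is part of the repository) around `gcloud container clusters list`.

```shell
# Print the names of clusters in the project that match the given name.
# Prints nothing when no such cluster exists.
# Arguments: project_id cluster_name
remaining_clusters() {
  gcloud container clusters list --project "$1" --filter="name=$2" --format="value(name)"
}

# Succeed only when no cluster with that name is left in the project.
cluster_is_gone() {
  [ -z "$(remaining_clusters "$1" "$2")" ]
}
```

For example, `cluster_is_gone <project_id> <cluster_name> && echo "cluster removed"` prints the message only when the list comes back empty.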

- Ansible Documentation for more information on how to expand your Ansible knowledge and usage.

- Ansible Google Cloud Collection for more information on available modules.

- Ansible Kubernetes module for more information on Kubernetes automation.

- Ansible Roles for role-specific documentation.