This is a structured plan with direct instructions to help a marketing team perform an A/B test.

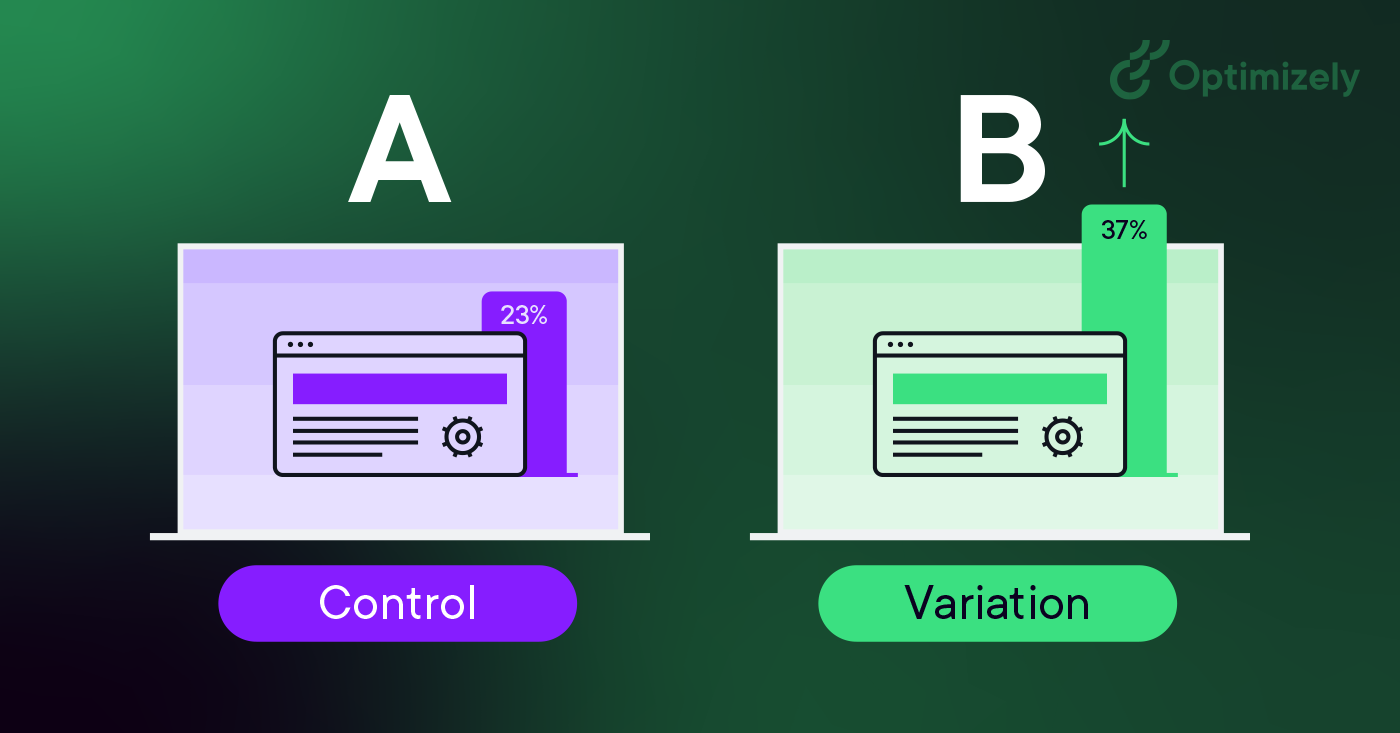

An A/B test is a controlled experiment to compare two versions of a marketing element, such as a webpage, email, or advertisement.

It is designed to determine which version is more effective at achieving a specific goal, such as increasing conversions or click-through rates.

In an A/B test, the audience is randomly split into two separate groups: one group receives version A and the other receives version B. The two versions are identical except for the variable being tested.
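Random assignment is often implemented by hashing a stable visitor identifier, so that a returning visitor keeps seeing the same version. The sketch below assumes each visitor has a persistent ID (for example, from a first-party cookie); the function name `assign_variant` and the experiment key `cta-position` are hypothetical, and hash-based bucketing is one common approach rather than the only one.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta-position") -> str:
    """Assign a visitor to version 'A' or 'B' with a 50/50 split.

    Hypothetical helper: assumes `user_id` is stable across visits.
    """
    # Hash the experiment key together with the visitor ID so the same
    # visitor always gets the same version, while different experiments
    # split the audience independently of each other.
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    for uid in ["visitor-001", "visitor-002", "visitor-003"]:
        print(uid, assign_variant(uid))
```

Hashing instead of calling a random number generator on every page load avoids storing assignments server-side and keeps each visitor's experience consistent for the whole test.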

Once the test is complete, the results are analyzed to determine which version performed better and whether the difference is statistically significant.
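One way to analyze the results is to compute each version's conversion rate and a confidence interval for the difference between them. The sketch below uses the normal-approximation (Wald) interval, which assumes reasonably large samples; the function name `diff_confidence_interval` and the visitor and conversion counts in the usage example are hypothetical.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Return (p_b - p_a, (lower, upper)) using the Wald interval."""
    p_a = conv_a / n_a  # conversion rate of version A
    p_b = conv_b / n_b  # conversion rate of version B
    diff = p_b - p_a
    # Unpooled standard error of the difference between two proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return diff, (diff - z * se, diff + z * se)


if __name__ == "__main__":
    # Hypothetical counts: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
    diff, (low, high) = diff_confidence_interval(200, 5000, 250, 5000)
    print(f"difference: {diff:.4f}, 95% CI: ({low:.4f}, {high:.4f})")
```

If the whole interval lies above zero, the data favor version B; an interval that straddles zero means the test cannot distinguish the two versions at that confidence level.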

A/B testing is crucial from a marketing perspective because it provides a data-driven approach to improving marketing performance. Rather than relying on assumptions or guesses about what might work best, marketers can test different variables and see which version is actually more effective. This evidence helps companies decide how to allocate their marketing resources and budget to achieve the best results.

However, there are many misconceptions about A/B testing. For the results to be considered reliable, the experiment has to follow a set of best practices that ensure its conclusions are statistically valid.
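One such best practice is deciding the sample size before the test starts, based on the baseline conversion rate and the smallest lift worth detecting. The sketch below applies the standard normal-approximation formula for comparing two proportions; the function name `sample_size_per_group` and the inputs (4% baseline, 5% target, 5% significance level, 80% power) are illustrative assumptions, not figures from this plan.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline, p_target, alpha=0.05, power=0.8):
    """Visitors needed in EACH group for a two-sided two-proportion test."""
    if p_baseline == p_target:
        raise ValueError("p_baseline and p_target must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p_baseline + p_target) / 2            # average rate under H0
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                             + p_target * (1 - p_target))
    ) ** 2
    # Round up: a fractional visitor still means one more visitor is needed.
    return math.ceil(numerator / (p_target - p_baseline) ** 2)


if __name__ == "__main__":
    print(sample_size_per_group(0.04, 0.05))
```

With these assumed inputs the function returns roughly 6,750 visitors per version; detecting a smaller lift requires a considerably larger sample, which is why the target lift should be agreed on before the test begins.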

This is the plan for running an A/B test on a website page with the aim of improving its conversion rate. The hypothesis is that changing the position of the call to action (CTA) can increase conversions, generating more submissions of the demo form for the company's consulting services.

In this experiment, the null hypothesis states that the conversion rates of version A (the current page) and version B (the page with the repositioned CTA) are equal, and that any observed difference is due to chance alone. In contrast, the alternative hypothesis states that the two conversion rates differ; the null hypothesis is rejected in favor of it only when the observed difference is statistically significant.
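The null and alternative hypotheses above can be evaluated with a two-sided two-proportion z-test. The sketch below pools both samples under the null hypothesis to estimate the standard error; the function name `two_proportion_z_test` and the counts in the usage example are hypothetical. A p-value below the chosen significance level (commonly 0.05) would lead to rejecting the null hypothesis.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for H0: rate_A == rate_B. Returns (z, p_value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Under H0 both versions share one conversion rate, so pool the data.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; need some conversions and non-conversions")
    z = (p_b - p_a) / se
    # Two-sided p-value: probability of a |z| at least this large under H0.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.3f}, p-value = {p:.4f}")
```

The test should be run once, after the planned sample size has been reached; checking the p-value repeatedly and stopping as soon as it dips below 0.05 inflates the false-positive rate.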

Some examples of how A/B testing can be used include:

- Testing different versions of a landing page to see which one generates more conversions or leads.

- Testing different versions of an email subject line to see which one has a higher open rate.

- Testing different versions of a social media advertisement to see which one has a higher click-through rate.

- Testing different versions of a CTA button to see which one generates more conversions.

- Testing different versions of a product description to see which one has a higher conversion rate.

By continually testing and optimizing marketing elements, companies can steadily improve their overall marketing performance.