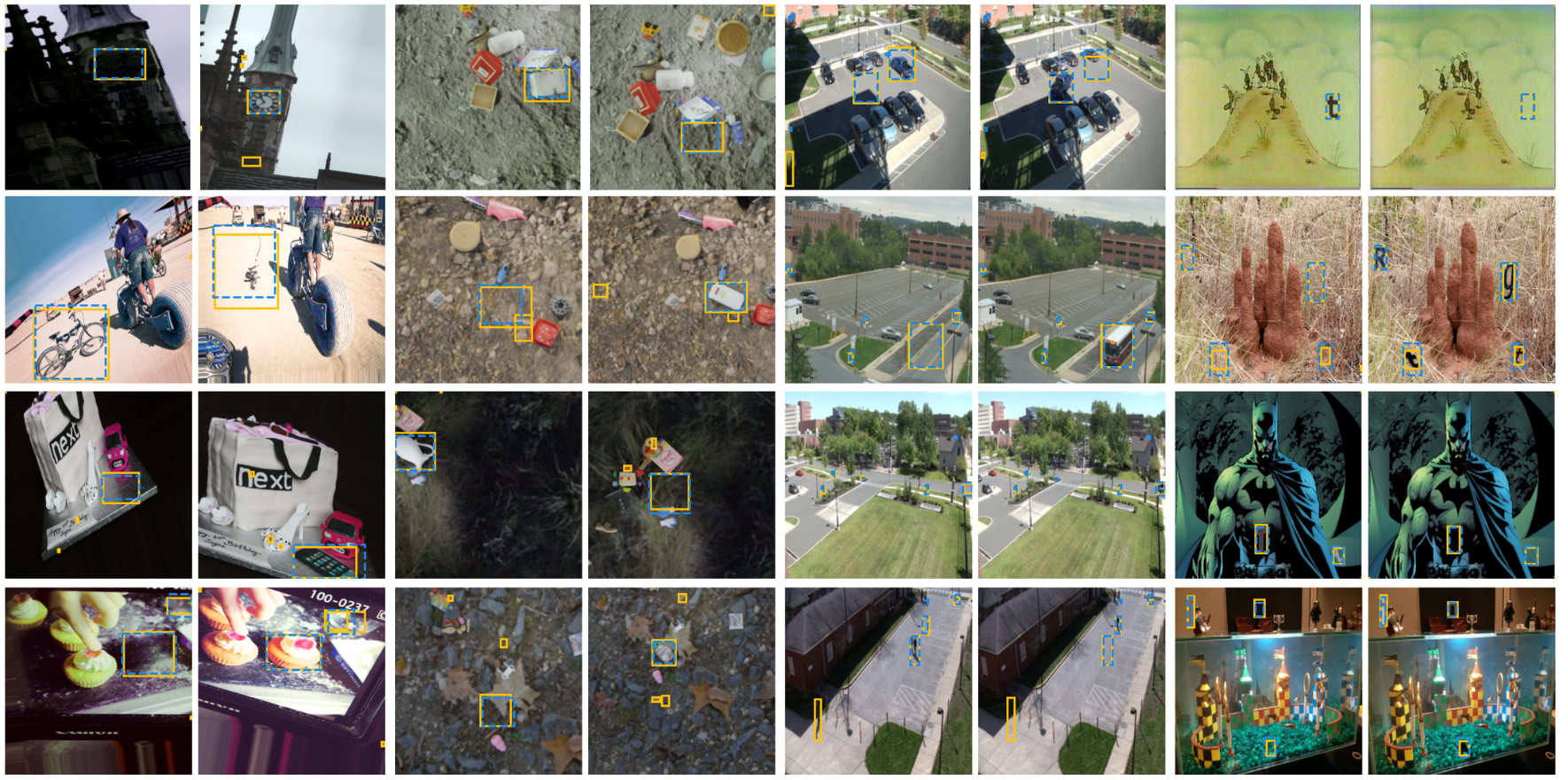

The Change You Want to See (Now in 3D)

Ragav Sachdeva, Andrew Zisserman

In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) 2023


```bash
conda create -n cyws python=3.9
conda activate cyws
conda install -c pytorch pytorch=1.10.1 torchvision=0.11.2 cudatoolkit=11.3.1
pip install kornia@git+https://github.com/kornia/kornia@77589a58be6c603b7afd755d261783bd0c152a97
pip install matplotlib Shapely==1.8.0 easydict pytorch-lightning==1.5.8 loguru scipy h5py
python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu113/torch1.10/index.html
pip install mmcv-full==1.7.0 -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.10/index.html
pip install mmdet==2.28.2 wandb
pip install segmentation-models-pytorch@git+https://github.com/ragavsachdeva/segmentation_models.pytorch.git@0092ee4d6f851d89a4a401bb2dfa6187660b8dd3
pip install imageio==2.13.5
```
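Because several of the packages above are pinned to exact versions, it can help to confirm the environment after installation. The following is a minimal sketch, not part of the repository: `EXPECTED` and `find_mismatches` are hypothetical names, and the distribution names are assumed to match what `pip` reports.

```python
from importlib import metadata

# Pins copied from the pip commands above. The distribution names are an
# assumption: they are the names pip is expected to report for each package.
EXPECTED = {
    "Shapely": "1.8.0",
    "pytorch-lightning": "1.5.8",
    "mmcv-full": "1.7.0",
    "mmdet": "2.28.2",
    "imageio": "2.13.5",
}


def find_mismatches(expected, get_version=metadata.version):
    """Return {package: (wanted, found)} for every pin that is not satisfied.

    `found` is None when the package is not installed at all.
    """
    mismatches = {}
    for name, wanted in expected.items():
        try:
            found = get_version(name)
        except metadata.PackageNotFoundError:
            found = None
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in find_mismatches(EXPECTED).items():
        print(f"{name}: expected {wanted}, found {found or 'not installed'}")
```

Running the script inside the activated `cyws` environment prints one line per package whose installed version differs from the pin, and nothing if all pins match.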

Please use the following to download the datasets presented in this work. The checksums can be found here.

```
coco_inpainted
├── train
│   ├── data_split.pkl
│   ├── list_of_indices.npy
│   ├── images_and_masks
│   │   ├── <index>.png (original coco image)
│   │   ├── <index>_mask<id>.png (mask of inpainted objects)
│   │   └── ...
│   ├── inpainted
│   │   ├── <index>_mask<id>.png (inpainted image corresponding to the mask with the same name)
│   │   └── ...
│   └── metadata
│       ├── <index>.npy (annotations)
│       └── ...
└── test
    ├── small
    │   ├── data_split.pkl
    │   ├── list_of_indices.npy
    │   ├── images_and_masks/
    │   ├── inpainted/
    │   ├── metadata/
    │   └── test_augmentation/
    ├── medium/
    └── large/
```

Note: We deemed it convenient to bundle the inpainted images along with their corresponding (original) COCO images here to allow for a single-click download. Please see COCO Terms of Use.
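The naming scheme in the `coco_inpainted` layout above links each mask to its original image and to its inpainted counterpart. The following sketch collects those triples for one split directory. It is only an illustration of the file layout, not the repository's data loader, and `list_inpainting_triples` is a hypothetical helper name.

```python
from pathlib import Path


def list_inpainting_triples(split_dir):
    """Return (original, mask, inpainted) path triples for one split directory.

    Assumes the layout shown above: masks named <index>_mask<id>.png sit in
    images_and_masks/ next to <index>.png, and the inpainted image has the
    same file name as its mask under inpainted/.
    """
    split_dir = Path(split_dir)
    masks_dir = split_dir / "images_and_masks"
    inpainted_dir = split_dir / "inpainted"
    triples = []
    for mask_path in sorted(masks_dir.glob("*_mask*.png")):
        # Everything before "_mask" is the index of the original COCO image.
        index = mask_path.stem.split("_mask")[0]
        original = masks_dir / f"{index}.png"
        inpainted = inpainted_dir / mask_path.name
        # Skip incomplete entries rather than failing on them.
        if original.exists() and inpainted.exists():
            triples.append((original, mask_path, inpainted))
    return triples
```

For example, `list_inpainting_triples("coco_inpainted/train")` would return one triple per mask that has both an original and an inpainted image on disk.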

```
kubric_change
├── metadata.npy (this is generated automatically the first time you load the dataset)
├── <index>_0.png (image 1)
├── <index>_1.png (image 2)
├── mask_<index>_00000.png (change mask for image 1)
├── mask_<index>_00001.png (change mask for image 2)
└── ...
```

Note: This dataset was generated using kubric.
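Each `kubric_change` sample consists of four files that share an index. A minimal sketch of grouping them, assuming only the file names listed above (the helper name `list_kubric_pairs` and the returned dictionary keys are hypothetical, not taken from the repository):

```python
from pathlib import Path


def list_kubric_pairs(root):
    """Return one dict per index that has both images and both change masks."""
    root = Path(root)
    pairs = []
    for image1 in sorted(root.glob("*_0.png")):
        if image1.name.startswith("mask_"):
            continue  # defensive: only image files define an index
        index = image1.name[: -len("_0.png")]
        entry = {
            "index": index,
            "image1": image1,
            "image2": root / f"{index}_1.png",
            "mask1": root / f"mask_{index}_00000.png",
            "mask2": root / f"mask_{index}_00001.png",
        }
        # Keep only complete samples.
        if all(entry[key].exists() for key in ("image2", "mask1", "mask2")):
            pairs.append(entry)
    return pairs
```

Samples with a missing image or mask are skipped, so the length of the result gives a quick count of usable pairs.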

Download the original images using the link provided by Jhamtani et al., then download our annotations as .npy.gz.

```
std
├── annotations.npy (ours)
├── <index>.png (provided by Jhamtani et al.)
├── <index>_2.png (provided by Jhamtani et al.)
└── ...
```

Note: The work by Jhamtani et al. can be found here.

Download the original background images as .tar.gz, then download the synthetic text images as .h5.gz.

```
synthtext_change
├── bg_imgs/ (original bg images)
│   └── ...
└── synthtext-change.h5 (images with synthetic text we generated)
```

Note: The code used to generate this dataset is modified from here.
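Once the archives are downloaded, they can be compared against the published checksums. The sketch below hashes a file in chunks. The hash algorithm is an assumption (this README does not state which one the checksum file uses), so it is left as a parameter, and `file_digest` and `matches_checksum` are hypothetical helper names.

```python
import hashlib


def file_digest(path, algorithm="sha256", chunk_size=1 << 20):
    """Hash a file in chunks so large archives do not need to fit in memory.

    `algorithm` is an assumption; set it to whatever the checksum list uses.
    """
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_checksum(path, expected_hex, algorithm="sha256"):
    """Compare a file's digest with an expected hex string, ignoring case."""
    return file_digest(path, algorithm) == expected_hex.strip().lower()
```

A mismatch usually indicates an interrupted download, in which case fetching the archive again is the simplest fix.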

Disclaimer - Don't forget to update the path_to_dataset in the relevant config files.
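To see which entries still need editing, the config files can be scanned for `path_to_dataset` lines. This is a rough text-based sketch that assumes each entry sits on its own line as `path_to_dataset: <value>`. The actual structure of the config files is not described here, and `find_dataset_paths` and `report_missing` are hypothetical helpers.

```python
import re
from pathlib import Path

# Matches lines such as "    path_to_dataset: /some/path". Commented-out
# lines are ignored; inline trailing comments are not handled.
ENTRY = re.compile(r"^\s*path_to_dataset\s*:\s*(?P<value>.*?)\s*$")


def find_dataset_paths(config_text):
    """Return (line_number, path) for each path_to_dataset line in the text."""
    found = []
    for number, line in enumerate(config_text.splitlines(), start=1):
        match = ENTRY.match(line)
        if match:
            found.append((number, match.group("value").strip("'\"")))
    return found


def report_missing(config_file):
    """Print every entry of one config file whose path does not exist locally."""
    text = Path(config_file).read_text()
    for number, value in find_dataset_paths(text):
        if not Path(value).exists():
            print(f"{config_file}:{number}: {value} does not exist")
```

Calling `report_missing("configs/detection_resnet50_3x_coam_layers_affine.yml")` before training lists the lines that still point to a location that is not present on the machine.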

Training:

```bash
python main.py --method centernet --gpus 2 --config_file configs/detection_resnet50_3x_coam_layers_affine.yml --max_epochs 200 --decoder_attention scse
```

The codebase is tightly coupled to PyTorch Lightning and Weights & Biases. You may find the following flags helpful:

- `--no_logging` (disables logging to Weights & Biases)
- `--quick_prototype` (runs 1 epoch of train, val and test cycle with 2 batches)
- `--resume_from_checkpoint <path>`
- `--load_weights_from <path>` (initialises the model with these weights)
- `--wandb_id <id>` (for Weights & Biases)
- `--experiment_name <name>` (for Weights & Biases)
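When launching several runs, it can be convenient to assemble the training command programmatically. The sketch below only combines flags listed in this README. `build_train_command` is a hypothetical helper, and it assumes that `--no_logging` and `--quick_prototype` are plain switches that take no value.

```python
import shlex


def build_train_command(config_file, gpus=2, max_epochs=200, no_logging=False,
                        quick_prototype=False, resume_from_checkpoint=None,
                        experiment_name=None):
    """Return the training command as an argv list for subprocess.run."""
    argv = [
        "python", "main.py",
        "--method", "centernet",
        "--gpus", str(gpus),
        "--config_file", config_file,
        "--max_epochs", str(max_epochs),
        "--decoder_attention", "scse",
    ]
    # Assumed to be boolean switches (no value), per the descriptions above.
    if no_logging:
        argv.append("--no_logging")
    if quick_prototype:
        argv.append("--quick_prototype")
    if resume_from_checkpoint is not None:
        argv += ["--resume_from_checkpoint", str(resume_from_checkpoint)]
    if experiment_name is not None:
        argv += ["--experiment_name", experiment_name]
    return argv


if __name__ == "__main__":
    command = build_train_command(
        "configs/detection_resnet50_3x_coam_layers_affine.yml",
        no_logging=True,
        quick_prototype=True,
    )
    print(shlex.join(command))  # copy-pasteable shell form of the command
```

Combining `--no_logging` with `--quick_prototype`, as in the usage above, gives a short smoke test that does not create a Weights & Biases run.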

Testing:

```bash
python main.py --method centernet --gpus 2 --config_file configs/detection_resnet50_3x_coam_layers_affine.yml --decoder_attention scse --test_from_checkpoint <path>
```

Demo/Inference:

```bash
python demo_single_pair.py --load_weights_from <path_to_checkpoint> --config_file configs/detection_resnet50_3x_coam_layers_affine.yml --decoder_attention scse
```

| Test pairs | COCO-Inpainted | Synthtext-Change | VIRAT-STD | Kubric-Change |
|---|---|---|---|---|
| pretrained-resnet50-3x-coam-scSE-affine.ckpt | 0.63 | 0.89 | 0.54 | 0.76 |

```
@InProceedings{Sachdeva_WACV_2023,
    title = {The Change You Want to See},
    author = {Sachdeva, Ragav and Zisserman, Andrew},
    booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
    year = {2023},
}
```