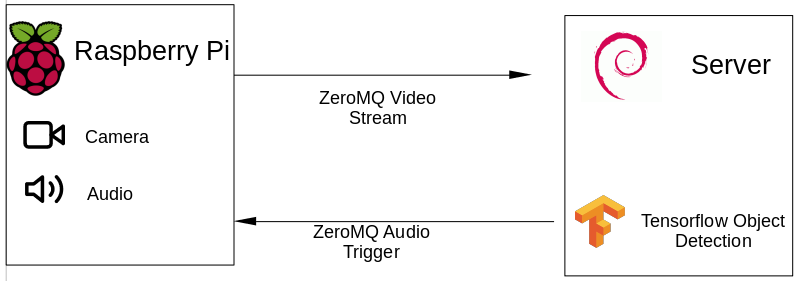

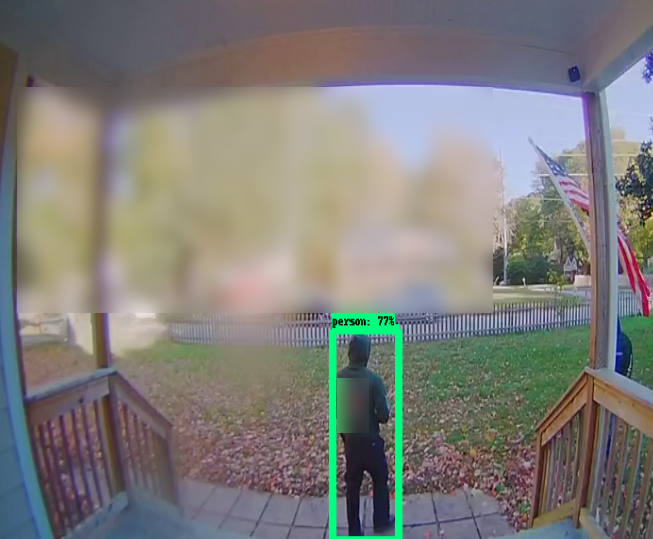

A Raspberry Pi powered, distributed (edge) computing camera setup that runs a TensorFlow object detection model to determine whether a person is in the camera's view. The Raspberry Pi handles video streaming and triggers actions (such as playing audio, turning on lights, or triggering an Arduino), whereas a server or laptop runs the object detection. With a suitable TFLite installation, detection can also run locally on the Raspberry Pi.

Depending on the detection criteria, a plugin model can trigger downstream actions.
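
As a minimal sketch of what such a detection criterion could look like: the snippet below checks whether any detection in a frame is a person above a score threshold. The dictionary keys `class_id` and `score`, the function name, and the default threshold are assumptions for illustration, not this project's actual data structures. Class id 1 is "person" in the COCO label map.

```python
from typing import Dict, List

# "person" in the COCO label map used by COCO-trained detection models
PERSON_CLASS_ID = 1


def person_detected(detections: List[Dict], min_score: float = 0.5) -> bool:
    """Return True if any detection is a person scoring at least min_score.

    The keys "class_id" and "score" are hypothetical names for this sketch.
    """
    return any(
        d["class_id"] == PERSON_CLASS_ID and d["score"] >= min_score
        for d in detections
    )


# Example frame: one detection of another class, one confident person
frame_detections = [
    {"class_id": 18, "score": 0.91},
    {"class_id": 1, "score": 0.76},
]
print(person_detected(frame_detections))
```

Raising `min_score` trades missed detections for fewer false triggers of downstream actions.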

Based on my blog.

Side note: The setup shown here only fits the edge use case to a degree, as detection runs on a separate machine; technically, the Raspberry Pi is capable of running TensorFlow on board, e.g. through TFLite or esp32cam.

You can change this behavior by relying on a local TensorFlow instance and having the ZMQ communication run over localhost.

This project requires:

- A Raspberry Pi + the camera module v2 (the client)
- Any Linux machine on the same network (the server)

A helper script is available:

    # Use a virtual environment
    python3 -m venv env
    source env/bin/activate
    bash ./sbin/install_tf_vidgear.sh [server/client]

Please see INSTALL.md for details.

Edit conf/config.ini with the settings for your Raspberry Pi and server.

To play audio, set Path in conf/plugins.d/audio.ini to the directory that holds your audio files:

    [Audio]
    Path=../audio_files
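
Because the Path value is relative, it helps to know which directory it is resolved against. The sketch below reads the `[Audio]` section with Python's standard `configparser` and resolves the path against a base directory passed in by the caller. The function name `audio_dir` and the choice of base directory are assumptions; the project may resolve the path differently.

```python
import configparser
from pathlib import Path

# Same content as the audio.ini fragment above
AUDIO_INI = """
[Audio]
Path=../audio_files
"""


def audio_dir(ini_text: str, base_dir: Path) -> Path:
    """Resolve the configured audio path relative to base_dir (an assumption)."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    # Option names are case-insensitive in configparser, so "Path" matches
    return (base_dir / parser["Audio"]["Path"]).resolve()


# Resolved against a "conf" directory below the current working directory
print(audio_dir(AUDIO_INI, Path("conf")))
```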

If you want to change the model, please check the Model Zoo. The blog article used the outdated ssd_mobilenet_v1_coco_2017_11_17.

    [Tensorflow]
    ModelUrl=ssd_mobilenet_v3_large_coco_2019_08_14
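
Despite its name, the ModelUrl setting holds a model name rather than a full URL. A plausible way to turn that name into a download location is sketched below, assuming the archive naming used by the TensorFlow 1 detection Model Zoo (`<base>/<model name>.tar.gz`). Whether this project builds the URL the same way is an assumption, and `model_archive_url` is a hypothetical helper.

```python
# Base URL used by the TensorFlow 1 object detection Model Zoo archives
DOWNLOAD_BASE = "http://download.tensorflow.org/models/object_detection/"


def model_archive_url(model_name: str) -> str:
    """Build the archive URL for a Model Zoo model name (hypothetical helper)."""
    return f"{DOWNLOAD_BASE}{model_name}.tar.gz"


print(model_archive_url("ssd_mobilenet_v3_large_coco_2019_08_14"))
```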

    python3 -m client.sender --input 0 # for picam
    python3 -m client.sender --input '/path/to/video' # for local video

    python3 -m server.receiver
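
The `--input` flag accepts either a camera index (`0`) or a path to a video file. One way to handle both with `argparse` is sketched here; this is not the project's actual argument parsing, and `parse_source` and `parse_args` are hypothetical names.

```python
import argparse
from typing import List, Union


def parse_source(value: str) -> Union[int, str]:
    """Treat an all-digit value as a camera index, anything else as a file path."""
    return int(value) if value.isdigit() else value


def parse_args(argv: List[str]) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Hypothetical sender CLI sketch")
    parser.add_argument(
        "--input",
        type=parse_source,
        default=0,
        help="camera index (e.g. 0) or path to a video file",
    )
    return parser.parse_args(argv)


print(parse_args(["--input", "0"]).input)
print(parse_args(["--input", "/path/to/video"]).input)
```

Converting the index to an integer up front matters because video capture APIs commonly distinguish device indices from file paths by type.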

A plugin model can trigger downstream actions. Which actions run is determined by the configuration.

Plugins can be enabled by setting the following in config.ini:

    [Plugins]
    Enabled=audio
    Disabled=
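
To illustrate how the two keys might interact, the sketch below parses the `[Plugins]` section with `configparser` and returns the enabled plugins that are not also listed as disabled. It assumes that multiple plugins are given as a comma-separated list and that Disabled takes precedence; both are assumptions, not confirmed behavior of this project.

```python
import configparser
from typing import List

# Same content as the [Plugins] fragment above
PLUGINS_INI = """
[Plugins]
Enabled=audio
Disabled=
"""


def _split(value: str) -> List[str]:
    """Split a comma-separated value, dropping empty entries (assumed format)."""
    return [name.strip() for name in value.split(",") if name.strip()]


def active_plugins(ini_text: str) -> List[str]:
    """Return enabled plugin names that are not listed under Disabled."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    section = parser["Plugins"]
    enabled = _split(section.get("Enabled", ""))
    disabled = set(_split(section.get("Disabled", "")))
    return [name for name in enabled if name not in disabled]


print(active_plugins(PLUGINS_INI))
```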

Currently, the following plugins are available:

| Plugin | Description | Requirements | Configuration | Base |
|---|---|---|---|---|
| audio | Plays audio files once a person is detected | Either playsound, pygame, or omxplayer | conf/plugins.d/audio.ini | ZMQ |
| store_video | Stores video files on the server, with a defined buffer or length | Path and Encoding settings | conf/plugins.d/store_video.ini | ZServerMQ |
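
For readers who want a mental model of how such plugins could hang together, here is a generic sketch of a plugin interface with a dispatcher. It is not the project's actual base classes (the Base column above names those); `Plugin`, `LogPlugin`, and `dispatch` are hypothetical names, and the detection dictionary is an assumed shape.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Plugin(ABC):
    """Hypothetical plugin interface: one hook called per positive detection."""

    name = "base"

    @abstractmethod
    def run(self, detection: Dict) -> None:
        """React to a detection, e.g. play audio or store video."""


class LogPlugin(Plugin):
    """Toy plugin that records a message instead of triggering hardware."""

    name = "log"

    def __init__(self) -> None:
        self.events: List[str] = []

    def run(self, detection: Dict) -> None:
        self.events.append(f"person detected with score {detection['score']:.2f}")


def dispatch(plugins: List[Plugin], detection: Dict) -> None:
    """Call every active plugin with the same detection."""
    for plugin in plugins:
        plugin.run(detection)


log = LogPlugin()
dispatch([log], {"score": 0.8})
print(log.events)
```

Keeping the hook this narrow means a new action only needs a new subclass and an entry in the plugin configuration.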

This project is in an early state of development. Therefore, there are several open items that need to be covered. Please see TODO for details.

This project is licensed under the GNU GPLv3 License - see the LICENSE file for details.