Let us NOT expect Wall Street to open-source LLMs or open their APIs, due to FinTech institutions' internal regulations and policies.

We democratize Internet-scale data for financial large language models (FinLLMs) at FinNLP and the FinNLP Website.

Disclaimer: We are sharing code for academic purposes under the MIT education license. Nothing herein is financial advice, and it is NOT a recommendation to trade real money. Please use common sense and always consult a professional before trading or investing.

1). Finance is highly dynamic. BloombergGPT retrained an LLM on a mixed dataset of financial and general-purpose data, which is too expensive to repeat often (53 days, at a cost of around $3M). Retraining an LLM every month or every week is prohibitively costly, so lightweight adaptation is highly favorable in finance. Instead of undertaking a costly and time-consuming retraining from scratch with every significant change in the financial landscape, FinGPT can be fine-tuned swiftly to align with new data, which lowers the adaptation cost significantly (estimated at less than $300 per fine-tuning run).

2). Democratizing Internet-scale financial data is critical, and an automatic data curation pipeline should allow timely (monthly or weekly) updates. BloombergGPT, however, relies on privileged data access and APIs. FinGPT presents a more accessible alternative: it prioritizes lightweight adaptation, leveraging some of the best available open-source LLMs, which are then fed financial data and fine-tuned for financial language modeling.

3). The key technology is RLHF (reinforcement learning from human feedback), which is missing in BloombergGPT. RLHF enables an LLM to learn individual preferences (risk-aversion level, investing habits, personalized robo-advisor, etc.), and it is the "secret" ingredient of ChatGPT and GPT-4.
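RLHF usually begins by training a reward model on human preference pairs: two candidate answers where a person marked one as better. A common objective for this step is the Bradley-Terry pairwise loss, -log σ(r_chosen - r_rejected). The sketch below covers only that one step, assuming the scalar rewards already come from some model; it is an illustration, not FinGPT's or OpenAI's RLHF code, and the preference example is hypothetical.

```python
import math


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss for an RLHF reward model: -log(sigmoid(r_chosen - r_rejected)).

    The loss is small when the reward model scores the human-preferred
    answer higher than the rejected one, and large otherwise.
    """
    margin = r_chosen - r_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch on the sign so exp() never overflows
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical preference from a risk-averse user: answer A (bond ladder) was
# preferred over answer B (leveraged ETF). Rewards are illustrative numbers.
print(pairwise_preference_loss(2.0, -1.0))  # ranking agrees with the user: low loss
print(pairwise_preference_loss(-1.0, 2.0))  # ranking disagrees: high loss
```

Minimizing this loss over many such pairs teaches the reward model a user's or annotator's preferences; the policy LLM is then optimized against that reward model (InstructGPT, for example, used PPO for this stage).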


FinGPT V3 (Updated on 8/4/2023)

- The FinGPT v3 series are LLMs fine-tuned with the LoRA method on a news and tweets sentiment analysis dataset; they achieve the best scores on most of the financial sentiment analysis datasets.

- FinGPT v3.1 uses ChatGLM2-6B as its base model; FinGPT v3.2 uses Llama2-7B as its base model.

- Benchmark Results:

| Weighted F1 | BloombergGPT | ChatGLM2 | Llama2 | FinGPT v3.1 | v3.1.1 (8-bit) | v3.1.2 (QLoRA) | FinGPT v3.2 |
|---|---|---|---|---|---|---|---|
| FPB | 0.511 | 0.381 | 0.390 | 0.855 | 0.855 | 0.777 | 0.850 |
| FiQA-SA | 0.751 | 0.790 | 0.800 | 0.850 | 0.847 | 0.752 | 0.860 |
| TFNS | - | 0.189 | 0.296 | 0.875 | 0.879 | 0.828 | 0.894 |
| NWGI | - | 0.449 | 0.503 | 0.642 | 0.632 | 0.583 | 0.636 |
| Devices | 512 × A100 | 64 × A100 | 2048 × A100 | 8 × A100 | A100 | A100 | 8 × A100 |
| Time | 53 days | 2.5 days | 21 days | 2 hours | 6.47 hours | 4.15 hours | 2 hours |
| Cost | $2.67 million | $14,976 | $4.23 million | $65.6 | $25.88 | $17.01 | $65.6 |
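The scores in the table are weighted F1: the per-class F1 scores averaged with weights proportional to each class's support (its number of true instances). A minimal pure-Python sketch of the metric, assuming three sentiment labels; the toy labels are made up, and for labels that appear in `y_true` this should agree with scikit-learn's `f1_score(..., average="weighted")`.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Support-weighted average of per-class F1 scores."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = n - tp  # true instances of this label that were missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        score += f1 * n / total  # weight each class by its share of the data
    return score


# Toy sentiment labels (hypothetical, not from any benchmark above)
y_true = ["positive", "neutral", "negative", "neutral", "positive", "negative"]
y_pred = ["positive", "neutral", "neutral", "neutral", "negative", "negative"]
print(round(weighted_f1(y_true, y_pred), 3))  # 0.656
```

Weighting by support keeps a rare class from dominating the average, which matters for sentiment datasets where neutral items often outnumber the rest.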

Cost per GPU hour. For A100 GPUs, the AWS p4d.24xlarge instance, equipped with 8 A100 GPUs, is used as the benchmark for estimating costs. Note that BloombergGPT also used p4d.24xlarge. As of July 11, 2023, the hourly rate for this instance is $32.773, so the estimated cost per GPU hour is $32.773 / 8 ≈ $4.10. Using this value as the reference unit price for one GPU hour, BloombergGPT's estimated cost is 512 × 53 × 24 = 651,264 GPU hours × $4.10 = $2,670,182.40.
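The same arithmetic applies to any column of the table: GPU hours = number of GPUs × wall-clock hours, and cost = GPU hours × unit price. A small helper, assuming the flat $4.10 per GPU hour derived above; the function and constant names are ours, for illustration. It reproduces the BloombergGPT estimate and the FinGPT v3.1 entry.

```python
# Assumed pricing from the text: p4d.24xlarge (8 x A100) at $32.773/hour, July 11, 2023
P4D_HOURLY_RATE_USD = 32.773
GPUS_PER_INSTANCE = 8
PRICE_PER_GPU_HOUR = round(P4D_HOURLY_RATE_USD / GPUS_PER_INSTANCE, 2)  # 4.096625 -> 4.1


def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total GPU hours consumed by num_gpus GPUs running for `hours` wall-clock hours."""
    return num_gpus * hours


def estimated_cost(num_gpus: int, hours: float, price: float = PRICE_PER_GPU_HOUR) -> float:
    """Estimated training cost in USD at a flat per-GPU-hour price."""
    return gpu_hours(num_gpus, hours) * price


print(gpu_hours(512, 53 * 24))                  # 651264 (BloombergGPT)
print(f"${estimated_cost(512, 53 * 24):,.2f}")  # $2,670,182.40 (BloombergGPT)
print(f"${estimated_cost(8, 2):,.2f}")          # $65.60 (FinGPT v3.1: 8 x A100 for 2 hours)
```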

- Reproduce the results by running the benchmarks; a detailed tutorial is on the way.

- Fine-tune your own FinGPT v3 model with the LoRA method on a single RTX 3090, using this notebook in 8-bit or this notebook in int4 (QLoRA).
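LoRA keeps the pretrained weight matrix W frozen and trains only a low-rank update, so a layer computes h = Wx + (α/r)·BAx, where B is d_out × r, A is r × d_in, and r is much smaller than d_in. Below is a minimal NumPy sketch of a single LoRA layer. The rank r = 8, α = 16, the layer size, and the initialization scales are common defaults chosen for illustration, not FinGPT's actual settings, and the 8-bit/4-bit quantization of W that lets the notebooks fit on an RTX 3090 is omitted.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: np.ndarray, r: int = 8, alpha: int = 16, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                            # frozen pretrained weight, never updated
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable, small random init
        self.B = np.zeros((d_out, r))                   # trainable, zero init: no change at start
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out); frozen path plus scaled low-rank path
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


d = 1024  # illustrative hidden size
layer = LoRALinear(np.random.default_rng(1).normal(size=(d, d)), r=8)
x = np.ones((2, d))
assert np.allclose(layer(x), x @ layer.weight.T)  # B == 0, so output equals the frozen layer
print(layer.trainable_params(), "trainable vs", d * d, "frozen")
```

Because B starts at zero, fine-tuning begins exactly from the base model, and only 2·r·d parameters per layer (about 1.6% of a 1024 × 1024 layer at r = 8) receive gradients, which is what makes frequent, cheap re-adaptation practical.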


- Let's train our own FinGPT in American Financial Market with LLaMA and LoRA (Low-Rank Adaptation)


- Let's train our own FinGPT in Chinese Financial Market with ChatGLM and LoRA (Low-Rank Adaptation)

- FinGPT: Powering the Future of Finance with 20 Cutting-Edge Applications

- FinGPT I: Why We Built the First Open-Source Large Language Model for Finance

- FinGPT II: Cracking the Financial Sentiment Analysis Task Using Instruction Tuning of General-Purpose Large Language Models

- Real-time data curation pipeline to democratize data for FinGPT

- Lightweight adaptation to democratize the FinGPT model for both individuals and institutions (frequent updates)

- Support various financial applications

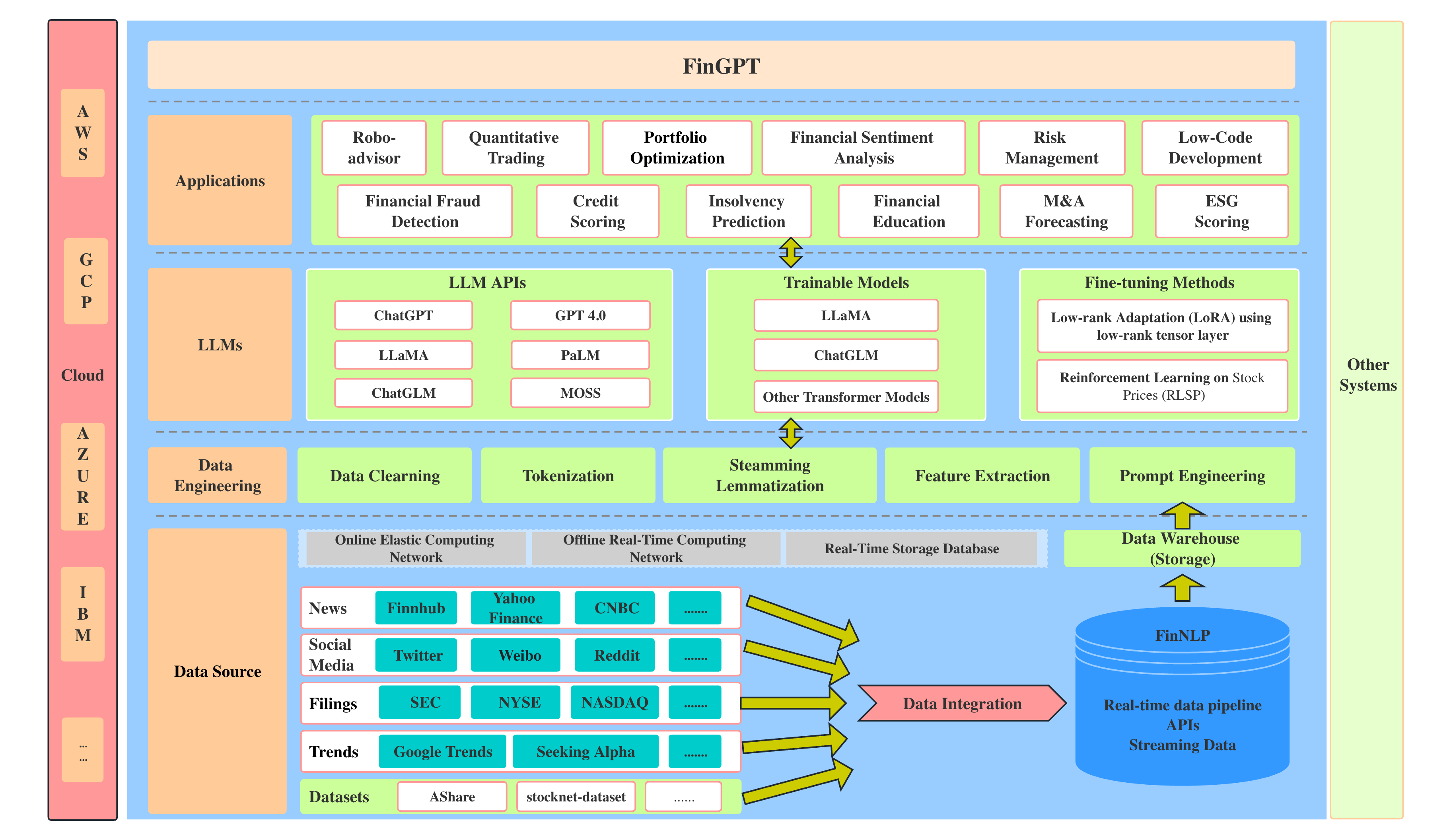

- FinNLP provides a playground for everyone interested in LLMs and NLP in finance. Here we provide full pipelines for LLM training and fine-tuning in the field of finance. The full architecture is shown in the following picture. Detailed code and introductions can be found here, or you may refer to the wiki.

- Data source layer: This layer ensures comprehensive market coverage, addressing the temporal sensitivity of financial data through real-time information capture.

- Data engineering layer: Primed for real-time NLP data processing, this layer tackles the inherent challenges of high temporal sensitivity and low signal-to-noise ratio in financial data.

- LLMs layer: Focusing on a range of fine-tuning methods such as LoRA, this layer addresses the highly dynamic nature of financial data, keeping the model relevant and accurate.

- Application layer: Showcasing practical applications and demos, this layer highlights FinGPT's potential in the financial sector.
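As a rough illustration of the data engineering layer's job, the sketch below keeps only recent items (temporal sensitivity) and drops duplicate headlines after normalization (a crude signal-to-noise filter). The `NewsItem` record, the 24-hour window, and the normalization rule are hypothetical choices for this example, not FinNLP's actual pipeline.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class NewsItem:  # hypothetical record emitted by the data source layer
    published: datetime
    headline: str


def _normalize(text: str) -> str:
    # Lowercase and collapse punctuation/whitespace so trivial variants match
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def clean_feed(items, now, window=timedelta(hours=24)):
    """Keep items published within `window` of `now`, newest first, with no duplicate headlines."""
    seen = set()
    kept = []
    for item in sorted(items, key=lambda i: i.published, reverse=True):
        if now - item.published > window:
            continue  # stale: outside the freshness window
        key = _normalize(item.headline)
        if not key or key in seen:
            continue  # empty or duplicate headline
        seen.add(key)
        kept.append(item)
    return kept


now = datetime(2023, 8, 4, 12, tzinfo=timezone.utc)
feed = [
    NewsItem(now - timedelta(hours=1), "Fed holds rates steady"),
    NewsItem(now - timedelta(hours=2), "FED HOLDS RATES STEADY!"),
    NewsItem(now - timedelta(days=3), "Old earnings recap"),
]
print([i.headline for i in clean_feed(feed, now)])  # ['Fed holds rates steady']
```

A real pipeline would add source-specific parsing, fuzzy deduplication, and relevance scoring, but the freshness window and deduplication step capture the two concerns the layer description names.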

- Columbia Perspectives on ChatGPT

- [MIT Technology Review] ChatGPT is about to revolutionize the economy. We need to decide what that looks like

- [BloombergGPT] BloombergGPT: A Large Language Model for Finance

- [Finextra] ChatGPT and Bing AI to sit as panellists at fintech conference

- [YouTube video] I Built a Trading Bot with ChatGPT, combining ChatGPT and FinRL.

- Hey, ChatGPT! Explain FinRL code to me!

- ChatGPT Robo Advisor v2

- ChatGPT Robo Advisor v1

- A demo of using ChatGPT to build a Robo-advisor

- ChatGPT Trading Agent V2

- A FinRL agent that trades as smartly as ChatGPT by using the large language model behind ChatGPT

- ChatGPT Trading Agent V1

- Trade with the suggestions given by ChatGPT

- ChatGPT adds technical indicators into FinRL

- Sparks of artificial general intelligence: Early experiments with GPT-4

- [GPT-4] GPT-4 Technical Report

- [InstructGPT] Training language models to follow instructions with human feedback. NeurIPS 2022.

The Journey of Open AI GPT models. GPT models explained. Open AI's GPT-1, GPT-2, GPT-3.

- [GPT-3] Language models are few-shot learners. NeurIPS 2020.

- [GPT-2] Language Models are Unsupervised Multitask Learners

- [GPT-1] Improving Language Understanding by Generative Pre-Training

- [Transformer] Attention is All you Need. NeurIPS 2017.

- [BloombergGPT] BloombergGPT: A Large Language Model for Finance

- WHAT’S IN MY AI? A Comprehensive Analysis of Datasets Used to Train GPT-1, GPT-2, GPT-3, GPT-NeoX-20B, Megatron-11B, MT-NLG, and Gopher

- FinRL-Meta repo and paper: FinRL-Meta: Market Environments and Benchmarks for Data-Driven Financial Reinforcement Learning. Advances in Neural Information Processing Systems, 2022.

- [AI4Finance] FinNLP: Democratizing Internet-scale financial data.

- GPT-3 Creative Fiction: creative writing by OpenAI’s GPT-3 model, demonstrating poetry, dialogue, puns, literary parodies, and storytelling, plus advice on effective GPT-3 prompt programming and avoiding common errors.

ChatGPT Trading Bot

- [YouTube video] ChatGPT Trading strategy 20097% returns

- [YouTube video] ChatGPT Coding - Make A Profitable Trading Strategy In Five Minutes!

- [YouTube video] Easy Automated Live Trading using ChatGPT (+9660.3% hands free)

- [YouTube video] ChatGPT Trading Strategy 893% Returns

- [YouTube video] ChatGPT 10 Million Trading Strategy

- [YouTube video] ChatGPT: Your Crypto Assistant

- [YouTube video] Generate Insane Trading Returns with ChatGPT and TradingView