This project contains various demos on GKE. It uses Ansible, and everything is defined as code. The main Ansible playbook, `deploy.yaml`, creates the GKE cluster and then installs Oracle 23c Free, PostgreSQL, Kafka, Hazelcast, and any other software needed to demonstrate the capabilities.

- Service account with permissions to create a GKE cluster. The service account key must be downloaded as a JSON file and located at `~/.gcp/credentials.json`.

- Ansible installed on the local machine. Used to orchestrate the deployment.

- kubectl installed on the local machine. Used to interact with the GKE cluster.

- Helm installed on the local machine. Used to install Kafka and Hazelcast.

- Hazelcast CLC installed on the local machine. Used to deploy jobs to the Hazelcast Platform.

- Maven installed on the local machine.

- Skaffold installed on the local machine. This is optional but is needed for local development.

- Hazelcast license key present in the file `~/hazelcast/hazelcast.license` on the local machine.

- Google Auth plugin installed via pip on the local machine: `pip3 install google-auth`.

- `gcloud` installed via `brew install google-cloud-sdk`, and `gke-gcloud-auth-plugin` installed via `gcloud components install gke-gcloud-auth-plugin`, on the local machine.

- A repository in GCP Artifact Registry whose name maps to the variable `repository_id`, in region `asia-south1`. Ansible will push the Docker image to this repository.

Various parameters can be modified via the var files.
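Two of the prerequisites above are files at fixed locations, and a missing one tends to surface only partway through a playbook run. The following is a minimal preflight sketch in plain Java, not part of the project: the class name `PreflightCheck` and the method `missingFiles` are hypothetical, and only the two paths come from the list above.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of the repository.
final class PreflightCheck {

    // Paths from the prerequisites list, relative to the user's home directory.
    static final List<String> REQUIRED_FILES = Arrays.asList(
            ".gcp/credentials.json",
            "hazelcast/hazelcast.license");

    /** Returns the required files that are not regular files under the given home directory. */
    static List<Path> missingFiles(Path home) {
        List<Path> missing = new ArrayList<>();
        for (String relative : REQUIRED_FILES) {
            Path candidate = home.resolve(relative);
            if (!Files.isRegularFile(candidate)) {
                missing.add(candidate);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        Path home = Paths.get(System.getProperty("user.home"));
        List<Path> missing = missingFiles(home);
        if (missing.isEmpty()) {
            System.out.println("All required files are present.");
        } else {
            missing.forEach(p -> System.out.println("Missing: " + p));
            System.exit(1);
        }
    }
}
```

Saved as `PreflightCheck.java`, it can be run with `java PreflightCheck.java` on Java 11 or later. It only checks that the files exist; it does not validate the service account permissions or the license key.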

In general, the Ansible playbook `deploy.yaml` does the following:

- Creates a GKE cluster including VPC, subnets and nodes. See the `gke` role.

- Creates a Postgres database. See the `postgres` role.

- Creates a Kafka cluster and topic along with Prometheus. See the `kafka` role.

- Creates self-signed certificates for Hazelcast. See the `hz` role.

- Creates two Hazelcast clusters with WAN replication and a Management Center. TLS is enabled. See the `hz` role.

- Creates a Hazelcast streaming job. See the `pmt-job` role.

- Start Docker on the laptop and execute `ansible-playbook k8s/deploy.yaml`.

- You can run individual tasks by using `tags`. For example, the first command below creates the GKE cluster, populates the database, configures SSL certificates, deploys the Hazelcast clusters and starts Management Center. The second deploys the pipeline, and the third starts producing the transactions.
  - `ansible-playbook k8s/deploy.yaml --tags="gke,init,postgres,ssl,hz-init,hz"`
  - `ansible-playbook k8s/deploy.yaml --tags="pipeline"`
  - `ansible-playbook k8s/deploy.yaml --tags="tx-prod"`

- You can check the cluster in Management Center (MC) at `http://<EXTERNAL-IP>:8080`, where `EXTERNAL-IP` is the external IP of the `hazelcast-mc` service. Run `kubectl get svc` to get the IP.

- To shut down the cluster, execute `ansible-playbook k8s/undeploy.yaml`.

- To delete Kafka, execute `ansible-playbook k8s/undeploy.yaml --tags="kafka"`.

- Local Docker

- Local Maven

- Hazelcast CLC

- Open the file `pmt-producer/pom.xml` and uncomment the `platform` tag appropriate to your laptop (mac or linux). For GKE deployment, both platforms are built.

- Compile the Java projects and run Docker Compose with `mvn install jib:dockerBuild -Dimage=pmt-producer && docker-compose up -d`.

- You can view the deployment in Hazelcast Management Center at `http://localhost:8080` by adding the server with name `dev` and address `hz`.

- As a one-time step, add a local Docker config for Hazelcast CLC using `clc config add docker cluster.address=localhost:5701 cluster.name=dev`.

- Deploy the data connection to the Kafka service using `clc -c docker script ./k8s/roles/pmt-job/files/kafka-ds-docker.sql`.

- Deploy the job with `clc -c docker job submit ./pmt-job/target/pmt-job-1.0.0-SNAPSHOT.jar --class com.hz.demo.pmt.job.PaymentAggregator`.

Run Oracle 23c Free installed via a third-party helm chart along with Kafka and Hazelcast to demonstrate CDC from Oracle to Hazelcast.

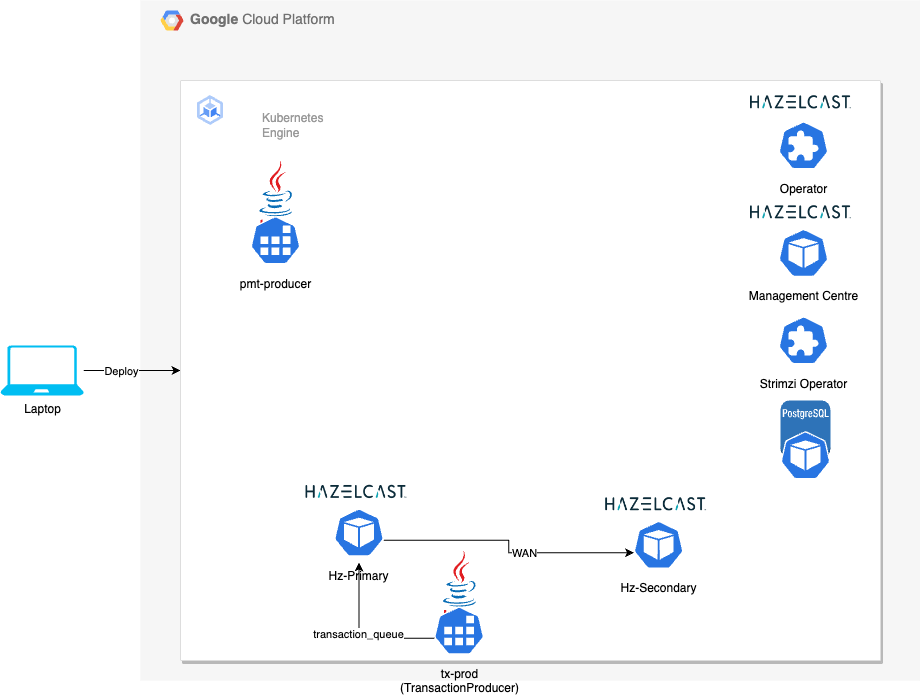

The idea is to process streaming payment messages using Hazelcast Platform. Payment messages are generated by a Java project called `pmt-producer` and are sent to a Kafka topic, `payments`. A Java project called `pmt-job` is deployed as a Hazelcast pipeline to consume the messages from the Kafka topic and process them.

The functional goal is to display a dashboard that updates in real time with the liquidity position of each bank. Payment messages are simple JSON documents, each debiting one bank and crediting another. The Hazelcast Jet job processes the messages and updates the liquidity position of each bank. The dashboard is a simple web page that displays these positions and refreshes as the payments are processed.
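To make the aggregation concrete, here is a minimal sketch in plain Java of how a stream of payments folds into a per-bank position. It is not the project's `pmt-job` code and uses no Hazelcast API. The `Payment` field names are hypothetical, and it assumes each bank starts from zero, so the result is a net position (credits minus debits).

```java
import java.math.BigDecimal;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: the real job runs as a Hazelcast pipeline reading from Kafka.
final class LiquiditySketch {

    /** Hypothetical payment shape: one bank is debited, another is credited. */
    static final class Payment {
        final String debitBank;
        final String creditBank;
        final BigDecimal amount;

        Payment(String debitBank, String creditBank, String amount) {
            this.debitBank = debitBank;
            this.creditBank = creditBank;
            this.amount = new BigDecimal(amount);
        }
    }

    /** Folds payments into a net position per bank, sorted by bank name. */
    static Map<String, BigDecimal> positions(List<Payment> payments) {
        Map<String, BigDecimal> result = new TreeMap<>();
        for (Payment p : payments) {
            // The debited bank loses the amount, the credited bank gains it.
            result.merge(p.debitBank, p.amount.negate(), BigDecimal::add);
            result.merge(p.creditBank, p.amount, BigDecimal::add);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Payment> payments = Arrays.asList(
                new Payment("BANK_A", "BANK_B", "100.00"),
                new Payment("BANK_B", "BANK_C", "40.50"),
                new Payment("BANK_C", "BANK_A", "10.00"));
        // Prints: BANK_A -90.00, BANK_B 59.50, BANK_C 30.50 (one per line)
        positions(payments).forEach((bank, net) -> System.out.println(bank + " " + net));
    }
}
```

Because every payment debits and credits the same amount, the positions always sum to zero, which is a handy sanity check on any aggregation of this kind.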

The demo is deployed on GKE using Ansible. Follow the pre-requisites and steps below to deploy the demo.

The demo can also be run on a local machine using Docker Compose.

- Hazelcast Platform - Hazelcast Platform is a unified real-time data platform that enables companies to act instantly on streaming data. It combines high-performance stream processing capabilities with a built-in fast data store to automate, streamline, and enhance business-critical processes and applications.

- Kafka. Kafka is deployed in KRaft mode with 3 replicas, so ZooKeeper is not required.

- Google Jib. Jib is a Java containerizer from Google that lets Java developers build containers using the Java tools they know. It builds Docker and OCI images for Java applications and is available as plugins for Maven and Gradle.

- Kafka is deployed using this blog

- This project builds on Trade Monitor Dashboard

- getting-started-with-cert-manager-on-google-kubernetes-engine-using-lets-encrypt-for-ingress-ssl/

- Jib is an interesting project

- Oracle registry

- Debezium example with Oracle part 2

- Part 2

- Part 3

- Configure Jaeger and cert-manager in GKE.