This repo runs PaliGemma, a vision-language model, on object detection tasks and uses its predictions as labels. The labels can then be viewed and corrected in the VIA (VGG Image Annotator) tool from the VGG group.
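
To make "predictions as labels" concrete, here is a minimal sketch of how PaliGemma detection text can be turned into pixel boxes. It assumes the usual PaliGemma detection output: four `<locNNNN>` tokens per object, in the order y_min, x_min, y_max, x_max on a 0-1023 grid, followed by the class name, with objects separated by `;`. The function name `parse_detections`, the dictionary keys, and the sample string are illustrative and are not taken from `auto_labelling_paligemma.py`.

```python
import re

# Four <locNNNN> tokens, then the class label; detections are separated by ';'.
DETECTION_PATTERN = re.compile(
    r"<loc(\d{4})><loc(\d{4})><loc(\d{4})><loc(\d{4})>\s*([^;<]+)"
)


def parse_detections(text, image_width, image_height):
    """Convert PaliGemma detection text into pixel-space bounding boxes.

    Assumes the token order y_min, x_min, y_max, x_max on a 0-1023 grid.
    """
    boxes = []
    for match in DETECTION_PATTERN.finditer(text):
        # Normalise each coordinate to [0, 1) before scaling to pixels.
        y_min, x_min, y_max, x_max = (int(v) / 1024 for v in match.groups()[:4])
        boxes.append(
            {
                "label": match.group(5).strip(),
                "x_min": round(x_min * image_width),
                "y_min": round(y_min * image_height),
                "x_max": round(x_max * image_width),
                "y_max": round(y_max * image_height),
            }
        )
    return boxes


# Hypothetical model output for a 1024x512 image (not real model output).
sample = (
    "<loc0256><loc0128><loc0768><loc0512> wind turbine ; "
    "<loc0000><loc0512><loc0512><loc1023> wind turbine"
)
boxes = parse_detections(sample, image_width=1024, image_height=512)
print(boxes)
```

Text without any `<loc...>` tokens yields an empty list, so images with no detections simply get no labels.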

Steps:

- Install dependencies

pip3 install -r requirements.txt - Get token from Hugging Face and set as env variable

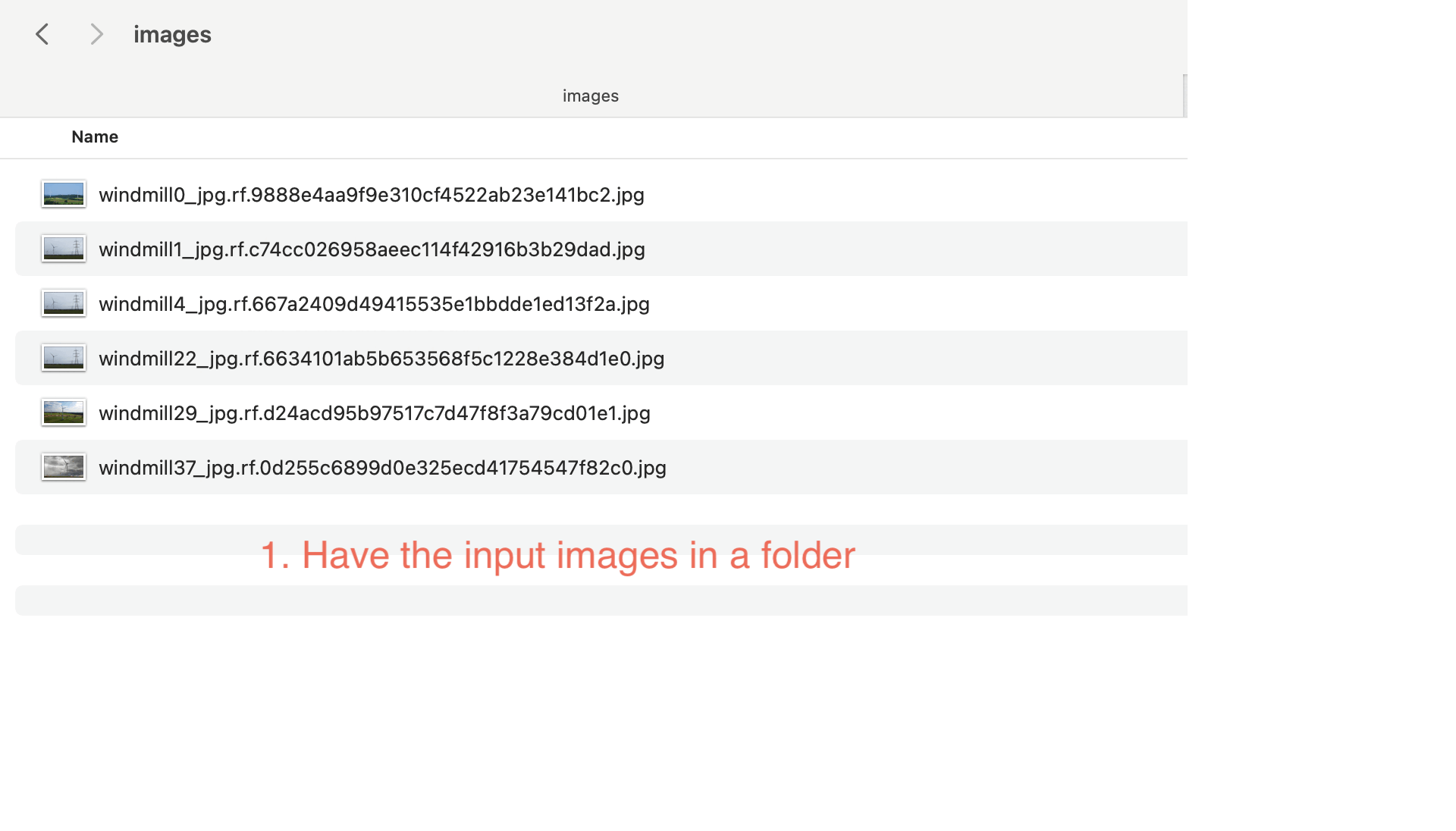

os.environ["HUGGINGFACE_API_TOKEN"] = "<enter the token here>" - Put the images for which labels are needed in the images folder

- Execute

python3 auto_labelling_paligemma.py - Download the VIA tool : https://www.robots.ox.ac.uk/~vgg/software/via/downloads/via-2.0.12.zip

- Click on via.html and upload the annotations generated

- Make sure to have the images folder inside the via folder as shown in

step 4 - Adjust/Add/Delete the annotations based on the need
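
For readers who want to see what an uploadable annotation might look like, below is a minimal sketch that builds one entry in the VIA 2.x JSON annotation layout: entries keyed by filename plus file size in bytes, with rectangular regions given as `x`, `y`, `width`, `height`. The helper `to_via_annotations`, the box dictionary keys, and the region attribute name `label` are assumptions for illustration. The file that `auto_labelling_paligemma.py` actually writes may use a different format or attribute name.

```python
import json


def to_via_annotations(filename, file_size_bytes, boxes, attribute_name="label"):
    """Build a VIA 2.x style annotation entry for one image.

    `boxes` is a list of dicts with keys label, x_min, y_min, x_max, y_max
    in pixels (a hypothetical intermediate format, not the repo's own).
    """
    regions = []
    for box in boxes:
        regions.append(
            {
                "shape_attributes": {
                    "name": "rect",
                    "x": box["x_min"],
                    "y": box["y_min"],
                    "width": box["x_max"] - box["x_min"],
                    "height": box["y_max"] - box["y_min"],
                },
                # The attribute name is an assumption; VIA lets users define their own.
                "region_attributes": {attribute_name: box["label"]},
            }
        )
    # VIA keys each image by its filename followed by its size in bytes.
    key = f"{filename}{file_size_bytes}"
    return {
        key: {
            "filename": filename,
            "size": file_size_bytes,
            "regions": regions,
            "file_attributes": {},
        }
    }


# Hypothetical image name, size, and box, for illustration only.
example_boxes = [
    {"label": "wind turbine", "x_min": 128, "y_min": 128, "x_max": 512, "y_max": 384}
]
annotations = to_via_annotations("turbine.jpg", 2048, example_boxes)
print(json.dumps(annotations, indent=2))
```

VIA resolves the `filename` field relative to its own location, which is why the `images` folder has to sit inside the `via` folder or the filenames need a matching path prefix.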

References:

[1] https://github.com/NSTiwari/PaliGemma

[2] https://huggingface.co/docs/transformers/main/en/model_doc/paligemma

[3] https://www.kaggle.com/datasets/kylegraupe/wind-turbine-image-dataset-for-computer-vision

[4] https://www.robots.ox.ac.uk/~vgg/software/via/

Google Cloud credits were provided for this project. #AISprint

If you use this project in your research, please cite it using the following BibTeX entry:

    @misc{Bhat2024,
      author = {Rajesh Shreedhar Bhat},
      title = {Auto Labelling with Vision-Language Models},
      year = {2024},
      publisher = {GitHub},
      howpublished = {\url{https://github.com/rajesh-bhat/auto_labelling_with_vlms}},
    }