A program developed using MPI for distributed computation of Histogram for large data and their performance anaysis on multi-core systems. The program is written in C and uses SPMD architecture to fork processes and compute histogram. There are two implementations:

histo.c => Using point to point communication MPI_Send, MPI_Recv to communicate between processes

histo.c => Using collective communication MPI_Broadcast, MPI_Scatter and MPI_Gather to communicate between processesThe programs are compiled using mpicc and run using mpiexec. The compilation and execution instructions are shown below:

Compilation:

mpicc mpi_histo.c -o <executable_name>

Execution:

mpiexec -n <no_processes> <executable_name> <bin_count> <data_min> <data_max> <data_count>

Taken from command line arguments:

no_processes : Total number of processes to start. bin_count : Total number of bin_count for histogram. data_min : Minimum value for data data_max : Maximum value for data data_count : Number of random data points to generate.

By default this program creates <data_count> number of random data points between data_min and data_max and computes the histogram for <bin_count> number of data items. The code can be modified according to scenarios or make it read a large data file and compute the histogram on real data.

Example:

Compilation: mpicc mpi_histo.c -o mpi_histo

Execution: mpiexec -n 2 ./mpi_histo 10 1 100 10

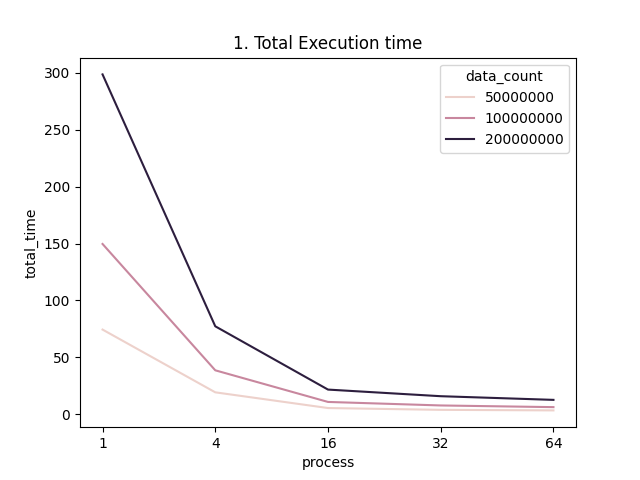

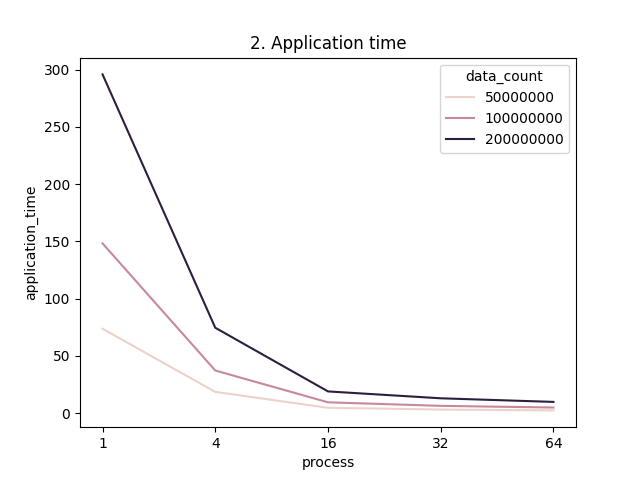

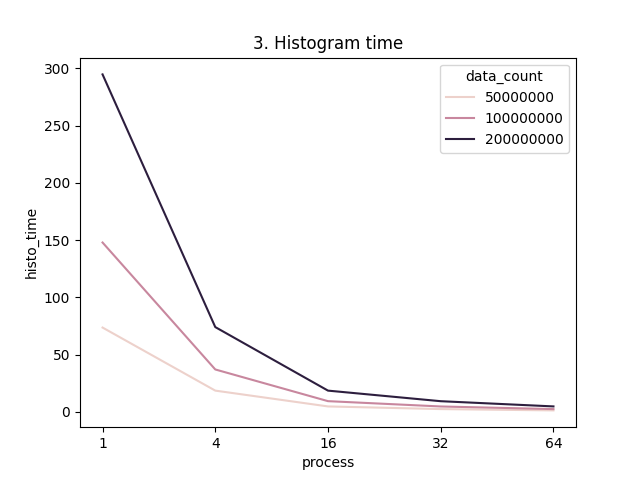

The histogram compuation was done for 50000000, 100000000 and 200000000 data points using 1 to 64 cores on a 4 node CHPC kingspeak cluster.

The results obtained are described here.

Total Execution time: It is the time taken by the program to complete the execution.

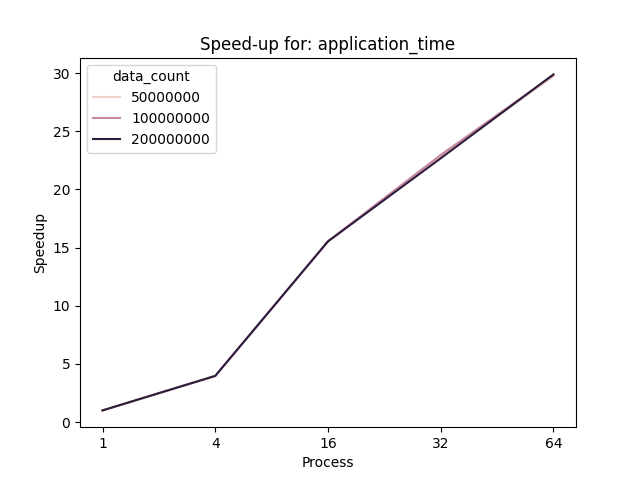

Application Time: This includes the time taken by the process to create and distribute data and compute histogram.

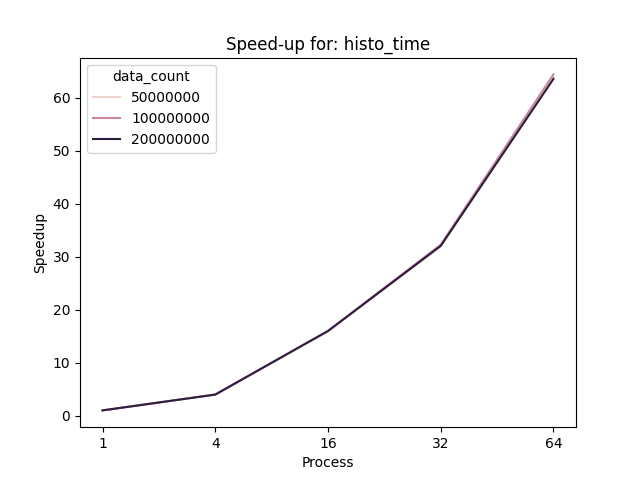

Histogram time: This is the time taken by one process to compute histogram on scattered data.

From the figures, for a serial program (process = 1) as the number of data elements increases the time taken to compute the histogram also increases. But as the number of processes is increased the total time to compute the histogram decreases as the work is divided among the processes. We can also see that the total time and the application time is greater than the time taken to compute the histogram. The reason behind this is, total execution time and application time also includes the time taken to scatter the data among the processes whereas, histogram time only accounts the maximum time taken by a process to compute the histogram.

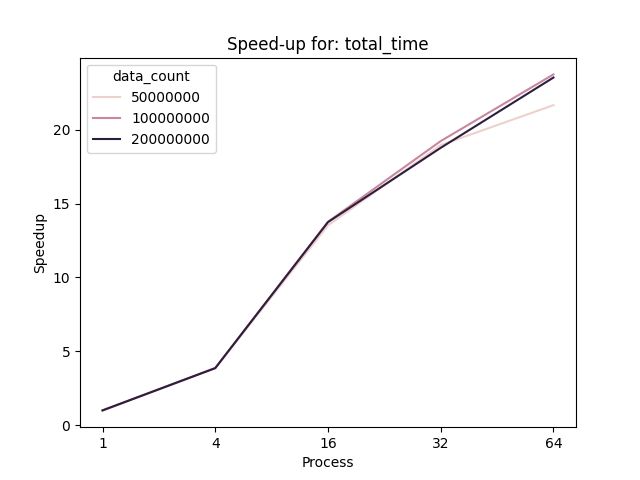

The speedup of a parallel program is given as: S(n,p) = Tserial(n) / Tparallel(n, p). From the figures we can see that the speedup for total time of the program is less than application and histogram computation time for a program. Similar to timing reports, total execution time of a program includes the time taken by process 0 to populate and scatter the data. But in the case of histogram time the speedup is drastically increased and is nearly a linear speedup.

Note: As the number of processes increases it adds a communication overhead on the system thus reducing the execution time.

Processes | Total Time | Application Time | Histogram Time | Data Count |

1 | 74.423788 | 73.756131 | 73.569383 | 50000000 |

4 | 19.308111 | 18.636843 | 18.439549 | 50000000 |

16 | 5.504118 | 4.754371 | 4.615857 | 50000000 |

32 | 3.926105 | 3.230799 | 2.302202 | 50000000 |

64 | 3.436093 | 2.481364 | 1.150515 | 50000000 |

1 | 149.605372 | 148.257896 | 147.896947 | 100000000 |

4 | 38.644788 | 37.317932 | 36.934477 | 100000000 |

16 | 10.867961 | 9.537738 | 9.233756 | 100000000 |

32 | 7.780161 | 6.458228 | 4.580765 | 100000000 |

64 | 6.301298 | 4.971914 | 2.294652 | 100000000 |

1 | 298.528679 | 295.804794 | 294.842392 | 200000000 |

4 | 77.303529 | 74.629462 | 73.983486 | 200000000 |

16 | 21.717287 | 19.049401 | 18.434128 | 200000000 |

32 | 15.902424 | 13.048625 | 9.19137 | 200000000 |

64 | 12.687857 | 9.89958 | 4.63593 | 200000000 |