This demo is about fraud detection in financial transactions. The goal is to demonstrate how to identify fraudulent ATM withdrawals in Europe. The incoming data stream carries around 10,000 financial transactions per second, with a throughput of ca. 2MB/sec, resulting in some 7GB of log data per hour. A fraudulent ATM withdrawal in the context of this demo is defined as any sequence of consecutive withdrawals from the same account in different locations. The underlying ATM location data stems from the OpenStreetMap project.
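The fraud definition above can be sketched in a few lines of Python. This is only one possible reading of the rule, not the code used by the demo: it assumes "consecutive" means successive withdrawals of the same account, it ignores the time between withdrawals, and the function name `flag_suspicious` and the `(account_id, lat, lon)` tuple layout are made up for illustration.

```python
def flag_suspicious(withdrawals):
    """Return the withdrawals whose location differs from the previous
    withdrawal seen for the same account.

    `withdrawals` is an iterable of (account_id, lat, lon) tuples in
    arrival order; this layout is an assumption for the sketch.
    """
    last_location = {}  # account_id -> (lat, lon) of the latest withdrawal
    flagged = []
    for account_id, lat, lon in withdrawals:
        previous = last_location.get(account_id)
        if previous is not None and previous != (lat, lon):
            flagged.append((account_id, lat, lon))
        last_location[account_id] = (lat, lon)
    return flagged
```

A repeated withdrawal at the same ATM is not flagged under this reading; only a change of location for an account is.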

- MapR M5 Enterprise Edition for Apache Hadoop

- Python 2.7+

- heatmap.js for the WebUI (included in the client lib)

- cluster of three or more physical or virtual machines (local sandbox or cloud deployment in GCE or EC2)

- an Internet connection for the front-end (it relies on Google Maps)

Read the deployment notes to learn how to set up the environment and the app.

In the following I assume you've set up the M5 cluster and installed the FrDO app locally on node mapr-demo-2. I created a volume called frdo, mounted at /mapr/frdo, where I installed the app and which serves as the basis for the scratch data.

The one and only script you need to run the demo is called frdo.sh and it has the following options:

- up ... launches both the streaming source gess and the stream processor Sisenik

- down ... shuts down gess/Sisenik, no more data produced

- gen ... continuously generates heatmaps based on snapshots of the FrDO volume

- run ... launches the app server and makes the front-end available via http://mapr-demo-2:6996/

Note that you'll need to adapt the config settings in frdo.sh before you can use it (FrDO volume, Hive config, locations of scripts).

To demonstrate the data generation part of the demo, you first want to launch the streaming data generator gess and the stream processor Sisenik:

[mapr@mapr-demo-2 frdo]$ ./frdo.sh up

Note that in order to have some data to work with, you should let gess/Sisenik run for a few minutes. In the default configuration, Sisenik dumps some 1MB/sec; a 3-minute run, say, leaves you with some 180MB (= 3 x 60 x 1MB/s) worth of data.

Next it's time to generate the heatmap data for the app server. To this end, make sure the Hive Thrift server is running:

[mapr@mapr-demo-2 cluster]$ pwd

/mapr/frdo/cluster

[mapr@mapr-demo-2 cluster]$ hive --service hiveserver &

[mapr@mapr-demo-2 cluster]$ disown

[mapr@mapr-demo-2 cluster]$ lsof -i:10000

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

java 28361 mapr 142u IPv4 101497 0t0 TCP *:ndmp (LISTEN)
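If you prefer to script the check rather than read the lsof output, a minimal Python sketch along these lines should do. It only tests whether something accepts TCP connections on the port, not whether it is actually the Hive Thrift server; the function name `is_listening` is made up for illustration.

```python
import socket


def is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        conn = socket.create_connection((host, port), timeout)
    except (socket.error, socket.timeout):
        # Connection refused, host unreachable or timed out.
        return False
    conn.close()
    return True
```

For the setup shown above, `is_listening("localhost", 10000)` would be the call to make on the node running the server; the host name is an assumption.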

and then tell FrDO to kick off the snapshot-based Hive aggregation task that will continuously run until you exit with CTRL+C:

[mapr@mapr-demo-2 cluster]$ ./frdo.sh gen

Finally, to stop generating data, shut it down like so:

[mapr@mapr-demo-2 cluster]$ ./frdo.sh down

To demo the consumption part you first have to launch the FrDO app server:

[mapr@mapr-demo-2 cluster]$ ./frdo.sh run

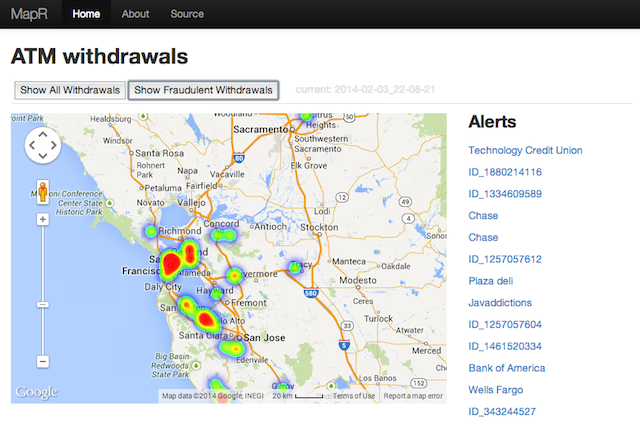

Then, to use the front-end launch a Web browser (tested under Chrome) and you should go to mapr-demo-2:6996/ where you should see the following:

Note: in case you see a blank map, check that you're online, since the front-end depends on Google Maps.

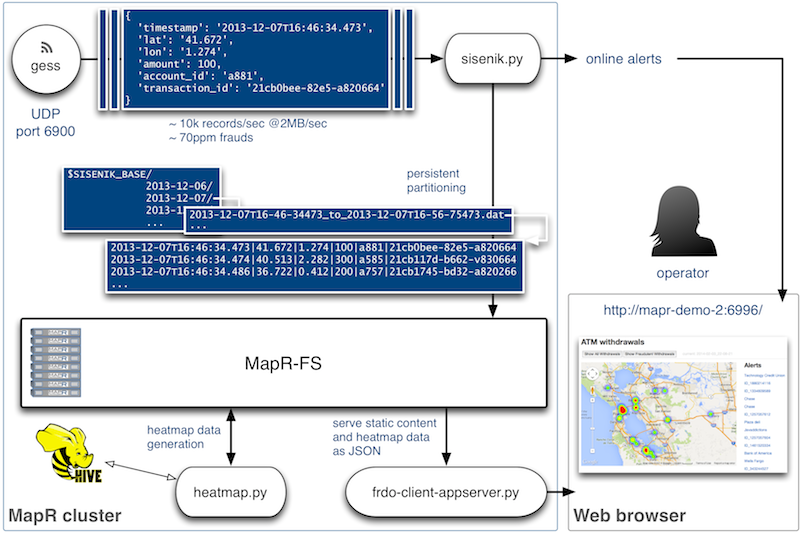

FrDO consists of two parts, the cluster part and the client part.

Cluster part:

- The source of the financial transactions is gess.

- For handling online alerts and creating persistent partitions of the data a script called Sisenik is used.

- Hive and MapR snapshots are used to compute the heat-map data.

See more details in the cluster documentation.

Client part:

- Online alerts are available via the command line (console of one of the cluster machines) as well as via the front-end.

- The app server serves static resources and a JSON representation of the heatmap data.

See also the architecture diagram as PDF.
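To give a feel for what a JSON representation of heatmap data might look like, here is a minimal sketch that counts events per location and serialises the result. The actual Hive aggregation and the JSON schema served by the app server are not shown in this document, so the function names and the `lat`/`lon`/`count` keys are purely hypothetical.

```python
import json
from collections import Counter


def heatmap_points(locations):
    """Count occurrences per (lat, lon) pair.

    `locations` is an iterable of (lat, lon) tuples; the output keys
    are hypothetical, not the schema used by the FrDO app server.
    """
    counts = Counter(locations)
    return [
        {"lat": lat, "lon": lon, "count": n}
        for (lat, lon), n in sorted(counts.items())
    ]


def heatmap_json(locations):
    """Serialise the aggregated points as a JSON string."""
    return json.dumps(heatmap_points(locations))
```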

The static data part consists of the ATM locations, which stem from the OpenStreetMap project and are provided via gess. See the respective gess documentation on how to add your own ATM locations to it.

The dynamic data part in FrDO is all about ATM withdrawals. It is generated by gess in the following form:

...

{

'timestamp': '2013-11-08T10:58:19.668225',

'lat': '36.7220096',

'lon': '-4.4186772',

'amount': 100,

'account_id': 'a335',

'transaction_id': '636adacc-49d2-11e3-a3d1-a820664821e3'

}

...

Once processed by Sisenik, the data on disk is pipe-delimited text of the following shape:

...

2013-12-07T16:46:34.473346|41.6722814|1.2743908|100|a881|21cb0bee-5f5f-11e3-82e5-a820664821e3

2013-12-07T16:46:34.473491|41.6107162|2.2896272|300|a585|21cb117d-5f5f-11e3-b662-a820664821e3

2013-12-07T16:46:34.473635|36.7220096|-4.4186772|200|a757|21cb1745-5f5f-11e3-bd32-a820664821e3

2013-12-07T16:46:34.473811|39.7444347|3.429966|300|a883|21cb1e05-5f5f-11e3-8342-a820664821e3

...
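The two shapes shown above line up field by field. The following sketch converts between them; it is not taken from sisenik.py, the field order is inferred from the sample lines, and the helper names `to_line` and `from_line` are made up for illustration.

```python
# Field order inferred from the sample lines above (an assumption).
FIELDS = ["timestamp", "lat", "lon", "amount", "account_id", "transaction_id"]


def to_line(record, sep="|"):
    """Serialise one withdrawal record (a dict) into a delimited line."""
    return sep.join(str(record[name]) for name in FIELDS)


def from_line(line, sep="|"):
    """Parse one delimited line back into a dict; amount becomes an int."""
    values = line.rstrip("\n").split(sep)
    if len(values) != len(FIELDS):
        raise ValueError(
            "expected %d fields, got %d" % (len(FIELDS), len(values))
        )
    record = dict(zip(FIELDS, values))
    record["amount"] = int(record["amount"])
    return record
```

Keeping `lat` and `lon` as strings, as in the gess record, avoids any floating-point rounding when the values are written back out.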

To realize this app with vanilla Hadoop/HDFS/Hive, one would need something like Apache Kafka to handle the incoming data stream and partitioning. Here we do this with a simple Python script (sisenik.py) of less than 150 LOC, which is only possible because MapRFS is a fully read/write, POSIX-compliant filesystem. The same is true for the app server, another Python script (frdo-client-appserver.py) that runs directly against the mounted cluster filesystem; in the vanilla Hadoop case this would likely be realized via special connectors or by exporting the resulting heat-maps.
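To illustrate why a POSIX-compliant mount keeps such a script small, here is a minimal sketch that appends records to partition files using nothing but ordinary file I/O. It is not the code from sisenik.py: the function name `append_record`, the one-file-per-hour partitioning and the `.dat` suffix are all hypothetical.

```python
import os


def append_record(base_dir, timestamp, line):
    """Append one line to an hourly partition file under base_dir.

    The partition scheme (one file per hour, named after the first
    13 characters of the ISO timestamp) is hypothetical.
    """
    partition = timestamp[:13]  # e.g. "2013-12-07T16"
    path = os.path.join(base_dir, partition + ".dat")
    # Plain append mode works because the target is a regular,
    # read/write filesystem path.
    with open(path, "a") as handle:
        handle.write(line + "\n")
    return path
```

With the volume mounted as described earlier, `base_dir` could be a directory below /mapr/frdo; no Hadoop client library is involved in the write.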

I'd like to thank my colleague Andy Pernsteiner for test-driving this demo and for providing very valuable feedback and bug fixes. Your time and dedication are very much appreciated, Andy!

All software in this repository is available under Apache License 2.0 and all other artifacts such as documentation or figures (drawings) are available under CC BY 3.0.