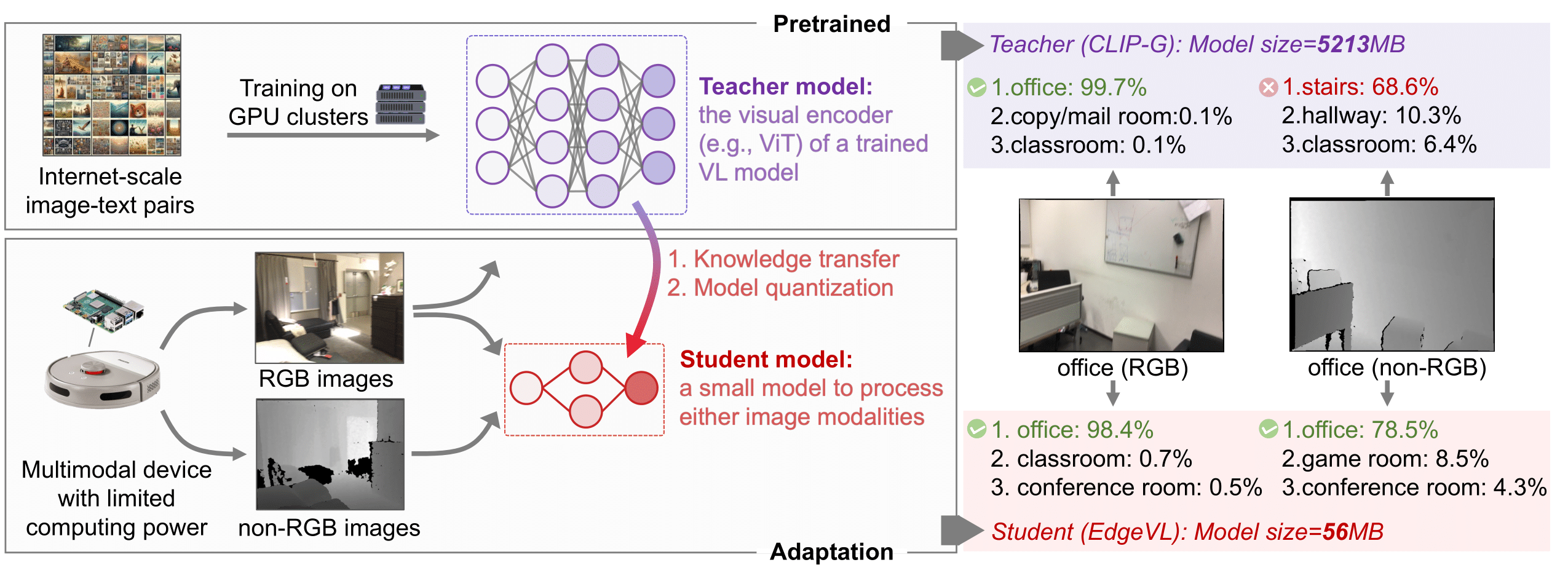

Self-Adapting Large Visual-Language Models to Edge Devices across Visual Modalities

Kaiwen Cai, Zhekai Duan, Gaowen Liu, Charles Fleming, Chris Xiaoxuan Lu

👉 Download the supplementary material of the paper

- [2024-03-15] Our preprint paper is available on arXiv.

- [2024-07-02] Our paper has been accepted to ECCV 2024. 🎉

- [2024-07-20] Training and testing code has been released.

EdgeVL training consists of two stages:

# Stage 1

DATASET=eurosat; CONFIG=swint_mix; QUANT_CONFIG=disable

python run.py --phase=train --config=configs/${DATASET}/${CONFIG}.yaml --quant_config=quantization_configs/${QUANT_CONFIG}.yaml

# Stage 2

DATASET=eurosat; CONFIG=swint_mix_ctrs; QUANT_CONFIG=jacob

python run.py --phase=train_ctrs --config=configs/${DATASET}/${CONFIG}.yaml --quant_config=quantization_configs/${QUANT_CONFIG}.yaml
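The two training stages above can be chained so that Stage 2 starts only if Stage 1 succeeds. The following is a minimal sketch, not part of the repository: the helpers `train_cmd` and `run_stage` and the `DRY_RUN` switch are hypothetical names, and it assumes the Stage 2 config already knows where to find the Stage 1 checkpoint. By default it only prints the commands; set `DRY_RUN=0` and run it from the repository root to execute them.

```shell
DATASET=eurosat
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 = print only, 0 = execute

# Hypothetical helper: build the training command for one stage.
# $1 = phase, $2 = model config name, $3 = quantization config name
train_cmd() {
  echo "python run.py --phase=$1 --config=configs/${DATASET}/$2.yaml --quant_config=quantization_configs/$3.yaml"
}

# Hypothetical helper: print the command, and execute it unless in dry-run mode.
run_stage() {
  local cmd
  cmd="$(train_cmd "$@")"
  echo "$cmd"
  if [ "$DRY_RUN" = "0" ]; then
    $cmd
  fi
}

# Stage 2 runs only when Stage 1 exits successfully.
run_stage train swint_mix disable && run_stage train_ctrs swint_mix_ctrs jacob
```

Keeping the command construction in one function means that switching `DATASET` or a config name changes both stages consistently.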

To test a trained model, set RUN_NAME to the name of its training run:

RUN_NAME=[run_name]; QUANT_CONFIG=jacob; TEST_MODAL=depth

python run.py --phase=test --run_name=${RUN_NAME} --quant_config=quantization_configs/${QUANT_CONFIG}.yaml --test_modal=${TEST_MODAL} --static_or_dynamic=static

To run inference without training, download the pretrained weights from Hugging Face:

cd edgevl

git lfs install

git clone https://huggingface.co/ramfais/edgevl_weights

mkdir logs && mv edgevl_weights/* logs

Then select a model for inference by setting RUN_NAME to one of datt_scannet, datt_eurosat, swint_scannet, swint_eurosat, vits_scannet, or vits_eurosat:

RUN_NAME=datt_scannet; QUANT_CONFIG=jacob; TEST_MODAL=depth

python run.py --phase=test --run_name=${RUN_NAME} --quant_config=quantization_configs/${QUANT_CONFIG}.yaml --test_modal=${TEST_MODAL} --static_or_dynamic=static
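To evaluate every pretrained model in one pass, the test command above can be wrapped in a loop. This is a hedged sketch rather than a script shipped with the repository: `test_cmd` and `DRY_RUN` are hypothetical names, and only the `depth` modality shown above is used. It prints the commands by default; set `DRY_RUN=0` to run them from the repository root after the weights have been moved into `logs`.

```shell
QUANT_CONFIG=jacob
TEST_MODAL=depth
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 = print only, 0 = execute

# Hypothetical helper: build the test command for one pretrained run.
# $1 = run name
test_cmd() {
  echo "python run.py --phase=test --run_name=$1 --quant_config=quantization_configs/${QUANT_CONFIG}.yaml --test_modal=${TEST_MODAL} --static_or_dynamic=static"
}

for RUN_NAME in datt_scannet datt_eurosat swint_scannet swint_eurosat vits_scannet vits_eurosat; do
  cmd="$(test_cmd "$RUN_NAME")"
  echo "$cmd"
  if [ "$DRY_RUN" = "0" ]; then
    # Report a failed run but continue with the remaining models.
    $cmd || echo "evaluation failed for ${RUN_NAME}" >&2
  fi
done
```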

@inproceedings{cai2024selfadapting,
  author = {Cai, Kaiwen and Duan, Zhekai and Liu, Gaowen and Fleming, Charles and Lu, Chris Xiaoxuan},
  booktitle = {European {Conference} on {Computer} {Vision} ({ECCV})},
  year = {2024},
  pages = {},
  publisher = {},
  title = {Self-{Adapting} {Large} {Visual}-{Language} {Models} to {Edge} {Devices} across {Visual} {Modalities}},
}