Created with ❤️

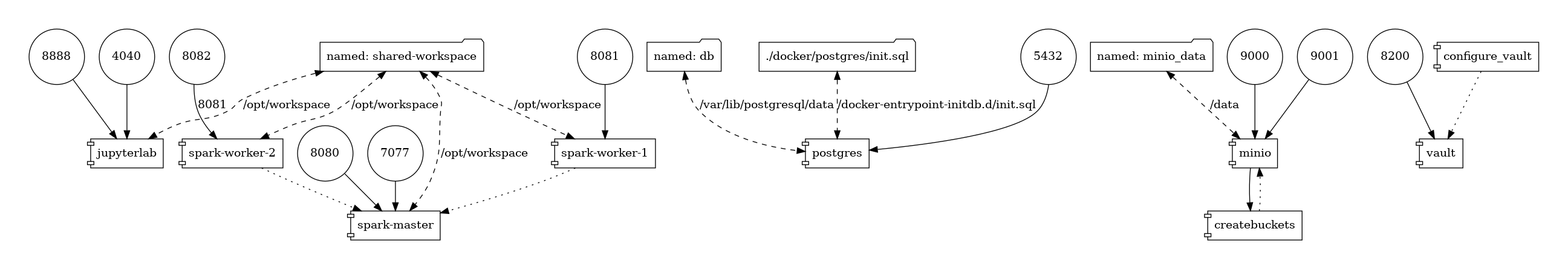

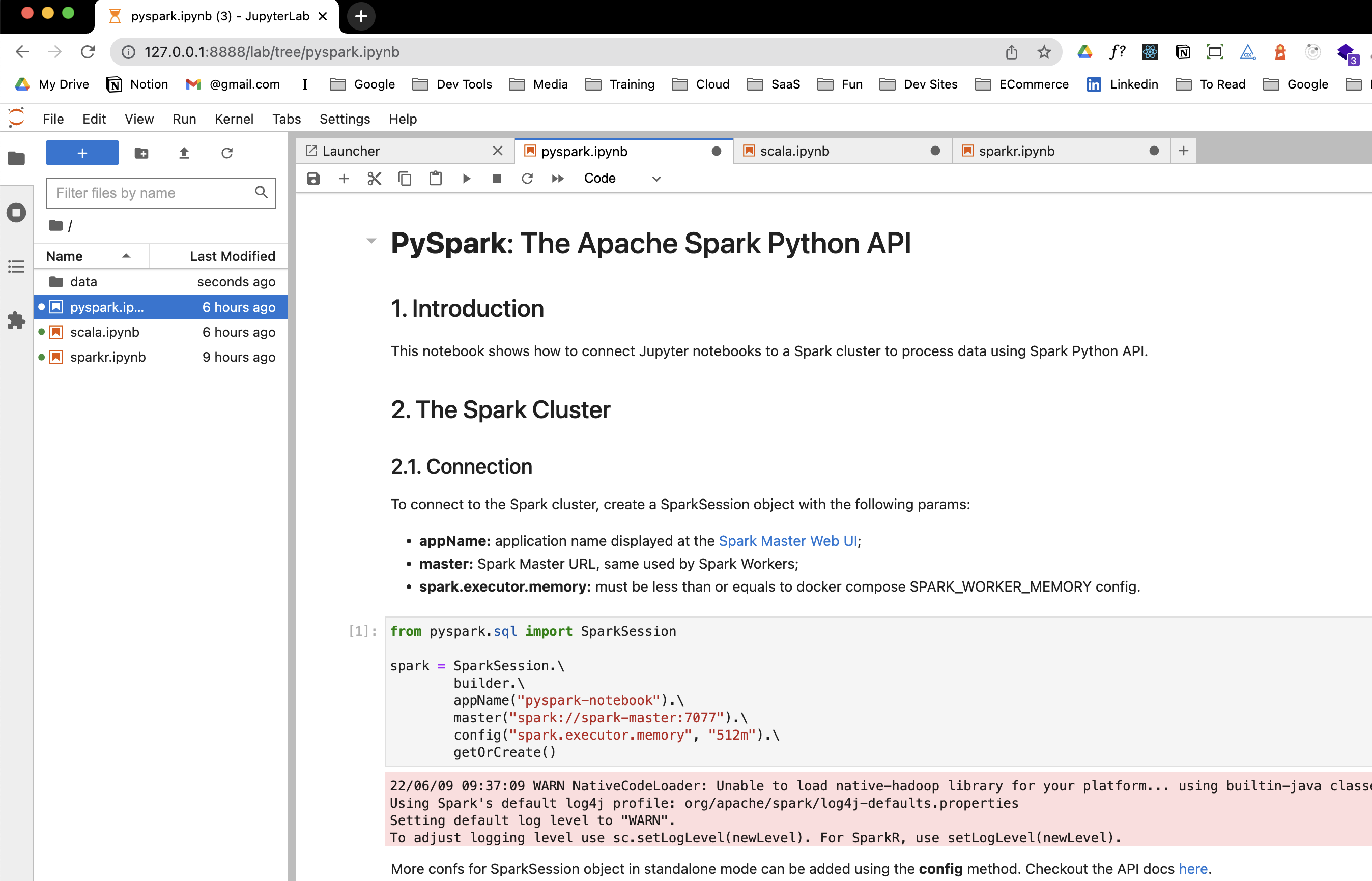

- Spark

  - 1 master node

  - 2 worker nodes

- JupyterLab

  - Python

  - Scala

  - R

- Postgres

- Almond: a Scala kernel for Jupyter 🧠

- JupyterLab 🪐

- Spark ⚡️

- MinIO: S3-compatible object storage 🏪

- HashiCorp Vault: a secret manager 🔐

Clone the repository

    git clone https://github.com/raphaelmansuy/spark-jupyter-env-docker

Enter the project directory

    cd spark-jupyter-env-docker
    ./build.sh
    docker-compose up --build

🧓 user: minioadmin 🔐 password: minioadmin
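The user and password above match MinIO's default login, so one way to use them from a notebook is through the Hadoop S3A connector settings. The sketch below only builds the configuration entries. The helper name `minio_s3a_conf` is hypothetical, and the endpoint `http://minio:9000` is an assumption (a guessed service name on MinIO's default API port) that should be checked against the project's `docker-compose.yml`.

```python
# Sketch: Spark settings for reaching MinIO through the Hadoop S3A connector.
# ASSUMPTION: the endpoint is a guessed service name ("minio") on MinIO's
# default API port 9000; it is not taken from this repository.

def minio_s3a_conf(endpoint="http://minio:9000",
                   access_key="minioadmin",
                   secret_key="minioadmin"):
    """Return Spark config entries that point the S3A filesystem at MinIO."""
    use_tls = endpoint.startswith("https://")
    return {
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # MinIO serves buckets as URL paths, not as virtual-host subdomains.
        "spark.hadoop.fs.s3a.path.style.access": "true",
        # Enable TLS only when the endpoint itself is https.
        "spark.hadoop.fs.s3a.connection.ssl.enabled": str(use_tls).lower(),
    }


if __name__ == "__main__":
    # Print the entries so they can be copied into a Spark configuration.
    for key, value in minio_s3a_conf().items():
        print(f"{key}={value}")
```

In a notebook, each pair could be passed to `SparkSession.builder.config(key, value)` before calling `getOrCreate()`. This only works if the `hadoop-aws` connector is available on the Spark classpath, which is not confirmed here.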

🔐 token myrootid
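After `docker-compose up --build`, the services can take a moment to come up. A small check like the one below can tell whether they are reachable yet. The port numbers are assumptions: they are each tool's usual default, not values read from this repository, so adjust them to match the compose file. The names `ASSUMED_PORTS` and `is_port_open` are hypothetical.

```python
import socket

# ASSUMPTION: usual default ports for each tool, not taken from this
# repository's docker-compose.yml. Adjust to match your setup.
ASSUMED_PORTS = {
    "JupyterLab": 8888,
    "Spark master UI": 8080,
    "MinIO": 9000,
    "Vault": 8200,
}


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    for name, port in ASSUMED_PORTS.items():
        status = "up" if is_port_open("localhost", port) else "not reachable"
        print(f"{name} (localhost:{port}): {status}")
```

An open port only shows that something is listening there; it does not prove the service has finished starting.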

💣 This command deletes all the containers and their volumes

    docker-compose down --volumes

Voilà 🚀