Unofficial Stable Diffusion API using FastAPI

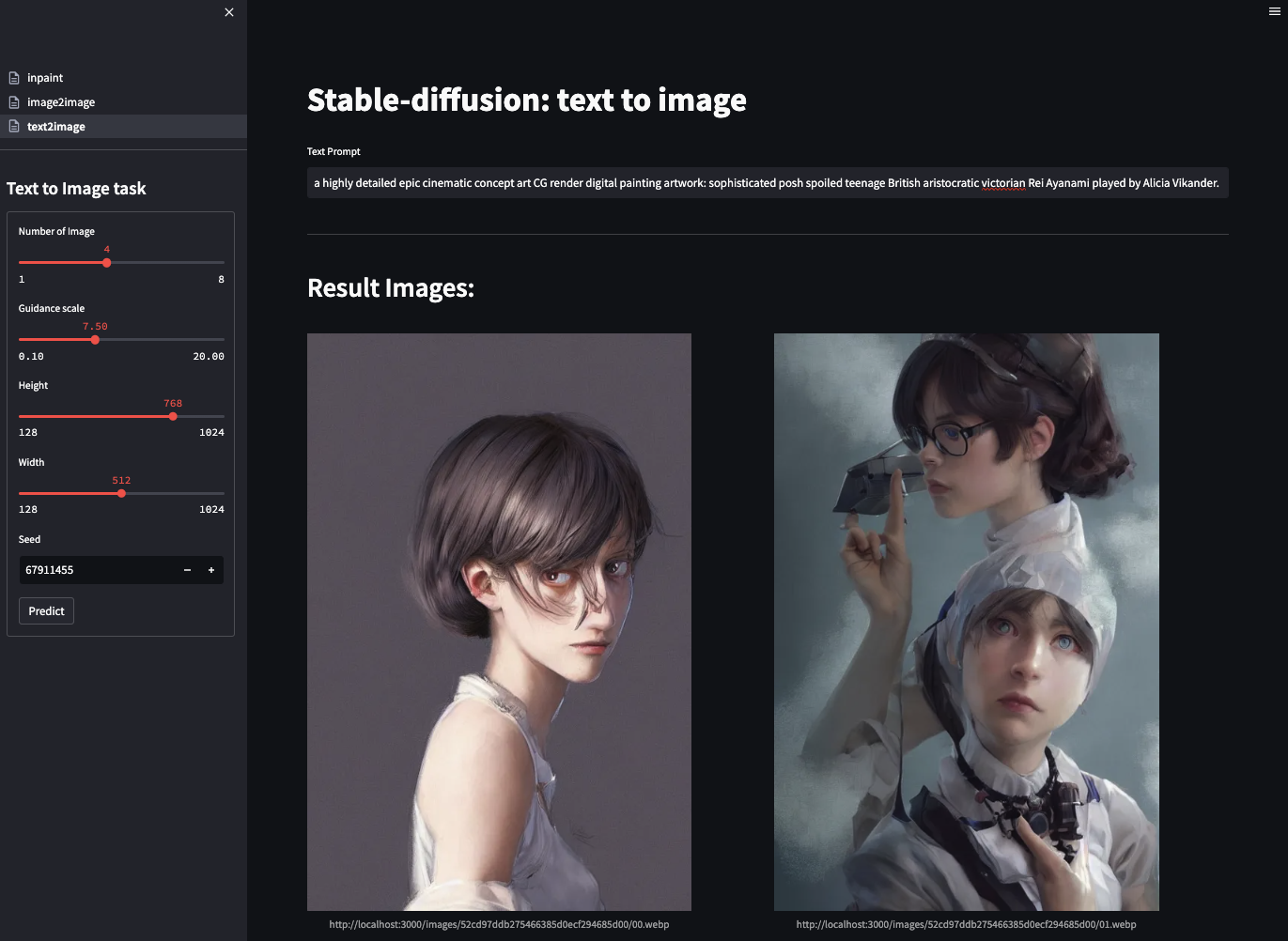

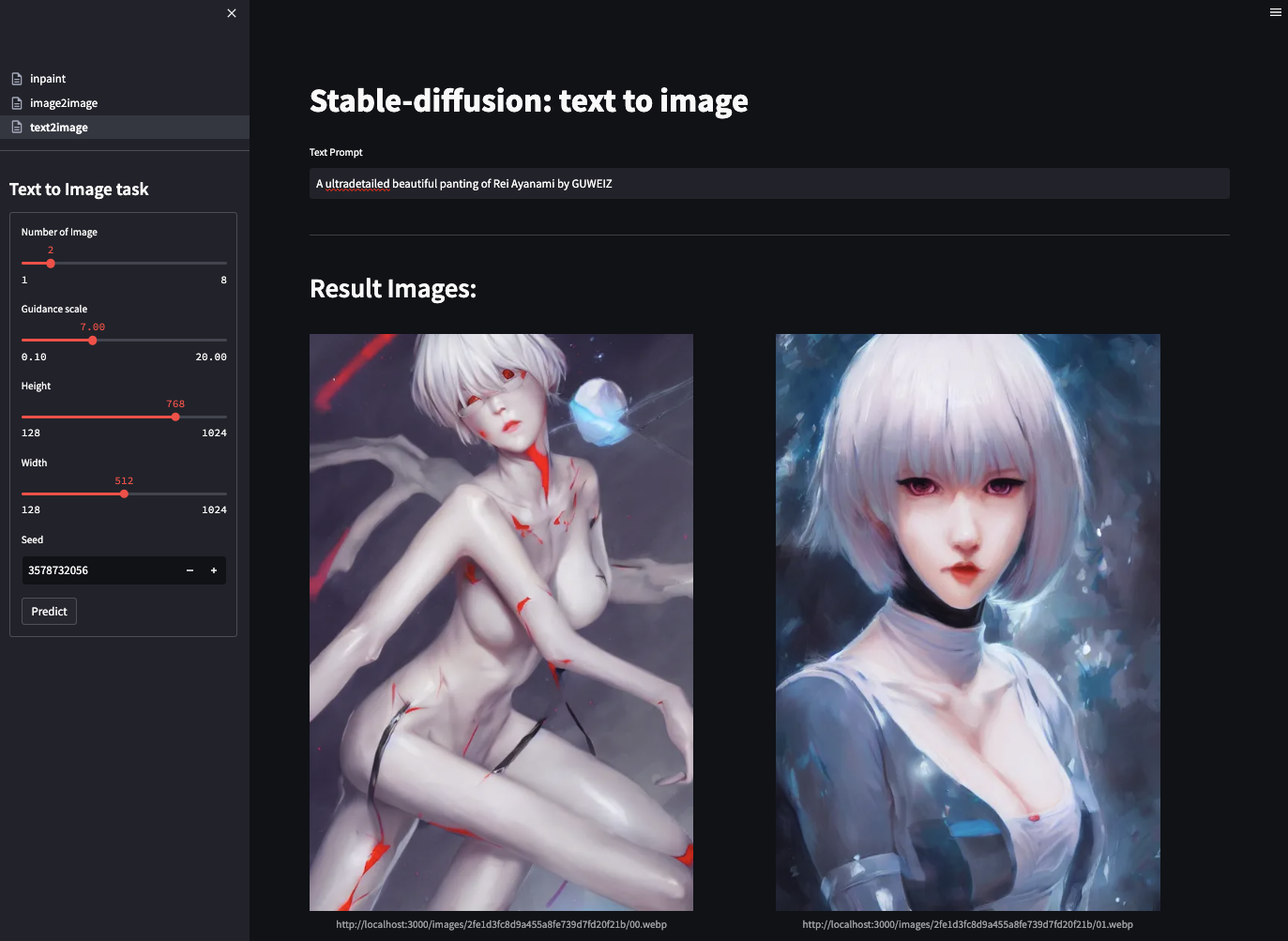

| Text2Image-01 | Text2Image-02 |

|---|---|

|

|

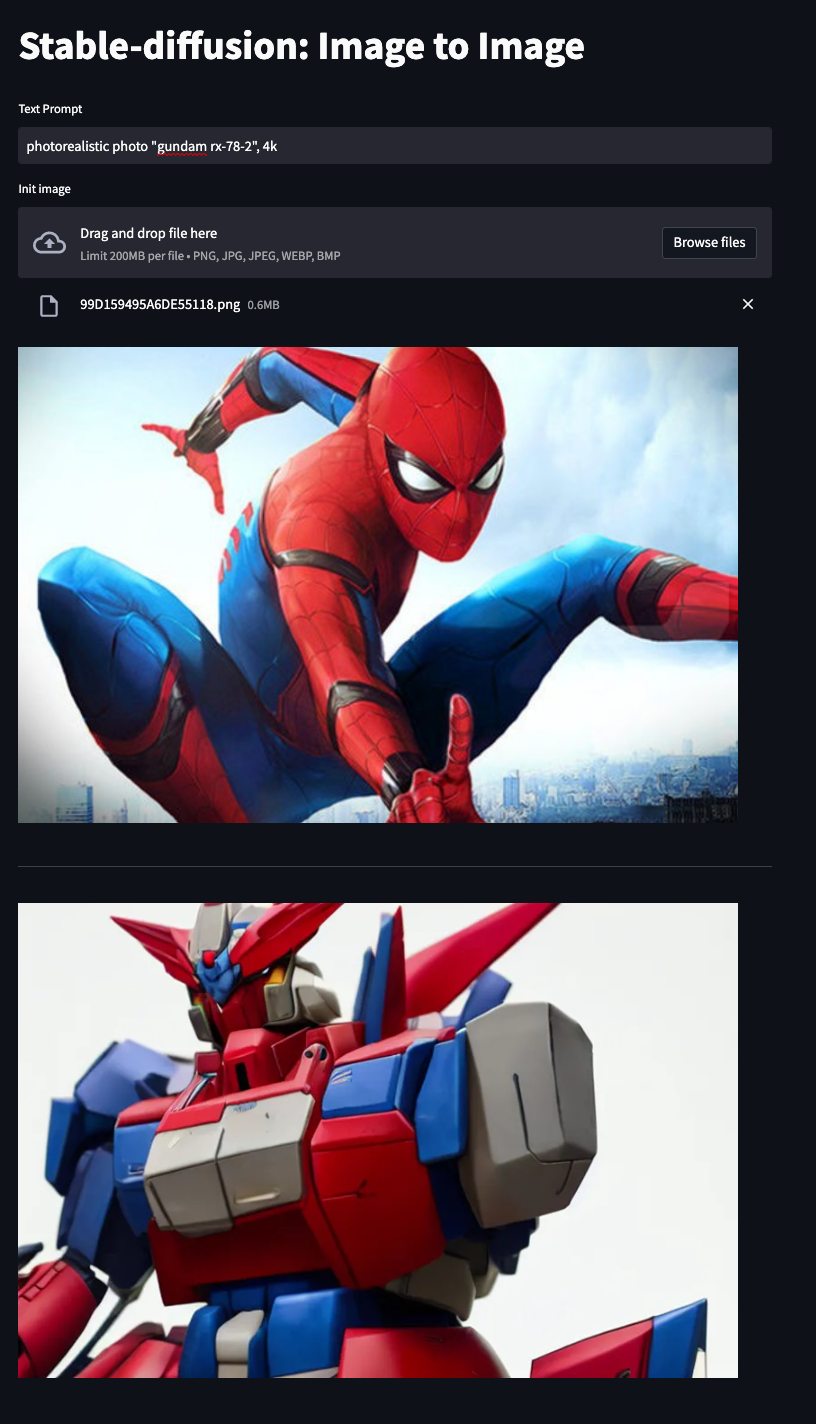

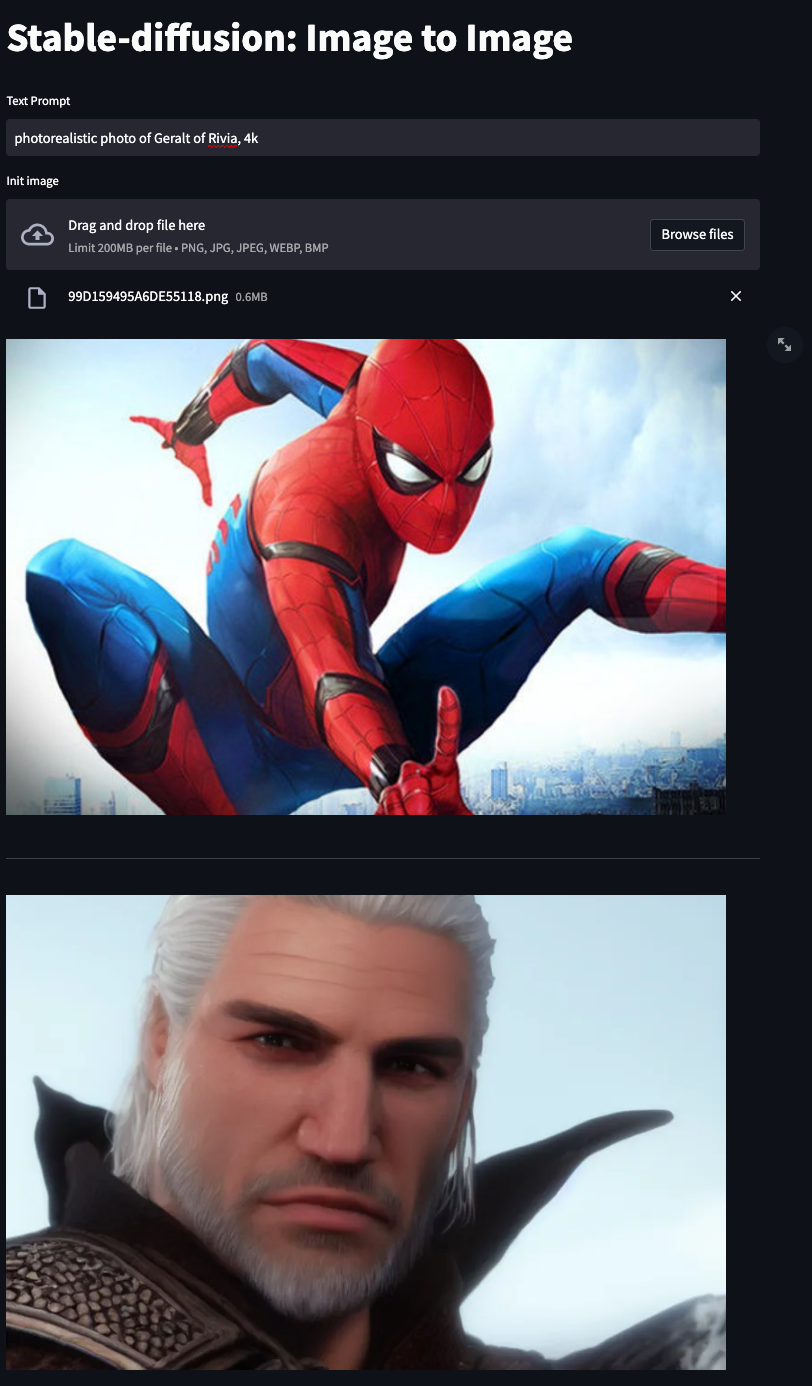

| Image2Image-01 | Image2Image-02 |

|

|

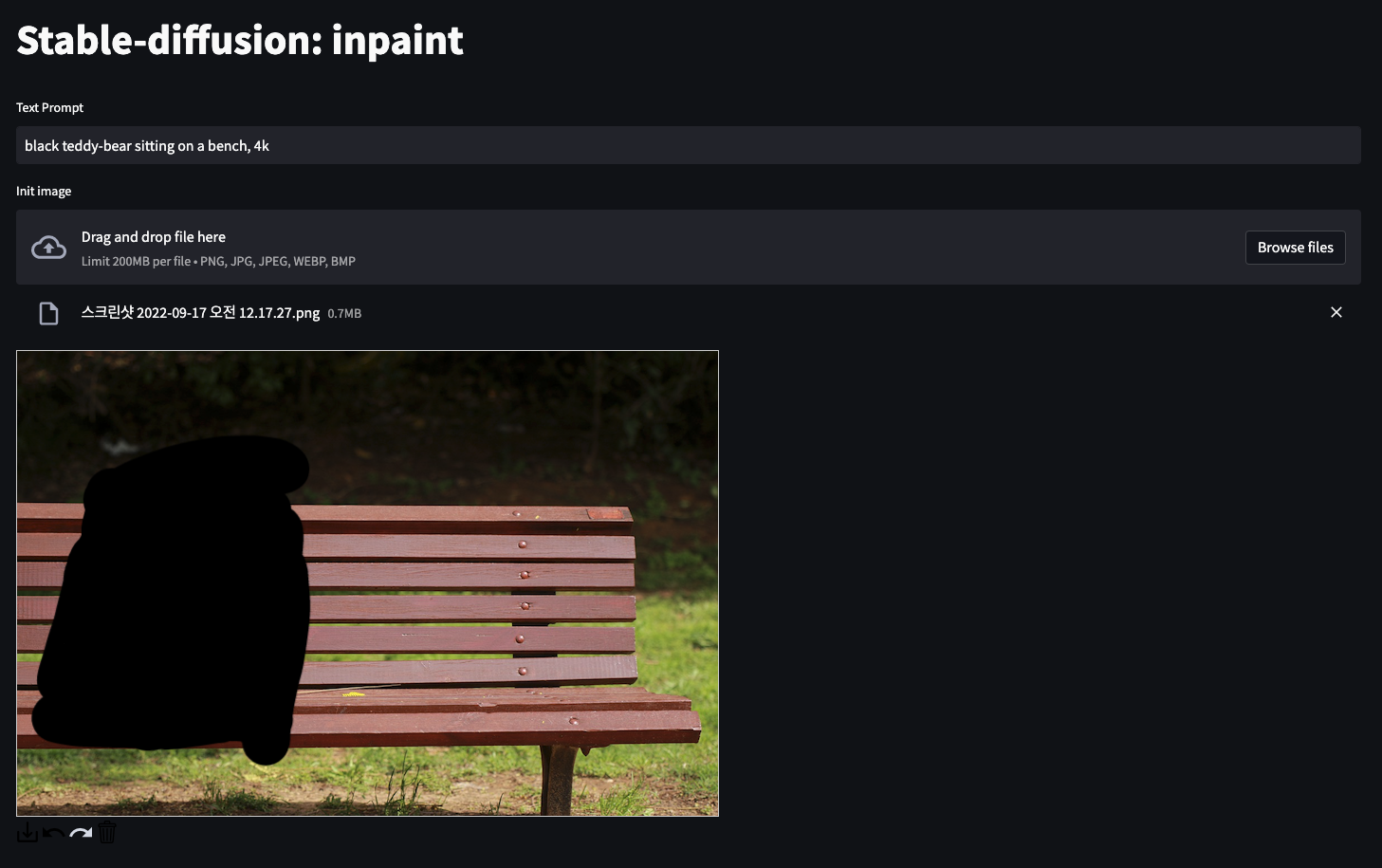

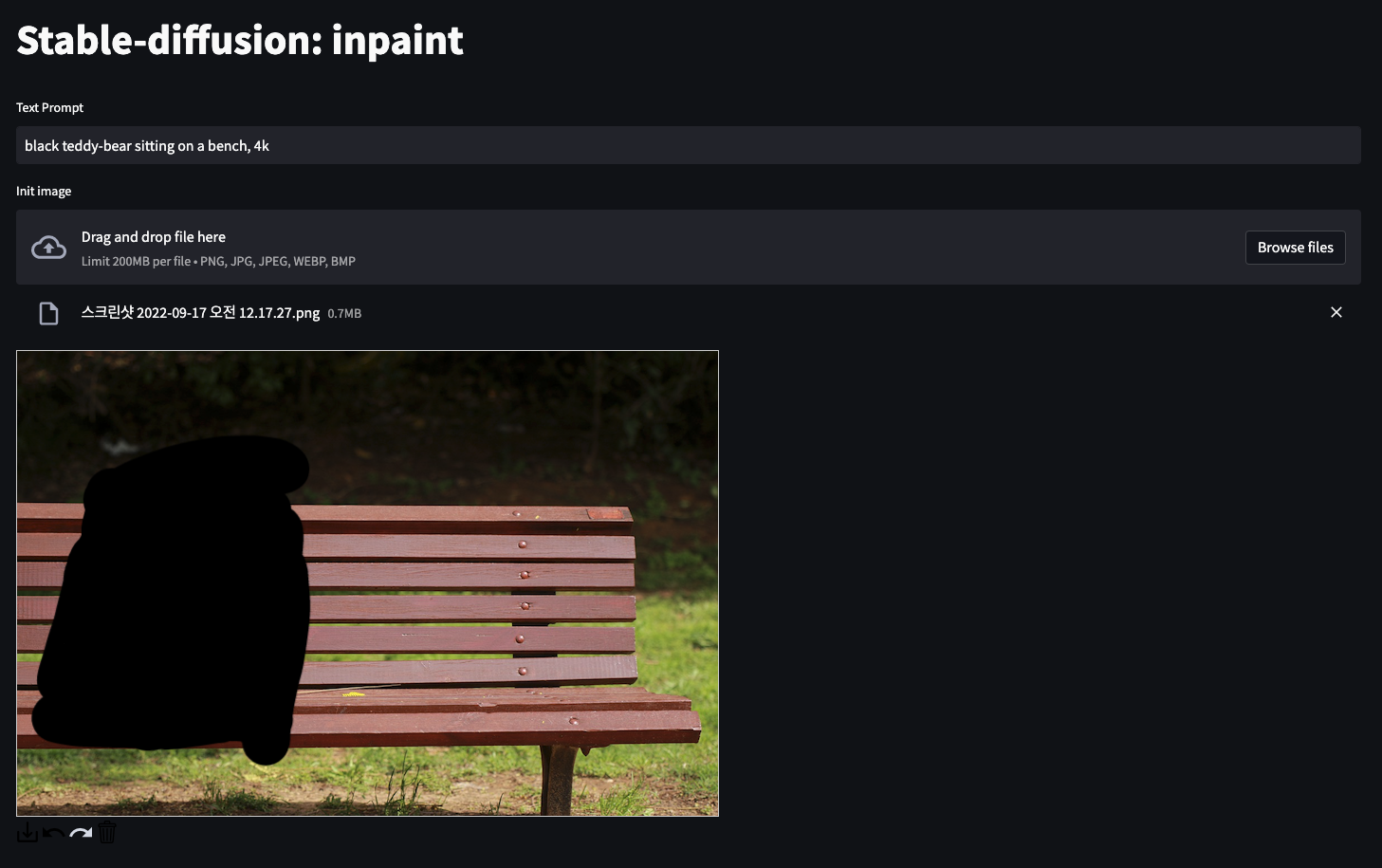

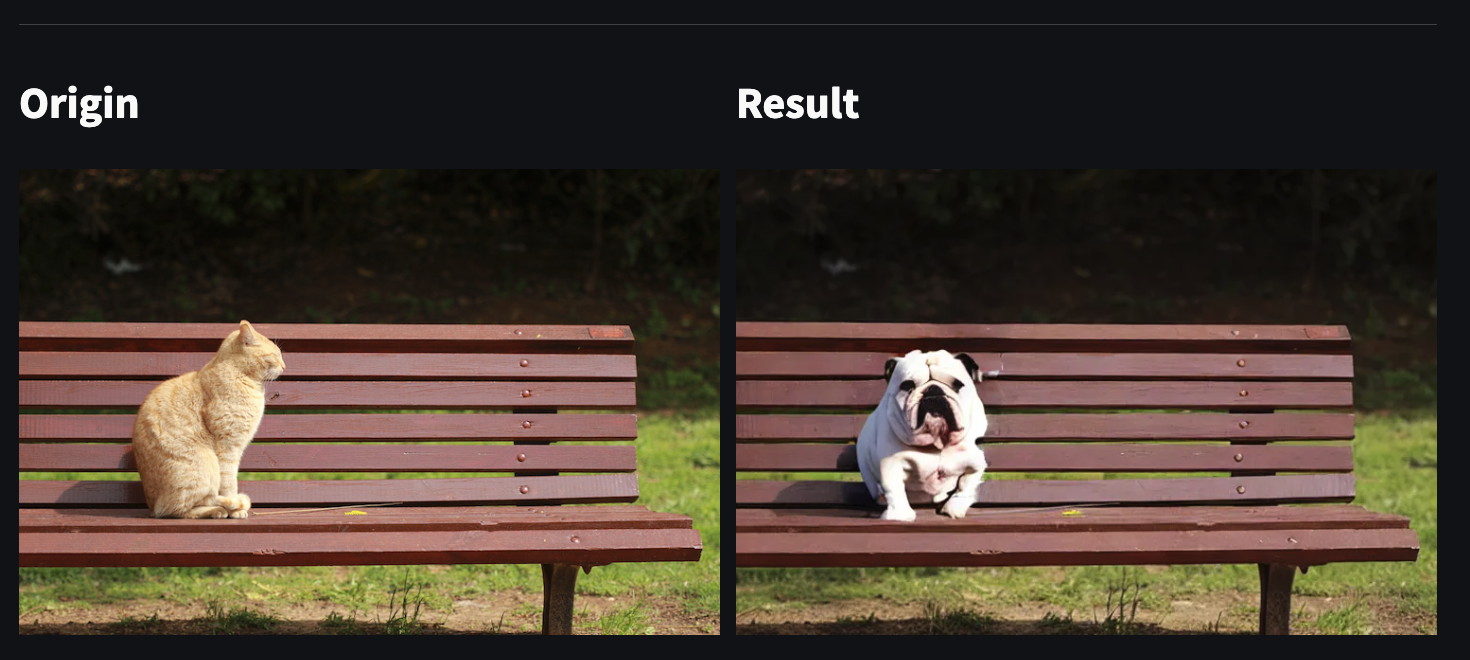

| Inpaint-01 | Inpaint-02 |

|

|

|

|

- long-prompt-weighting support

- text2image

- image2image

- inpainting

- negative-prompt

- celery async task (check celery_task branch)

- original ckpt format support

- object storage support

- stable-diffusion 2.0 support

- token size checker

- JAX/Flax pipeline

API requirements:

    fastapi[all]==0.80.0
    fastapi-restful==0.4.3
    fastapi-health==0.4.0
    service-streamer==0.1.2
    pydantic==1.9.2
    diffusers==0.3.0
    transformers==4.19.2
    scipy
    ftfy

Frontend requirements:

    streamlit==1.12.2
    requests==2.27.1
    requests-toolbelt==0.9.1
    pydantic==1.8.2
    streamlit-drawable-canvas==0.9.2

create image from input prompt

inputs:

- prompt(str): text prompt

- num_images(int): number of images

- guidance_scale(float): guidance scale for stable-diffusion

- height(int): image height

- width(int): image width

- seed(int): generator seed

outputs:

- prompt(str): input text prompt

- task_id(str): uuid4 hex string

- image_urls(str): URLs of the generated images
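The input and output fields listed above can be sketched as plain Python data structures. This is only an illustration, not the project's actual schema: the class names `Text2ImageRequest` and `Text2ImageResponse`, the helper `make_response`, the default values, and the URL layout are all assumptions. Only the field names and the "uuid4 hex string" task id come from the lists above. `image_urls` is modelled as a list of strings here, which is also an assumption (the list above gives its type as str).

```python
import uuid
from dataclasses import dataclass
from typing import List


@dataclass
class Text2ImageRequest:
    # Field names follow the "inputs" list; the defaults are assumed.
    prompt: str
    num_images: int = 1
    guidance_scale: float = 7.5
    height: int = 512
    width: int = 512
    seed: int = 42


@dataclass
class Text2ImageResponse:
    # Field names follow the "outputs" list.
    prompt: str
    task_id: str
    image_urls: List[str]


def make_response(request: Text2ImageRequest, base_url: str) -> Text2ImageResponse:
    """Build a response shaped like the documented outputs (no model is run)."""
    task_id = uuid.uuid4().hex  # uuid4 hex string, as documented
    # Hypothetical URL layout: one file per generated image under the task id.
    urls = [f"{base_url}/{task_id}/{i}.png" for i in range(request.num_images)]
    return Text2ImageResponse(prompt=request.prompt, task_id=task_id, image_urls=urls)
```

For example, `make_response(Text2ImageRequest(prompt="a cat", num_images=2), "http://localhost:3000/images")` returns a response with a 32-character `task_id` and two image URLs.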

create image from input image

inputs:

- prompt(str): text prompt

- init_image(imagefile): init image for i2i task

- num_images(int): number of images

- guidance_scale(float): guidance scale for stable-diffusion

- seed(int): generator seed

outputs:

- prompt(str): input text prompt

- task_id(str): uuid4 hex string

- image_urls(str): URLs of the generated images
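Because `init_image` is a file, a client would typically send this request as a multipart form upload. The sketch below assembles the form parts in the `(data, files)` shape accepted by `requests.post`. The helper name `build_image2image_form`, the default values, the upload filename and content type, and the choice to send the scalar inputs as form fields are assumptions; the endpoint URL is not given here and is left to the caller.

```python
from typing import Dict, Tuple

FilePart = Tuple[str, bytes, str]  # (filename, content, content type)


def build_image2image_form(
    prompt: str,
    init_image: bytes,
    num_images: int = 1,
    guidance_scale: float = 7.5,
    seed: int = 42,
) -> Tuple[Dict[str, str], Dict[str, FilePart]]:
    """Return (data, files) for a multipart/form-data upload."""
    if not init_image:
        raise ValueError("init_image must not be empty")
    # Scalar inputs are sent as strings, as form fields always are.
    data = {
        "prompt": prompt,
        "num_images": str(num_images),
        "guidance_scale": str(guidance_scale),
        "seed": str(seed),
    }
    # Assumed filename and content type for the uploaded image.
    files = {"init_image": ("init_image.png", init_image, "image/png")}
    return data, files


# Usage with requests (url is a placeholder for the image2image endpoint):
#   data, files = build_image2image_form("a dog", open("dog.png", "rb").read())
#   response = requests.post(url, data=data, files=files)
```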

Env settings are defined in ./core/settings/settings.py

| Name | Default | Desc |
|---|---|---|
| MODEL_ID | CompVis/stable-diffusion-v1-4 | huggingface repo id or model path |
| ENABLE_ATTENTION_SLICING | True | Enable sliced attention computation. |
| CUDA_DEVICE | "cuda" | target cuda device |
| CUDA_DEVICES | [0] | visible cuda device |
| MB_BATCH_SIZE | 1 | Micro Batch: MAX Batch size |
| MB_TIMEOUT | 120 | Micro Batch: timeout sec |
| HUGGINGFACE_TOKEN | None | huggingface access token |
| IMAGESERVER_URL | None | result image base url |
| SAVE_DIR | static | result image save dir |
| CORS_ALLOW_ORIGINS | [*] | cross origin resource sharing setting for FastAPI |
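To illustrate how the defaults in the table can be overridden from environment variables, here is a standard-library sketch. It is not the project's settings.py: the names `EnvSettings` and `load_settings`, the accepted boolean spellings, and the choice to parse list values as JSON are assumptions made for this example. The wildcard default of CORS_ALLOW_ORIGINS is written as `["*"]` here.

```python
import json
import os
from dataclasses import dataclass, field, fields
from typing import List, Mapping, Optional


def _as_bool(value: str) -> bool:
    # Assumed set of truthy spellings.
    return value.strip().lower() in {"1", "true", "yes", "on"}


@dataclass
class EnvSettings:
    # Defaults copied from the table above.
    MODEL_ID: str = "CompVis/stable-diffusion-v1-4"
    ENABLE_ATTENTION_SLICING: bool = True
    CUDA_DEVICE: str = "cuda"
    CUDA_DEVICES: List[int] = field(default_factory=lambda: [0])
    MB_BATCH_SIZE: int = 1
    MB_TIMEOUT: int = 120
    HUGGINGFACE_TOKEN: Optional[str] = None
    IMAGESERVER_URL: Optional[str] = None
    SAVE_DIR: str = "static"
    CORS_ALLOW_ORIGINS: List[str] = field(default_factory=lambda: ["*"])


# Per-variable parsers; anything not listed is kept as a plain string.
_PARSERS = {
    "ENABLE_ATTENTION_SLICING": _as_bool,
    "CUDA_DEVICES": json.loads,
    "MB_BATCH_SIZE": int,
    "MB_TIMEOUT": int,
    "CORS_ALLOW_ORIGINS": json.loads,
}


def load_settings(env: Mapping[str, str] = os.environ) -> EnvSettings:
    """Start from the defaults and override any variable present in env."""
    settings = EnvSettings()
    for f in fields(settings):
        if f.name in env:
            parse = _PARSERS.get(f.name, str)
            setattr(settings, f.name, parse(env[f.name]))
    return settings
```

Calling `load_settings({"MB_BATCH_SIZE": "4", "CUDA_DEVICES": "[0, 1]"})` keeps every other default and sets the micro batch size to 4 and the visible devices to `[0, 1]`.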

Install the requirements:

    pip install -r requirements.txt

Download the model:

    python huggingface_model_download.py

    # check stable-diffusion model in huggingface cache dir
    [[ -d ~/.cache/huggingface/diffusers/models--CompVis--stable-diffusion-v1-4 ]] && echo "exist"
    >> exist

Update the settings:

    # example
    class ModelSetting(BaseSettings):
        MODEL_ID: str = "CompVis/stable-diffusion-v1-4"  # huggingface repo id
        ENABLE_ATTENTION_SLICING: bool = True
        ...

    class Settings(
        ...
    ):
        HUGGINGFACE_TOKEN: str = "YOUR HUGGINGFACE ACCESS TOKEN"
        IMAGESERVER_URL: str = "http://localhost:3000/images"
        SAVE_DIR: str = 'static'
        ...

Run the API:

    bash docker/api/start.sh

Install the frontend requirements and run the frontend:

    pip install \
        streamlit==1.12.2 \
        requests==2.27.1 \
        requests-toolbelt==0.9.1 \
        pydantic==1.8.2 \
        streamlit-drawable-canvas==0.9.2

    streamlit run inpaint.py

Build with docker-compose:

    docker-compose build

Compose file (excerpt):

    version: "3.7"

    services:
      api:
        ...
        volumes:
          # mount huggingface model cache dir path to container root user home dir
          - /model:/model # if you load a pretrained model
          - ...
        environment:
          ...
          MODEL_ID: "CompVis/stable-diffusion-v1-4"
          HUGGINGFACE_TOKEN: {YOUR HUGGINGFACE ACCESS TOKEN}
          ...
        deploy:
          ...
      frontend:
        ...

Start the services:

    docker-compose up -d
    # or API only
    docker-compose up -d api
    # or frontend only
    docker-compose up -d frontend