Video submission for the lablab hackathon

Simple frontend demo

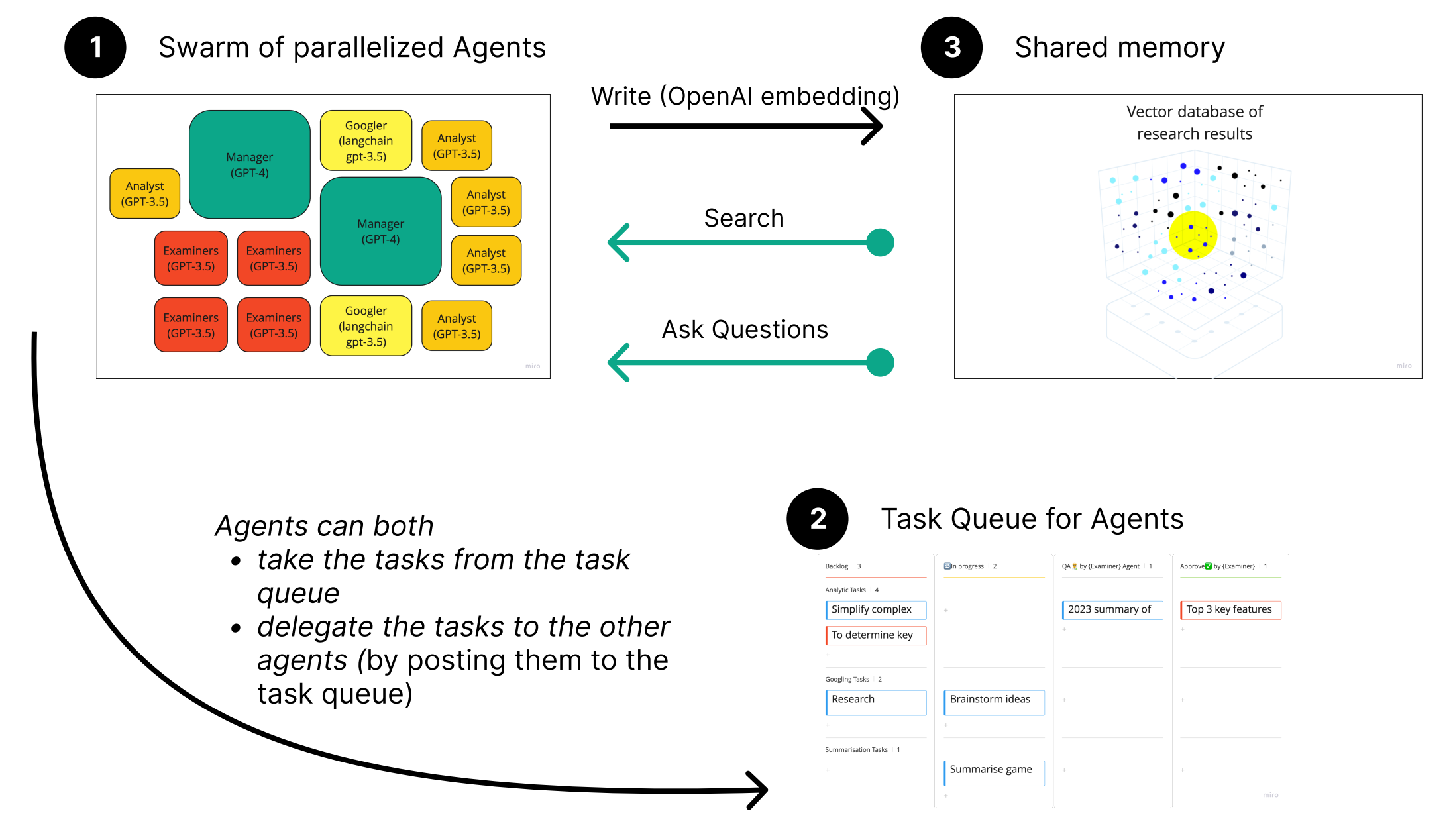

GPT-Swarm combines swarm intelligence with advanced language models to tackle complex tasks across diverse domains. The framework is designed to be robust, adaptive, and scalable, and it aims to outperform single models by leveraging collective problem-solving and distributed decision-making. It also performs research quickly.

GPT-Swarm is inspired by the principles of emergence. In nature, when simple agents are allowed to interact with each other, they show fundamentally new capabilities. Typical examples are beehives and ant colonies, or even countries and cultures.

You can add models with any capabilities to the swarm and have them work together.

By using shared vector-based memory and giving the swarm the ability to adjust itself and its behavior, we achieve adaptability similar to that of reinforcement learning, but without expensive retraining of the base models.
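The idea of a shared vector-based memory can be illustrated with a minimal sketch. This is not GPT-Swarm's actual implementation: the `SharedMemory` class, the `embed` function, and the bag-of-words "embedding" are all hypothetical stand-ins chosen so the example runs without any external service. A real setup would presumably use a learned embedding model and a vector database.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SharedMemory:
    """Hypothetical shared store that every agent can write to and query."""

    def __init__(self) -> None:
        self._entries: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        # Store the vector next to the original text.
        self._entries.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Rank stored texts by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = SharedMemory()
memory.add("competitor pricing starts at 10 dollars per month")  # written by one agent
memory.add("the target market is small design studios")          # written by another agent
top = memory.search("competitor pricing per month", k=1)          # read by a third agent
print(top)
```

Because every agent reads from and writes to the same store, findings from one agent become available to the others without any model being retrained.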

To our knowledge, Swarm is the only intelligence system to date that can effectively handle complex tasks such as performing market research or generating whole software solutions.

- Bees algorithm Wiki

- Multi agent intelligence Wiki

- Swarm intelligence Wiki

- Swarm Intelligence: From Natural to Artificial Systems, by Eric Bonabeau

- First, you need to create a `keys.json` file in the root folder. `GOOGLE_API_KEY` and `CUSTOM_SEARCH_ENGINE_ID` are needed for the models to be able to use Google search.

  ```json
  {
      "OPENAI_API_KEY": "sk-YoUrKey",
      "GOOGLE_API_KEY": "blablablaapiKey",
      "CUSTOM_SEARCH_ENGINE_ID": "12345678aa25"
  }
  ```

- Then you can specify the swarm configuration and tasks in `swarm_config.yaml`.

- After that, to start the swarm, run:

  ```bash
  # On Linux or Mac:
  ./run.sh start
  # On Windows:
  .\run.bat
  ```

- After some time the swarm will start producing a report and storing it in the run folder. By default, the output is in `./tmp/swarm/output.txt` and `./tmp/swarm/output.json`.

- If you are brave, you can go through the logs. Be careful, because the swarm produces an incredible amount of data very fast. You can find the logs in `./tmp/swarm/swarm.json`. You can also use `./tests/_explore_logs.ipynb` to digest the logs more easily.

- The shared memory in the run is persistent. You can ask this memory additional questions using `./tests/_task_to_vdb.ipynb`.
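Before starting the swarm, it can help to confirm that `keys.json` contains the entries from the first step. The following is a small sketch, not part of the project: the `missing_keys` helper is hypothetical, and treating all three entries as required is an assumption based on the example file above.

```python
import json
from pathlib import Path

# Assumption: all three entries shown in the example keys.json are required.
REQUIRED_KEYS = ("OPENAI_API_KEY", "GOOGLE_API_KEY", "CUSTOM_SEARCH_ENGINE_ID")


def missing_keys(path: str = "keys.json") -> list[str]:
    """Return the required key names that are absent or empty in the given JSON file."""
    file = Path(path)
    if not file.is_file():
        # No file at all: every key is missing.
        return list(REQUIRED_KEYS)
    data = json.loads(file.read_text(encoding="utf-8"))
    return [name for name in REQUIRED_KEYS if not data.get(name)]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print(f"Missing entries in keys.json: {missing}")
    else:
        print("keys.json looks complete")
```

Run it from the root folder, next to `keys.json`. It only checks that the entries exist and are non-empty; it does not verify that the keys are valid.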

- Make adding new models as easy as possible, including custom deployed ones like llama

- Multi-key support for higher scalability

Build Multi-Arch image:

```bash
docker buildx build --platform linux/amd64,linux/arm64 --tag gpt-swarm/gpt-swarm:0.0.0 .
```

- Follow the SOLID principles and don't break the abstractions

- Create bite-sized PRs