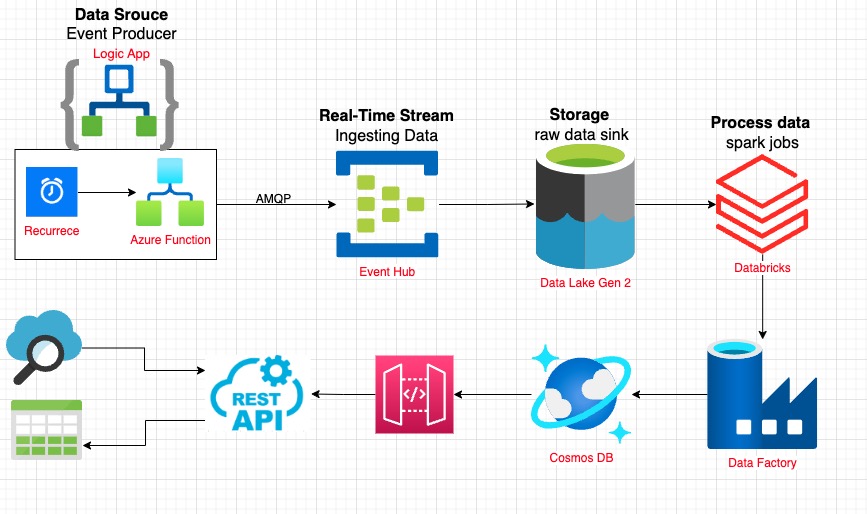

AS a Data Engineer, I WANT TO build a SERVERLESS infrastructure in Azure that simulates the behavior of a real-world big data system, SO THAT other team members (Data Scientists or Data Analysts) can access the processed data.

- MS Azure

- Terraform

- Dash Plotly (to do)

- Python

- SQL

Terraform is used to set up the following Azure infrastructure and resources:

- Data Lake

- Azure Functions

- Azure Data Factory

- Event Hub

- Logic App

- Databricks

- Cosmos DB

- API Management

- Azure Active Directory

- App Registration

This demo uses dummy data because its purpose is to simulate the behavior of a real-world data source, e.g., IoT data or web scraping.

Data Generator (local): a Python script that generates a dummy JSON object (a used-car sales record) every 10 seconds and saves it in the bronze data folder.
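A minimal sketch of the local generator in Python, assuming one JSON file per record. The field names (`car_id`, `make`, `model`, `year`, `mileage`, `price`, `date_listed`, `date_removed`), the `data/bronze` path and the helper names are hypothetical; only the 10-second interval, the used-car theme and the listed/removed dates come from this project.

```python
import json
import random
import time
import uuid
from datetime import date, timedelta
from pathlib import Path
from typing import Optional

# Hypothetical makes/models used to fill the dummy records.
MAKES = {
    "Toyota": ["Corolla", "Camry"],
    "Ford": ["Focus", "Fiesta"],
    "BMW": ["320i", "X3"],
}


def generate_record(rng: random.Random) -> dict:
    """Build one dummy used-car sales record (all field names are illustrative)."""
    make = rng.choice(sorted(MAKES))
    date_listed = date(2023, 1, 1) + timedelta(days=rng.randint(0, 180))
    date_removed = date_listed + timedelta(days=rng.randint(1, 90))
    return {
        "car_id": str(uuid.UUID(int=rng.getrandbits(128))),
        "make": make,
        "model": rng.choice(MAKES[make]),
        "year": rng.randint(2005, 2022),
        "mileage": rng.randint(5_000, 200_000),
        "price": round(rng.uniform(2_000, 40_000), 2),
        "date_listed": date_listed.isoformat(),
        "date_removed": date_removed.isoformat(),
    }


def write_record(record: dict, folder: Path) -> Path:
    """Save one record as its own JSON file in the bronze folder."""
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{record['car_id']}.json"
    path.write_text(json.dumps(record))
    return path


def run(folder: Path = Path("data/bronze"), interval_s: float = 10.0,
        count: Optional[int] = None) -> None:
    """Write one record every `interval_s` seconds; runs forever when count is None."""
    rng = random.Random()
    written = 0
    while count is None or written < count:
        write_record(generate_record(rng), folder)
        written += 1
        if count is None or written < count:
            time.sleep(interval_s)
```

Calling `run()` writes a record every 10 seconds until interrupted; the `count` and `interval_s` parameters make short test runs possible.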

Data Generator (Azure): a Python script that generates dummy data and is orchestrated by an Azure Logic App. It is a batch-processing solution: the data is generated at intervals defined by the Logic App's recurrence time.
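For the batch variant, each recurrence of the Logic App would trigger one run that emits a whole batch at once. The sketch below assumes the script is invoked once per recurrence (for example through an HTTP-triggered Azure Function, which is an assumption, not something stated above); the batch size, the `batch_id`/`generated_at` fields and the JSON Lines output are illustrative choices.

```python
import json
import random
import uuid
from datetime import date, datetime, timedelta, timezone
from typing import List, Optional


def generate_batch(batch_size: int, rng: random.Random,
                   generated_at: Optional[datetime] = None) -> List[dict]:
    """Produce all records for one recurrence run, tagged with a shared batch id."""
    generated_at = generated_at or datetime.now(timezone.utc)
    batch_id = str(uuid.UUID(int=rng.getrandbits(128)))
    batch = []
    for _ in range(batch_size):
        listed = date(2023, 1, 1) + timedelta(days=rng.randint(0, 180))
        batch.append({
            "batch_id": batch_id,
            "generated_at": generated_at.isoformat(),
            "price": round(rng.uniform(2_000, 40_000), 2),
            "date_listed": listed.isoformat(),
            "date_removed": (listed + timedelta(days=rng.randint(1, 90))).isoformat(),
        })
    return batch


def to_json_lines(batch: List[dict]) -> str:
    """Serialise a batch as newline-delimited JSON (one object per line)."""
    return "\n".join(json.dumps(record) for record in batch)
```

Tagging every record with the same `batch_id` makes it easy to trace which recurrence produced which rows once the data lands in the Data Lake.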

1. Install the Python libraries:

```bash
pip install azure-eventhub
pip install azure-identity
pip install aiohttp
```

2. Authentication

2.1. AAD (Azure Active Directory), recommended for real-world applications

Retrieve the resource ID of the Event Hubs namespace:

```bash
az eventhubs namespace show -g '<your-event-hub-resource-group>' -n '<your-event-hubs-namespace>' --query id
```

Assign the role:

```bash
az role assignment create --assignee "<user@domain>" \
    --role "Azure Event Hubs Data Owner" \
    --scope "<your-resource-id>"
```

2.2. Connection string: an easier way to establish and authorise the connection between Event Hubs and another application.
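Both authentication options can be sketched with the `azure-eventhub` async client installed in step 1. The function name and parameters are hypothetical; the namespace is assumed to be reachable as `<namespace>.servicebus.windows.net`, and the AAD path relies on `DefaultAzureCredential` picking up the identity that received the role above.

```python
import json
from typing import Iterable, List, Optional


def serialise(records: Iterable[dict]) -> List[str]:
    """Turn each record into the JSON string that becomes one event body."""
    return [json.dumps(record) for record in records]


async def send_records(records: Iterable[dict], eventhub_name: str,
                       fully_qualified_namespace: Optional[str] = None,
                       connection_string: Optional[str] = None) -> int:
    """Send records to Event Hubs in as few batches as possible; returns the event count."""
    # Imported lazily so this module loads even where the Azure SDK is not installed.
    from azure.eventhub import EventData
    from azure.eventhub.aio import EventHubProducerClient

    credential = None
    if connection_string:
        # Option 2.2: connection string.
        producer = EventHubProducerClient.from_connection_string(
            conn_str=connection_string, eventhub_name=eventhub_name)
    else:
        # Option 2.1: AAD; the identity needs the "Azure Event Hubs Data Owner" role.
        from azure.identity.aio import DefaultAzureCredential
        credential = DefaultAzureCredential()
        producer = EventHubProducerClient(
            fully_qualified_namespace=fully_qualified_namespace,
            eventhub_name=eventhub_name,
            credential=credential)

    sent = 0
    try:
        async with producer:
            batch = await producer.create_batch()
            pending = 0
            for body in serialise(records):
                try:
                    batch.add(EventData(body))
                except ValueError:
                    # The batch is full: send it and start a new one.
                    await producer.send_batch(batch)
                    sent += pending
                    batch = await producer.create_batch()
                    pending = 0
                    batch.add(EventData(body))
                pending += 1
            if pending:
                await producer.send_batch(batch)
                sent += pending
    finally:
        if credential is not None:
            await credential.close()
    return sent
```

Run it with `asyncio.run(send_records(records, "<your-event-hub-name>", fully_qualified_namespace="<your-namespace>.servicebus.windows.net"))`, or pass `connection_string=...` instead to use option 2.2.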

- Enable NFS 3.0 when creating the storage account.

- Access key: found in the specific Azure Data Lake storage account; this is the easier way to mount the Data Lake.

Databricks to Azure Data Lake

Databricks runs a Spark job to perform the `join` and `transformation` steps.

- Mount the Data Lake folder to Databricks: this exposes the Data Lake files through a mount point so that the Spark job can access them.

- Define schema: `StructType` defines the schema for the DataFrame, with each field specified using the `StructField` type. This keeps all columns in a fixed order and reduces the size of the data by redefining datatypes.

- Join job: read and join all JSON files saved in the Data Lake.

- Transformation: [Turnover] = [Date removed] - [Date listed]

- raw folder: hot tier, designed as the landing zone for all ingested data.

- silver folder: hot tier, designed as the processed zone for all processed data.

- archive folder: cold tier, storing the raw data that has already been processed by the Spark job. This reduces storage costs while still allowing us to access the JSON objects if needed.
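The tiering can be automated with an Azure Storage lifecycle management policy. Below is a sketch that generates such a policy in Python, assuming the folders live in a container whose name you pass in and using the Cool access tier to play the role of the "cold tier" (an assumption; the rule name and the one-day threshold are also illustrative).

```python
import json


def archive_tiering_policy(container: str, archive_prefix: str = "archive/",
                           days_after_modification: int = 1) -> dict:
    """Lifecycle policy: blobs under <container>/<archive_prefix> move to the Cool tier."""
    return {
        "rules": [
            {
                "enabled": True,
                "name": "archive-folder-to-cool",
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [f"{container}/{archive_prefix}"],
                    },
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {
                                "daysAfterModificationGreaterThan": days_after_modification
                            }
                        }
                    },
                },
            }
        ]
    }


def write_policy(path: str, container: str) -> None:
    """Write the policy to a JSON file that the Azure CLI can read."""
    with open(path, "w") as f:
        json.dump(archive_tiering_policy(container), f, indent=2)
```

The resulting file can be applied with `az storage account management-policy create --account-name <storage-account> --resource-group <resource-group> --policy @policy.json`. Because the rule filters on the `archive/` prefix, the raw and silver folders stay in the hot tier.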

Because Databricks storage doesn't support wildcard search, handling the file movement inside the Spark job is not ideal, so a Logic App manages the data flow instead.
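The project uses a Logic App for this step; purely to illustrate what that flow does, here is a Python equivalent using `azure-storage-file-datalake`. It assumes it runs after the Spark job has finished (so everything in `raw` is already processed), that the folders are named `raw` and `archive`, and that the caller's identity has write access; `archive_processed_files` and `archive_path` are hypothetical names.

```python
from typing import List


def archive_path(raw_path: str, raw_dir: str = "raw", archive_dir: str = "archive") -> str:
    """Map e.g. raw/<file>.json to archive/<file>.json (pure helper, easy to test)."""
    prefix = raw_dir.rstrip("/") + "/"
    if not raw_path.startswith(prefix):
        raise ValueError(f"{raw_path!r} is not under {raw_dir!r}")
    return archive_dir.rstrip("/") + "/" + raw_path[len(prefix):]


def archive_processed_files(account_url: str, file_system: str,
                            raw_dir: str = "raw", archive_dir: str = "archive") -> List[str]:
    """Move every JSON file directly under raw_dir into archive_dir; returns the new paths."""
    # Requires: pip install azure-storage-file-datalake azure-identity
    from azure.identity import DefaultAzureCredential
    from azure.storage.filedatalake import DataLakeServiceClient

    service = DataLakeServiceClient(account_url=account_url,
                                    credential=DefaultAzureCredential())
    fs = service.get_file_system_client(file_system)
    archive = fs.get_directory_client(archive_dir)
    if not archive.exists():
        archive.create_directory()

    moved = []
    # Listing the folder and filtering by suffix replaces a wildcard such as raw/*.json.
    for path in fs.get_paths(path=raw_dir, recursive=False):
        if path.is_directory or not path.name.endswith(".json"):
            continue
        target = archive_path(path.name, raw_dir, archive_dir)
        # rename_file expects the new name as "<file-system>/<path>".
        fs.get_file_client(path.name).rename_file(f"{file_system}/{target}")
        moved.append(target)
    return moved
```

Moving a file does not change its access tier, so the cold tier would still be applied separately, for example by a lifecycle management rule on the `archive/` prefix.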

- Create an Azure Cosmos DB account

- Use ADF or Airflow to load the silver data from the Data Lake.

- Check the schema.

- ...
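The schema check and load steps are planned with ADF or Airflow. As a stand-in for illustration only, the Python sketch below validates each silver record against a hypothetical set of fields and upserts the valid ones with the `azure-cosmos` SDK; the field list, the use of `car_id` as the Cosmos DB `id`, and the function names are assumptions.

```python
from typing import Iterable, List, Tuple

# Hypothetical field names/types expected in the silver data.
REQUIRED_FIELDS = {
    "car_id": str,
    "price": (int, float),
    "date_listed": str,
    "date_removed": str,
    "turnover_days": int,
}


def schema_errors(record: dict) -> List[str]:
    """Return a list of schema problems; an empty list means the record passes."""
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"{field} has type {type(record[field]).__name__}")
    return errors


def load_to_cosmos(records: Iterable[dict], url: str, key: str, database: str,
                   container: str) -> Tuple[int, List[Tuple[dict, List[str]]]]:
    """Upsert valid records into Cosmos DB; return (loaded count, rejected records with reasons)."""
    # Requires: pip install azure-cosmos
    from azure.cosmos import CosmosClient

    client = CosmosClient(url, credential=key)
    target = client.get_database_client(database).get_container_client(container)
    loaded, rejected = 0, []
    for record in records:
        errors = schema_errors(record)
        if errors:
            rejected.append((record, errors))
            continue
        # Every Cosmos DB item needs an "id"; reusing car_id is an assumption.
        target.upsert_item({**record, "id": record["car_id"]})
        loaded += 1
    return loaded, rejected
```

Returning the rejected records with their reasons, rather than failing the whole load, keeps one malformed file from blocking the rest.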

- Data lifecycle management makes it possible to move data between the hot and cold tiers.

- Create a Secret Scope at https://mydatabricks-instance#secrets/createScope (replace `mydatabricks-instance` with your instance name). A Secret Scope in Databricks keeps the credentials from being exposed when mounting Azure Data Lake.
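Once the scope exists, the access key can be read from it at mount time instead of being pasted into the notebook. Below is a minimal sketch, assuming a secret scope and key name of your choosing (hypothetical here) and the `wasbs://` Blob endpoint with an account-key configuration. `dbutils` is passed in as an argument (inside a Databricks notebook it is available globally) so the function can be exercised without a cluster.

```python
def mount_data_lake(dbutils, storage_account: str, container: str,
                    mount_point: str, scope: str, key_name: str) -> bool:
    """Mount a container with an access key read from a Databricks secret scope.

    Returns False if mount_point is already mounted, True after a new mount.
    """
    # Skip if already mounted, so the notebook cell can be re-run safely.
    if any(m.mountPoint == mount_point for m in dbutils.fs.mounts()):
        return False
    # The key comes from the secret scope, so it never appears in the notebook.
    account_key = dbutils.secrets.get(scope=scope, key=key_name)
    dbutils.fs.mount(
        source=f"wasbs://{container}@{storage_account}.blob.core.windows.net",
        mount_point=mount_point,
        extra_configs={
            f"fs.azure.account.key.{storage_account}.blob.core.windows.net": account_key
        },
    )
    return True
```

In a notebook: `mount_data_lake(dbutils, "<storage-account>", "<container>", "/mnt/datalake", "<scope-name>", "<key-name>")`.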